Greetings! I am Lleu Yang, a GSoC 2024 student and the future contributor of the Sample Sound node.

This summer, I plan to add a Sample Sound node that retrieves audio from sound files and provides their frequency response over time for use in Geometry Nodes. The full proposal can be viewed here.

There is also a dedicated feedback thread. Please feel free to share your opinions on this project!

I will post my reports of work here regularly once the coding period has begun.


Community Bonding Period & Week 1

In this period, I:

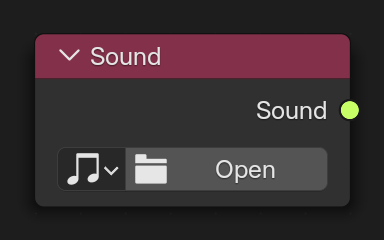

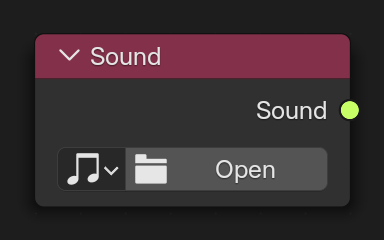

- Implemented the Sound socket (a prerequisite for all future work) and the Sound Input node,

- Created a pull request containing all work possibly related to this project,

- Learned the basic usage of the Audaspace APIs by reading BKE_sound.c and Audaspace Device’s implementation, and

- Discussed with my mentor how to implement the actual Sample Sound node.

Most of the work mentioned above was finished during the Community Bonding Period. I did not contribute much in the first week, as I was busy with school assignments. Thankfully, I am much more available from now on.

In the next week, I plan to:

- Create a naïve Sample Sound node with access to the time domain, by utilizing aud::ReadDevice, and

- Get familiar with aud::FFTPlan, and try to provide initial support (not worrying about caching at this time) for getting frequency-domain information in the node.

Always remember to use ASan, unless you want to ruin your afternoon by accidentally overwriting your handles with 7,350 floating-point audio samples.

Week 2

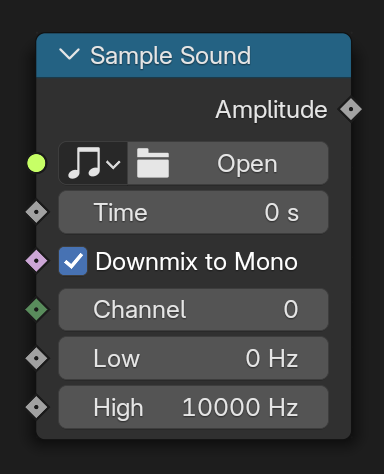

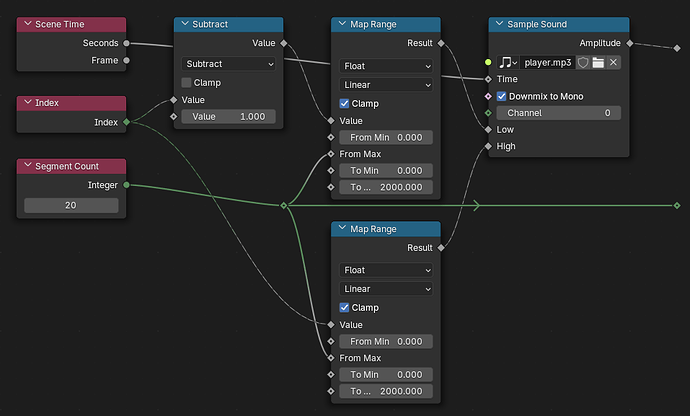

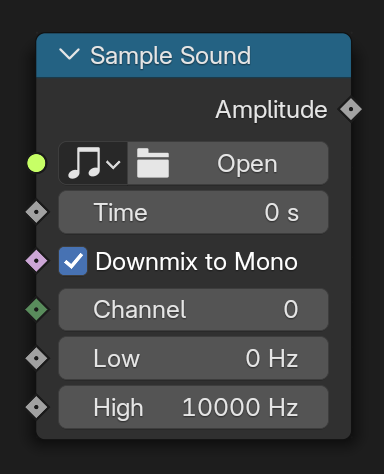

This week, I implemented the naïve node with the ability to get amplitude from a Sound, as shown below:

The project file is attached in a comment from PR #122228. The audio is made by myself and licensed under CC0.

At this moment, known issues are:

- The current temporal smoothing method is rather simple, inflexible, and even inefficient; it needs to be replaced by a faster, more widely accepted algorithm,

- While easy to use, aud::FFTPlan has no external binding for Blender, which means either modifying the Audaspace code base or tweaking the build system is needed, and

- There are lots of TODOs in the current code, which I expect to clean up over time.

In the next week, I plan to:

- Discuss with my mentor how to integrate aud::FFTPlan into Blender itself, and provide a basic ability to get frequency information from a Sound.

- Learn more about the properties of sound (intensity, pressure, etc.) and provide better temporal smoothing algorithms. The previous Sound Spectrum node should be a great reference.

The reason the previous smoothing algorithm didn’t work (while it should have), and how I solved it:

- const bool frame_rate)

+ const double frame_rate)

Uh-oh.
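To spell out why that one-word change matters, here is a minimal sketch. The helper names and the numbers are hypothetical and not taken from the PR; the point is only that a `bool` parameter silently turns any nonzero frame rate into `true`, which then behaves as 1 in arithmetic.

```cpp
#include <cassert>

// Hypothetical helper: seconds elapsed at a given animation frame.
// With a `bool` parameter, any nonzero frame rate collapses to `true` (1),
// so the function effectively returns the frame number itself.
double frame_to_seconds_buggy(int frame, const bool frame_rate)
{
  return frame / static_cast<double>(frame_rate);
}

// Same helper with the parameter type corrected to `double`.
double frame_to_seconds_fixed(int frame, const double frame_rate)
{
  assert(frame_rate > 0.0);
  return frame / frame_rate;
}
```

With a frame rate of 24, frame 48 comes out as 48 seconds in the first version and 2 seconds in the second. The implicit conversion compiles without an error, which is likely why this kind of mistake is easy to miss.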

Week 3

In this week, I:

- Added basic FFT functionality to the node, and

- Implemented a very simple (and incomplete) caching mechanism.

The project file is attached in a comment from my working PR. The packed audio is made by myself and licensed under CC0.

Known issues, i.e. FIXME’s:

- The cache is not thread-safe for now. Although one can hardly make it crash in typical usage (as shown in the video), it may be unable to handle heavy workloads.

- Floating-point arithmetic errors can cause the sample position (time) to jump back and forth between different frames, causing jitter in the animation.

- An assertion fails when a new sound is specified while the current scene is still playing back.
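As an illustration of the floating-point issue in the list above, one common way to avoid such jitter is to keep the frame-to-sample conversion in integer arithmetic. The sketch below is only an assumption about how this could be done, not what the PR does; `frame_to_sample` and its rational frame-rate parameters are hypothetical.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical helper: index of the first audio sample of an animation frame.
// The frame rate is passed as a rational number (fps_num / fps_den), so that
// 29.97 fps can be expressed exactly as 30000 / 1001.
int64_t frame_to_sample(int64_t frame, int64_t sample_rate, int64_t fps_num, int64_t fps_den)
{
  assert(frame >= 0 && sample_rate > 0 && fps_num > 0 && fps_den > 0);
  // Integer division truncates; for non-negative inputs the result never
  // decreases as `frame` grows, so the position cannot jump backwards.
  return frame * sample_rate * fps_den / fps_num;
}
```

For example, frame 24 at 24 fps and 48 kHz maps to sample 48000 exactly, with no rounding step in between.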

In the next week, I plan to:

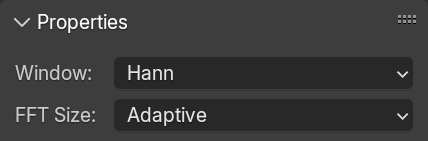

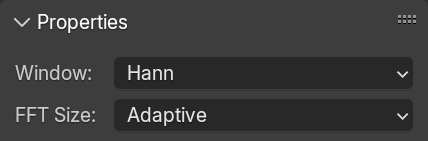

- Support various window functions for FFT,

- Support EMA for smoother attack/release, and

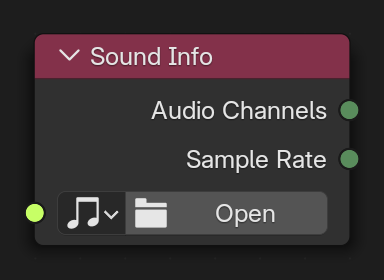

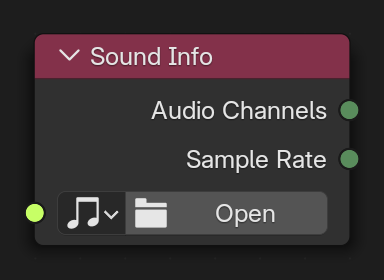

- Add a Sound Info node for conveniently getting important information (e.g. channel count and sample rate).
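For readers unfamiliar with the EMA (exponential moving average) mentioned in the plan above, here is a minimal sketch of a smoother with separate attack and release factors. The struct, its field names, and the default values are hypothetical; the node's eventual smoothing may work differently.

```cpp
#include <cassert>

// Hypothetical one-pole smoother with separate attack and release factors.
// Both factors are in (0, 1]; 1 means "follow the input immediately".
struct EmaSmoother {
  float attack = 0.5f;
  float release = 0.1f;
  float value = 0.0f;

  // Moves `value` a fraction of the way towards `input` and returns it.
  // Rising input uses `attack`, falling input uses `release`.
  float step(float input)
  {
    assert(attack > 0.0f && attack <= 1.0f);
    assert(release > 0.0f && release <= 1.0f);
    const float factor = (input > value) ? attack : release;
    value += factor * (input - value);
    return value;
  }
};
```

A large attack with a small release makes the output jump up quickly on a beat and decay slowly afterwards, which is the usual reason for treating the two directions separately.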


Week 4

In this week, I:

- Added support for custom smoothness (sample length) in the node,

- Added support for applying window functions when doing FFT, and

- Rewrote the LRU cache using Blender’s BLI libraries.
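To make the window-function item above concrete, here is a minimal sketch of a Hann window applied to a block of samples before an FFT. The function names are hypothetical, and the post does not say which window functions the node offers; Hann is used here only because it is a common default.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Returns the coefficients of a periodic Hann window of length `size`.
std::vector<float> hann_window(int size)
{
  assert(size > 0);
  const double two_pi = 6.283185307179586;
  std::vector<float> window(size);
  for (int i = 0; i < size; i++) {
    window[i] = static_cast<float>(0.5 - 0.5 * std::cos(two_pi * i / size));
  }
  return window;
}

// Multiplies a block of samples by the window in place, before the FFT.
void apply_window(std::vector<float> &samples, const std::vector<float> &window)
{
  assert(samples.size() == window.size());
  for (std::size_t i = 0; i < samples.size(); i++) {
    samples[i] *= window[i];
  }
}
```

The window tapers the block towards zero at its start, which reduces the spectral leakage caused by cutting the signal at arbitrary points.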

The project file is attached in a comment from my working PR. The packed audio is made by myself and licensed under CC0.

Known issues:

- Overall amplitude (of FFT results) varies significantly with smoothness. This issue might be related to some properties of the FFT itself and needs further research.

- fftwf_execute() fails to plan when multiple layers containing this node are present.

I will be inactive next week, since I have to prepare for the final exam (which ends on July 5) at school. However, the next steps could be:

- Carefully design the previously mentioned Sound Info node to get more than just the sample rate and channel count from a Sound,
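On the amplitude issue, one possible contributor (an assumption, not a diagnosis of the actual bug) is that an unnormalized DFT bin grows with the number of input samples: a full-scale sine gives a magnitude of N/2 for a block of N samples. The sketch below uses a direct single-bin DFT with hypothetical helper names to show the effect and one way to scale it out.

```cpp
#include <cassert>
#include <cmath>
#include <complex>
#include <vector>

// Magnitude of a single DFT bin, computed directly in O(N).
// Hypothetical helper for illustration only; a real node would use an FFT.
double bin_magnitude(const std::vector<double> &samples, int bin)
{
  const double two_pi = 6.283185307179586;
  const int n = static_cast<int>(samples.size());
  std::complex<double> sum(0.0, 0.0);
  for (int i = 0; i < n; i++) {
    sum += samples[i] * std::polar(1.0, -two_pi * bin * i / n);
  }
  return std::abs(sum);
}

// Same magnitude divided by N/2, so a full-scale sine reads 1.0 for any
// block size (valid for bins other than 0 and N/2, with no window applied).
double normalized_bin_magnitude(const std::vector<double> &samples, int bin)
{
  assert(!samples.empty());
  return bin_magnitude(samples, bin) / (samples.size() / 2.0);
}
```

If a window function is applied, the divisor would need to account for the window's gain as well, which this sketch leaves out.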

- Try to eliminate some of the crash scenarios, and

- Prepare for the mid-term evaluation of GSoC.


Week 5

As mentioned before, I have been preparing for the upcoming exam at my school, so no new code was written this week.

However, based on the last code review from my mentor, a proper caching strategy for FFT has been found. Once I finish the exam, I will immediately return to coding and implement the idea.

Week 6

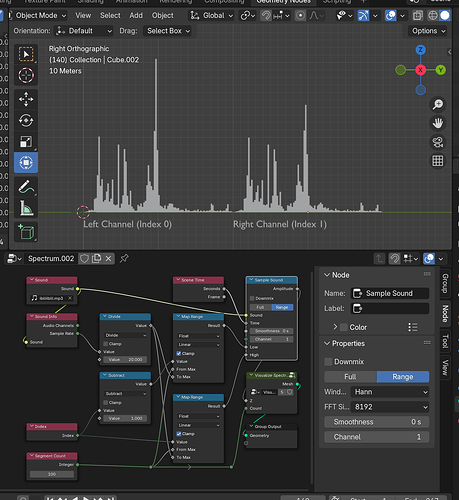

This week, I redesigned the whole caching structure (throwing LRU away and keeping it simple) following my mentor’s guidance. It lets users choose the FFT size themselves, and is much more efficient than the previous solution. The difference between different FFT sizes is shown below (no smoothing is applied):

The project file is attached in a comment from my working PR. The packed audio is made by myself and licensed under CC0.
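The post does not describe the new caching structure in detail, so the following is only a sketch of one simple, non-LRU approach under stated assumptions: with a fixed FFT size, every sample position falls into a block, and each block's spectrum is computed at most once. The class name, its interface, and the callback are all hypothetical, and the sketch is not thread-safe.

```cpp
#include <cassert>
#include <cstdint>
#include <functional>
#include <unordered_map>
#include <utility>
#include <vector>

// Hypothetical cache keyed by block index (sample index / FFT size).
class SpectrumCache {
 public:
  using Spectrum = std::vector<float>;
  using ComputeFn = std::function<Spectrum(int64_t block_index)>;

  SpectrumCache(int fft_size, ComputeFn compute)
      : fft_size_(fft_size), compute_(std::move(compute))
  {
    assert(fft_size > 0);
  }

  // Returns the spectrum of the block containing `sample_index`,
  // computing and storing it on first access.
  const Spectrum &lookup(int64_t sample_index)
  {
    assert(sample_index >= 0);
    const int64_t block_index = sample_index / fft_size_;
    auto it = blocks_.find(block_index);
    if (it == blocks_.end()) {
      it = blocks_.emplace(block_index, compute_(block_index)).first;
    }
    return it->second;
  }

 private:
  int fft_size_;
  ComputeFn compute_;
  std::unordered_map<int64_t, Spectrum> blocks_;
};
```

Because nothing is ever evicted, memory grows with the number of distinct blocks visited; whether that trade-off matches the real implementation is not stated in the post.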

Due to the exam, progress this week was still a bit slow. The node with this new caching structure currently lacks temporal smoothing support. Thankfully, the solution to the hardest part has become clear, and the remaining steps (new features and improvements) should be fairly simple and straightforward.

Week 7

Glad that I passed the midterm evaluation!

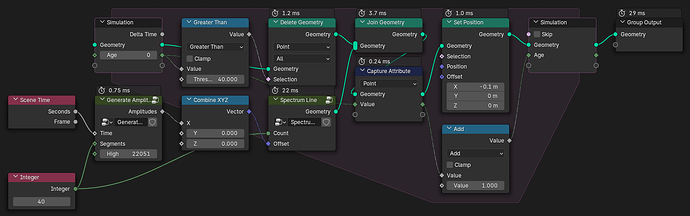

This week, I mainly focused on fixing issues mentioned in last week’s code review. Outcomes include a caching system that doesn’t involve too many manual memory management operations, a simplified yet more intuitive user interface (still inspired by Sound Spectrum), some refactoring, and bug fixes.

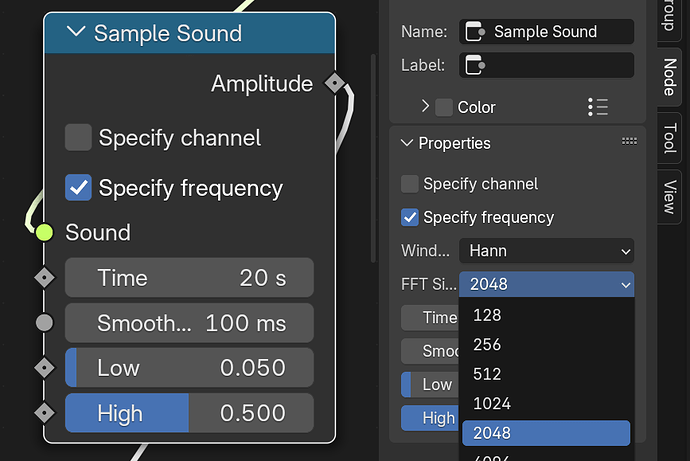

The screenshot above shows the new design of the node, which replaces the absolute frequency value with relative factors, adds units to parameters, and moves rarely used settings into the sidebar.

After the last code review, more of the design goals have been clarified. Hopefully I will finish the caching system and be able to continue adding new features in the coming week.

Week 8

In this week, I:

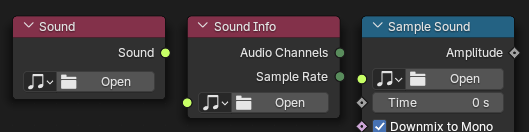

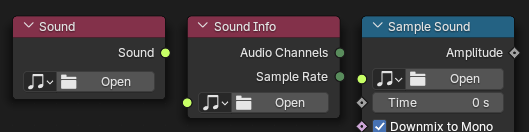

- Implemented a Sound Info node for getting important information from a Sound, and

- Made the Sample Sound node able to sample a specific channel of a given Sound.

One of the possible use cases of the node is shown below:

Note that after last week’s discussion with my mentor, we’re back to using absolute frequency, combined with a Map Range node to achieve an experience similar to relative frequency while keeping the node’s parameters as obvious as possible. This technique will later be included in the user manual.

As of now, nearly all primary deliverables of the node have been finished. The next steps will be design refinement, bug fixes, optimizations, and documentation.
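The relationship between an absolute frequency and an FFT bin, and the kind of linear remap a Map Range node performs, can be sketched as follows. Both function names are hypothetical and the node's internal lookup may differ; the bin formula itself (frequency times FFT size divided by sample rate) is the standard one.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical helper: nearest FFT bin for an absolute frequency in hertz.
int frequency_to_bin(double frequency_hz, double sample_rate, int fft_size)
{
  assert(sample_rate > 0.0 && fft_size > 0);
  assert(frequency_hz >= 0.0 && frequency_hz <= sample_rate / 2.0);
  return static_cast<int>(std::lround(frequency_hz * fft_size / sample_rate));
}

// Linear remap in the spirit of a Map Range node: a factor in [0, 1]
// becomes a frequency in [low_hz, high_hz].
double factor_to_frequency(double factor, double low_hz, double high_hz)
{
  return low_hz + factor * (high_hz - low_hz);
}
```

For instance, a factor of 0.5 over the range 20 Hz to 20000 Hz gives 10010 Hz, which at a 48 kHz sample rate and an FFT size of 4096 lands on bin 854.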

Week 9

This week mainly involved code-level changes (refactoring, bug fixes, etc.) with no functional changes to the node, since I was a little busy with personal tasks.

Nevertheless, a video meeting (for code review) with my mentor will be scheduled in the coming week. We should be able to go over everything done so far, check for remaining tasks, and plan the next steps.

Week 10

Here is the meeting note for last week’s video session: Sample Sound Meeting - HackMD. Our next steps would be based on this to-do list.

This week, I primarily focused on making the code APIs cleaner and more intuitive. It was not until this week that I learned Blender already had the functionality to bake sound amplitudes (time-domain information) into F-Curves, so we decided to limit this node to solely computing frequency information from a Sound, in order to keep it as simple (atomic) as possible.

The next steps will be ticking off every item on the list in the meeting note. Progress was still a bit slow this week, but thankfully I’ll be able to fully devote myself again for the rest of the time.

Week 11

In this week, I:

- Added interpolation between FFT results (which makes samples smoother, especially when using large FFT sizes),

- Added an “Adaptive” option for FFT size, which automatically decides the best FFT size to use depending on the scene frame rate, and

- Changed “Downmix to Mono” to a Boolean socket.
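The first two items in the list above can be sketched as follows. Both functions are hypothetical: the post does not say which interpolation is used or how the “Adaptive” option picks a size, so linear blending and a power-of-two rule based on samples per frame are assumptions made for illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Linearly blends two neighboring spectra; `t` in [0, 1] is the relative
// position of the sample time between the two FFT blocks.
std::vector<float> interpolate_spectra(const std::vector<float> &a,
                                       const std::vector<float> &b,
                                       float t)
{
  assert(a.size() == b.size());
  assert(t >= 0.0f && t <= 1.0f);
  std::vector<float> result(a.size());
  for (std::size_t i = 0; i < a.size(); i++) {
    result[i] = (1.0f - t) * a[i] + t * b[i];
  }
  return result;
}

// Hypothetical "adaptive" rule: the largest power of two that does not
// exceed the number of audio samples in one animation frame (capped at 2^20).
int adaptive_fft_size(double sample_rate, double frame_rate)
{
  assert(sample_rate > 0.0 && frame_rate > 0.0);
  const double samples_per_frame = sample_rate / frame_rate;
  int size = 1;
  while (size < (1 << 20) && size * 2 <= samples_per_frame) {
    size *= 2;
  }
  return size;
}
```

Under this rule, 48 kHz audio at 24 fps (2000 samples per frame) would use an FFT size of 1024. With large FFT sizes a single block spans several frames, which is where blending neighboring results helps most.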

The project file is attached in a comment from my working PR. The packed audio is made by myself and licensed under CC0.

The last two weeks will be busier, since there are many things to wrap up and the final evaluation of GSoC is coming up. Documentation and bug fixes will be the primary tasks in the remaining work period.

Week 12

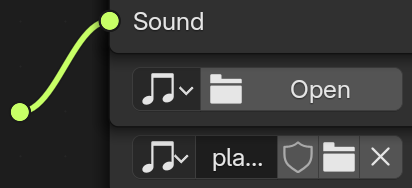

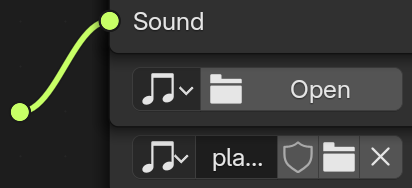

This week consisted of bug fixes and documentation (PR). There’s also a minor change to the interface of the Sound socket that allows users to open sound files directly in the node editor, as shown below:

The caching system is performing well for now; however, there’s a working PR that creates more potential for better performance inside this node. After discussing it with my mentor, we decided to replace the current cache implementation with the ConcurrentMap specified in that PR (which will likely happen next week).

After the final week (Week 13) of the GSoC coding period, I’ll continue to work on and keep improving the node, then finally get my PRs merged into the main branch and open it for everyone to test and use.

Final Report

Demonstration

The video below uses the Sample Sound node and a simulation zone together to form a 3D waterfall plot.

The project file is attached in the description of my working pull request. The audio is made by myself and licensed under CC0.

Recap of the Goal

This project aims to:

- Provide the ability to retrieve sounds from files in Geometry Nodes,

- Generate amplitude/frequency response information based on several customizable parameters, and

- Be written in native C++ with caching/proxy operations to speed up execution.

State of Deliverables

Listed in Proposal

- A new socket type in Geometry Nodes called Sound that corresponds to Blender’s data-block type of Sound;

- A Sound Input node that can read a single Sound from Sounds in data-blocks;

- A Sample Sound node that takes a Sound, which then goes through several tunable internal processes, including:

  - Gain control (this can easily be handled by the user),
  - Playback progress (sample time) control,
  - Temporal smoothing,
  - Audio channel selection,
  - Frequency specification (FFT).

  The Sound will finally be converted to a corresponding amplitude/power value (as Float) by using the options above.

- WIP: A series of usage examples and documentation related to all the deliverables listed above.

Extra ones

- A Sound Info node that allows users to get the audio channels and sample rate from a sound.

- New units of frequency, Hertz (Hz) and Kilohertz (kHz), for expressing the pitch of a sound.

Pull Requests

Use Cases

The most common use case would be visualizing spectrum from a sound:

If combined with simulation zones (as demonstrated by the video at the beginning), it’s able to create a waterfall plot from a sound:

Next Steps

- Refine the manual and provide more usage examples for nodes listed above.

- Consider whether the Sample Sound node needs a “Bake” option that saves all amplitude values to a file. For now, the computation seems efficient enough.

- Keep fixing bugs and improving functionality until release!

What I Have Learned

Besides basic coding skills, I’ve gained two other invaluable lessons.

Firstly, think before you code. This is probably the most important thing I learned this summer. Coming up with a decent design is almost always harder than writing the actual code.

Also, don’t be afraid to ask. Although I’m probably not the best person to say this (it was actually my mentor who initiated the conversation most of the time…), I’ve come to realize the importance of exchanging opinions and discussing problems: it helps us find the best way to implement everything, and that’s what makes great software.

Last but not Least

I want to express my sincere gratitude to my mentor, Mr. Jacques Lucke @jacqueslucke, for his constant support and supervision on this project. The last and hardest part of making the node usable in production, i.e. the cache, is also based on his solution. I also appreciate everyone who helped me during this summer. Without them, this project wouldn’t be as complete as it is now.

While it’s the end of this year’s GSoC, it’s not the end of my journey in Blender. I’ll continue to do my part on this project and the rest of Blender’s components, for its continued contribution to the world of open source (creative) software.