It seems to me that there is a lot of misunderstanding in this thread. I can’t say I understand it all, but after reading about this topic for some time now, I think I can clarify some things for you.

Before I write everything, I would like to link a fairly long article by Chris Brejon that I guarantee is worth the read: OCIO, Display Transforms and Misconceptions - Chris Brejon

And then about Filmic vs “Standard”

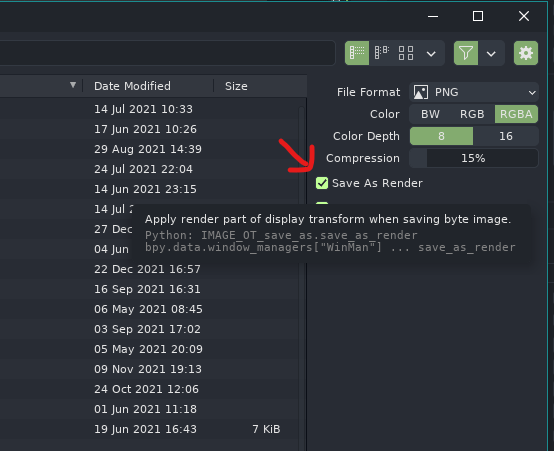

First of all, a showcase image showing why Filmic should be the default and why “Standard” should be avoided at all costs:

What does white mean? What did you mean when you said “white looks grey?”

Troy mentioned in his Hitchhiker’s guide to digital color that sometimes the simplest questions get overlooked, and that this snowballs into other misconceptions.

In this context, I assume you mean R=G=B=1. Here comes the problem: does it really mean white? What is white exactly? Think about it if you haven’t, because if you overlook this you will miss a lot more.

The answer is that, in current Blender’s case, R=G=B means the color sits at the Rec.709 colorspace’s white point, D65 (a color temperature of about 6504 K, one of the many standard “hues” for different kinds of white). (BTW this meaning of white would change after Cycles becomes spectral.) Note that the value does not have to be 1: R=G=B can be any number from 0 to infinity (this is why it is called open domain, or scene referred), and it would still be D65. Therefore, in the context of open domain scene linear, white and grey are the same color with different emission intensities.

However, our monitors cannot emit an infinite amount of light. You can have a Nishita sky texture in Blender using the real world’s sun strength, but can your monitor emit the same power as the sun? Can your monitor alone brighten an entire side of the Earth? Obviously not. So a color management system needs to do something about it. The “Standard” view transform was never designed to handle this problem: the sRGB standard EOTF was only ever supposed to deal with the range from 0 to 1, not 0 to infinity. Therefore the Standard view transform never worked for open domain renderers like Cycles and Eevee.
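To make the “white and grey are the same color” point concrete, here is a small sketch that converts a few R=G=B values to CIE xy chromaticity. It assumes linear Rec.709 primaries and uses the commonly published Rec.709-to-XYZ (D65) matrix; the function name is just mine for illustration.

```python
# Linear Rec.709 RGB -> CIE XYZ (D65), commonly published matrix.
RGB_TO_XYZ = (
    (0.4124564, 0.3575761, 0.1804375),
    (0.2126729, 0.7151522, 0.0721750),
    (0.0193339, 0.1191920, 0.9503041),
)

def chromaticity(rgb):
    """Return the CIE xy chromaticity of a linear Rec.709 RGB triplet."""
    X, Y, Z = (sum(m * c for m, c in zip(row, rgb)) for row in RGB_TO_XYZ)
    total = X + Y + Z
    return (X / total, Y / total)

# Same chromaticity at every intensity; only the emission strength differs.
for value in (0.18, 1.0, 16.0, 1000.0):
    x, y = chromaticity((value, value, value))
    print(f"R=G=B={value:>7}: x={x:.4f}, y={y:.4f}")
```

Every row should print roughly the D65 chromaticity (x about 0.3127, y about 0.3290), whether the value is 0.18 or 1000.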
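As a rough illustration of why a 0 to 1 encoding cannot carry open domain values, here is a sketch of the piecewise sRGB encoding for one channel. The clamp is my stand-in for what ends up happening on the way to the display; it is not a copy of Blender’s actual code path.

```python
def srgb_encode(linear):
    """Piecewise sRGB encoding of one linear channel, clamped to 0..1 first."""
    v = min(max(linear, 0.0), 1.0)  # anything above 1.0 is simply lost here
    if v <= 0.0031308:
        return 12.92 * v
    return 1.055 * v ** (1.0 / 2.4) - 0.055

# Scene values far above 1.0 all land on the same display value.
for scene in (0.18, 1.0, 4.0, 16.0, 1000.0):
    print(f"{scene:>7} -> {srgb_encode(scene):.3f}")
```

Everything from 1.0 upwards comes out identical, so a lamp at 4.0 and a sun at 1000.0 are indistinguishable after the transform.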

What does Filmic do differently?

In Filmic Blender’s documentation we have this quote from Troy:

Filmic does two things:

- It compresses the scene referred linear radiometric energy values down to the display / output referred range. This aspect is known as a transfer function or tone mapping. The shape of the Filmic Base Log with a contrast aesthetic roughly emulates a photographic film curve.

- It compresses the gamut for high intensity values. As colour ratios increase in intensity, highly saturated ratios tend to be resistant to transfer function compression, which results in peculiar feeling imagery with some regions feeling appropriately over-exposed and others “lingering” behind. Filmic considers all colour values fair game, and attempts to blend colours into a consistent output that matches our learned expectations from film emulsion-like media.

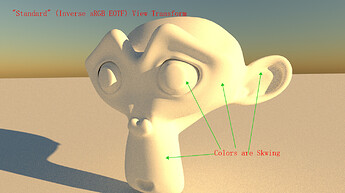

For the first point, Troy made this image back in the day:

From: cycles render engine - How to get accurate colors with Filmic Blender - Blender Stack Exchange

I believe this image by Troy is enough to understand the first point, also known as “tonemapping” (the name is actually misleading because it has nothing to do with “tone”, only intensity).

Filmic takes open domain intensities up to around 16 and maps them back to the 0 to 1 range for sRGB encoding. Therefore, if you want display referred white from Filmic, you need to use roughly R=G=B=16, not 1.

The second point is a bit harder to understand, but let’s break it down. Look at the graph above made by Troy. Look at the sRGB transfer output and compare it with the RGB ratio of the original scene value: the ratio has changed because the out-of-range channels are clipping.

This is the root issue behind the skewing. The bottom line is that you cannot have a monitor as bright as the sun, you just cannot. A simple tonemapper may extend the 0 to 1 range to something larger, but it still has an upper limit, and when values hit that limit they are still going to skew. Therefore this clipping problem will stay with us for the foreseeable future, if not forever. But a color management system needs to do something about it.

The first step in solving a problem is to study it. Note that this part is covered in the Chris Brejon article I linked above, so if you are interested please go read it.
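To show what “map a range up to around 16 back into 0 to 1” can look like, here is a plain log2 shaper. This is only a sketch and not Filmic’s actual curve (there is no contrast look here); the 0.18 middle grey and the 10 stops below / 6.5 stops above are assumptions I picked so that the top of the range lands near 16.

```python
import math

MIDDLE_GREY = 0.18   # assumed scene-linear middle grey
STOPS_BELOW = 10.0   # assumed range below middle grey, in stops
STOPS_ABOVE = 6.5    # assumed range above middle grey, in stops

def log_shaper(scene):
    """Map a scene-linear value to 0..1 with a plain log2 curve."""
    floor = MIDDLE_GREY * 2.0 ** -STOPS_BELOW  # avoid log of zero
    stops = math.log2(max(scene, floor) / MIDDLE_GREY)
    normalized = (stops + STOPS_BELOW) / (STOPS_BELOW + STOPS_ABOVE)
    return min(max(normalized, 0.0), 1.0)

top = MIDDLE_GREY * 2.0 ** STOPS_ABOVE
print(f"scene value that lands on 1.0: {top:.2f}")
for scene in (0.18, 1.0, 4.0, 16.0):
    print(f"{scene:>5} -> {log_shaper(scene):.3f}")
```

Unlike a 0 to 1 clamp, scene values of 1, 4 and 16 stay distinguishable here, and only something around 16 reaches the top of the output range.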
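The same effect can be reproduced with a few numbers. This sketch takes a made-up orange, raises its intensity, and compares the RGB ratio of the scene value with the ratio left after a per-channel clip to 0..1. The base color and helper names are hypothetical, chosen only for illustration.

```python
def clip01(rgb):
    """Clamp each channel to the 0..1 display range independently."""
    return tuple(min(max(c, 0.0), 1.0) for c in rgb)

def ratios(rgb):
    """Express a triplet relative to its largest channel."""
    peak = max(rgb)
    return tuple(round(c / peak, 3) for c in rgb)

base = (0.8, 0.4, 0.1)  # hypothetical orange in scene-linear RGB
for gain in (1.0, 2.0, 8.0, 32.0):
    scene = tuple(c * gain for c in base)
    shown = clip01(scene)
    print(f"gain {gain:>4}: scene ratio {ratios(scene)} -> clipped ratio {ratios(shown)}")
```

The scene ratio never changes, but the clipped ratio drifts from orange towards yellow and finally to a flat R=G=B once all three channels have hit the wall.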

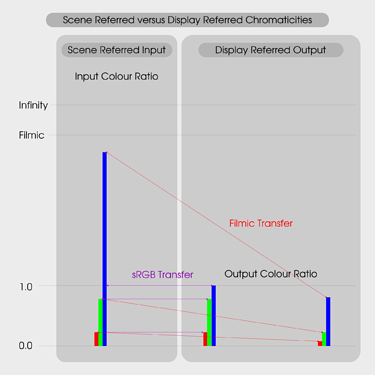

The skewing problem has been dubbed the “Notorious Six” by Troy and others. I don’t know who came up with the name first, but this is what they call it. Here is why:

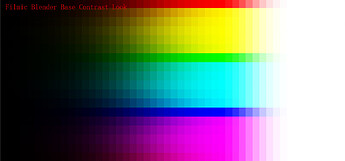

Here is a so-called “color sweep”, a range of different colors increasing in intensity from left to right. This is the easiest way to see the “Notorious Six”. As the intensity increases, the individual RGB channels hit their “1” walls, the ratio between the three gets skewed, and the wide range of colors collapses into only 6 colors.
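A tiny numeric version of the sweep shows the collapse. The twelve saturated RGB ratios below are my own hand-picked stand-ins for the hues in the image, and the per-channel clamp is again only a stand-in for the display limit.

```python
def clip01(rgb):
    """Clamp each channel to the 0..1 display range independently."""
    return tuple(min(max(c, 0.0), 1.0) for c in rgb)

# Twelve saturated RGB ratios stepped evenly around the hue wheel.
HUES = [
    (1.0, 0.0, 0.0), (1.0, 0.5, 0.0), (1.0, 1.0, 0.0), (0.5, 1.0, 0.0),
    (0.0, 1.0, 0.0), (0.0, 1.0, 0.5), (0.0, 1.0, 1.0), (0.0, 0.5, 1.0),
    (0.0, 0.0, 1.0), (0.5, 0.0, 1.0), (1.0, 0.0, 1.0), (1.0, 0.0, 0.5),
]

def distinct_after_clip(gain):
    """Set of distinct display colors left after scaling and clipping."""
    return {clip01(tuple(c * gain for c in hue)) for hue in HUES}

for gain in (1.0, 1.5, 4.0):
    print(f"gain {gain}: {len(distinct_after_clip(gain))} distinct colors")
```

At low gain all twelve colors survive; once the gain is high enough, only red, yellow, green, cyan, blue and magenta are left.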

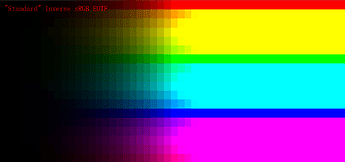

And here is Filmic:

Instead of letting the colors skew depending on the output device’s encoding, Filmic tries to map them manually to a more sane color. In layman’s terms it “desaturates” them; the more accurate term would be intensity based gamut mapping. The ground truth for the mapping is called “chromaticity linear” (or, in layman’s terms, the destination of the mapping stays on the same “hue” as the original intent). Note that you can still see the “Notorious Six” before the sweep goes to white, so Filmic is definitely not perfect, but at least it tries to deal with the problem.

In conclusion, Filmic should be the default instead of the “Standard” inverse sRGB EOTF, as it was designed to deal with the open domain scene linear data that Cycles and Eevee generate, while the “Standard” inverse sRGB EOTF was not.
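To give a feel for the idea of desaturating by intensity, here is a toy sketch. It is definitely not Filmic’s actual transform: the blend rule is something I made up for illustration. It scales an over-range color back so its peak sits at 1.0 (keeping the ratio), then mixes in more white the further over range the original was. Mixing with white in linear light moves the chromaticity on a straight line towards the white point, which is the “chromaticity linear” behaviour described above.

```python
def desaturate_toward_white(rgb):
    """Toy intensity based desaturation; an illustration, not Filmic's method."""
    peak = max(rgb)
    if peak <= 1.0:
        return tuple(rgb)                    # in range: leave untouched
    scaled = tuple(c / peak for c in rgb)    # same ratio, peak moved to 1.0
    t = 1.0 - 1.0 / peak                     # 0 at peak=1, approaches 1 as peak grows
    return tuple((1.0 - t) * c + t for c in scaled)

for scene in ((0.8, 0.4, 0.0), (4.0, 2.0, 0.0), (64.0, 32.0, 0.0)):
    print(scene, "->", desaturate_toward_white(scene))
```

The orange stays an orange on its way to white (red stays the largest channel, blue the smallest) instead of snapping to yellow the way the per-channel clip does.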