Point is, the whole industry was on sRGB (no Filmic) and has moved on to ACES. So ACES is the new standard. It's not easy to convert to ACES, since nearly all textures, game-related ones included, are in sRGB, and with 8-bit color inputs in Blender it's even worse.

So Filmic just ruins it even more, as you don't really see the results you would get in other render engines. It's just my advice, after a long career, to make these two standards the defaults. Currently Blender ships without ACES support, so that should also be changed.

So one of the first things I did in Blender was turn off the Filmic LUT. It's advice from professionals, not a duty.

ACES isn't the standard, and not even the most commonly used configuration. It's basically just an adopted pipeline (a colour management system, arguably), just as Filmic currently is in Blender. And Filmic doesn't ruin your renders; that's a hoax. Filmic is actually as good as ACES and ARRI K1S1, it's just not a common name in the VFX industry.

Well, I think everyone has a different picture of that based on their work experience. The people around me would shake their heads at your comment, but that's totally OK with me. It all depends on what you're doing. I don't see anyone using Filmic or having used it, so for me it's more of a problem that I remove in order to get the result I'm used to. That's one of the aspects of our job: people use software for different purposes. For anyone in the game industry, this discussion makes no sense at all.

But I can say that everyone here working with Redshift or V-Ray on animations (not stills) is using ACES right now. Hence it's a standard if you do jobs in the film and advertising business. But again, you might see it from a different vantage point, and I wouldn't say you're wrong. I guess there is no paper that analyses the whole industry.

I was just getting set up to do this, but it turns out that the Resolve LUTs aren't fully working. It's all well and good to tell people that they should be doing things a certain way, but it's often not practical or possible… I'm guessing this is a limitation on the Resolve side of things, but I'd wager a lot of editing/compositing apps will have similar limitations, and it's a bit ridiculous for me to tell clients what software they should be working in to support my renders.

https://github.com/sobotka/filmic-resolve

This is where you get it wrong.

LUTs are lookup tables, but a LUT doesn't solve the tonemapping. What you do in Resolve is the following:

- Load the linearized image as EXR.

- Use Filmic/ACES/whatever to tonemap it (that is, convert the linear HDR data to something displays can show and handle).

- Then apply LUTs or looks at will.
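To make that order of operations concrete, here is a minimal sketch under stated assumptions: a hypothetical 1D "look" LUT defined on display-referred [0, 1] values, and a simple Reinhard curve `x / (1 + x)` standing in for the tonemap. Reinhard is not Filmic or ACES; it only illustrates that a LUT has a bounded input domain, so feeding it linear HDR data directly collapses everything above 1.0.

```python
def apply_1d_lut(value, lut):
    """Sample a 1D LUT defined on [0, 1] with linear interpolation.

    Inputs outside [0, 1] are clamped, as a display-referred LUT expects.
    """
    x = min(max(value, 0.0), 1.0)
    pos = x * (len(lut) - 1)
    i = int(pos)
    if i >= len(lut) - 1:
        return lut[-1]
    frac = pos - i
    return lut[i] * (1.0 - frac) + lut[i + 1] * frac


def reinhard(value):
    """Stand-in tonemap (NOT Filmic/ACES): maps [0, inf) into [0, 1)."""
    return value / (1.0 + value)


# Hypothetical "look": a gentle contrast curve on display-referred values.
LOOK_LUT = [0.0, 0.2, 0.5, 0.8, 1.0]

# Scene-linear HDR values, as they would come out of an EXR.
scene_linear = [0.18, 1.0, 2.0, 8.0]

# LUT straight on linear data: everything at or above 1.0 clamps to the same output.
lut_only = [apply_1d_lut(v, LOOK_LUT) for v in scene_linear]

# Tonemap first, then apply the look, as described in the steps above.
tonemapped_then_look = [apply_1d_lut(reinhard(v), LOOK_LUT) for v in scene_linear]
```

With the LUT applied directly, 1.0, 2.0 and 8.0 come out identical; tonemapping first keeps them distinct, which is why the tonemap has to happen before any LUT or look.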

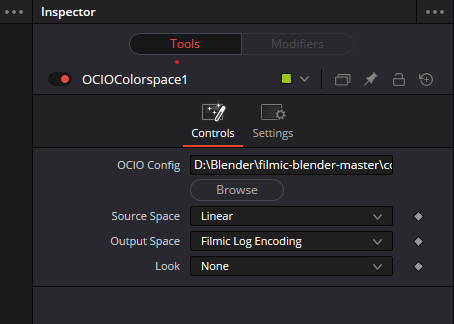

If you want to mimic Filmic, you need to take into account that it uses Filmic Log, so when you load Filmic, use the same settings as in Blender to get the same result.

And then you proceed. This way of working requires a full understanding of OCIO and what's going on. It's not as simple as loading a LUT and calling it a day.

Nope, definitely not as simple as loading a LUT and calling it a day. Thanks for the details, very much appreciated.

This is true. According to the Technical Introduction to OpenEXR, there is a chromaticities attribute that defines how to interpret the channels. It is given as CIE xy chromaticity coordinates of the red, green, and blue primaries and the white point. In particular, it is possible for the channels to represent CIE XYZ values directly.

One implication is that, regardless of what the channels actually represent, under this convention the pixel components are always linear: they map to CIE XYZ through a linear (3×3 matrix) transform.
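As a sketch of that implication, the standard derivation below builds the linear RGB-to-XYZ matrix from a set of chromaticities (xy of the three primaries plus the white point), which is the kind of data the attribute stores. The Rec.709/D65 values are, as far as I know, OpenEXR's default chromaticities; the function names are made up for illustration and are not part of any OpenEXR API.

```python
def xy_to_XYZ(x, y):
    """Chromaticity (x, y) -> XYZ with luminance Y normalised to 1."""
    return [x / y, 1.0, (1.0 - x - y) / y]


def det3(m):
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def solve3(m, b):
    """Solve m @ s = b for s with Cramer's rule (fine for a 3x3 system)."""
    d = det3(m)
    result = []
    for col in range(3):
        replaced = [row[:] for row in m]
        for r in range(3):
            replaced[r][col] = b[r]
        result.append(det3(replaced) / d)
    return result


def rgb_to_xyz_matrix(red, green, blue, white):
    """Linear RGB -> XYZ matrix such that RGB (1, 1, 1) maps to the white point."""
    primaries = [xy_to_XYZ(*red), xy_to_XYZ(*green), xy_to_XYZ(*blue)]
    p = [[primaries[c][r] for c in range(3)] for r in range(3)]  # primaries as columns
    scale = solve3(p, xy_to_XYZ(*white))  # per-primary intensity so white balances
    return [[p[r][c] * scale[c] for c in range(3)] for r in range(3)]


def apply3(m, v):
    """Matrix-vector product: linear RGB pixel -> XYZ."""
    return [sum(m[r][c] * v[c] for c in range(3)) for r in range(3)]


# Rec.709 primaries with a D65 white point.
M = rgb_to_xyz_matrix((0.64, 0.33), (0.30, 0.60), (0.15, 0.06), (0.3127, 0.3290))
```

The middle row of `M` holds the familiar Rec.709 luminance weights (about 0.2126, 0.7152, 0.0722), and because the mapping is a single matrix, doubling a pixel's RGB doubles its XYZ.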

I like the idea of being able to create render presets that could be saved and loaded. Or is this possible already?

As far as I know there isn't one; however, it could be created as an add-on. If there were a good list of all the settings needed for a few cases, it would be feasible to include them.

In some of my scripts I switch some preset render settings like this; very simple stuff actually, but it saves a bit of time: `bpy.data.scenes["Scene"].view_settings.view_transform = 'Standard'`
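A minimal sketch of such presets, assuming a preset is just a dict of property paths relative to a scene. The paths (`view_settings.view_transform`, `view_settings.look`, `view_settings.exposure`, `view_settings.gamma`, `display_settings.display_device`) are Blender scene properties, but the `STANDARD_SRGB` preset and the `apply_preset`/`capture_preset` helpers are hypothetical, not an existing add-on. Inside Blender you would pass `bpy.context.scene`; a `SimpleNamespace` stands in for it here so the sketch runs anywhere.

```python
from functools import reduce
from types import SimpleNamespace

# Hypothetical preset: dotted property paths relative to a Blender scene.
STANDARD_SRGB = {
    "view_settings.view_transform": "Standard",
    "view_settings.look": "None",
    "view_settings.exposure": 0.0,
    "view_settings.gamma": 1.0,
    "display_settings.display_device": "sRGB",
}


def apply_preset(scene, preset):
    """Set every dotted property path in `preset` on `scene`."""
    for path, value in preset.items():
        *parents, attr = path.split(".")
        target = reduce(getattr, parents, scene)  # walk e.g. scene.view_settings
        setattr(target, attr, value)


def capture_preset(scene, paths):
    """Read the current values of `paths` so they can be saved as a preset."""
    return {path: reduce(getattr, path.split("."), scene) for path in paths}


# Stand-in for bpy.context.scene so the sketch runs outside Blender.
scene = SimpleNamespace(
    view_settings=SimpleNamespace(view_transform="Filmic", look="None", exposure=0.5, gamma=1.0),
    display_settings=SimpleNamespace(display_device="sRGB"),
)
apply_preset(scene, STANDARD_SRGB)
```

Because presets here are plain dicts, saving and loading them could be as simple as writing them to and reading them from JSON; that part is left out of the sketch.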

For example, since Filmic alters the viewport colors, sampling colors with the eyedropper gives wrong values.

Aside from which color space should be the default and all that, there is one thing I just found out that kind of confuses me: is a utility pass like Ambient Occlusion supposed to also be affected by Filmic color grading?

My understanding of the AO pass is that it should span the whole range from white to black, as it's … well … a utility pass rather than a mapped color range, isn't it?

Are you serious? Do you know how photographers check colour? Do you think they pull out some complex model to figure out how to match white, or a simple swatch? “So what”: this is literally the problem. The only scenario where tone mapping is good for an artist who doesn't know what tone mapping is, or how to use it to benefit their work, is accidentally good photorealistic renders. For literally everything else this is a bad setting to have as a default.

Let's talk about why it's bad, shall we? If you import an image in the sRGB colour space, with the artist's intention that the viewer is going to see those colours, you instead get a horrible greyed-out mess. In the real world, what that usually translates to is adjusting the gamma and exposure (much simpler concepts to understand) to match. As soon as these settings are changed, all hope of a true one-to-one translation of assets imported into Blender goes out the window.
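To put numbers on both sides of this argument, here is a small sketch using the piecewise sRGB transfer functions from IEC 61966-2-1 and, again, a Reinhard curve as a hypothetical stand-in for a tonemapping view transform (it is not Filmic). Decoding an sRGB texel to linear and re-encoding it with the plain sRGB encoding (roughly what the "Standard" view does) gives the original value back, so textures display one-to-one; any tone curve in between changes them. The same Standard-style encoding, however, clamps every scene-linear value above 1.0 to display white.

```python
def srgb_decode(encoded):
    """sRGB-encoded value in [0, 1] -> linear light (piecewise IEC 61966-2-1 curve)."""
    if encoded <= 0.04045:
        return encoded / 12.92
    return ((encoded + 0.055) / 1.055) ** 2.4


def srgb_encode(linear):
    """Linear light -> sRGB encoding, clamped to [0, 1] like a display signal."""
    linear = min(max(linear, 0.0), 1.0)
    if linear <= 0.0031308:
        return linear * 12.92
    return 1.055 * linear ** (1.0 / 2.4) - 0.055


def reinhard(linear):
    """Stand-in tone curve (NOT Filmic): compresses [0, inf) into [0, 1)."""
    return linear / (1.0 + linear)


texels = [0.0, 0.02, 0.25, 0.5, 0.8, 1.0]  # sRGB-encoded texture values

# Standard-style view: decode then re-encode, so texels come back unchanged.
standard = [srgb_encode(srgb_decode(t)) for t in texels]

# Any tone curve in between: texture values no longer display as authored.
tonemapped = [srgb_encode(reinhard(srgb_decode(t))) for t in texels]

# Scene-linear highlights under the Standard-style encoding: all clip to white.
highlights = [srgb_encode(v) for v in (1.0, 4.0, 16.0)]
```

Both posters' points show up here: the Standard encoding is lossless for authored sRGB textures (a white texel still displays near 1.0, while the tone curve pulls it down to roughly 0.74), but it throws away everything brighter than 1.0 in a lit scene.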

Again, define “white swatch”: does it mean closed-domain sRGB (1, 1, 1), or does it mean something else? Do you think your color checker card in the physical world is defined in sRGB? Do you think the spectral reflectance of that card is specifically closed-domain 1 when you check your color? What value is “middle grey”, and how does Filmic handle middle grey?

Go on and re-read my post please.

Of course sRGB isn't real-world colour; it's a colour gamut. It also happens to be the most abundant standard for monitors, where gamuts like Rec.2020 or Rec.709 are not. Should you set up a program to be conceptually colour accurate and not tell new users this? Or should you set up your program to match colour to a widely compatible standard, and leave Filmic for people who have a much greater understanding of working with specialised colour spaces?

Please try to keep the discussions civil. Members of the Blender community, interacting on an official website such as DevTalk no less, should hold themselves to a higher standard than the tone and attitude displayed in this thread. This applies to everyone, regardless of whether you consider the color management default correct or not.

It is not a “color gamut”. It is a color space, which means it contains three components: primaries, transfer function, and white point chromaticity.

Again, go back to the questions I asked:

And I mentioned D65 is:

There you go. The photographers are using the white swatch to do what is usually called “white balance”; it is a kind of chromatic adaptation.

Both BT.709 and sRGB use the same D65 white point, but the thing you want to look white in the scene isn't always reflecting D65 light. What happens is that the photographer tells the camera “that chromaticity needs to be white in the shot”, and the camera answers “OK, I will detect that chromaticity and adapt it to the white point of the BT.709/sRGB encoding color space, which is D65”.
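White balance of this kind is commonly modelled as a von Kries-style chromatic adaptation: convert XYZ into a cone-like space, scale each channel by the ratio of destination white to source white, and convert back. The sketch below uses the Bradford matrix, a common choice in colour-management software, and adapts from illuminant A (tungsten-like light) to D65. What any particular camera does internally is not known here, so treat this as an illustration of the idea, not a camera pipeline.

```python
# Bradford cone-response matrix (XYZ -> sharpened "LMS"-like space).
BRADFORD = [
    [0.8951, 0.2664, -0.1614],
    [-0.7502, 1.7135, 0.0367],
    [0.0389, -0.0685, 1.0296],
]

ILLUMINANT_A = [1.09850, 1.0, 0.35585]  # XYZ of illuminant A white, Y = 1
D65 = [0.95047, 1.0, 1.08883]           # XYZ of D65 white, Y = 1


def matvec(m, v):
    return [sum(m[r][c] * v[c] for c in range(3)) for r in range(3)]


def inverse3(m):
    """Inverse of a 3x3 matrix via the adjugate."""
    (a, b, c), (d, e, f), (g, h, i) = m
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    return [
        [(e * i - f * h) / det, (c * h - b * i) / det, (b * f - c * e) / det],
        [(f * g - d * i) / det, (a * i - c * g) / det, (c * d - a * f) / det],
        [(d * h - e * g) / det, (b * g - a * h) / det, (a * e - b * d) / det],
    ]


def adapt_xyz(xyz, src_white, dst_white, cone=BRADFORD):
    """Von Kries-style adaptation: scale cone responses so src_white lands on dst_white."""
    lms = matvec(cone, xyz)
    src = matvec(cone, src_white)
    dst = matvec(cone, dst_white)
    scaled = [lms[k] * dst[k] / src[k] for k in range(3)]
    return matvec(inverse3(cone), scaled)


# The swatch that reflects illuminant A light is "told" to be white (D65).
balanced_white = adapt_xyz(ILLUMINANT_A, ILLUMINANT_A, D65)
```

By construction the source white lands exactly on D65, and because every step is linear, a mid-grey swatch under the same light lands on the same fraction of D65.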

TL;DR: It has nothing to do with you folks complaining about Filmic “greying” the result. Continuing to argue that will just take this off topic.

EDIT:

Also, Filmic still has some serious Notorious Six problems, so I agree to some extent that “Filmic as default is hurting the experience for people”. Every time I look at artworks from the Blender community, I can spot some color skews in the renders. It's time we get something better, like TCAMv2 or AgX. But going back to the “Standard” sRGB Inverse EOTF? That's not moving forward, that's going backwards.

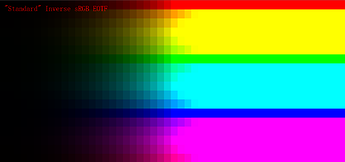

The “Standard” sRGB Inverse EOTF

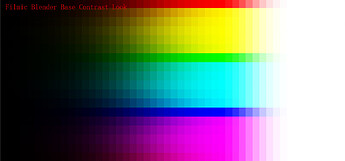

Filmic:

AgX:

TCAMv2:

Let’s move forward, towards a brighter future

I think one usability issue that leads users who don't know the subject (and who are not necessarily inexperienced artists) to feel the way the opening poster does is that the default Solid view is not color managed.

A typical workflow, perhaps even a common one in NPR and stylized semi-realistic work, may consist of choosing nice vibrant colors in Solid view with the Viewport Display shading settings, and perhaps even doing texture painting there. The user will work with the model in those colors for a good while, eventually apply the colors to the configured shader (usually Principled BSDF), and finally end up confused when looking at the shading in the rendered modes. If the Filmic view transform (currently the default) is selected, colors become washed out and gray-like, particularly if the model has been scaled down to human-like sizes (the default cube and lighting settings do not seem to exhibit serious issues in this regard, so they are not a good example).

So, for people with some understanding of Blender settings but not enough understanding of the underlying color management system, a way to make colors look close to how they were in Solid mode is setting the view transform back to Standard. However, if colors were initially adjusted with Filmic in the color-managed viewport display modes, setting the view transform to Standard would make them appear extremely saturated instead.

The bottom line is that while Filmic might produce technically better results, it does not match general user expectations or intuition, since most of the work is often done in the unmanaged Solid display mode.