Currently, if you want to use the compositor alongside the video sequence editor, you have to create a separate scene, do the compositing in that scene, and then add the scene back to the VSE as a strip. While being able to include scenes in the VSE is useful for many other things, it’s only a workaround when it comes to using compositor nodes. It can’t be used to make custom inputs, it can’t be applied to a strip, it can’t be used to make custom transitions, etc.

There have already been some nice proposals for this feature, such as this one. The intent of this proposal is to present a more fleshed-out idea of how it could be implemented. It could also bring some improvements outside of the VSE. The idea itself is fairly simple; most of this text explains how it can be applied to different cases (such as clip transitions).

The idea

What the idea essentially boils down to is removing the strict link between the scene and the compositor nodes, so that node trees can be used like most other data blocks.

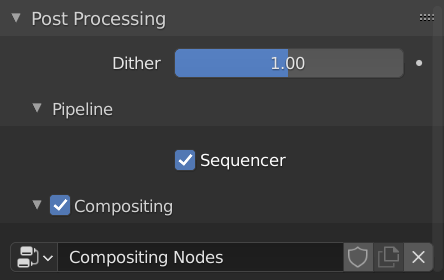

Just like meshes and materials are their own data blocks that you can assign to any object from a dropdown, compositor node trees would work the same way. You could have any number of them, and a single compositor node tree could be applied to multiple strips. The “Output Properties” tab of the Properties editor would have a dropdown for choosing the compositor node tree you want to use, similar to how the “World Properties” tab lets you pick the data block used for the background of the 3D world.

These images are only meant to illustrate the idea; they’re not an example of what the UI should look like.

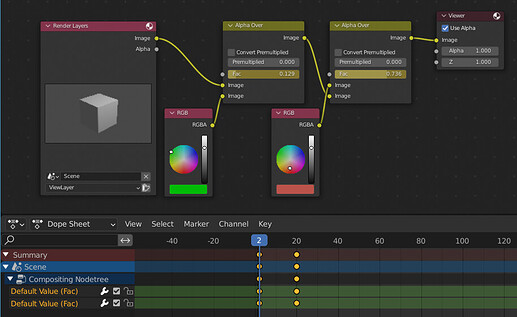

This would also make it possible to have multiple different compositor node trees for a scene, which you could quickly switch between.
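To make the data-block idea concrete, here is a minimal Python sketch of the relationships described above. It is not Blender’s API: `CompositorNodeTree`, `Scene`, `Strip` and `assign_tree` are hypothetical names, and the user count is only there to show that one tree can be referenced by several strips and scenes at once.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class CompositorNodeTree:
    """Hypothetical stand-alone compositor node tree data block."""
    name: str
    users: int = 0  # number of scenes/strips currently referencing this tree


@dataclass
class Scene:
    name: str
    node_tree: Optional[CompositorNodeTree] = None  # picked in Output Properties


@dataclass
class Strip:
    name: str
    use_nodes: bool = False
    node_tree: Optional[CompositorNodeTree] = None  # picked when Use Nodes is on


def assign_tree(owner, tree):
    """Point a scene or strip at a tree (or None), keeping user counts in sync."""
    if owner.node_tree is not None:
        owner.node_tree.users -= 1
    owner.node_tree = tree
    if tree is not None:
        tree.users += 1


grade = CompositorNodeTree("Color Grade")
glow = CompositorNodeTree("Glow")
shot = Scene("Shot 1")
strip_a = Strip("A", use_nodes=True)
strip_b = Strip("B", use_nodes=True)

assign_tree(strip_a, grade)  # one tree shared by two strips...
assign_tree(strip_b, grade)
assign_tree(shot, grade)     # ...and by the scene
assign_tree(shot, glow)      # quickly switch the scene to another tree
print(grade.users, glow.users)  # 2 1
```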

Using them in the VSE

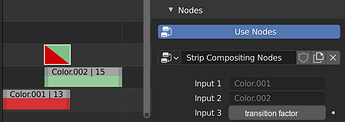

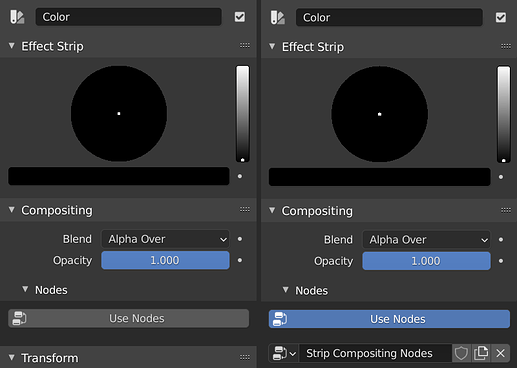

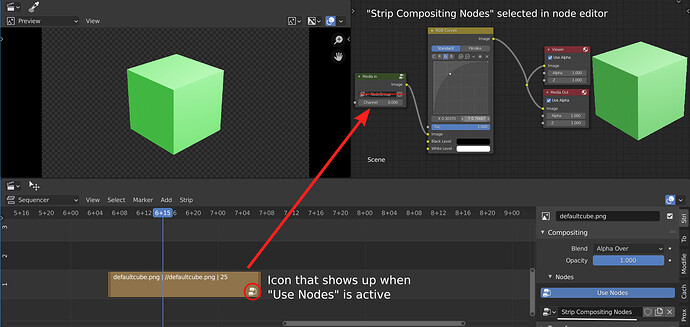

Each graphical sequencer strip would have an option to select a single compositor node tree when its “Use Nodes” toggle is enabled. This would replace the strip’s “Modifiers” section.

You wouldn’t be able to stack multiple node trees as in this proposal, because stacking is less flexible than using nodes and would only add complexity. Since these compositor node trees also work as node groups (more on that later), you can apply multiple effects by adding them inside the node tree of your sequencer strip. (This is explained in further detail in the “Changes to the compositor” section.)

A “Composite” strip would also be added. It would pass no media input to the nodes and would simply display the node group’s output.

Changes to the compositor

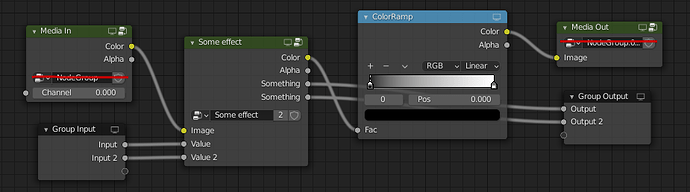

Each compositor node tree would be a node group, just like geometry node trees are: you’d be able to nest compositor node groups inside other compositor node groups, or use them as-is, e.g. on a VSE strip.
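A rough way to picture nesting: a node group maps inputs to outputs, so the same group can be used directly on a strip or dropped inside another group as if it were a single node. The Python below is a hypothetical model, not Blender code; it simplifies a node tree to a linear chain of steps (real node trees are graphs) and images to lists of 8-bit grayscale values.

```python
from typing import Callable, List, Union

# Toy stand-in for an image: a flat list of 8-bit grayscale pixel values.
Image = List[int]
Step = Union[Callable[[Image], Image], "NodeGroup"]


class NodeGroup:
    """Hypothetical compositor node group, simplified to a linear chain of
    steps. A step is either a plain operation or another NodeGroup."""

    def __init__(self, name: str, steps: List[Step]):
        self.name = name
        self.steps = steps

    def evaluate(self, image: Image) -> Image:
        for step in self.steps:
            if isinstance(step, NodeGroup):
                image = step.evaluate(image)  # a nested group acts like one node
            else:
                image = step(image)
        return image


def brighten(img: Image) -> Image:
    return [min(255, p + 10) for p in img]


def invert(img: Image) -> Image:
    return [255 - p for p in img]


look = NodeGroup("Look", [brighten, invert])
strip_nodes = NodeGroup("Strip Compositing Nodes", [look, brighten])

print(look.evaluate([0, 100]))         # used as-is: [245, 145]
print(strip_nodes.evaluate([0, 100]))  # nested: [255, 155]
```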

Bringing clips into the composite node tree

(Below is my reasoning for the idea, the idea itself is in the next subsection)

I wrote up my idea for this, but realized that it had a number of flaws. I rewrote it to address those issues, but there might be things I haven’t considered, so I’d like to hear what other people think.

These are the main things that have to be considered:

- The node groups should be easily reusable: on different strips, as a node group inside another node tree, as the scene compositor output, and as a transition between strips (e.g. it should be easy to apply a node-based transition to a strip, but the same node group should also be easy to use inside a node tree). They should also be able to take an arbitrary number of media inputs.

- There needs to be a clear distinction for where media inputs are passed in “automatically”: when the node tree is applied to a strip, or when it is used as a transition. The same goes for the media output. Node groups can have an arbitrary (but predefined) number of inputs, so it needs to be clear to both the user and the program how media is passed into and out of the node tree.

The fixed proposal

Media In node

A new node would be added, a “Media In” node. This node would work as a generic input node, taking whatever is passed to it: a sequencer strip, the scene, input from another node tree, etc.

(Special case: if the node group is used on a scene and there is no “Render Layers” node, the scene the node group is applied to is passed to the Media In node using the default render layer.)

The Media In node would have a “Channel” input, used to select which of the automatically passed-in media it receives. There would be a default channel (0) into which most things are passed: VSE strips, scene renders, etc. For cases like transitions between strips, a set of predetermined channels would be documented (e.g. strip transitioned from: 0, strip transitioned to: 1; transitions are covered in more detail later). A dropdown with a textual name for each channel would sit next to the input, to make it easier to select the correct channel.
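The channel lookup could be modelled roughly as follows. In this hypothetical Python sketch, the caller (a strip, a scene, a transition) hands the node group a mapping from channel numbers to media, and each Media In node picks one entry. `CHANNEL_NAMES`, `media_in` and `channel_dropdown` are invented for illustration, as is the choice to return `None` for a channel the caller doesn’t fill.

```python
from typing import Dict, List, Optional

DEFAULT_CHANNEL = 0

# Hypothetical documented channel tables: what each channel number carries
# depending on where the node group is used. The labels are illustrative.
CHANNEL_NAMES: Dict[str, Dict[int, str]] = {
    "strip": {0: "Strip"},
    "scene": {0: "Scene Render"},
    "transition": {0: "Transitioned From", 1: "Transitioned To"},
}


def media_in(passed_media: Dict[int, str], channel: int = DEFAULT_CHANNEL) -> Optional[str]:
    """What a Media In node set to `channel` receives; None if the caller
    passes nothing on that channel."""
    return passed_media.get(channel)


def channel_dropdown(context: str) -> List[str]:
    """Entries for the dropdown shown next to the Channel input."""
    return [f"{num}: {label}" for num, label in sorted(CHANNEL_NAMES[context].items())]


# What a transition strip would pass in (file names stand in for images).
passed = {0: "clip_a.mp4", 1: "clip_b.mp4"}
print(media_in(passed))                # clip_a.mp4
print(media_in(passed, channel=1))     # clip_b.mp4
print(channel_dropdown("transition"))  # ['0: Transitioned From', '1: Transitioned To']
```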

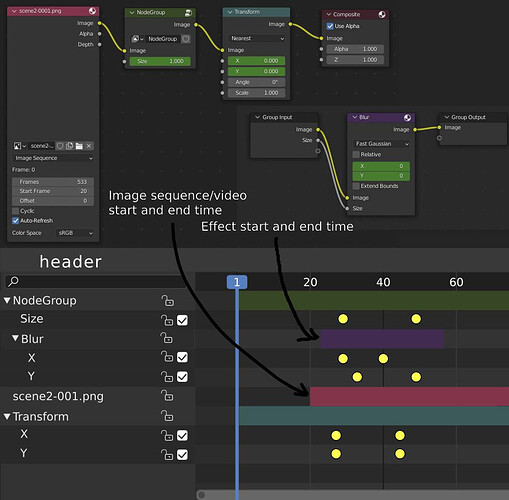

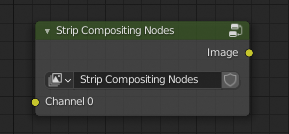

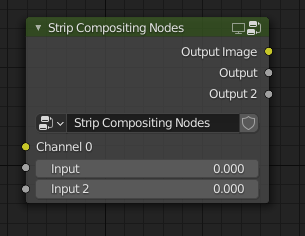

When the tree is used as a node group inside another node tree, each used channel would be listed at the top of the input sockets, before the node group’s own input sockets.

Media Out node

This node is the same as the current “Composite” node, but I’m calling it the Media Out node for consistency. (I haven’t given naming much thought; these are just the names I use to explain the idea.) Like the Media In node, the Media Out node is read by whatever the node group is used on. It is also shown at the top of the output socket list when the tree is used as a node group.

Group Input and Group Output nodes

When used inside another node tree, the Group Input/Output nodes would work the same way they do now. The only difference is that their sockets would be listed after the media in/out sockets, as mentioned above.

The group input/output nodes are never read from or written to automatically. This creates the distinction between user modifiable inputs/outputs and the ones written to and read from by other systems such as the sequencer.
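The socket layout described in the last few subsections can be summarised with a small hypothetical Python function. It only captures the ordering rule (media sockets on top, Group Input/Output sockets after them); the socket labels and the function name are made up for illustration.

```python
from typing import Iterable, List, Tuple


def group_node_sockets(
    used_channels: Iterable[int],
    group_inputs: Iterable[str],
    has_media_out: bool,
    group_outputs: Iterable[str],
) -> Tuple[List[str], List[str]]:
    """Socket order of a compositor tree used as a node group (hypothetical):
    media sockets first, then the Group Input/Output sockets."""
    inputs = [f"Media In {c}" for c in sorted(set(used_channels))]
    inputs += list(group_inputs)
    outputs = ["Media Out"] if has_media_out else []
    outputs += list(group_outputs)
    return inputs, outputs


# A transition-style tree reading channels 1 and 0, with two user parameters.
ins, outs = group_node_sockets({1, 0}, ["Blur Size", "Tint"], True, ["Mask"])
print(ins)   # ['Media In 0', 'Media In 1', 'Blur Size', 'Tint']
print(outs)  # ['Media Out', 'Mask']
```

When the same tree is applied to a strip instead, the sequencer would fill only the media sockets; the Group Input values would come from whatever the user sets in the strip’s properties.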

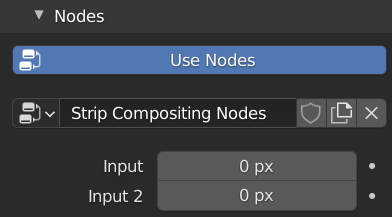

The Group Input node’s properties would be shown in the sequencer and in the Post Processing section of the Properties editor, just like how Geometry Nodes inputs are shown in the modifier panel. This would make it possible to create reusable templates.

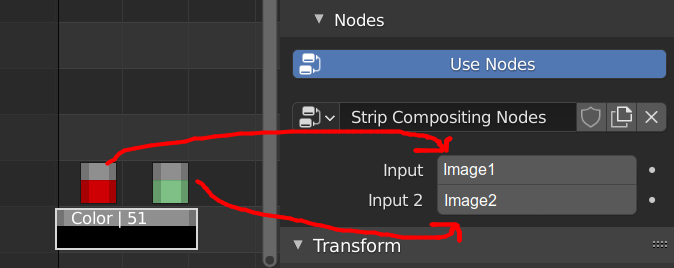

Example of a node group called “Strip Compositing Nodes”:

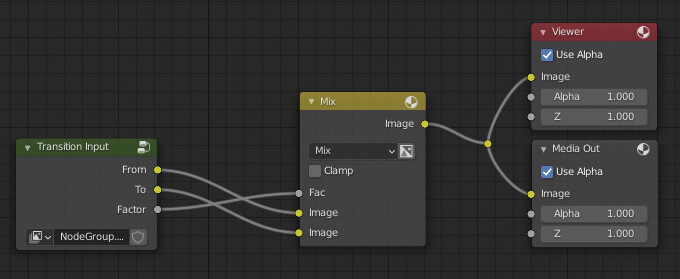

Used “as a node group”:

Applied on a strip in the sequencer:

Compositor keyframes and resolution

Each compositor node tree would have its own frame rate, which could be set, for example, under the “Use Nodes” button. The resolution of the output would be determined by the combined area taken up by the images in the composition (e.g. a composition containing only a 50x50 px image would be 50x50 px).
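One possible reading of this rule is the bounding box of every image placed in the composition. The Python sketch below assumes exactly that; the rectangle representation and `composition_resolution` are hypothetical, and other rules (for example ignoring fully transparent areas) would also be reasonable.

```python
from typing import Iterable, Tuple

# (x, y, width, height) of an image as placed in the composition, in pixels.
Rect = Tuple[int, int, int, int]


def composition_resolution(placed: Iterable[Rect]) -> Tuple[int, int]:
    """Width and height of the smallest box containing every placed image."""
    rects = list(placed)
    if not rects:
        return (0, 0)
    left = min(x for x, _, _, _ in rects)
    bottom = min(y for _, y, _, _ in rects)
    right = max(x + w for x, _, w, _ in rects)
    top = max(y + h for _, y, _, h in rects)
    return (right - left, top - bottom)


print(composition_resolution([(0, 0, 50, 50)]))                      # (50, 50)
print(composition_resolution([(0, 0, 50, 50), (30, -10, 100, 20)]))  # (130, 60)
```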

Keyframes in a compositor node tree would be local to that node tree. This is similar to how sequencer strip keyframes move along with the strip when you move it around the timeline.
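To illustrate how local keyframes and a per-tree frame rate could interact, the hypothetical Python sketch below maps a scene frame to the tree’s local frame (relative to the strip start and rescaled between the two frame rates), then samples the tree’s keyframes there. Linear interpolation is used only to keep the example short, and the function names are invented.

```python
from bisect import bisect_right
from typing import List, Tuple


def tree_frame(scene_frame: float, strip_start: float, scene_fps: float, tree_fps: float) -> float:
    """Convert a scene frame into the node tree's own local frame."""
    seconds_into_strip = (scene_frame - strip_start) / scene_fps
    return seconds_into_strip * tree_fps


def evaluate_keyframes(keys: List[Tuple[float, float]], frame: float) -> float:
    """Linearly interpolate sorted (frame, value) keys, holding the end values."""
    frames = [f for f, _ in keys]
    if frame <= frames[0]:
        return keys[0][1]
    if frame >= frames[-1]:
        return keys[-1][1]
    i = bisect_right(frames, frame)
    (f0, v0), (f1, v1) = keys[i - 1], keys[i]
    return v0 + (frame - f0) / (f1 - f0) * (v1 - v0)


# Keys stored in the tree's local time: fade from 0 to 1 over its first second.
fade_keys = [(0.0, 0.0), (12.0, 1.0)]  # this tree runs at 12 fps

# The same tree on a strip starting at frame 100 of a 24 fps scene...
print(evaluate_keyframes(fade_keys, tree_frame(112, 100, 24, 12)))  # 0.5
# ...and after moving the strip to frame 200: the keys move with it.
print(evaluate_keyframes(fade_keys, tree_frame(212, 200, 24, 12)))  # 0.5
```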

Sequencer in more detail

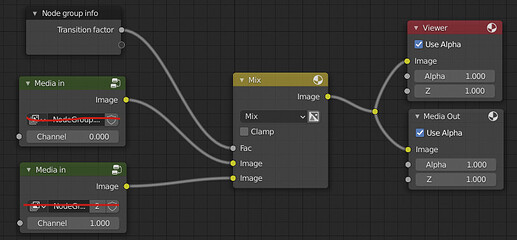

Transitions

A new “Node Group” (temporary name) transition strip would be added. It would be applied to strips the same way other transitions, like Gamma Cross, are applied, and it would have the same node group dropdown as other strips.

On the node side, in the Media In node, the channel input would be used to select which clip the Media In node receives. Channel 0 would be the strip that’s being transitioned from, and channel 1 the strip that’s being transitioned to.

The fade factor (the transition’s progress) would be exposed as a property on an appropriate node. When the node group is used inside another node tree, the transition factor would appear as an input socket, just like the Media In channels do.

Cross fade example

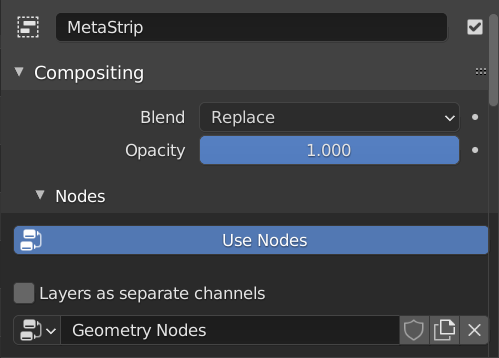
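A cross fade like this boils down to a per-pixel linear blend between the two Media In channels, driven by the transition factor. The hypothetical Python sketch below shows that; the factor definition (progress through the transition, clamped to [0, 1]) and the function names are assumptions, and images are reduced to lists of values.

```python
from typing import List

Image = List[float]  # toy stand-in: flat list of pixel values in [0, 1]


def transition_factor(frame: float, start: float, end: float) -> float:
    """Progress through the transition, clamped to [0, 1]."""
    if end <= start:
        return 1.0
    return min(1.0, max(0.0, (frame - start) / (end - start)))


def cross_fade(channel_0: Image, channel_1: Image, factor: float) -> Image:
    """Blend the strip transitioned from (channel 0) into the strip
    transitioned to (channel 1)."""
    return [(1.0 - factor) * a + factor * b for a, b in zip(channel_0, channel_1)]


from_img = [0.0, 1.0]
to_img = [1.0, 0.0]
print(cross_fade(from_img, to_img, transition_factor(10, 0, 20)))  # [0.5, 0.5]
print(cross_fade(from_img, to_img, transition_factor(20, 0, 20)))  # [1.0, 0.0]
```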

Meta strips

Meta strips would have a “Layers as separate channels” option, which would make it possible to access each layer individually by setting the layer number as the channel number in the Media In node. This is helpful if you have multiple layers with multiple strips and want to composite them together in the same way. The option would be off by default.
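A hypothetical Python sketch of the option: with it off, the meta strip passes its combined result on the default channel 0; with it on, layer N is exposed on channel N. The combining step is deliberately simplified (the top-most layer just covers the rest, with no alpha blending), and all names are invented for illustration.

```python
from typing import Dict

# Layer number -> what that layer shows on the current frame (strings stand in
# for images). Higher layer numbers are drawn on top, as in the sequencer.
Layers = Dict[int, str]


def flatten(layers: Layers) -> str:
    """Simplified combine: the top-most layer covers everything below it."""
    return layers[max(layers)]


def meta_strip_channels(layers: Layers, separate: bool = False) -> Dict[int, str]:
    """Channels a meta strip would pass to Media In nodes (hypothetical)."""
    if separate:
        return dict(layers)  # layer N is readable on channel N
    return {0: flatten(layers)}  # default: only the combined result on channel 0


layers = {1: "background.png", 2: "titles.png"}
print(meta_strip_channels(layers))                 # {0: 'titles.png'}
print(meta_strip_channels(layers, separate=True))  # {1: 'background.png', 2: 'titles.png'}
```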

Other things

- Additional feature: in the scene strip, a dropdown to select which compositor node tree to use for the render.

- Forward compatibility: older versions of Blender wouldn’t be able to handle this new system.

- Currently there is no timeline for the compositor to edit the image/movie start and end times. I’ll make a proposal for one later.

I didn’t include my reasoning for every section of this proposal. If you have any questions or suggestions for improving it, I’d like to hear them.
