GSoC 2024: File Import Nodes - Final Report

First of all, I want to thank @HooglyBoogly, @jacqueslucke, the Blender Foundation and Google for this program; I have learnt so much in the last few months. 12 years ago, when I started playing with Blender as a kid, I never imagined that one day I would be contributing to it.

This summer I developed the File Import nodes for Geometry Nodes, aimed at reducing disk usage by externalizing data and at enabling data visualization workflows in Blender.

Creation of New Node

Adding new nodes to Geometry Nodes is very well documented in the Blender developer handbook, which also links a PR to follow as an example. The API around nodes is also super well designed, with the ability to customise inputs and outputs as well as hook into the node search logic.

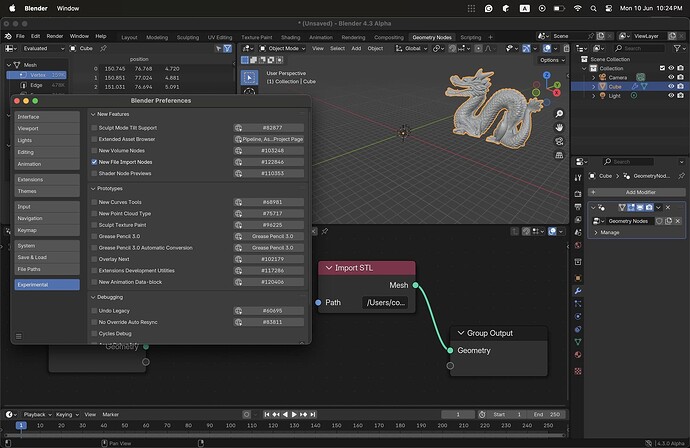

To keep development iterative, at the very start @HooglyBoogly suggested that we put all the nodes behind an experimental feature toggle and keep merging each node as it’s developed. You can test these nodes in Blender 4.3 Alpha by switching on the new file import nodes feature in the preferences.

Refactoring Existing Importers

Existing importers (STL, OBJ, PLY) all work through the Blender context and store the data loaded from the file into it. This posed the first issue: when the nodes in Geometry Nodes are executed, we don’t have access to the Blender context. This required major refactoring of the existing importers to expose the loaded geometry to the node graph outside of the Blender context.

STL, being the simplest of the three formats, was the first one I tried to refactor, and it was pretty straightforward to abstract away the importing logic and wrap it with two exposed methods: one for the existing Blender context and the other for Geometry Nodes.
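The shape of that refactor can be sketched roughly as below. This is only an illustration in Python, not the actual Blender C++ code: `MeshData`, `read_ascii_stl`, `import_into_scene` and `import_for_node` are hypothetical names, the "scene" is just a dictionary standing in for the Blender context, and the parser assumes ASCII STL with triangular facets.

```python
from dataclasses import dataclass, field


@dataclass
class MeshData:
    """Plain container for imported geometry; holds no application state."""
    vertices: list = field(default_factory=list)   # (x, y, z) tuples
    triangles: list = field(default_factory=list)  # (i0, i1, i2) index tuples


def read_ascii_stl(text: str) -> MeshData:
    """Core importer: parse ASCII STL text into a MeshData and nothing else."""
    mesh = MeshData()
    index_of = {}  # position -> vertex index, so shared vertices are reused
    corners = []
    for line in text.splitlines():
        parts = line.split()
        if len(parts) == 4 and parts[0] == "vertex":
            position = tuple(float(p) for p in parts[1:])
            if position not in index_of:
                index_of[position] = len(mesh.vertices)
                mesh.vertices.append(position)
            corners.append(index_of[position])
            if len(corners) == 3:  # assumes every facet is a triangle
                mesh.triangles.append(tuple(corners))
                corners = []
    return mesh


def import_into_scene(text: str, scene: dict, name: str) -> None:
    """Wrapper 1 (hypothetical): store the result in a context-like object."""
    scene[name] = read_ascii_stl(text)


def import_for_node(text: str) -> MeshData:
    """Wrapper 2 (hypothetical): return the geometry directly to the caller."""
    return read_ascii_stl(text)
```

The point of the split is that the parsing function knows nothing about where the mesh ends up, so both entry points can share it.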

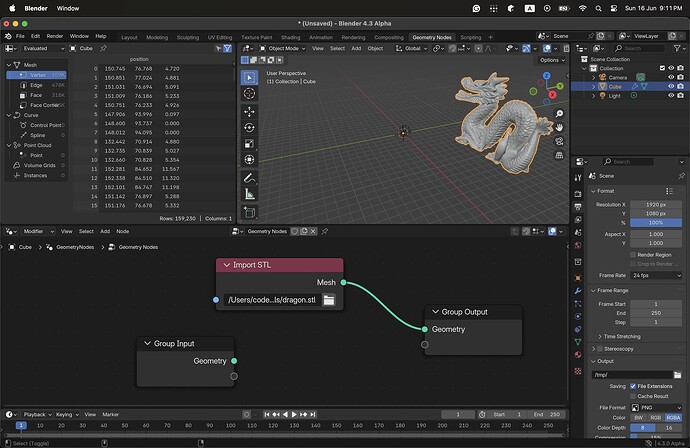

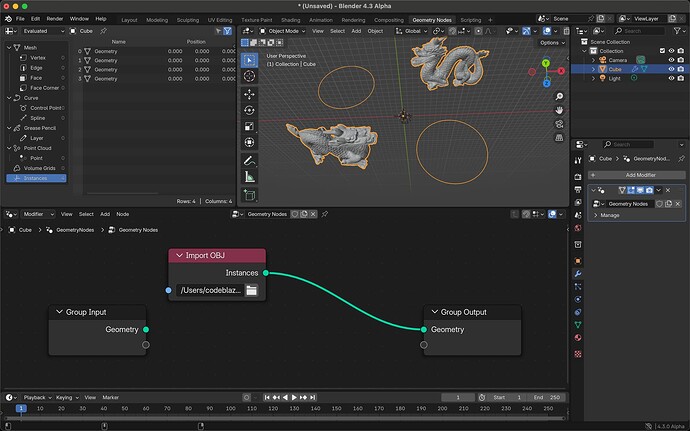

OBJ files were a bit trickier, as they can contain not only multiple meshes but also curves. At first I had no idea how we would handle such complications, but the GeometrySet has an Instances component, which is exactly what’s needed for this use case.

Importing as instances does add another challenge though, as we can’t just extract the core OBJ functionality and wrap it in two exposed functions like we did for STL. When the import is invoked from Geometry Nodes, we have to convert the imported meshes and curves into instances and then create the GeometrySet.

Another issue is the MTL file, as OBJ files can have materials. For now these are ignored, as there is no way to create materials from Geometry Nodes.

Point cloud files were pretty simple and similar to STL files. Personally, PLY support is the main reason I got involved with this project, as I have been looking to integrate Blender into my day-to-day computer vision research.

One thing that troubles me is that the PLY importer loads the data as a Mesh rather than a point cloud. This is something I want to look into further to see if it can be improved.

I mentioned before that the nodes API is very well designed, and I felt this the most when adding the file path selection UI to the import nodes. All I had to do was implement file_path as a socket subtype of String, and everything else just worked out of the box. This was something that I thought would take me a lot of time, but I was able to get it done pretty soon.

Midway

All the work so far was either refactoring existing code or building upon some well-designed APIs, but the next two tasks, CSV Import and Geometry Caching, needed to be built from the ground up.

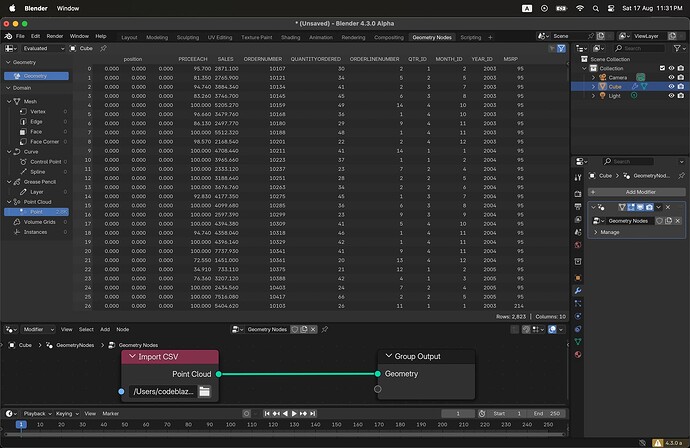

Blender doesn’t have the capability to import CSV files, so the first thing was to implement the importer. We didn’t want to use existing CSV libraries because it’s just simple string handling, right? Well, the importer is still pretty simple, but there were a lot of edge cases. Most of my time, though, went into designing the importer: figuring out which individual functions and utility classes should be created.

The following questions came up about how to import and use CSV data:

- Should a custom data type be created for CSV data?

  At first I thought this would be required, but after thinking about what the CSV data actually represents, it became apparent that we could just use a PointCloud, where each row is a point and each point can have arbitrary attributes.

- Which data types to support, and how to decide the data type of each column?

  For now we just support integers and floats, and we just look at the first row to decide which type should be used for the whole column (first try int, else use float). This is something I hope to expand upon in the future.
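To make the two answers above concrete, here is a minimal sketch of the idea in Python. It is not the Blender importer (which is C++ and does its own string handling); for brevity it leans on Python's `csv` module, and `detect_column_type` and `read_csv_as_points` are hypothetical names. It assumes the first line is a header and that every row has the same number of columns.

```python
import csv
import io


def detect_column_type(first_value: str):
    """Pick int if the first data row's value parses as int, else float."""
    try:
        int(first_value)
        return int
    except ValueError:
        return float


def read_csv_as_points(text: str) -> dict:
    """Return {column name: list of values}; entry i belongs to point i."""
    rows = list(csv.reader(io.StringIO(text)))
    header, data = rows[0], rows[1:]
    if not data:
        return {name: [] for name in header}
    # The type of each column is decided from the first data row only.
    column_types = [detect_column_type(value) for value in data[0]]
    attributes = {name: [] for name in header}
    for row in data:
        for name, convert, value in zip(header, column_types, row):
            attributes[name].append(convert(value))
    return attributes
```

For example, `read_csv_as_points("id,x\n1,0.5\n2,1.5\n")` gives an integer `id` attribute and a float `x` attribute with two points each. The sketch also shows the weakness of first-row detection: a column that starts with `1` and later contains `2.5` is typed as int and fails on the later row.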

I spent a week looking at the existing OBJ and PLY importers for inspiration before writing the CSV importer, and ended up with an implementation that I am quite proud of.

There are still a lot of features that need to be added to the CSV importer to make it fully useful, like user-selected column data types, and I’ll work on them post GSoC. It would also be nice to integrate this importer into the Blender context, to be able to import CSV files outside of Geometry Nodes.

I have to be honest: my first implementation of caching was very similar to my solution to the LeetCode question "design an LRU cache", and it worked, but I felt uneasy about it. Technically the final solution is also an LRU cache, but it is much more robust and beautiful, and was done by @jacqueslucke.

First Attempt

To get started quickly I just used boost’s lru_cache and got something up and running. Since boost is an optional dependency, I soon started implementing my own LRU cache, which didn’t take much time (copying from the LeetCode question).

At this point we had a cache that worked on a fixed number of items.
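For readers who have not seen the pattern, a fixed-item-count LRU cache looks roughly like this. It is a generic Python sketch built on `collections.OrderedDict`, not the code from the project; the class name and keys are made up for illustration.

```python
from collections import OrderedDict


class LRUCache:
    """Keeps at most `capacity` items, evicting the least recently used."""

    def __init__(self, capacity: int):
        self.capacity = capacity
        self._items = OrderedDict()  # oldest entry first, newest last

    def get(self, key):
        if key not in self._items:
            return None
        self._items.move_to_end(key)  # mark as most recently used
        return self._items[key]

    def put(self, key, value):
        self._items[key] = value
        self._items.move_to_end(key)
        while len(self._items) > self.capacity:
            self._items.popitem(last=False)  # drop the least recently used
```

With a capacity of 2, putting `a` and `b`, reading `a`, and then putting `c` evicts `b`, because `a` was touched more recently.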

Counting Memory

A fixed number of items is not at all ideal for Blender, as the size of each item is not fixed (one mesh could be a simple 8-vertex cube, another could be a million-vertex cube). So instead of the cache being limited by a fixed item count, the goal was to limit it by the total size of the cache (for example, 256 MB). This required computing the size of a GeometrySet.
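The difference from a count-based cache can be sketched as follows. Again this is a generic Python illustration and not Blender's cache: `SizeBoundedCache` is a hypothetical name, and the size of each item is simply passed in by the caller, whereas in Blender it has to be computed from the geometry itself.

```python
from collections import OrderedDict


class SizeBoundedCache:
    """LRU cache limited by the total size of its items, not their count."""

    def __init__(self, max_bytes: int):
        self.max_bytes = max_bytes
        self.used_bytes = 0
        self._items = OrderedDict()  # key -> (value, size_in_bytes)

    def get(self, key):
        if key not in self._items:
            return None
        self._items.move_to_end(key)  # mark as most recently used
        return self._items[key][0]

    def put(self, key, value, size_in_bytes: int):
        if key in self._items:
            # Replacing an entry: give back its old size first.
            self.used_bytes -= self._items.pop(key)[1]
        self._items[key] = (value, size_in_bytes)
        self.used_bytes += size_in_bytes
        # Evict least recently used entries until the budget is met,
        # but always keep the entry that was just added.
        while self.used_bytes > self.max_bytes and len(self._items) > 1:
            _, (_, evicted_size) = self._items.popitem(last=False)
            self.used_bytes -= evicted_size
```

One design choice in this sketch is that an item larger than the whole budget is still kept as the only entry; a real cache might instead refuse to store it.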

You can look at my attempt in this PR. I could only get size computation working for mesh components; @jacqueslucke then implemented the MemoryCounter API and, soon after, the Global Cache mechanism.

Big Boi Cache

Integrating with the global cache mechanism was super easy and made the code so much cleaner.

Future Work

I’ll just throw out some wild ideas I got while working over the summer.

- CSV improvements

  - more user-controlled import options

  - support for more types, like strings and complex types like vectors and colors

  - CSV exporter

- Add support for more non-3d data types (JSON, YAML, SQL) and enable visual data pipeline curation workflows

- Add support for scientific computing for Geometric Data

- List and iterator contexts in geometry nodes (suggested by @clankill3r here)

Summary

I learned a lot over this summer and also realised how much I still have to learn to become a decent C++ developer. I come from a research background and deal with Computer Vision, Machine Learning, AR/VR and Graphics but did spend 3 years in the industry writing JavaScript.

This opportunity is something I had been looking for for a long time. I enjoyed the whole process, and I’ll definitely continue to contribute to Blender.

Just a shameless plug in the end - check out my YouTube Channel