Hi all,

I’m Lucas and I’m excited to work on improvements to the Sequence Editor waveform drawing routines. Although I’ve contributed a couple of patches to Blender before, I’m a big open-source noob and I am excited to join the community and add to the project.

You can see my project proposal here: Blender project proposal - Google Docs

Synopsis

Blender supports video editing through its video sequence editor. While the editor allows users to load videos and audio files, computing the audio waveforms for the audio tracks can take a really long time when working with large files (multiple gigabytes). This makes for a degraded user experience.

This project will reduce the time taken to see the waveforms by:

- Processing multiple audio sequences in parallel in the background,

- Only computing the waveforms of sequences that are visible in the user interface

Once these initial speed ups are achieved, I’ll explore improvement opportunities lower down the audio processing stack.

Benefits

By speeding up the waveform computation in Blender, we’ll reduce the amount of time computational resources are locked up generating the data in the first place.

Creators will benefit from more immediate feedback whenever they add new sequences to their project. Blender will provide an enhanced and polished user experience with this strategy.

Deliverables

- Produce benchmark reports on Blender’s waveform computation

- Change waveform computation to be performed only for visible strips

- Compute waveforms in parallel

- Experiment report on changes to AUD_readSound

Once GSoC starts, I’ll post weekly updates in the comments.


Week 1 Report: May 29th to June 4th

- Wrote down some notes on how to configure and use Tracy to profile Blender. This is useful when you want to measure exactly how many times a certain code path gets triggered (you can’t do that with a sampling profiler).

- Got Blender profiling to work with Apple’s Instruments profiler.

- Looked for video assets I could use to profile Blender’s waveform computation. Settled on using assets from the Library of Congress since they are copyright free.

- Wrote a script (gsoc-2023-vse/Assets.make at main - gsoc-2023-vse - Blender Projects) to download and generate the video assets needed for testing. This script generates small, medium, and large .mov and .mp4 files (I picked those formats since they are the most commonly used video formats for editing).

- Agreed with @iss to change the deliverables order and work on parallelising the waveform computation of different strips.

- Read through the BLI_thread.h API.

- Read through the wm_jobs.c file to understand how jobs are created/fetched.

- Looked for examples within the Blender code base to see how a TaskPool can be used.

Notes

The Sequencer code uses the window manager jobs system to queue audio preview jobs. The window manager jobs system limits each owner (e.g. sequencer preview) to a single active job.

We can’t just push a job for each audio strip.

Next steps

- Investigate if we can just use different window manager jobs for each strip (this will probably cause problems with the progress bar).

- Investigate if we can just have that single window manager job push the audio strips to a TaskPool and have multiple threads work on that. Might need to synchronise how completed work is tracked in order to compute the progress bar.
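A minimal sketch of the second option, assuming plain `std::thread` workers as a stand-in for Blender's TaskPool (the names `StripJob` and `process_strips` are hypothetical and not part of Blender). A single job hands the strips to several workers, and a shared atomic counter is the only state that needs synchronising for the progress bar:

```cpp
#include <atomic>
#include <cassert>
#include <cstddef>
#include <functional>
#include <thread>
#include <vector>

// Hypothetical stand-in for one audio strip whose waveform must be computed.
struct StripJob {
  int strip_id = 0;
  bool done = false;
};

// Runs `compute` on every job using `num_threads` workers.
// `completed` is shared with the caller, so another thread could poll it and
// report progress as completed / jobs.size().
inline std::size_t process_strips(std::vector<StripJob> &jobs,
                                  unsigned num_threads,
                                  std::atomic<std::size_t> &completed,
                                  const std::function<void(StripJob &)> &compute)
{
  assert(num_threads > 0);
  std::atomic<std::size_t> next{0};  // index of the next unclaimed job

  auto worker = [&]() {
    for (;;) {
      const std::size_t i = next.fetch_add(1);
      if (i >= jobs.size()) {
        return;  // no work left
      }
      compute(jobs[i]);        // each index is claimed by exactly one worker
      completed.fetch_add(1);  // progress update, safe from any thread
    }
  };

  std::vector<std::thread> threads;
  for (unsigned t = 0; t < num_threads; ++t) {
    threads.emplace_back(worker);
  }
  for (std::thread &th : threads) {
    th.join();
  }
  return completed.load();
}
```

Because the counter only ever increases, the progress bar can read it without taking a lock. How this would map onto the real window manager job callbacks is not covered here.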


Week 2 Report: June 5th to June 11th

- Spent more time looking at the task pool utilities inside Blender: task_pool.cc

- Discussed the next steps I put down the previous week with @iss. Agreed it would make more sense to use a task pool instead of dispatching many jobs.

- Read through some more examples of TaskPool in Blender’s code base. In particular these were useful:

- Tested my task pool implementation to compare the speed between multi-threaded and single-threaded processing. Demo videos below (NOTE: both videos were recorded in debug mode):

- PR is up: #108877 - VSE: Process audio strips waveforms in parallel - blender - Blender Projects

Notes

I spent a lot of time debugging a use-after-free problem with my TaskPool implementation. I didn’t realize that BLI_freelinkN would deallocate the preview job data, and sometimes the threads would start executing after the data was freed. That caused a memory access violation error.

Thankfully the allocator marked the deallocated pointer location with 0xDDDDDDDDDDDDDDDD. It took a while, but once I finally noticed that, it was an easy fix.

Next steps

- Go through the PR review and get it merged.

- Measure the speed improvements in Release mode.

- Update report with speed improvement data.


Week 3 Report: June 12th to June 18th

- Not much progress due to heavy oncall load at work.

- @iss did some preliminary PR review and found a bug where we couldn’t push new files to the PreviewJob because of the TaskPool work-and-wait call.

- Read through the TBB task_group documentation to see if they had any way to just poll the task group. TBB is the library that backs Blender’s TaskPool implementation. No luck with polling.

- Read through other Blender code to see how things were done (thanks @iss for the suggestion). These are the files I went through:

- Updated PR with a new commit that uses a ThreadCondition variable to notify the PreviewJob when new audio jobs are added to the queue. This fixes the previous issue somewhat, but there’s still a chance that a new audio job gets added to the queue while the PreviewJob is terminating. In this case the audio job will leak because it isn’t caught by the PreviewJob. The same thing can happen with the code we have in main, but it is unlikely to be hit there because processing each file is slow and the items in the queue are processed serially.
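To illustrate the notification pattern and the race described above, here is a minimal sketch that uses `std::condition_variable` as a stand-in for Blender's ThreadCondition. The class `AudioJobQueue` and its methods are hypothetical. The one addition over a plain queue is that `push` reports failure once the consumer has shut down, so a late job is rejected visibly instead of being silently leaked:

```cpp
#include <cassert>
#include <condition_variable>
#include <deque>
#include <mutex>
#include <string>

// Hypothetical queue shared between the code that adds sound strips
// (producer) and the single preview job that processes them (consumer).
class AudioJobQueue {
 public:
  // Returns false if the consumer already shut down. The job was NOT queued,
  // so the caller can start a fresh consumer instead of losing the job.
  bool push(const std::string &path)
  {
    std::lock_guard<std::mutex> lock(mutex_);
    if (closed_) {
      return false;
    }
    jobs_.push_back(path);
    cond_.notify_one();  // wake the consumer if it is waiting
    return true;
  }

  // Blocks until a job is available or the queue is closed.
  // Returns false only when the queue is closed and fully drained.
  bool pop(std::string &r_path)
  {
    std::unique_lock<std::mutex> lock(mutex_);
    cond_.wait(lock, [this] { return closed_ || !jobs_.empty(); });
    assert(closed_ || !jobs_.empty());
    if (jobs_.empty()) {
      return false;
    }
    r_path = jobs_.front();
    jobs_.pop_front();
    return true;
  }

  // Called by the consumer when it decides to terminate.
  void close()
  {
    std::lock_guard<std::mutex> lock(mutex_);
    closed_ = true;
    cond_.notify_all();
  }

 private:
  std::mutex mutex_;
  std::condition_variable cond_;
  std::deque<std::string> jobs_;
  bool closed_ = false;
};
```

The important detail is that the "closed" check and the enqueue happen under the same mutex, so a job can never slip in between the consumer's last `pop` and its shutdown.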

Demos

These demos are recorded with a release build.

Multiple files in parallel

Adding new files while others are being processed

Note: progress bar updates work just fine with this implementation and account for new files being added.

Next steps

Figure out how to deal with the race condition mentioned.

Two possible approaches are:

- Never terminate the preview job and just let it block on the ThreadCondition variable.

- Add some state flag to the preview job (like bool terminating;): when the flag is true, sequencer_preview_add_sound keeps waiting until the job is done. It then creates a new preview job.


Week 4 Report: June 19th to June 25th

- Got @iss’s help with the PR review and got some pointers on how to use WM_event_add_notifier

- Stumbled on an issue trying to use WM_event_add_notifier and spent some time investigating it:

  - Just before an audio object is submitted to the PreviewJob, its tags field has the SOUND_FLAGS_WAVEFORM_LOADING bit set. If we just call WM_event_add_notifier in the sequencer_preview_add_sound function and exit, there will never be another attempt to enqueue the sound: the flag is never cleared.

  - I changed the code to clear the flag just before returning to get things working.

- Not much progress due to another oncall week at work, but it’s finally done. Will have more time to move the project forward this week.
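The loading-flag issue above can be sketched in isolation. This is an illustration only: `Sound`, `WAVEFORM_LOADING`, `should_request_waveform` and `try_enqueue` are hypothetical names, not the Blender ones. The point is that an early return from the enqueue path has to clear the bit, otherwise the draw code believes a load is still in flight and never asks again:

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical tag bit and struct, modelled on the description above.
constexpr std::uint32_t WAVEFORM_LOADING = 1u << 0;

struct Sound {
  std::uint32_t tags = 0;
};

// Draw-side check: request a waveform only if no load is already pending.
// Sets the bit so later redraws do not enqueue the same sound twice.
inline bool should_request_waveform(Sound &sound)
{
  if (sound.tags & WAVEFORM_LOADING) {
    return false;
  }
  sound.tags |= WAVEFORM_LOADING;
  return true;
}

// Enqueue-side: if the job cannot accept the sound right now, clear the bit
// before returning so that the next redraw retries the request.
inline bool try_enqueue(Sound &sound, bool job_accepting)
{
  assert(sound.tags & WAVEFORM_LOADING);  // caller must have tagged the sound
  if (!job_accepting) {
    sound.tags &= ~WAVEFORM_LOADING;
    return false;
  }
  return true;
}
```

Without the `&= ~WAVEFORM_LOADING` line, a rejected sound would stay tagged forever and its waveform would never be drawn.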

Next steps

- Get the PR merged

- Start looking into how we can compute waveforms only for visible strips


Week 5 Report: June 26th to July 2nd

- PR was up for review but we didn’t manage to get it merged (@iss was working in person with the rest of the Blender team).

- Chatted with @iss about the next steps:

  - The sequencer draw code already only requests waveforms for strips that are visible, so there’s nothing there we can improve

  - The best option to improve things regarding visibility would be to determine how much (%) of the strip is visible and only read enough samples to render that part of the waveform.

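I toyed with the idea of having a struct like this associated with each strip:

    struct WaveformSpanData {
        struct WaveformSpanData* next;  /* next loaded span in the list */
        void* waveformData;             /* waveform data for this span */
        uint8_t spanStart;              /* start of the span, in % of the strip */
        uint8_t spanEnd;                /* end of the span, in % of the strip */
    };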

Every time we need to re-draw the strip we do the following:

- Determine what “span” of the strip is visible

- Check if the current span overlaps with any existing ones

- If the span overlaps:

  - If the span is entirely contained within an existing span, we just draw the waveform.

  - If the span is not entirely contained, we merge the overlapping spans and only read the missing waveform data.

- If there are no overlapping spans, issue the read for this new span and push it to the WaveformSpanData list
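The "only read the missing waveform data" step above can be sketched as follows. This is an assumption-laden illustration, not the actual implementation: it uses a `std::vector` of hypothetical `Span` values (half-open percentage ranges) instead of the linked list, and `missing_ranges` is a made-up helper name.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical span: [start, end) expressed in whole percent of the strip.
struct Span {
  std::uint8_t start;
  std::uint8_t end;
};

// Returns the parts of `request` that are not covered by any loaded span.
// An empty result means the visible span is fully loaded and can be drawn.
inline std::vector<Span> missing_ranges(std::vector<Span> loaded, Span request)
{
  assert(request.start <= request.end);
  std::sort(loaded.begin(), loaded.end(),
            [](const Span &a, const Span &b) { return a.start < b.start; });

  std::vector<Span> missing;
  std::uint8_t cursor = request.start;  // first percent not yet accounted for
  for (const Span &s : loaded) {
    if (s.end <= cursor) {
      continue;  // span lies entirely before the part we still need
    }
    if (s.start >= request.end) {
      break;  // span (and all later ones) lie entirely after the request
    }
    if (s.start > cursor) {
      missing.push_back({cursor, s.start});  // gap before this loaded span
    }
    cursor = std::max(cursor, s.end);
    if (cursor >= request.end) {
      break;
    }
  }
  if (cursor < request.end) {
    missing.push_back({cursor, request.end});  // tail after the last span
  }
  return missing;
}
```

For example, with spans 10-30 and 50-60 already loaded, a request for 20-55 yields the single missing range 30-50, while a request for 12-25 yields nothing to read.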

I’m still working on the implementation.

I need to figure out how to deal with AUD_readSound.

I’m not totally sure if I can arbitrarily seek to a position in the AUD_Sound object. If I can, then it should be fairly easy to just seek the audio to a position before requesting the new waveform data.

NOTE: Span start/end are tracked as uint8_t since a span only goes from 0% to 100% of the strip. We’ll require that span start/end percentages be integers (instead of floating point).

Next step


Week 5 Report: July 3rd to July 9th

- Iterated a few times on the multithreading PR with the help of @iss. Still pending to be merged.

- I was able to get a working proof of concept of loading only the data for the visible part of a sound strip:

  - In sequencer_draw.cc we determine the start and end position of the audio strip (values between 0.0f and 1.0f)

  - When a PreviewJobAudio is enqueued for processing, it contains the start and end positions.

  - When the job is processed, we figure out the correct start and end sample indexes by multiplying the start/end positions by the total number of samples in the audio.

  - This lets us compute how many samples AUD should read in order to draw the visible waveform segment

  - While I initially thought that using uint8_t values for the start/end would be sufficient, it didn’t work as soon as I started testing with large files. Quite often <1% of the strip was visible. Using integer markers meant I had to load a lot more data than needed. I moved the code to use floating point numbers for now.

- Spent time reading through the following files:

- Unfortunately audaspace doesn’t offer the interface I need to make things work. I hacked (read: copy-pasted) the AUD_readSound function to get the PoC working. Need to figure out what the process is to get that code added to their repo.
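The position-to-sample mapping described in the proof of concept can be sketched like this. `SampleRange` and `visible_sample_range` are hypothetical names, and the rounding choice (floor the start, ceil the end, so the visible part is always fully covered) is an assumption rather than what the PoC necessarily does:

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstdint>

// Hypothetical result type: first sample to read and how many to read.
struct SampleRange {
  std::int64_t first;
  std::int64_t count;
};

// Maps the visible part of a strip, given as fractions in [0.0, 1.0],
// to a range of sample indexes.
inline SampleRange visible_sample_range(double start, double end, std::int64_t total_samples)
{
  assert(total_samples >= 0);
  // Clamp so that out-of-range or inverted inputs give an empty or valid range.
  start = std::min(std::max(start, 0.0), 1.0);
  end = std::min(std::max(end, start), 1.0);

  const double total = static_cast<double>(total_samples);
  const auto first = static_cast<std::int64_t>(std::floor(start * total));
  const auto last = static_cast<std::int64_t>(std::ceil(end * total));
  return {first, last - first};
}
```

This also shows why whole-percent markers are too coarse for large files. As an illustrative figure, two hours of audio at 48 kHz is 345,600,000 samples, so a single percentage point already corresponds to 3,456,000 samples, even when far less than 1% of the strip is on screen.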

Demos

NOTE: Both demos run a release build.

Blender Main

Changes present in Blender’s main branch (commit f2705dd9133a2594a152a6e1d48580e9c35d746e)

Partial waveform load proof of concept

Next steps

- Fill out the GSOC 2023 midterm report

- Get the multithreading PR merged

- Work out how to get the needed changes made to the audaspace library

- Prepare the PR with the changes to load only the visible part of the sound strips.


Week 5 Report: July 10th to July 16th

- Merged the multithreading PR.

- Still iterating over the design of loading partial sound segments. I just dumped all the ongoing changes to this single commit: https://projects.blender.org/Yup_Lucas/blender/commit/c623c3c266fc32b0903bbd7c141dd2032b56681d

- Dealing with multiple segments turned out to be more difficult than I initially anticipated:

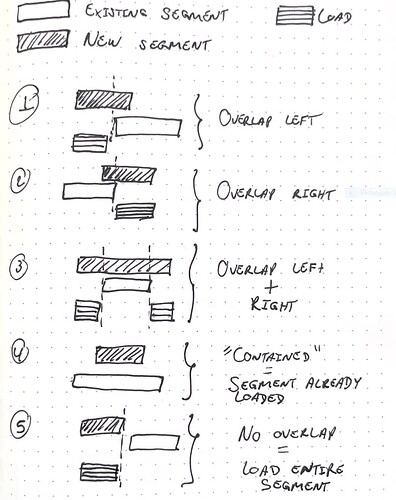

- When we need to draw an audio segment, it can fall under one of the categories below (see attached drawing for an illustration):

  - Overlap with the “left side” of an already loaded segment

  - Overlap with the “right side” of an already loaded segment

  - Overlap both the “left” and “right” sides of an already loaded segment

  - Contained inside an already loaded segment

  - Does not overlap any segment

- The problem with this overlap approach is that we need to compact segments (i.e., merge them) over time. If we don’t do that, we’ll end up with a huge list of segments that need to be iterated over every time.

I’m thinking along the lines of eagerly merging segments whenever a new segment is loaded. This can happen in the background thread responsible for loading segments.
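A minimal sketch of eager merging, assuming segments are kept in a sorted `std::vector` of non-overlapping ranges (the names `Segment` and `insert_and_merge` are hypothetical, and treating touching segments as mergeable is a design choice made here for simplicity). All five overlap categories listed above collapse into the same three branches:

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Hypothetical loaded segment: fractions of the strip in [0.0, 1.0].
struct Segment {
  double start;
  double end;
};

// Inserts `seg` into `segments`, which must be sorted by start and free of
// overlaps, merging it with every segment it overlaps or touches.
// The list stays sorted and compact after each call.
inline void insert_and_merge(std::vector<Segment> &segments, Segment seg)
{
  assert(seg.start <= seg.end);
  std::vector<Segment> merged;
  bool placed = false;

  for (const Segment &s : segments) {
    if (s.end < seg.start) {
      merged.push_back(s);  // entirely to the left: keep as is
    }
    else if (s.start > seg.end) {
      if (!placed) {
        merged.push_back(seg);  // first segment to the right: emit seg first
        placed = true;
      }
      merged.push_back(s);
    }
    else {
      // Overlaps or touches: absorb it into the segment being inserted.
      seg.start = std::min(seg.start, s.start);
      seg.end = std::max(seg.end, s.end);
    }
  }
  if (!placed) {
    merged.push_back(seg);
  }
  segments.swap(merged);
}
```

Each insert is linear in the number of stored segments, but since merging keeps that number small, the list never grows into the huge one the non-merging approach would produce.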

Diagram with segment overlaps

Next steps

- Keep iterating over the segment loading design


Week 7: July 17th to July 23rd

- Tried different approaches to partially load waveform segments. Nothing quite worked 100%:

  - Explored keeping track of segments in a linked list like outlined in the previous week.

  - Looked into Segment Trees as a way to keep track of what segments have already been loaded.

  - Looked into Interval Trees to keep track of loaded segments

- Currently investigating a bug in the current implementation (wip · 4113fe7771 - blender - Blender Projects) that causes Blender to crash.

Next steps

- Address bugs in the current implementation


Week 8 Report: July 24th to July 31st

Nothing to report this week. Had a busier than usual on call and I couldn’t get to the changes I had to make to get the implementation working.

I also let @iss know I am traveling this week (August 2nd till August 6th), so likely will be unable to make much progress here.

Week 9 Report: August 1st to August 6th

- Got partial sound data loading working. Only visible chunks get loaded as needed and this is able to leverage the multi-threading changes I made earlier.

- Trying to figure out how we can manage the partial waveform loading. The problem with loading only certain slices of sound is that the current drawing algorithm doesn’t work properly and causes some lines to get clipped.

- Got some pointers from @Ha_Lo on Blender Chat on alternative ways to draw waveforms that might work better for this use case.

WIP PR: #110837 - WIP: VSE: Implement partial waveform data loading - blender - Blender Projects

Next steps

- Nail down the waveform drawing to leverage the changes to use partial loads/multithreading.

- Look into the resources posted by @Ha_Lo


Week 10: August 7th to August 13th

- Looked into the history of AUD_readSound to see why the sound gets normalised by overall max value.

- Looked into the Bézier curve changes done in this PR as suggested by @tintwotin in the #vse channel. We’ll be able to use something similar to constrain the samples the waveform drawing uses.

- As suggested by @iss, I just changed my second version of the AUD_readSound function to avoid normalising values

- I am currently looking to do dynamic normalisation/renormalisation as suggested by @Ha_Lo (when we find a new max, rework the previous samples).
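One possible shape for the dynamic renormalisation idea, as a rough sketch only: the function `append_normalized` and its parameters are hypothetical, and how this would plug into the copied AUD_readSound variant is not shown. Samples are stored already normalised by the peak seen so far, and when a newly loaded chunk raises the peak, the stored samples are rescaled by old peak / new peak:

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

// Appends `raw_chunk` to `normalized`, keeping every stored sample divided by
// the largest absolute value seen so far. `peak` is the peak before this call.
// Returns the updated peak, which the caller passes to the next call.
inline float append_normalized(std::vector<float> &normalized,
                               float peak,
                               const std::vector<float> &raw_chunk)
{
  assert(peak >= 0.0f);
  float new_peak = peak;
  for (float v : raw_chunk) {
    new_peak = std::max(new_peak, std::fabs(v));
  }

  // A new maximum was found: rework the previously stored samples so that
  // they are expressed relative to the new peak.
  if (new_peak > peak && peak > 0.0f) {
    const float rescale = peak / new_peak;
    for (float &v : normalized) {
      v *= rescale;
    }
  }

  for (float v : raw_chunk) {
    normalized.push_back(new_peak > 0.0f ? v / new_peak : 0.0f);
  }
  return new_peak;
}
```

For instance, loading the chunk {0.5, -0.25} first stores {1.0, -0.5} with a peak of 0.5. Loading {1.0} afterwards raises the peak to 1.0 and rescales the stored data to {0.5, -0.25, 1.0}. The cost is a full pass over the stored samples whenever the peak changes, and anything already drawn with the old scale has to be redrawn.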

Next steps

- Finish the work on the partial loads

- Document the work done throughout the GSoC project


Week 11: August 14th to August 20th

Demos

Without partial loads (main branch)

With partial loads (linked PR)

Next steps

- Finish documenting the code changes

- Prepare final GSoC report

- Work through the PR review


Week 12: August 21 to August 27th


![[Blender] Demo waveform load in main branch](https://devtalk.blender.org/uploads/default/original/3X/d/1/d1d7056bf093bf57e5b94687b6991457796f96ca.jpeg)

![[Blender] VSE: Partial waveform loading demo](https://devtalk.blender.org/uploads/default/original/3X/9/2/922f7c81276cfe9297ae3cce446e6017e54a54b6.jpeg)