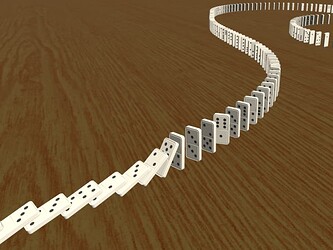

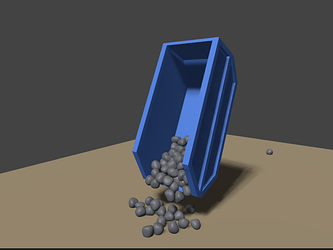

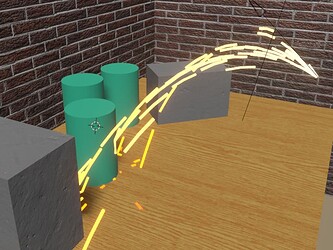


This is an experiment to demonstrate a combination of geometry nodes and rigid body physics in a “vertical slice”. The goal is to identify problem areas and possible directions for future work.

I want to stress that this is also NOT an official planning document or roadmap, just my personal exploration project.

WARNING!

This branch is unstable and requires a particular way of setting up nodes to make the simulation work. Existing features, like regular rigid body objects and the point cache, are partially disabled. DO NOT use this branch for important work!

Git branch: geometry-nodes-rigid-body-integration

Geometry nodes and iterative simulations

Since geometry nodes landed in Blender releases, several attempts have been made to use them for iterative simulations. The core idea is to feed the output of the nodes (usually a mesh) back into the next iteration. With some quasi-physics nodes one can get some pretty nice results:

- Hydraulic erosion by Simon Thommes

https://www.youtube.com/watch?v=H4nikXYGxYI

My own experiments:

These experiments require some Python scripting to “close the loop” and feed the output mesh back into the nodes.

Python code copying depsgraph results back to the mesh:

```python
import bpy


# Copies the depsgraph result to bpy.data
# and replaces the object mesh for the next iteration.
def _copy_mesh_result_to_data(src_object, dst_object):
    depsgraph = bpy.context.evaluated_depsgraph_get()
    src_eval = src_object.evaluated_get(depsgraph)
    # Bake the evaluated (modifier) result into a new, regular mesh datablock.
    mesh_new = bpy.data.meshes.new_from_object(
        src_eval,
        preserve_all_data_layers=True,
        depsgraph=depsgraph)
    mesh_old = dst_object.data
    dst_object.data = mesh_new
    # Free the previous mesh so iterations don't pile up orphan meshes.
    bpy.data.meshes.remove(mesh_old)
```

One downside of the Python-based simulation hack is that it does not interact well with existing physics simulations in Blender, none of which have node-based integration yet. I decided to go a step further and implement geometry-nodes-based simulation in C/C++, in a rough-and-ready way, to test feasibility.

This experiment focuses on rigid body simulation, but the findings would apply to other physics simulations too.

Features

Simple “geometry cache” to enable the nodes modifier to access data from a previous iteration

This is somewhat similar to what the Point Cache feature does, but I decided to write my own because it was easier to just copy GeometrySet than to work around the outdated API.

Inserting geometry into the cache

Pulling cached geometry back into the nodes modifier
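To make the idea concrete, here is a minimal Python sketch of a frame-keyed geometry cache. It is an illustration under assumptions, not the branch’s C++ code: a plain dict of attribute lists stands in for GeometrySet, and `GeometryCache`, `insert`, `pull`, `invalidate` and `evaluate_frame` are hypothetical names. Deep copies mimic copying the geometry in and out, so the live geometry and the cached frames never share data.

```python
import copy
from typing import Callable, Dict, Optional

# Hypothetical stand-in for a GeometrySet: attribute name -> list of values.
Geometry = Dict[str, list]


class GeometryCache:
    """Stores one geometry snapshot per simulated frame (illustrative only)."""

    def __init__(self) -> None:
        self._frames: Dict[int, Geometry] = {}

    def insert(self, frame: int, geometry: Geometry) -> None:
        # Store a copy so later changes to the live geometry don't alter the cache.
        self._frames[frame] = copy.deepcopy(geometry)

    def pull(self, frame: int) -> Optional[Geometry]:
        # Return a copy of the cached geometry, or None if the frame was never simulated.
        cached = self._frames.get(frame)
        return copy.deepcopy(cached) if cached is not None else None

    def invalidate(self) -> None:
        # Meant for edits that require re-running the simulation,
        # not for plain timeline changes.
        self._frames.clear()


def evaluate_frame(cache: GeometryCache, frame: int,
                   step: Callable[[Geometry], Geometry]) -> Geometry:
    """One iteration: previous frame's result in, new result out and cached."""
    previous = cache.pull(frame - 1)
    if previous is None:
        previous = {"position": []}  # empty initial state
    result = step(previous)
    cache.insert(frame, result)
    return result
```

Each frame pulls the previous result, runs one iteration on it, and stores the new result, which is the loop the Python hack above closes by replacing the mesh datablock.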

Extension of the Bullet SDK integration in Blender to add and remove rigid bodies as needed

Current rigid body simulation is limited to individual Object ID blocks, but I want it to simulate point clouds (a.k.a. “particles”), instances, and mesh vertices (which is a bit silly but basically comes for free).

A number of specially named attributes are used to export relevant data to the rigid body sim, such as flags for enabling bodies and properties like the initial velocity. Currently these are just regular named attributes; eventually they would become more formalized built-in attributes. After simulation, the body transforms are copied back to the position and rotation attributes of the points and/or instances.

Adding rigid bodies based on GeometrySet data

Updating transforms after the rigid body simulation step

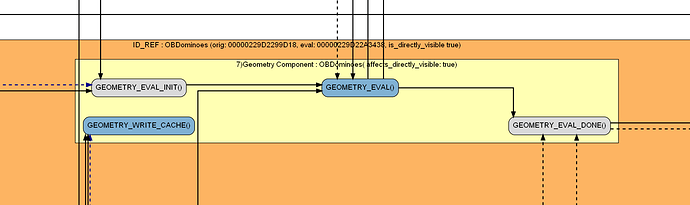
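A sketch of the export side in Python, assuming attributes are plain lists keyed by name. The attribute names `id`, `rigid_body_enabled` and `rigid_body_velocity`, and the `RigidBody` record, are hypothetical placeholders; the actual attribute names used in the branch are not listed here. The point is the mapping: enabled points get a body when first seen, existing bodies keep their simulated state, and bodies whose points disappeared are removed.

```python
from dataclasses import dataclass
from typing import Dict, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class RigidBody:
    """Hypothetical stand-in for a physics-engine body."""
    point_id: int
    position: Vec3
    velocity: Vec3


def sync_bodies(attributes: Dict[str, list],
                bodies: Dict[int, RigidBody]) -> Dict[int, RigidBody]:
    """Add bodies for newly enabled points and drop bodies whose points are gone.

    The initial velocity attribute is only read when a body is created;
    existing bodies keep whatever state the simulation gave them.
    """
    ids = attributes["id"]
    positions = attributes["position"]
    enabled = attributes.get("rigid_body_enabled", [True] * len(ids))
    velocities = attributes.get("rigid_body_velocity", [(0.0, 0.0, 0.0)] * len(ids))

    wanted = set()
    for i, point_id in enumerate(ids):
        if not enabled[i]:
            continue
        wanted.add(point_id)
        if point_id not in bodies:
            bodies[point_id] = RigidBody(point_id, positions[i], velocities[i])

    # Remove bodies whose points were deleted or disabled.
    for point_id in list(bodies):
        if point_id not in wanted:
            del bodies[point_id]
    return bodies
```

Keying bodies by a persistent point ID rather than by index is what lets bodies survive when points are added or removed between iterations.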

Depsgraph relations to ensure correct order of operations

The rigid body simulation is running on the scene level, outside of the geometry nodes process. The output of the node graph is an instruction for the rigid body simulation, not the simulation result itself. The dependency graph makes sure that the modifier is evaluated first, then the rigid body world updates internal rigid bodies for points we want to simulate. After the simulation the rigid body motion state is copied back into the simulated geometry, and finally stored in the cache for the next iteration.

Depsgraph relations for node ↔ rigid body interactions

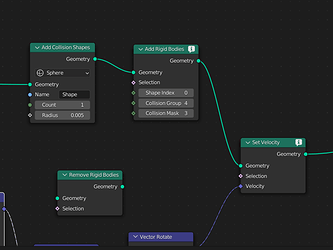

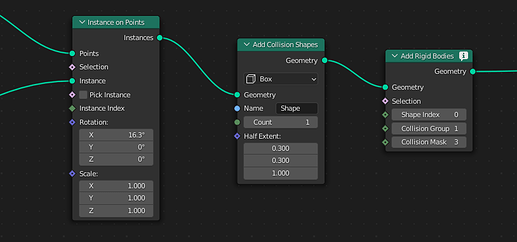
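The order of operations described above can be illustrated with Python’s standard `graphlib` module. The operation names are hypothetical labels for the steps in the text, not Blender depsgraph node names. The second part shows why extra relations are risky: if the modifier ever has to wait for its own exit node, the graph contains a loop and no valid order exists.

```python
from graphlib import CycleError, TopologicalSorter
from typing import Dict, List, Set


def evaluation_order(relations: Dict[str, Set[str]]) -> List[str]:
    """Return an order in which every operation runs after its dependencies."""
    return list(TopologicalSorter(relations).static_order())


# Each key runs after the operations in its set (hypothetical labels).
relations = {
    "modifier_eval": set(),
    "rigidbody_sync_bodies": {"modifier_eval"},
    "rigidbody_step": {"rigidbody_sync_bodies"},
    "copy_transforms_to_geometry": {"rigidbody_step"},
    "store_in_cache": {"copy_transforms_to_geometry"},
    "geometry_done": {"store_in_cache"},
}

print(evaluation_order(relations))

# A relation that makes the modifier wait for its own exit node forms a loop.
looped = dict(relations, modifier_eval={"geometry_done"})
try:
    evaluation_order(looped)
except CycleError:
    print("dependency cycle detected")
```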

New geometry nodes to spawn rigid bodies and collision shapes

Each body in the simulation needs a collision shape to interact with the world. Collision shapes can and should be shared as much as possible. Simple particles can all use the same basic sphere shape. Individual shapes can be generated based on instances, much like the Instance on Points node generates visual instances.

The current approach is to create shapes separately, and then assign them to bodies using a simple index. This requires some care by the user if different shapes are used, but is the simplest way to map bodies to shapes for now.

Multiple shapes can be created either from a set of instances (similar to the Instance on Points node) or as a fixed number of variations of a primitive shape (boxes, spheres, capsules, etc.). Compound shapes are supported by Bullet and are useful for convex decomposition, but require more design and testing.

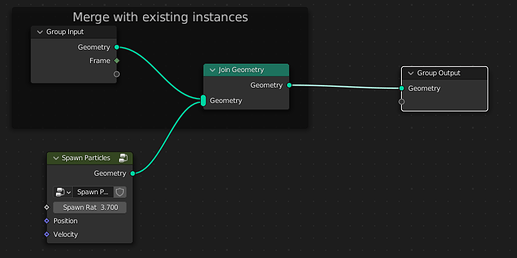
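A minimal Python sketch of index-based shape sharing: a short list of shapes, plus a per-body index into it. `Shape`, `make_primitive_variations` and `resolve_shapes` are hypothetical names, and clamping out-of-range indices is just one possible policy for the “care by the user” problem (skipping such bodies would be another).

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Shape:
    """Hypothetical collision shape description."""
    kind: str    # e.g. "sphere", "box", "capsule"
    size: float


def make_primitive_variations(kind: str, count: int,
                              min_size: float, max_size: float) -> List[Shape]:
    """Create a fixed number of primitive shapes with evenly spaced sizes."""
    if count <= 0:
        return []
    if count == 1:
        return [Shape(kind, min_size)]
    step = (max_size - min_size) / (count - 1)
    return [Shape(kind, min_size + i * step) for i in range(count)]


def resolve_shapes(shapes: List[Shape], shape_index: List[int]) -> List[Shape]:
    """Map each body to a shared shape object; out-of-range indices are clamped."""
    if not shapes:
        raise ValueError("at least one collision shape is required")
    last = len(shapes) - 1
    return [shapes[min(max(i, 0), last)] for i in shape_index]
```

Many bodies resolve to the same `Shape` object, so the number of distinct shapes stays at the size of the shape list no matter how many bodies are simulated.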

A typical setup is to generate geometry only on the first frame, or spawn particles based on some emission rate. These new instances or points are then joined with previous iteration geometry.
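A Python sketch of rate-based emission followed by a join with the previous iteration. The attribute-dict layout and the function names are hypothetical. Carrying the fractional remainder over to the next step is an assumed design choice: it keeps the long-run count equal to `rate * time` even when `rate * dt` is not a whole number.

```python
from typing import Dict, Tuple


def emit_count(rate: float, dt: float, carry: float) -> Tuple[int, float]:
    """Number of particles to spawn this step, plus the leftover fraction."""
    total = rate * dt + carry
    count = int(total)
    return count, total - count


def join(previous: Dict[str, list], new: Dict[str, list]) -> Dict[str, list]:
    """Concatenate attribute arrays; both inputs must have the same attributes."""
    if previous.keys() != new.keys():
        raise ValueError("attribute sets differ")
    return {name: previous[name] + new[name] for name in previous}


carry = 0.0
for frame in range(4):
    count, carry = emit_count(rate=2.5, dt=1.0, carry=carry)
    print(frame, count)
```

For the “generate only on the first frame” setup, the emit step would simply be skipped after the start frame, and the join would pass the previous geometry through unchanged.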

A specialized new SimulationComponent class is added to GeometrySet. It contains simulation data that cannot be encoded in point attributes, such as collision shapes. This is more of a hack to get inconvenient data to the rigid body simulation within the existing data structures, and it doesn’t work so well as a user-facing concept.

Further steps

Based on this experiment, here are some ideas for future simulation support. This is on top of the features already implemented here.

Systems Design (big ones first):

- Implement a “visual debugging” feature to help users investigate hidden physics issues. It should record collision shapes, contacts, forces, custom events, etc. over time, with a viewport overlay to show this data and make it selectable for reading numeric details. I would consider a feature like this essential for both users and developers. It is quite a bit of work, but not so difficult to design (many game/physics engines have some variation of this feature).
- Make the rigid body collection an optional feature for organizing bodies. The rigid body world should not need it as an optimization for finding bodies. Forcing objects into this collection adds automatic behavior that is not very transparent. Collections are a user-level feature that shouldn’t be abused like this (IMO).
- Dedicated node tree type for simulations (partially tested here):
  - Includes special inputs like time/frame and time step
  - Automatic merging of newly spawned geometry with the previous iteration. This could be done in separate “stages”, like emit vs. modify.
  - Limitation to “simulatable” data types (points/instances for particles and rigid bodies, meshes for cloth, curves for hair, volumes for fluids, etc.)
- Support “events” in simulation data, such as collisions (contact points). Those would be stored in the geometry as output from the simulation step. The nodes can then use this data to change geometry, kill and spawn particles, etc.
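A Python sketch of the “events” idea: the simulation step writes contact events into the geometry as plain data, and a later node-like step reacts to them, here by deleting particles that hit something hard enough. The two-domain layout (`points` and `events`), the `impulse` field and the threshold are all hypothetical.

```python
from typing import Dict, List, Tuple

# Hypothetical layout: point attributes and event records live in separate domains.
Geometry = Dict[str, Dict[str, list]]


def record_contacts(geometry: Geometry, contacts: List[Tuple[int, float]]) -> None:
    """Store (point_id, impulse) pairs reported by the physics step."""
    geometry["events"] = {
        "point_id": [point_id for point_id, _ in contacts],
        "impulse": [impulse for _, impulse in contacts],
    }


def kill_on_impact(geometry: Geometry, min_impulse: float) -> None:
    """Delete points that had a contact at least as strong as min_impulse."""
    events = geometry.get("events", {"point_id": [], "impulse": []})
    hit = {pid for pid, imp in zip(events["point_id"], events["impulse"])
           if imp >= min_impulse}
    points = geometry["points"]
    keep = [i for i, pid in enumerate(points["id"]) if pid not in hit]
    # Filter every point attribute with the same index list to keep them aligned.
    geometry["points"] = {name: [values[i] for i in keep]
                          for name, values in points.items()}
```

Because events are ordinary data in the geometry, the reaction step needs no special access to the physics engine; it only reads what the previous simulation step stored.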

Overhaul existing implementations:

- More flexible runtime cache implementation to replace the aging PointCache. It needs better serialization for all kinds of data. I tried this back in the day using Alembic, but that was not a great solution (Alembic is designed for DCC-to-renderer communication).
- Clearer distinction between editing and timeline changes in the dependency graph. I want to reset the simulation when the user changes node settings that require re-running the simulation. The cache should only be reset after such changes, not on regular time source updates.
- Node graphs potentially need to support suspending the execution task, or splitting into different stages. During a single frame update there are parts that need to happen before the rigid body sim (spawn particles, update physics forces) and parts that need to happen after it (react to events, apply physics motion, update render data). Currently the post-sim steps are simple enough to be hardcoded, but it could be important to modify data based on the new frame’s physics state to avoid lagging visuals.
- Good opportunity to move BKE rigidbody code to C++. It currently uses a mix of C and C++, which is a bit fiddly. Using C++ would remove the need for the RBI C API. The calling code is mostly C++ already, so few C wrappers, if any, are needed.
- The current Bullet integration in Blender does not use collision groups and masks to their full potential. It uses a custom broadphase filter to add collision groups, on top of the internal group/mask system Bullet already has. This is easier to manage with collections (formerly “groups”) in Blender, but it excludes the possibility of one-way interactions and of disabling self-collisions. These are essential features when using rigid bodies in large numbers as visual “particles”, because they drastically reduce the number of collision pairs that need to be computed.
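For reference, Bullet’s default broadphase filter accepts a pair only if each proxy’s collision filter group overlaps the other proxy’s mask. The Python sketch below mirrors that rule and uses it to disable particle self-collisions; the group bit values are arbitrary choices for the example, and pair counts ignore the spatial culling the broadphase would also do.

```python
from itertools import combinations
from typing import List, Tuple

# Arbitrary group bits chosen for this example.
STATIC = 1 << 0
PARTICLE = 1 << 1


def needs_collision(a: Tuple[int, int], b: Tuple[int, int]) -> bool:
    """Mirror of Bullet's default group/mask test; each body is (group, mask)."""
    group_a, mask_a = a
    group_b, mask_b = b
    return (group_a & mask_b) != 0 and (group_b & mask_a) != 0


def count_pairs(bodies: List[Tuple[int, int]]) -> int:
    """Count body pairs that pass the filter (no spatial culling)."""
    return sum(needs_collision(a, b) for a, b in combinations(bodies, 2))


ground = (STATIC, PARTICLE)
particles_all = [(PARTICLE, STATIC | PARTICLE)] * 100
particles_no_self = [(PARTICLE, STATIC)] * 100

print(count_pairs([ground] + particles_all))      # 5050: particles also hit each other
print(count_pairs([ground] + particles_no_self))  # 100: particles only hit the ground
```

Removing the particle bit from the particles’ own mask is enough to turn the quadratic particle-particle term into a linear one.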

Gnarly Areas

Just some details about things that currently don’t work quite right, both old and new.

Depsgraph complexity

The depsgraph needs additional nodes to handle the back-and-forth between geometry evaluation (i.e. the nodes modifier) and rigid body simulation. The geometry evaluation gets an explicit “DONE” node after the main eval, which is the new exit node and only finishes after rigid body syncing of transforms is complete. This might cause depsgraph loops in more complex cases; it is hard to tell.

Clearing cache and resetting simulation

Resetting the simulation requires removing and re-adding rigid bodies. It’s preferable to avoid allocating and freeing btRigidBody instances a lot, so eventually there should be a memory pool for this purpose. Adding and removing bodies from the world is still a bit buggy; old contact points seem hard to remove when resetting the simulation. The current Blender integration can ignore most of these issues because it only adds bodies once and destroys everything (including the world itself) when the timeline is reset.
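A minimal free-list pool, sketched in Python. In the C++ code this would hold btRigidBody instances; here a hypothetical `Body` class stands in, and `BodyPool` and `reset_simulation` are illustrative names. On reset, bodies go back to the pool with their state cleared instead of being freed, so the next run reuses the same allocations. Purging stale contact points on release would belong in the same place, but is not modeled here.

```python
from typing import List


class Body:
    """Hypothetical stand-in for an engine rigid body."""

    def __init__(self) -> None:
        self.reset_state()

    def reset_state(self) -> None:
        self.position = (0.0, 0.0, 0.0)
        self.velocity = (0.0, 0.0, 0.0)


class BodyPool:
    """Reuses body objects instead of allocating new ones on every reset."""

    def __init__(self) -> None:
        self._free: List[Body] = []
        self.allocations = 0  # number of Body objects ever created

    def acquire(self) -> Body:
        if self._free:
            return self._free.pop()
        self.allocations += 1
        return Body()

    def release(self, body: Body) -> None:
        body.reset_state()  # clear simulation state before reuse
        self._free.append(body)


def reset_simulation(pool: BodyPool, active: List[Body]) -> None:
    """Return all active bodies to the pool, e.g. when the cache is invalidated."""
    while active:
        pool.release(active.pop())
```

After a reset, acquiring the same number of bodies again creates no new objects; `allocations` only grows when the simulation needs more bodies than it ever had before.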

Memory management in rigid body world is dodgy

BKE rigidbody memory allocation is broken. Adding and removing bodies at runtime causes frequent crashes because of dangling pointers. It only works so far because objects are added only once and the whole RB world is destroyed and recreated on frame 1.

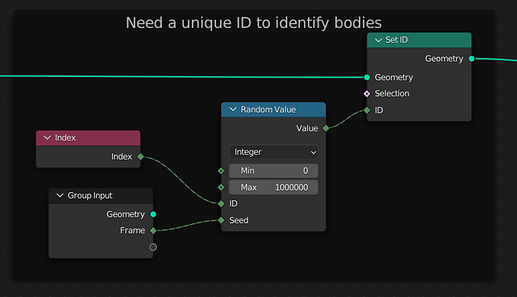

Mapping points/instances to rigid bodies requires unique and persistent IDs

I currently generate IDs in a somewhat crude way from the index, added on top of the largest ID of the existing points. This needs a more robust and automatic way to generate IDs for points that remain persistent over the whole simulation timeline and don’t repeat even if particles are destroyed.
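The weakness of “largest existing ID plus index” is that IDs can repeat: if the particle holding the largest ID is destroyed, the next spawn reuses its ID. A Python sketch of the alternative, assuming a counter stored with the simulation state rather than derived from the current points; `ids_from_max` and `IdAllocator` are hypothetical names.

```python
from typing import List


def ids_from_max(existing: List[int], count: int) -> List[int]:
    """Crude scheme: new IDs start above the largest ID currently present."""
    start = max(existing, default=-1) + 1
    return [start + i for i in range(count)]


class IdAllocator:
    """Hands out IDs from a counter that is saved with the simulation state."""

    def __init__(self, next_id: int = 0) -> None:
        self.next_id = next_id

    def allocate(self, count: int) -> List[int]:
        ids = list(range(self.next_id, self.next_id + count))
        self.next_id += count
        return ids


# The crude scheme reuses an ID after the highest-ID particle dies.
alive = ids_from_max([], 3)       # [0, 1, 2]
alive.remove(2)                   # particle 2 is destroyed
print(ids_from_max(alive, 1))     # [2] again

allocator = IdAllocator()
alive = allocator.allocate(3)     # [0, 1, 2]
alive.remove(2)
print(allocator.allocate(1))      # [3], never reused
```

For this to stay consistent when resuming from a cached frame, the counter would presumably have to be cached per frame along with the geometry.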

Applying rigid body transforms

Render data (points and instances) should get updated after rigid body simulation to include the simulated transform. Otherwise rendering will lag behind rigid body simulation by 1 frame.

The modifier node evaluation happens before rigid body simulation, so for now the post-sim rigid body sync is hardcoded to just update known location and rotation attributes of points and instances. Eventually this should be more flexible, allowing render data to use transform offset, and/or reacting to transform changes in a customizable way (e.g. change color of particles based on position). May require splitting the node eval into different stages.
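A toy Python illustration of the one-frame lag: if render data is taken from the geometry produced before the physics step (as the modifier output is), it shows the previous frame’s position; syncing the simulated transform back after the step removes the lag. One-dimensional positions and a constant velocity are simplifying assumptions standing in for real rigid body transforms.

```python
from typing import Dict, List


def physics_step(positions: Dict[int, float], velocity: float, dt: float) -> Dict[int, float]:
    """Toy 'simulation': every body moves at a constant velocity."""
    return {pid: pos + velocity * dt for pid, pos in positions.items()}


def run(frames: int, write_back_after_step: bool) -> List[float]:
    """Return the rendered position of body 0 on each frame."""
    bodies = {0: 0.0}
    rendered = []
    for _ in range(frames):
        render_pos = bodies[0]  # geometry as produced by the modifier (pre-sim)
        bodies = physics_step(bodies, velocity=1.0, dt=1.0)
        if write_back_after_step:
            render_pos = bodies[0]  # post-sim sync of the position attribute
        rendered.append(render_pos)
    return rendered


print(run(3, write_back_after_step=False))  # lags one frame behind the physics
print(run(3, write_back_after_step=True))   # matches the physics state
```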