This is just a new thread to continue the discussion started in the Cycles requests thread: Cycles Requests

Cycles X looks very promising

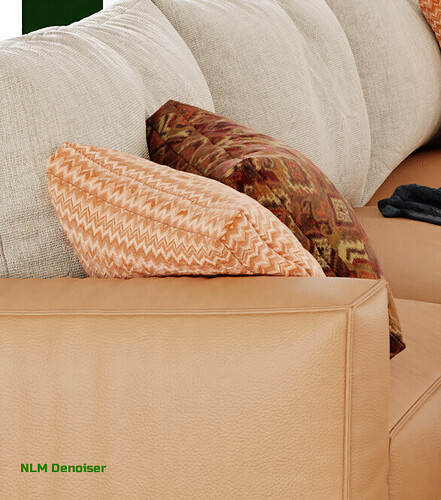

but why is the NLM denoiser planned to be deprecated? Big question.

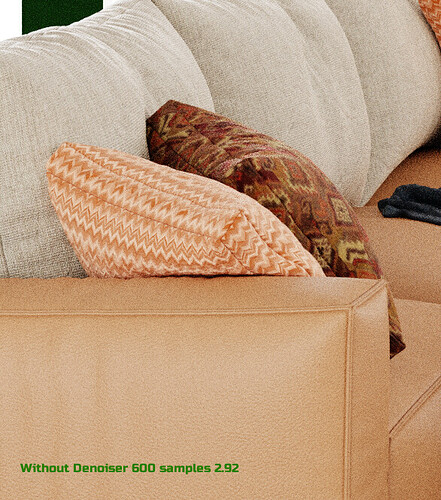

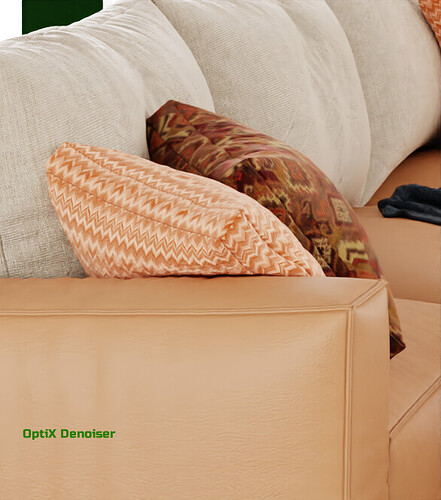

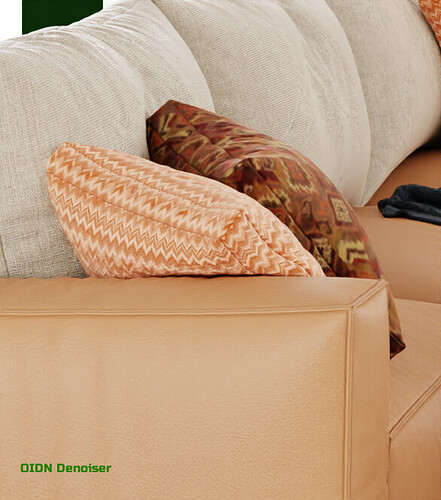

Rendered on GTX1070

In this test NLM looks more accurate

IMHO NLM is underestimated and does its job well

It will be sad to see future releases without it

I agree.

NLM is the best at keeping the render textures nice and sharp. It is great when you have a relatively clean final render and want to take out the last bit of fine noise.

OIDN smudges the textures too much for a final render unless you have a complex node setup in the compositor.

OptiX is great for preview rendering, but in a high-quality final render it also smudges the textures.

Eric

NLM also has numerous issues that have not been resolved.

- Large black fringes around small highlights (even with many samples)

- Black/discolored pixels in bright, yet very thin highlights

- Noise in high detail regions not being processed

- Massive artifact counts in low light areas (where fewer samples are usually found)

- Actual denoising being close to non-existent once you’re over 20,000 samples

- No general edge/gradient detection, completely blurring the reflections in non-specular materials

The only issue with the latest OIDN release (that is not also an issue with NLM) is some over-blur in high frequency details. It has gotten a lot better since version 1.1 (which was the first version to be included in Blender).

I agree. I never got away from NLM for the renderings I do (high resolution, fine details). OIDN is great for rough noise in glossy materials, but is very bad at preserving details. I hope that the devs rethink their decision and keep NLM alive in some way.

I think right now would be a great time for the devs to run some kind of poll to get feedback on what users care about. I’m sure NLM and tiled rendering will be rather high on that list.

In addition, the NLM denoiser is the only one available in Blender with the temporal denoising feature for animation (Cycles standalone).

I also remember that Lukas Stockner had commented somewhere that he had feasible ideas to further improve the NLM denoiser.

Anyway, I do not remember whether it was confirmed that the NLM denoiser is going to be discontinued entirely for Cycles-X, or just that it is not implemented for Cycles-X for now.

What I found helps preserve texture detail is recombining the passes inside the compositor and putting the OIDN node between the (diffuse + indirect) sum and the multiplication by color, so that you only denoise the luminance values, not the colors.
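To make the idea concrete, here is a minimal single-channel sketch in plain Python. It is not Blender's compositor API and not OIDN: `box_blur` is a hypothetical stand-in for the denoise node, and `recombine` is an illustrative name. The point is only the order of operations: sum the lighting passes, denoise that sum, then multiply the untouched color pass back in.

```python
# Sketch: denoise only the lighting, then multiply the clean color pass back in.
# `box_blur` is a hypothetical stand-in for the OIDN node, not OIDN itself.

def box_blur(img):
    """3x3 mean filter over a 2D list of floats (edge pixels are clamped)."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total = 0.0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    yy = min(max(y + dy, 0), h - 1)
                    xx = min(max(x + dx, 0), w - 1)
                    total += img[yy][xx]
            out[y][x] = total / 9.0
    return out


def recombine(direct, indirect, color, denoise=box_blur):
    """Return denoise(direct + indirect) * color, pixel by pixel."""
    h, w = len(color), len(color[0])
    light = [[direct[y][x] + indirect[y][x] for x in range(w)] for y in range(h)]
    clean = denoise(light)
    return [[clean[y][x] * color[y][x] for x in range(w)] for y in range(h)]
```

Because the color pass never goes through the filter, a checkerboard texture under flat lighting comes out unchanged, whereas running the same filter over the finished image would smear it.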

As a heads up, OptiX 7.3 (released this month) added temporal denoising. Presumably Blender will have support for this feature by the time the Cycles-X branch is merged into master.

Source: NVIDIA OptiX™ Downloads | NVIDIA Developer

And it’s only really a matter of time before OIDN supports temporal denoising.

I also agree that NLM and tiled rendering should come back…

OIDN is slow, OptiX is bad. NLM gives the best result even on low samples.

About tiled/GPU+CPU rendering, I actually noticed a significant slowdown in render times (about 2x slower), since I’ve always rendered my scenes using both, so I really hope they re-implement tiling or figure out a way to use the CPU as well, otherwise I won’t be able to benefit from the architectural speed-up at all.

Yes, I agree. It’s not that they will ignore temporal denoising. They are just trying to find a new AI-based temporal denoiser that is better. Not having a temporal denoiser is only temporary. Maybe they will improve NLM and put it back, or add something similar.

I didn’t notice that. I always had more artifacts in my renders with NLM. I agree that OIDN is very strong and smooths the picture too much. But OIDN is only bad if you use it at full strength; if you dial it back by mixing it with the noisy render, or do some compositing, it’s really great. I always use OIDN with a Mix node at a factor of 50 or 65 percent, and it always looks great for scenes without fabrics or very fine detail patterns. Those even look better than with NLM.
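For anyone unsure what the mix actually computes, it is a plain linear blend per pixel. Below is a small sketch in plain Python, not the compositor's API. The function name `mix` and the convention that the factor is the weight of the denoised image are assumptions here; in Blender it depends on which socket you plug each image into.

```python
def mix(noisy, denoised, factor):
    """Linear blend: factor=0 keeps the noisy render, factor=1 the denoised one.

    Assumption: `factor` is the weight of the denoised image.
    """
    if not 0.0 <= factor <= 1.0:
        raise ValueError("factor must be in [0, 1]")
    return [
        [(1.0 - factor) * n + factor * d for n, d in zip(row_n, row_d)]
        for row_n, row_d in zip(noisy, denoised)
    ]
```

At a factor of 0.65 roughly a third of the original grain is kept, which is what brings back some of the fine detail the denoiser smoothed away.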

But yes, as the sample images posted for comparison above show, NLM is the best for renders like those, with such small patterns and fabric, I guess. So we should still have an option like NLM so that it can be used for such scenes too. So I agree with you about that too. (It’s really interesting that the AI can’t guess details. Normally other AIs can understand details and even create new ones if they see patterns, but the new AI denoisers seem non-AI, with “AI” being more of a marketing name. I hope one day denoising AI really behaves like other AIs, such as image colorization AIs.)

In the Cycles-X announcement (Cycles X — Blender Developers Blog) they talk about wanting to better optimize multi-device rendering (multi-GPU and GPU+CPU) so that it offers better load balancing (and presumably performance) without the use of tiles. This will hopefully help in resolving the performance issues you see. Keep in mind that the Cycles-X branch of Blender is still in relatively early development.

Looking forward:

Multi-device rendering: we’ll experiment with more fine-grained load balancing without tiles

Another thing, it seems like the goal is to move away from tiled rendering. In slide 14 of the technical presentation for developers they talk about the benefits of using progressive rendering.

Technical presentation for developers link: Cycles X Technical Presentation - Google Slides

Assume progressive rendering

- Prepare for rendering algorithms that require progressive passes

- Pause and resume final renders

Great!

As I understand it, the Cycles-X branch is using only the GPU there, even if you have selected both in the preferences.

===========

About tiles vs. full-frame rendering: the main problem I have found in the Cycles-X build with full-frame rendering is the high memory usage, which grows with the output resolution.

Also, with small tiles and denoising, the memory usage is lower than with a full-frame render, at least for OIDN. Small tiles were important when rendering at large output resolutions, so as not to exceed the system RAM for OIDN, compared to denoising in the compositor.

I hope this has a solution with the new method and out of memory issues are not a problem.

In the technical presentation for developers, the aim is to reduce memory usage for high resolution renders by rendering the scene in tiles of a “2k” size.

Source (Slide 14): Cycles X Technical Presentation - Google Slides

Memory usage

- Split up render into big e.g. 2K tiles to support very high res renders

- But no longer rely on these tiles as a mechanism for work distribution between devices
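For a rough sense of scale, here is some back-of-the-envelope arithmetic in Python. The assumptions are mine, not from the presentation: a buffer of 4 float32 channels per pixel per pass, and `buffer_mib` is just an illustrative helper. The real Cycles buffer layout and pass count will differ.

```python
def buffer_mib(width, height, channels=4, bytes_per_channel=4, passes=1):
    """Approximate size of a render buffer in MiB.

    Assumes `channels` float32 values per pixel per pass; this is an
    illustration, not Cycles' actual memory layout.
    """
    total_bytes = width * height * channels * bytes_per_channel * passes
    return total_bytes / (1024 ** 2)


full_8k = buffer_mib(7680, 4320)   # one pass of a full 8K frame
tile_2k = buffer_mib(2048, 2048)   # one pass of a single 2K tile
print(f"8K full frame: {full_8k:.2f} MiB per pass")
print(f"2K tile:       {tile_2k:.2f} MiB per pass")
```

Every extra pass (denoising data, AOVs and so on) multiplies these numbers, which is why capping the working set at a 2K tile matters more the higher the output resolution goes.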

I was also informed by someone a while ago that OIDN actually has a RAM limit that can be activated. From my understanding, you set a RAM limit (e.g. 1 GB) and OIDN then works on the image in sections that fit within that limit, denoising it in tiles with overlap to reduce the artifacts that tiled denoising would otherwise cause. Keep in mind that the render itself does not need to be in tiles for the denoising to occur in tiles; OIDN can create the tiles itself.

The feature is mentioned in the documentation for OIDN and is listed as a parameter named “maxMemoryMB”

Source: Intel® Open Image Denoise

maxMemoryMB = approximate maximum amount of scratch memory to use in megabytes (actual memory usage may be higher); limiting memory usage may cause slower denoising due to internally splitting the image into overlapping tiles, but cannot cause the denoising to fail
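To illustrate what "internally splitting the image into overlapping tiles" can look like, here is a small Python sketch. It is not OIDN's actual algorithm: the function name, the tile size and the overlap width are all hypothetical, and OIDN chooses its own values based on the memory limit.

```python
def overlapping_tiles(width, height, tile, overlap):
    """Return (x0, y0, x1, y1) boxes that cover the image.

    Each tile's core region is padded by `overlap` pixels on every side
    (clamped to the image), so a filter sees some context across tile seams.
    """
    if tile <= 0 or overlap < 0:
        raise ValueError("tile must be positive and overlap non-negative")
    boxes = []
    for y in range(0, height, tile):
        for x in range(0, width, tile):
            boxes.append((
                max(x - overlap, 0),
                max(y - overlap, 0),
                min(x + tile + overlap, width),
                min(y + tile + overlap, height),
            ))
    return boxes
```

Each padded box would be denoised on its own and only the core region kept, which is why the seams stay clean at the cost of some repeated work.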

I believe OptiX may also support tiled denoising (after the rendering). Not 100% sure on this.

Both OIDN and OptiX tiled denoising may be expanded on with Cycles-X to reduce memory usage. Maybe the semi-tiled/progressive rendering will also have adjustments made to reduce memory usage? Only time will tell.

Also, just as a note, I’m not a developer, I’m just speculating and providing all the information I can to help people understand what MIGHT be planned.

Keep in mind that many of the plans for Cycles-X are still primarily just suggestions and things can change at any time.

The example here looks very promising (Smoother Denoising for Moving Sequences):

Hope we have OptiX temporal denoising soon in Blender.

I wonder if there was any way to test this for Cycles renders outside of Blender, with multilayer EXR sequences and denoising data for example.

Hi YAFU, you can, here are the command line switches from OptixDenoiser:

    Usage : ./optixDenoiser [options] {-A | --AOV aov.exr} color.exr
    Options: -n | --normal <normal.exr>
             -a | --albedo <albedo.exr>
             -f | --flow <flow.exr>
             -o | --out <out.exr> Defaults to 'denoised.exr'
             -F | --Frames <int-int> first-last frame number in sequence
             -e | --exposure <float> apply exposure on output images
             -t | --tilesize <int> <int> use tiling to save GPU memory
             -z apply flow to input images (no denoising), write output
             -k use kernel prediction model even if there are no AOVs
    in sequences, first occurrence of '+' characters substring in filenames is replaced by framenumber
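The '+' substitution rule in the last line can be sketched in Python as below. This is my reading of the usage text, not code from the SDK: `expand_frame` is a hypothetical helper, and the zero-padding to the width of the '+' run is an assumption based on file names like `Render0001.exr` produced from `Render0+++.exr`.

```python
import re


def expand_frame(pattern, frame):
    """Replace the first run of '+' in `pattern` with the frame number.

    Assumption: the number is zero-padded to the length of the '+' run.
    """
    match = re.search(r"\++", pattern)
    if match is None:
        return pattern  # no placeholder, name is used as-is
    width = match.end() - match.start()
    number = str(frame).zfill(width)
    return pattern[:match.start()] + number + pattern[match.end():]
```

So a pattern with three '+' characters leaves room for frames up to 999 before the number gets wider than the placeholder.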

I had to build the SDK to get the optixDenoiser binary, but I haven’t done any tests with it so far.

Cycles X is built with 7.3, but I did not test denoising quality, only getting it to work and the speed.

Cheers, mib

EDIT: I guess Cycles X is using the new denoiser, since it looks much better; it would make no sense to use the new SDK with the old denoiser.

Thanks mib, I will investigate about this.

I tried to test it outside of Blender using the compiled SDK applications like @mib2berlin suggested when I first discovered that OptiX had temporal denoising, but two things stand in the way:

- I believe the motion vectors pass generated by Cycles isn’t in the format OptiX expects, so issues may occur. By “not in the right format” I mean what the colour and brightness values encode in terms of direction and intensity. However, I could be wrong on this.

- I couldn’t get OptiX 7.3 to work as I didn’t have the required GPU driver (and because I run Linux and am not as comfortable messing around with certain things, I did not attempt to install the new drivers and will instead wait for them to be distributed by my distribution maintainers)

I will look into trying it again when I update my GPU drivers.

I also compiled the SDK (under Linux) and quickly tried to denoise an image sequence. I ignored the motion vector, albedo and normal passes for now and just fed it the beauty pass.

The first frame gets denoised but after that the denoiser fails with this message:

    ./optixDenoiser -F 1-201 -o /mnt/D/09_1a/Walk_in_the_park/denoised/denoise.0+++.exr /mnt/D/09_1a/Walk_in_the_park/to_denoise/Render0+++.exr
    Loading inputs for frame 1 ...
    Loaded color image /mnt/D/09_1a/Walk_in_the_park/to_denoise/Render0001.exr (1280x720)
    Load inputs from disk : 95.69 ms
    Denoising ...
    API Initialization : 183.44 ms
    Denoise frame : 19.19 ms
    Cleanup state/copy to host: 9.26 ms
    Saving results to '/mnt/D/09_1a/Walk_in_the_park/denoised/denoise.0001.exr'...
    Save output to disk : 100.44 ms
    Loading inputs for frame 2 ...
    Loaded color image /mnt/D/09_1a/Walk_in_the_park/to_denoise/Render0002.exr (1280x720)
    Load inputs from disk : 37.88 ms
    Denoising ...
    ERROR: exception caught 'CUDA call (cudaMemcpy( (void*)m_guideLayer.flow.data, data.flow, data.width * data.height * sizeof( float4 ), cudaMemcpyHostToDevice ) ) failed with error: 'invalid argument' (/home/steffen/NVIDIA-OptiX-SDK-7.3.0-linux64-x86_64/SDK/optixDenoiser/OptiXDenoiser.h:333)

I didn’t investigate any further but is a motion vector pass mandatory or optional? I ask because the error message says something about “flow.data”.
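In case someone wants to script a retry with the extra passes, here is a small Python sketch that assembles the command line from the switches listed earlier in the thread (`-F`, `-o`, `-n`, `-a`, `-f`). The helper name `denoiser_argv` and all file paths are hypothetical, and I am not claiming that supplying a flow pass fixes the error; that is exactly the open question.

```python
def denoiser_argv(color, out, first, last, normal=None, albedo=None, flow=None,
                  binary="./optixDenoiser"):
    """Build an argument list for the optixDenoiser sample application.

    Only switches quoted in the usage text are used; the optional guide
    passes are added only when a path is given.
    """
    argv = [binary, "-F", f"{first}-{last}", "-o", out]
    for flag, path in (("-n", normal), ("-a", albedo), ("-f", flow)):
        if path is not None:
            argv += [flag, path]
    argv.append(color)  # the color image comes last, as in the usage line
    return argv


# Hypothetical paths, for illustration only.
print(" ".join(denoiser_argv("in/Render0+++.exr", "out/denoise.0+++.exr",
                             1, 201, flow="in/Flow0+++.exr")))
```

The resulting list can be handed to `subprocess.run(argv, check=True)`, which makes it easy to compare a run with and without the flow pass.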

and @Alaska

Hi, is it possible you need the latest Cuda Toolkit, too?

I built the Cycles-X branch but couldn’t get it to work until I changed the CUDA Toolkit from 11 to 11.3.

Cheers, mib