No, that’s exactly how it should work with a tiny threshold and way too many samples (your 10000000). AS is steered by the threshold, so it stops rendering pixels that have reached the threshold difference. It keeps rendering all the other pixels until they reach max samples. The workflow is something like this: set the samples to the highest count necessary for the worst (noisiest) parts of the render to reach the noise level you deem good enough. Then throw AS into the mix with something like 0.0005, so the renderer stops on every pixel that gets clean enough easily and spends the remaining samples only on the difficult parts. I think AS is still a little borked with volumes.
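To make the "stop clean pixels early, keep sampling noisy ones" idea concrete, here is a minimal toy sketch. It is not how Cycles estimates error: the function name `samples_used`, the Gaussian noise model, and the use of the standard error of the mean as the stopping criterion are all assumptions for illustration.

```python
import math
import random

def samples_used(noise, max_samples, threshold, min_samples=2, seed=0):
    """Return how many samples a toy pixel takes before it is 'clean enough'.

    Assumption: the error estimate is the standard error of the running mean,
    which is only a stand-in for whatever the renderer really measures.
    """
    rng = random.Random(seed)
    mean = 0.0
    m2 = 0.0  # running sum of squared deviations (Welford's method)
    for n in range(1, max_samples + 1):
        x = 0.5 + rng.gauss(0.0, noise)  # one noisy sample of a 0.5-grey pixel
        delta = x - mean
        mean += delta / n
        m2 += delta * (x - mean)
        # Never stop before min_samples (and at least 2 are needed for a variance).
        if n >= max(min_samples, 2):
            std_error = math.sqrt(m2 / (n - 1) / n)
            if std_error < threshold:
                return n  # pixel converged, stop sampling it
    return max_samples  # never converged, used the full budget

print(samples_used(0.0, 2000, 0.001))                   # noise-free pixel stops right away
print(samples_used(0.0, 2000, 0.001, min_samples=200))  # min samples forces extra work
print(samples_used(1.0, 2000, 0.001, min_samples=44))   # very noisy pixel runs to max samples
```

In this toy model, raising min samples only adds work on pixels that were already clean, and a pixel whose noise cannot get under the threshold within the budget costs exactly as much as it would with uniform sampling.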

OK, interesting. You’re never done learning Blender.

I think there is still a problem with adaptive sampling and volumes.

So for a proper test, please set samples back to 2000 and the threshold down to 0.001 or less.

If it still looks bad, try setting min samples to 100, 200 or more (it really depends on the scene).

As of now, min samples = 0 means around 44 (the square root of 2000).
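The square-root rule mentioned above can be written out as a small sketch. The function name `effective_min_samples` is hypothetical, and rounding down is an assumption; the post only says "around 44".

```python
import math

def effective_min_samples(max_samples, min_samples=0):
    """Toy version of the 'min samples = 0 means automatic' rule.

    Assumption: automatic means the integer square root of the sample count.
    The exact rounding used by the renderer is not stated in the thread.
    """
    if min_samples > 0:
        return min_samples
    return math.isqrt(max_samples)

print(effective_min_samples(2000))       # automatic: 44
print(effective_min_samples(2000, 200))  # explicit value wins: 200
```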

Soo

2000 Samples

Have now threshold set to 0.001

And min Samples to 200

Takes 0.42 min

Then I bumped min samples up to 1000 as a test:

And it changes literally nothing other than maybe 3 pixels???

And it takes 20 seconds longer

Threshold to 0.0001

min samples 200

the rest of the settings are the same

Render time: 1.18 min. Without AS, at 2000 samples, it actually also takes 1.18 min, but it is noise-free.

It would be most useful to see a .blend file, difficult to guess what is going on here. We’re in the process of making some changes to the adaptive sampling pattern and threshold, so it would be useful to check why this scene seems to fail.

Note that the threshold should practically never be lower than 0.001, and even that is very low. For a render that is going to be denoised I’d expect values closer to 0.01.

I have found something interesting during tests…

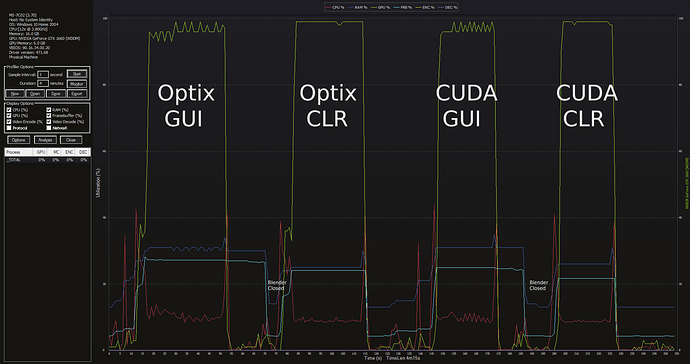

As you know, command line rendering can be substantially faster than the standard ‘in-Blender’ method (F12/Ctrl+F12). I decided to try different combinations and see if I could achieve the same result with Blender open and the scene fully loaded. As it turned out (at least in 2 different scenes in my case), an open copy of Blender with the scene loaded (viewport set to shading mode) had no performance impact on the command line render. In other words, I was able to work on my scene, save it, and then, instead of pressing F12 or Ctrl+F12 to render, I just fired up a console and used command line rendering instead. It was as fast as if I had used command line rendering only, and up to 10% faster than the standard method of pressing F12/Ctrl+F12.

Is it possible that the rendering window, the one that pops up after you press F12, eats up to 10% of your performance? And if so, is it possible to have a rendering mode that delays the output until after the render has finished?

I used GPU only (OptiX) mode. 3600X / GTX1660

Blender GUI: F12 (Standard)

Command Line: Command line (without opening Blender)

Blender + CMD: Blender + command line (Blender opened, scene loaded but I used command line for rendering instead of pressing F12/Ctrl+F12)

1920x1080, 100 samples, OIDN:

Time in seconds:

Scene 01

Single shots (BVH, OptiX structures, etc. for every shot)

Blender GUI: 38.22

Command Line: 36.1

Blender + CMD: 36.4

Animation (Persistent data)

Blender GUI: 34.03

Command Line: 31.25

Blender + CMD: 31.31

Scene 02:

Single shots (BVH, OptiX structures, etc. for every shot)

Blender GUI: 23.51

Command Line: 22.74

Blender + CMD: 22.47

Animation (Persistent data)

Blender GUI: 20.58

Command Line: 19.34

Blender + CMD: 19.39

PS. Just did one more test:

Classroom Scene (GPU OptiX):

Default settings but 100 samples.

Blender GUI: 42.34

Command Line: 35.98

Blender + CMD: 35.94

That’s ~15% difference.

Classroom Scene (GPU CUDA):

Default settings but 100 samples.

Blender GUI: 36.38

Command Line: 31.78

Blender + CMD: 31.81

That’s ~13% difference.

–

One more test with a twist:

Barbershop (GPU OptiX):

Default settings but 100 samples + OIDN

Blender GUI: 86

Command Line: 71

Blender + CMD: *

That’s ~17% difference.

Barbershop (GPU CUDA):

Default settings but 100 samples + OIDN

Blender GUI: 89

Command Line: 77

Blender + CMD: *

That’s ~13% difference.

\* I was unable to perform the ‘Blender + CMD’ test because of an ‘out of GPU and shared memory’ error.
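For reference, the percentages quoted above are consistent with measuring the saving relative to the GUI (F12) time. A small sketch of that arithmetic, using only the timings posted here (the helper name `saving_percent` is just for illustration):

```python
def saving_percent(gui_seconds, cli_seconds):
    """Time saved by the command line render, as a percentage of the GUI time."""
    return (gui_seconds - cli_seconds) / gui_seconds * 100.0

# (GUI seconds, command line seconds), copied from the results above
timings = {
    "Classroom (OptiX)": (42.34, 35.98),
    "Classroom (CUDA)": (36.38, 31.78),
    "Barbershop (OptiX)": (86, 71),
    "Barbershop (CUDA)": (89, 77),
}

for name, (gui, cli) in timings.items():
    print(f"{name}: {saving_percent(gui, cli):.1f}% less time than F12")
```

Measured the other way round (relative to the command line time), the same numbers would come out a little higher, so it is worth stating which baseline is used when comparing results.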

It would be cool if we got a visual cue showing where Cycles is still rendering and where adaptive sampling has stopped it. Like a switch in the render framebuffer that turns all still-active pixels bright green, so we can understand and tune adaptive sampling and sample count better.

Please think about animations: flicker-free denoising for animations needs more samples and a smaller threshold than stills. Sometimes a lot more.

The “Sample Count” render pass can be used to help with this. You won’t be able to view it during a render, but it’ll be available to view, and analyze, once the render is done. A pixel value of 1.0 corresponds to needing the full sample count, a value of 0.5 means the pixel only needed half of the sample count, and so on.
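As a rough sketch of how such a pass could be analyzed after the render, the snippet below turns pass values into per-pixel sample counts. It assumes the pass has already been read into a flat list of floats by some other means; `summarize_sample_count` and its inputs are hypothetical, not a Blender API.

```python
def summarize_sample_count(pass_values, max_samples):
    """Summarize a 'Sample Count' pass given as a flat list of 0.0-1.0 values.

    Returns (average samples per pixel, fraction of pixels that hit max samples).
    """
    counts = [round(v * max_samples) for v in pass_values]  # 1.0 -> full sample count
    average = sum(counts) / len(counts)
    at_max = sum(1 for v in pass_values if v >= 1.0) / len(pass_values)
    return average, at_max

# Four example pixels from a 2000-sample render (made-up values)
average, at_max = summarize_sample_count([1.0, 0.5, 0.25, 1.0], 2000)
print(average)  # 1375.0 samples per pixel on average
print(at_max)   # 0.5 -> half of the pixels never reached the threshold
```

If nearly every pixel sits at 1.0, adaptive sampling is not saving anything in that scene and the threshold or max samples is the setting to revisit.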

Yes I know but it’s so “non-interactive”. Got spoiled using Blender.

I don’t fully understand what Blender+CMD means.

Where do you send the bpy.ops.render.render command?

In the system terminal? (So that a second Blender instance is started?)

Or in the very same Blender instance > Python console? (As an alternative to GUI command or pressing F12)?

Thanks for sharing the scene. Testing with the changes we are working on, I think the adaptive threshold is going to behave more predictably and noise will be reduced a bit.

However, adaptive sampling can’t really help reduce render time in this scene compared to uniform sampling. The volume noise is distributed quite evenly across the image. The main benefit of adaptive sampling in such scenes is that (hopefully after the upcoming fixes), you can choose a predictable noise threshold rather than having to tweak sample count scene by scene.

Is there a way to do that volumetric effect without ray marching? Maybe with a volume displace modifier? Also, reducing noise at low resolutions is very difficult when there is an area that is hard to resolve. It is better to render at a high resolution with fewer samples and then scale the image down; this is a technique used at ILM, if I remember correctly.

This one.

As I understand it, one of the benefits of command line rendering is that we don’t have to use the GUI and can therefore save some valuable resources that then go towards rendering. But during my tests I saw a different picture. Having one instance of Blender open with the scene fully loaded had no performance impact on the other Blender instance that was rendering the same scene from the command line. The only test that failed was the Barbershop scene, but only because that scene is a memory hog and I ran out of memory.

So, as I understand it

Blender + CMD = Command Line

no surprise if times are the same.

What is the benefit you’re after?

The benefit of having the additional 5-15% performance boost that we get from command line rendering, but without using the command line.

I never tested that, but if it’s confirmed, it would be handy to have a batch render button! I know how to launch command line renders, but just having a UI button that launches a background render would be a timesaver in a lot of cases!

Maybe the Blender instance that launches the background render could keep the handle of the process, to stop the render with a “cancel batch render” button and automatically delete the placeholder frames on cancel. (If you just kill the process from the task manager, the empty placeholder files are not deleted.)
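A minimal sketch of the "keep the process handle" idea, outside of Blender. It only uses the standard `-b` (background), `-a` (render animation) and `-f` (render frame) command line options; the function names, the `blender` executable being on the PATH, and the file name are assumptions, and cleaning up placeholder frames is not shown.

```python
import subprocess

def background_render_command(blend_file, blender="blender", frame=None):
    """Build the argument list for a background render of blend_file."""
    cmd = [blender, "-b", blend_file]
    if frame is None:
        cmd.append("-a")             # render the whole animation
    else:
        cmd += ["-f", str(frame)]    # render a single frame
    return cmd

def start_background_render(blend_file, **kwargs):
    """Start the render and return the process handle so it can be cancelled."""
    return subprocess.Popen(background_render_command(blend_file, **kwargs))

print(background_render_command("scene.blend", frame=1))

# Usage sketch (not executed here):
#   proc = start_background_render("scene.blend")
#   ...
#   proc.terminate()  # what a "cancel batch render" button could call
```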

Just like that software that starts with M.

Weren’t you using the system terminal? I’m confused again.