I have a question: Particle Nodes is part of Everything Nodes, right?

Yep, let’s say that Function Nodes and Particle Nodes are the beginning, but everything is part of the same effort AFAIK.

@jacqueslucke in the function nodes branch it’s possible to have a custom modifier made by functions/nodes, right?

Would it be possible to have the same thing, but with a constraint as the output instead?

Would that be too complex?

Read more here: https://wiki.blender.org/wiki/Source/Nodes

There are a lot of documents there, can you point me in the right direction please?

Ok, I think I found it, in the InitialFunctionsSystem page, and it seems that yes, constraints are considered, so… awesome!

Is this already accessible in the current state of function nodes? I know modifiers can be used, but can constraints?

Thanks!

I would like to thank you @jacqueslucke for such an incredible system. I’m a motion designer and this new system has a bright future. That said, I would like to make an important suggestion regarding usability. I know that everything is still a work in progress, so no judgement should be made right now. However, I find it important to say how fast things can get complicated with BParticles. The basics are well rounded in my opinion. The influences and execution system are really good and simple to understand, but, as it is now, I think the end user will need more high-level nodes (or groups) to go even a little beyond the basics.

Most of the more complex stuff requires vectors, lists, values and math, and this can be really tricky: while everything makes sense in a logical way, most users won’t use BParticles beyond the basics. Not because they don’t want to, but because they can’t. In some way, the system can hold the user back. I would like to call your attention to X-Particles, a third-party particle system for Cinema 4D.

I find the influences system very similar to how X-Particles works, but there are a lot of “node groups” there, which makes the user’s life easier because they can create a very complex particle system/animation just by plugging in several smaller functions (or node groups) in an easy way. But this is only possible because there are a lot of functions (or node groups) available.

I really like how, with BParticles, you can create your own turbulence system (as far as I understood, judging by my own tests). We have a node group for this influence that can be tweaked on a low level, which is fine. Hopefully, one will be able to save this in the future asset manager (or something similar).

But, right now, almost everything “relevant” must be created on a low level, leaving the full potential of BParticles to only a small group of users. As an Animation Nodes user, I think that BParticles and AN are similar in this matter. Both are powerful, but both require many logical steps to go even a little beyond the basics. For instance, I still have difficulty using loops properly in AN.

The key here is: what should stand between me and the end result? My lack of knowledge of how the tool works (and/or its limitations), or my lack of knowledge of how to make the tool?

I hope I’m making myself clear here. I thank you again for your efforts and I would like to know what you all think.

The big advantage BParticles will have over XParticles is that it is node-based and setups can be grouped.

Try to get an overview of an XParticles system with more than 20 objects at a glance.

It gets confusing pretty fast, because the XParticles objects are linked together in a list (Outliner). Therefore you really have to know how your objects are connected in the Outliner.

BParticles will be much easier for beginners once high-level nodes are available.

Almost every setup can be put into a group, so that only the wanted parameters are promoted.

I think it can’t get any easier for beginners.

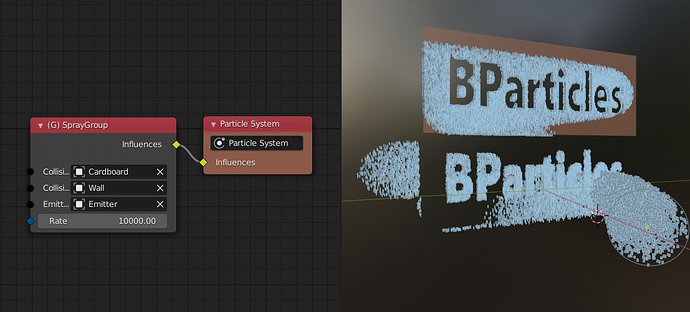

In this example, you only have to set the emitter geometry, the two collision objects, and the emitter rate. Done… Only two nodes are required. I think BParticles, and Everything Nodes in general, will get pretty powerful once the community starts to build custom node groups for everything.

You don’t have to see what’s inside the node tree.

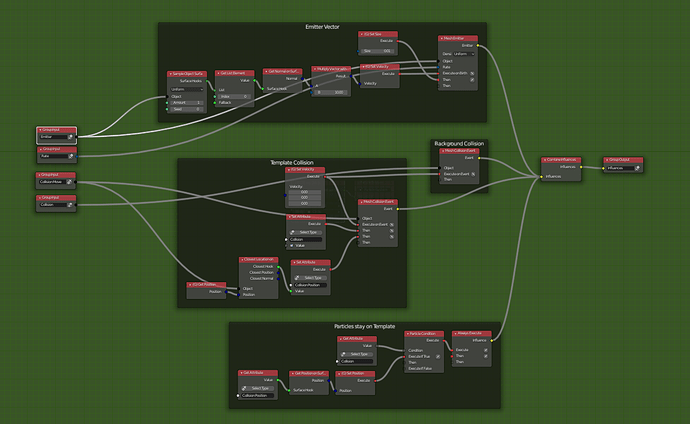

You’re absolutely right. For instance, I had a hard time trying to understand what’s happening in your node tree, but this is probably because I don’t know the nodes you’re using (even though there is some math involved, which is another problem). But expecting the average user to be able to do the same thing is unrealistic, so it seems that BParticles will need lots of high-level node groups, and the community will surely have to play a big role.

I think that’s more or less clear; @jacqueslucke has stated it since the beginning. That’s where the power of Particle Nodes, Function Nodes and Everything Nodes in general lies: it gives full power to very advanced users, within a framework that delivers proper performance, but it also delivers ease of use for more artist-oriented users, so it all fits in a pipeline.

The key here is the availability of node groups for the end user, whether shipped with the software or available for download, and used together with the asset manager of course.

Hi Jacques. How do you feel about this proposal to simplify using nodes generally? It’s sort of Houdini-esque, but without the properties window.

It’s worth reading the comments, particularly the Testure back and forth, where the concept is explained in more detail.

Hmmm… It is not obvious that the positive effects of the proposal would outweigh the disadvantages. And the amount of work required is very high…

But if the links were simply COLORIZED (matching the input/output socket colors), the effect could be 50% of your proposal. And the time cost is minimal. And there are no disadvantages.

Indeed. If links and re-route nodes could be colorized it would be very useful.

Also, if links could be labeled with text that the user inputs, similar to labeling reroute nodes, that would be great. The text would then be repeated along the link line at reasonable intervals.

Or perhaps text could be displayed when hovering over or clicking a link.

So I’m very impressed with everything developed so far. Quick side note, @jacqueslucke your contribution to the motion designer community is legendary.

Anyway, based on my understanding of the code in Animation Nodes, the “Script” node was probably one of the more complex ones to design, because you had to make sure that people couldn’t accidentally break Blender. But how far off are we from having a script node here? Even if it can’t handle complex data types like the events etc., it would be so nice to write my own expressions/formulas instead of having enormous groups for complex math/vector math. I can imagine that execution may not work the same because of the Uber Solver, but is it likely to be implemented earlier or later in this development process? (Also, if it already exists, please point me in the right direction.)

Or is the Python API able to interact with Particle Nodes in some other way?

Thank you for the examples. For some reason your file is not working with the current branch anymore (v2.83 from 2. Feb.). Did it change this much?

I will have a look tomorrow…

EDIT: All four files work fine with the build from 2. Feb

All necessary groups should be embedded in the files.

https://drive.google.com/drive/folders/1WD6WSeYaU6a9TU8qV8D9gfLZNv0C3iwU?usp=sharing

Is the reason maybe that I’m using the official branch from blender.org? The nodes you’re using don’t exist there. Are you using the branch from https://blender.community/c/graphicall/ ?

First off, I just wanted to say how amazed I am by all the development happening. I hadn’t had a chance to test this branch for a few months and I was impressed with all the changes. Awesome work @jacqueslucke!

Also, I’m totally on board with the idea of the nodelib. I think it opens up a lot of potential for community participation; obviously, having a better way to organize the different files containing the node groups, potentially via the upcoming asset library, would be a huge plus, but just having a path to a central repository of custom-made node groups is already incredibly useful.

(It reminds me a bit of how Houdini does it: on startup, or using a .env file, Houdini lets you define a few environment variables for paths to look for Houdini Digital Assets (HDAs), mainly $HOUDINI_OTL_PATH and $HOUDINI_OTLSCAN_PATH, but it also looks in $HOME. That way you can have both per-user and studio-wide asset libraries.)
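To make the search-path idea concrete, here is a minimal sketch of how a nodelib lookup in that style could work. Everything named here is an assumption for illustration only: the variable name `BLENDER_NODELIB_PATH`, the per-user `nodelib` folder and both functions are hypothetical and not part of Blender or the branch.

```python
import os
from pathlib import Path

# Hypothetical variable name, used only for this illustration.
ENV_VAR = "BLENDER_NODELIB_PATH"


def nodelib_search_dirs(environ, home):
    """Return directories to scan: env-var entries first, then a per-user folder."""
    raw = environ.get(ENV_VAR, "")
    # Entries are separated by the platform's path separator (':' or ';').
    dirs = [Path(entry) for entry in raw.split(os.pathsep) if entry]
    dirs.append(Path(home) / "nodelib")  # hypothetical per-user fallback
    return dirs


def find_nodelib_files(environ, home):
    """Collect .blend files from every existing search directory, in order."""
    found = []
    for directory in nodelib_search_dirs(environ, home):
        if directory.is_dir():
            found.extend(sorted(directory.glob("*.blend")))
    return found
```

Calling `find_nodelib_files(os.environ, Path.home())` would list studio-wide libraries first and the per-user ones last; directories that don’t exist are simply skipped.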

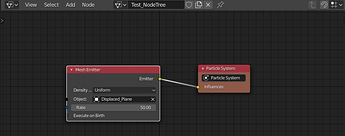

One thing that I think would be a super useful addition: for the BParticles nodes that take an object input, like Mesh Emitter or Mesh Collision Event, an option to “use modifiers”. In other words, the node would apply the object’s modifiers at the current frame before evaluating its input.

As a very simple example of why this would be useful:

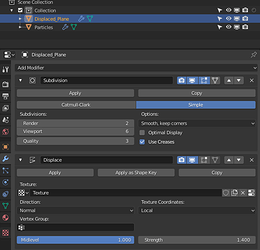

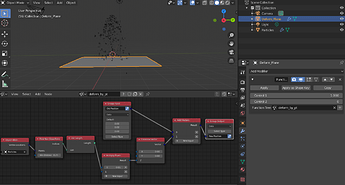

The image above contains a plane that has been subdivided and also has a displacement modifier applied. The idea is that this plane would later be used as an emitter for the particle system. However, since right now there’s no way to get the result of the modifier, the particle system simply scatters new particles at the position of the undisplaced plane.

The same would happen if you tried to use the Mesh Collision Event node: the collisions would be detected in the wrong places, since it’s not picking up the resulting mesh after applying all the modifiers.
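For comparison, this is roughly how the regular Python API (Blender 2.80+) exposes a mesh with its modifiers applied. It is only a sketch of what “use modifiers” would mean, not how the BParticles nodes work internally; the helper name `evaluated_vertex_positions` is made up for this post.

```python
def evaluated_vertex_positions(obj, depsgraph):
    """Return local-space vertex positions of `obj` with its modifiers applied.

    Inside Blender, `depsgraph` would typically come from
    bpy.context.evaluated_depsgraph_get().
    """
    obj_eval = obj.evaluated_get(depsgraph)  # object as seen after modifiers
    mesh = obj_eval.to_mesh()                # temporary mesh owned by obj_eval
    try:
        return [tuple(v.co) for v in mesh.vertices]
    finally:
        obj_eval.to_mesh_clear()             # free the temporary mesh again
```

For the displaced plane above, these positions would follow the displacement, whereas reading `obj.data.vertices` directly gives the flat, undisplaced plane.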

My original motivation for asking for this feature is a bit more complex, but I still think it would be incredibly useful:

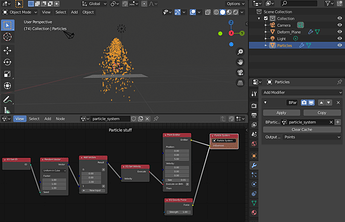

In the first image there’s just a simple particles system with some particles falling down, applied to the “Particles” object.

In the second image, however, we can see that the “Deform Plane” object has a Function Deform modifier applied to it, and the node network for that modifier is trying to use the result of the “Particles” object to deform the plane. In other words, it uses the output of the particle system as an input to the deform node network in order to drive the deformation based on the particles.

This might be a use case that we don’t intend to support, or it could be better achieved in some other way that aligns better with the design goals of the project. For example, everything could be kept in a single node network that runs both the particle sim and the deform operation and has multiple outputs (or conversely, the modifiers on different objects could “call” a different node from the network as their output).

(Also, linking modifiers in this way could introduce cycles into the graph, which I assume would be harder to detect than if everything were placed in a single network, where it would be easier to disallow that operation or to alert the user with an error or warning.)
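To illustrate the cycle concern, here is a small sketch of how cross-object dependencies could be checked with a depth-first search. The dictionary format and the function `find_cycle` are assumptions made for this example; Blender’s own dependency graph handles this differently and in C++.

```python
def find_cycle(dependencies):
    """Return one dependency cycle as a list of names, or None if there is none.

    `dependencies` maps each object name to the names it reads from.
    """
    WHITE, GREY, BLACK = 0, 1, 2  # unvisited, on the current path, finished
    state = {}
    path = []

    def visit(node):
        state[node] = GREY
        path.append(node)
        for dep in dependencies.get(node, ()):
            dep_state = state.get(dep, WHITE)
            if dep_state == GREY:
                # `dep` is already on the current path: we closed a loop.
                return path[path.index(dep):] + [dep]
            if dep_state == WHITE:
                cycle = visit(dep)
                if cycle:
                    return cycle
        path.pop()
        state[node] = BLACK
        return None

    for node in dependencies:
        if state.get(node, WHITE) == WHITE:
            cycle = visit(node)
            if cycle:
                return cycle
    return None
```

With `{"Deform Plane": ["Particles"], "Particles": []}` this returns `None`. If the particle system also read from the deformed plane, i.e. `{"Deform Plane": ["Particles"], "Particles": ["Deform Plane"]}`, it returns `["Deform Plane", "Particles", "Deform Plane"]`, which could be shown to the user as an error or warning.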

However, I still think at least for the simple case outlined earlier, having the functionality of bringing a mesh into the network with all the modifiers applied would be beneficial.

Hi, I posted a tiny rant about node-UI design considerations and how implicit layout constraints arise from node in/out placement here:

It’s not exclusive to the particle nodes UI, but especially since there has been some struggle adapting the existing node interface to meet the needs of the particle system here, it seems worthwhile to re-evaluate some fundamental design conceits (i.e. node graphs are always to be laid out left->right) and common causes of graph messiness (i.e. the switchback/shoelace effect).