I’m still trying to wrap my head around this one, and it’s been years now. The banding in dense volumes is caused by stepping, but what is the actual issue behind it? I’ve gone through Blender’s algorithm a few times, and I’m not enough of an expert to know exactly how it works, even after playing with it a bit and getting results. I do understand enough to see that the system was built around stepping from the ground up, and that changing it would require wide-reaching changes and be its own project.

Looking at it as I am, though, is it possible that one of these three things is causing the issue?

- At line 221 in kernel_volume.h, the variable “tp_eps” is noted as “likely not the right value”.

- At line 252, there’s a note “ToDo: Other interval?” that I don’t understand.

- At line 255, there’s a little note saying “stop if nearly all light is blocked”, which in a dense enough volume would happen within one step, and it also uses the tp_eps variable.

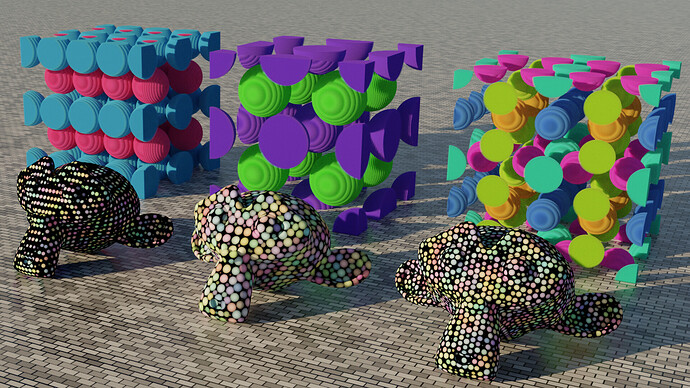
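To get a feel for how much a cutoff like the one in the third point could matter, here is a rough Python sketch. It is not the Cycles code: the function name, the `check_every` interval, and the `1e-6` threshold are placeholders I made up, and it assumes (going by the “nearly all light is blocked” note) that the check simply stops marching once the remaining transmittance falls below a small threshold.

```python
import math

def march_transmittance(sigma_t, distance, step, tp_eps=1e-6, check_every=8):
    """Fixed-step transmittance through a homogeneous medium.

    Hypothetical stand-in for a stepping loop with an early-out check.
    Returns (transmittance, steps_taken).
    """
    optical_depth = 0.0
    t = 0.0
    steps = 0
    max_steps = math.ceil(distance / step)
    for i in range(max_steps):
        # Advance to the end of this step, clamped to the ray length.
        new_t = min(distance, (i + 1) * step)
        optical_depth += sigma_t * (new_t - t)
        t = new_t
        steps = i + 1
        # Only test the cutoff every few steps (assumed interval).
        if i % check_every == 0 and math.exp(-optical_depth) < tp_eps:
            break  # nearly all light is blocked, stop marching
    return math.exp(-optical_depth), steps

# A thin and a very dense medium over the same ray.
for sigma in (1.0, 200.0):
    marched, steps = march_transmittance(sigma, distance=2.0, step=0.125)
    exact = math.exp(-sigma * 2.0)
    print(sigma, steps, marched, abs(marched - exact))
```

In this toy version the dense case does stop after a single step, but the marched and exact values are both below the threshold by then, so the early exit can only shift the result by at most `tp_eps`. If the real check works along these lines, the threshold would have to be fairly large before it showed up as visible bands.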

Is it possible this is causing issues with accurate extinction that only become more visible in dense volumes? That would mean an optimization is biasing the results too much and producing artifacts, but like I said, I barely have any idea what exactly these variables are. I’m kinda sure that eps stands for extinction per step, and if that value is wrong, it could result in the end of every step being counted as having 100% extinction, which I think is what we’re seeing in #56925 (Cycles produces banded render artifacts in dense or sharp volume renders). Or perhaps my first gut instinct was right, and it’s caused by uneven sampling distribution.
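For the sampling-distribution idea, a toy model helps me picture how stepping alone could produce bands. The sketch below is only an illustration under my own assumptions, not how Cycles is written: a ray crosses empty space and then a dense medium starting at `edge`, density is looked up once per step, and all names and numbers are hypothetical.

```python
import math

def marched_transmittance(edge, sigma_t=50.0, ray_length=1.0, step=0.125, offset=0.5):
    """March a ray through a medium that is empty before `edge` and has
    extinction `sigma_t` after it, sampling the density once per step."""
    n = int(ray_length / step)
    depth = 0.0
    for i in range(n):
        sample_pos = (i + offset) * step  # where this step looks up the density
        density = sigma_t if sample_pos >= edge else 0.0
        depth += density * step           # whole step treated as one density
    return math.exp(-depth)

def exact_transmittance(edge, sigma_t=50.0, ray_length=1.0):
    """Analytic result for the same setup."""
    return math.exp(-sigma_t * max(0.0, ray_length - edge))

# Slide the edge smoothly, as it would shift from pixel to pixel.
for edge in (0.82, 0.86, 0.90, 0.93, 0.95):
    print(edge, marched_transmittance(edge), exact_transmittance(edge))
```

The exact value changes smoothly as the edge moves, while the marched value stays flat until the edge crosses a sample position and then jumps by a whole step of extinction. The denser the medium, the bigger each jump. Randomizing `offset` per ray would trade the bands for noise in this toy model; whether that matches what Cycles actually does is something I haven’t checked.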

But I’m just grasping at straws. I don’t actually know what’s going on here, so I’m gonna play with the values (I do find it odd that they’re hard-coded) and see if I can get a change in my test renders. There are a number of renders I’m interested in, and now that geometry nodes, volume to mesh, and so on are a thing, I might soon finally be able to manage the renders of my dreams, or begin using volumes in more creative ways.