The most important part, which already seems to be taken into account, is that there should not be a separate texture editor. There should be just the Shader Editor, with the ability to output any RGB/Float node output as a texture datablock. I know this is already planned, but I will elaborate on why it is so important:

Here’s a typical example workflow, which is currently very difficult to set up and very limited:

One common modeling workflow is to displace a mesh with a displacement map, use the same UVs to map the textures onto the mesh, and then decimate it. This makes it easy to rapidly generate various assets, such as cliffs, in a semi-procedural way, and even make them game-engine ready.

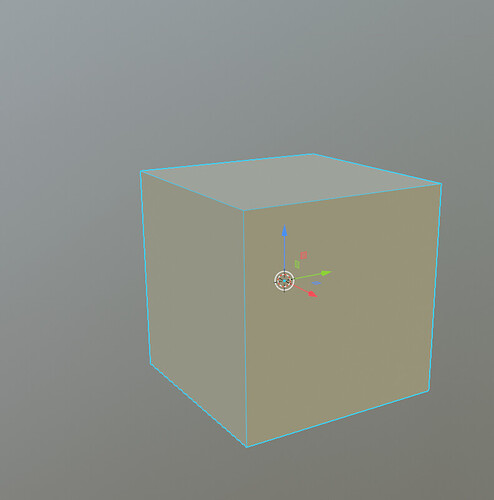

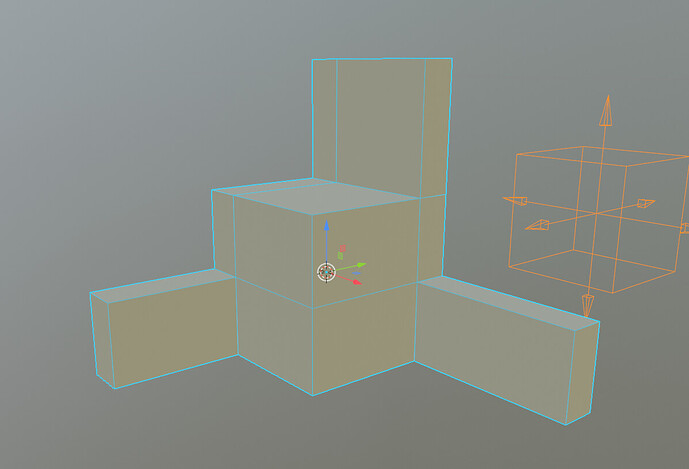

You start with a simple shape, such as a cube:

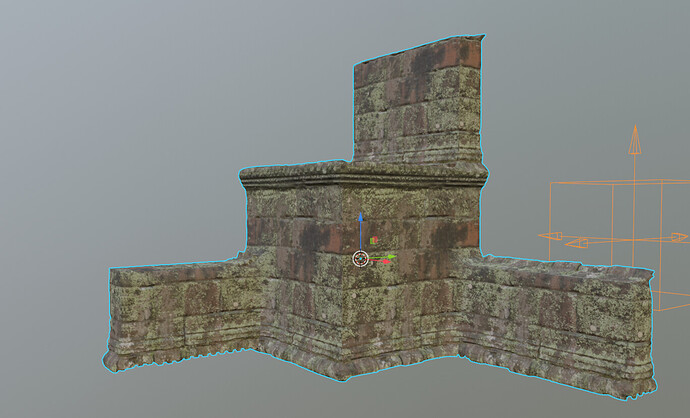

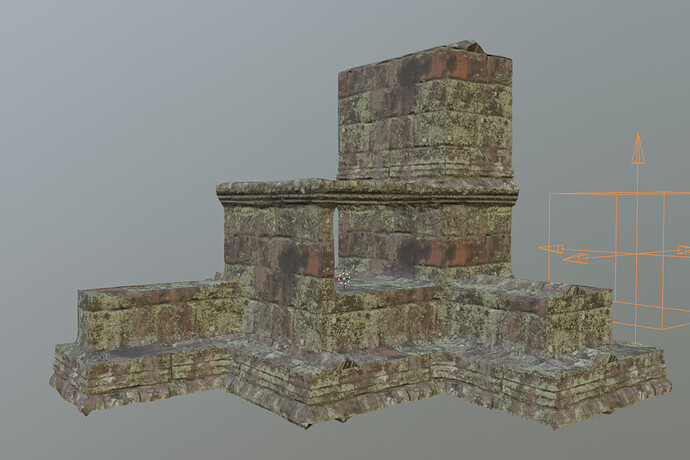

You can then very roughly edit it into the desired shape of the object, in this case a piece of stone wall architecture:

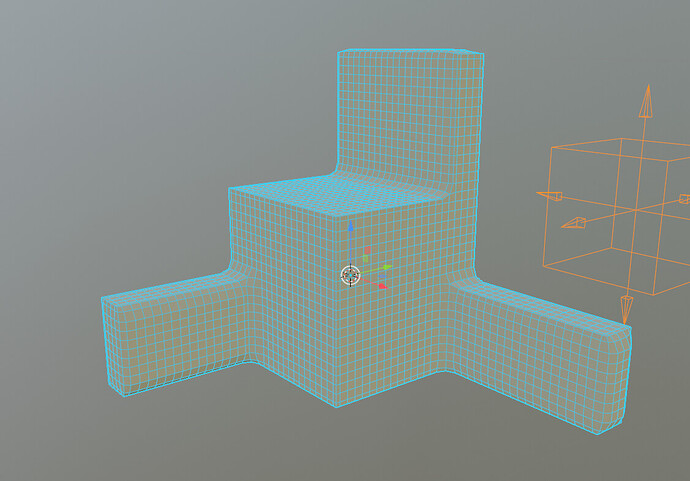

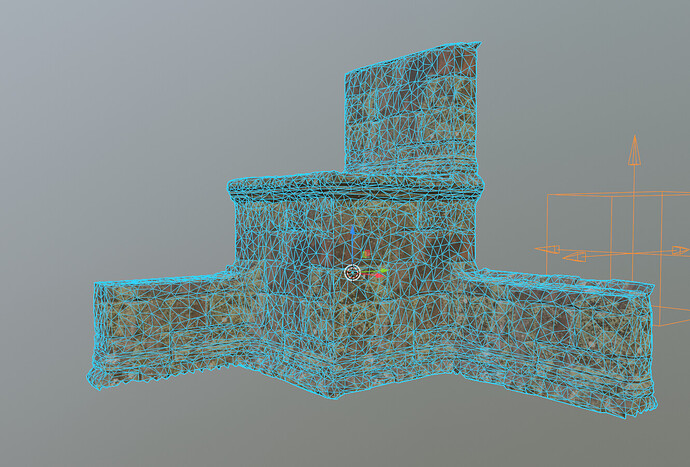

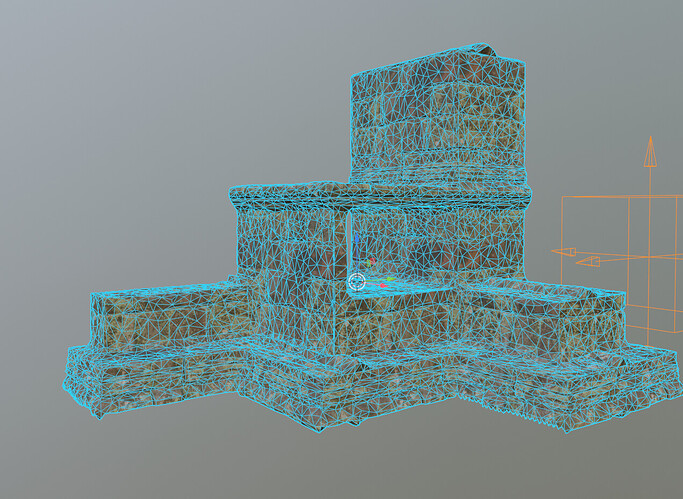

Then you remesh the object:

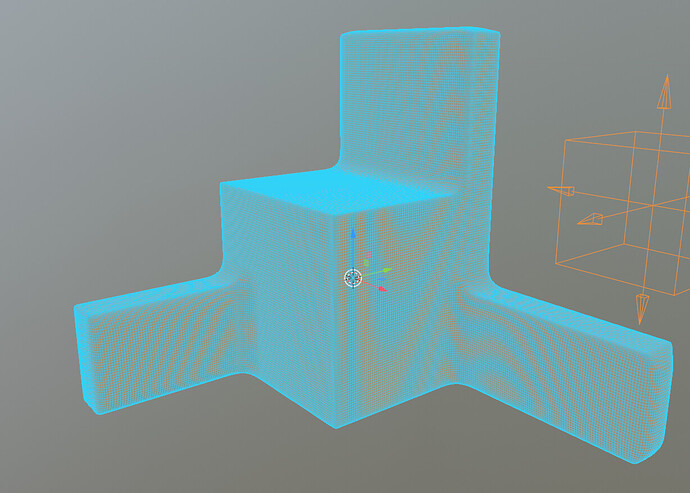

Add a bit of subdivision:

Until recently, this workflow was impossible; it only became possible thanks to this patch:

https://developer.blender.org/rB1668f883fbe555bebf992bc77fd1c78203b23b14

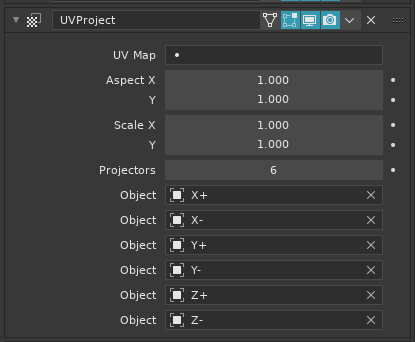

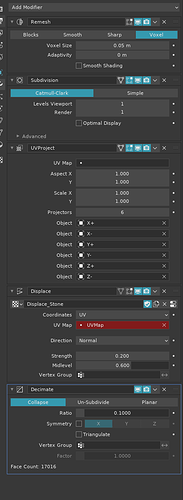

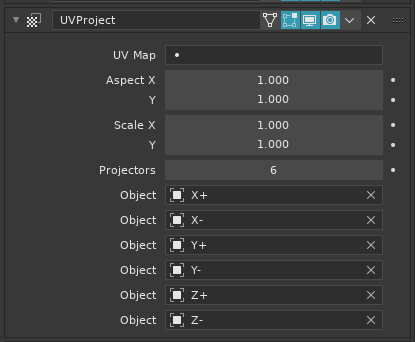

You can now use the UV Project modifier to generate box-like mapping:

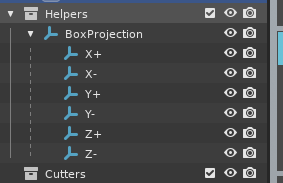

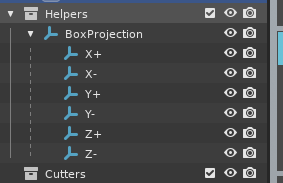
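For readers unfamiliar with the term, box-like (triplanar) mapping picks, for each face, the axis its normal points along most strongly and projects onto the plane perpendicular to that axis. The sketch below only illustrates that idea in plain Python; it is not how the UV Project modifier works internally (the modifier projects from helper objects), and the function name and `scale` parameter are hypothetical.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def box_project_uv(position: Vec3, normal: Vec3, scale: float = 1.0) -> Tuple[float, float]:
    """Hypothetical box-mapping helper: project a point onto the plane
    perpendicular to the dominant axis of its face normal."""
    x, y, z = position
    ax, ay, az = abs(normal[0]), abs(normal[1]), abs(normal[2])
    if ax >= ay and ax >= az:
        # Normal points mostly along X: project onto the YZ plane.
        u, v = y, z
    elif ay >= az:
        # Normal points mostly along Y: project onto the XZ plane.
        u, v = x, z
    else:
        # Normal points mostly along Z: project onto the XY plane.
        u, v = x, y
    return (u * scale, v * scale)
```

For example, `box_project_uv((0.5, 0.2, 0.3), (0.0, 0.0, 1.0))` returns `(0.5, 0.2)`, because an upward-facing face is projected straight down onto the XY plane.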

Unfortunately, the workflow is still very painful, as one has to build a rather ugly contraption in the viewport to hold the individual projection helpers:

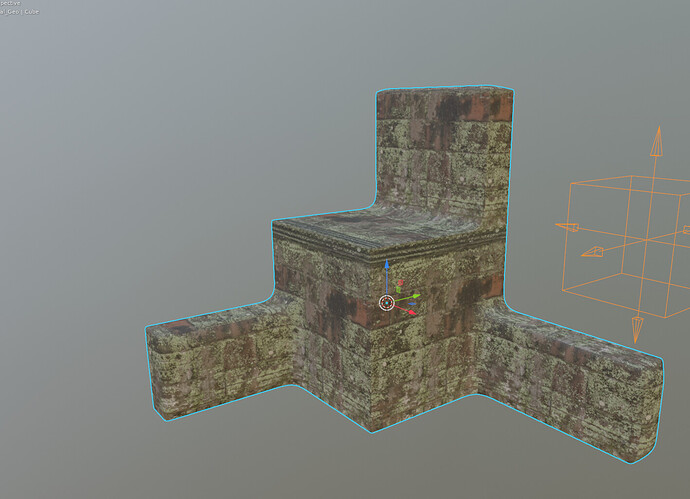

Then you can use the Displace modifier to displace the mesh using the generated UV coordinates:
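With its Normal direction, the Displace modifier moves each vertex along its normal by the texture value minus the midlevel, scaled by the strength (midlevel defaults to 0.5). A minimal sketch of that per-vertex step, assuming the texture lookup is a plain Python callable `height(u, v)`. The callable and the function name are hypothetical stand-ins for the texture sampled at the vertex UV.

```python
import math
from typing import Callable, Tuple

Vec3 = Tuple[float, float, float]


def displace_along_normal(
    position: Vec3,
    normal: Vec3,
    uv: Tuple[float, float],
    height: Callable[[float, float], float],
    strength: float = 1.0,
    midlevel: float = 0.5,
) -> Vec3:
    """Offset a vertex along its normalized normal by (height(uv) - midlevel) * strength."""
    length = math.sqrt(normal[0] ** 2 + normal[1] ** 2 + normal[2] ** 2)
    nx, ny, nz = normal[0] / length, normal[1] / length, normal[2] / length
    # Values above midlevel push outward, values below pull inward.
    offset = (height(uv[0], uv[1]) - midlevel) * strength
    px, py, pz = position
    return (px + offset * nx, py + offset * ny, pz + offset * nz)
```

With a simple gradient `height = lambda u, v: u`, a vertex at the origin with normal `(0, 0, 2)` and UV `(1.0, 0.0)` moves to `(0.0, 0.0, 0.5)`. The point of the workflow is that the same `height` source could also feed the material.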

Finally, you can add a Decimate modifier to turn it into a game-ready asset:

At any time, you can then go back to Edit Mode, edit the architecture, and get a procedurally generated low-poly asset at the end:

The problem is the ugly modifier stack, which is clumsy to set up and has very limited UV mapping capabilities:

Now, here is how all this relates to Texture Nodes:

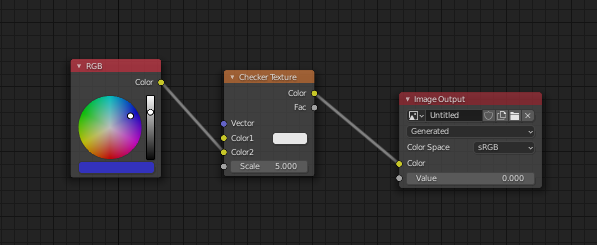

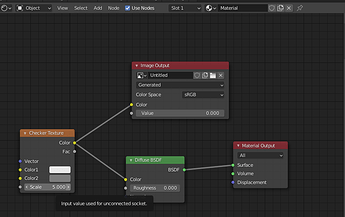

We should be able to simply create a material with all the UV mapping, color processing, and other intricacies the Shader Editor allows, then branch it off at any point in the Shader Editor into an image texture and use that in, for example, the Displace modifier.

That exact same Shader Editor UV mapping tree would then drive both the material shading and the displacement mapping. This would open up a huge world of procedural modeling possibilities that other 3D software packages have had for quite a while, but Blender has not.

Even something as seemingly simple as being able to use any RGB/Float/Vector node output anywhere in the Shader Editor as the input of the Displace modifier would be a game changer.

In fact, the Displace modifier offers “RGB to XYZ” as one of its direction modes, which would become even more powerful when combined with the Shader Editor.
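In that mode, each color channel becomes an offset along one axis (R to X, G to Y, B to Z), re-centered on the midlevel and scaled by the strength. A minimal sketch, assuming local space and a color already sampled at the vertex; the function name is hypothetical. If that color came from a Shader Editor output, one node tree could compute both this `color` and the surface color of the material.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]
RGB = Tuple[float, float, float]


def displace_rgb_to_xyz(
    position: Vec3, color: RGB, strength: float = 1.0, midlevel: float = 0.5
) -> Vec3:
    """Treat R, G, B as X, Y, Z offsets, each re-centered on midlevel and scaled by strength."""
    px, py, pz = position
    r, g, b = color
    return (
        px + (r - midlevel) * strength,
        py + (g - midlevel) * strength,
        pz + (b - midlevel) * strength,
    )
```

For example, a vertex at the origin with color `(1.0, 0.5, 0.0)` moves to `(0.5, 0.0, -0.5)`: full red pushes along +X, mid-gray green leaves Y unchanged, and zero blue pulls along -Z.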

I really hope we won’t go the overcomplicated, disjointed way of having a separate texture node editor, detached from the Shader Editor, which would actually push shading and procedural modeling further apart instead of bringing them together into one unified system.