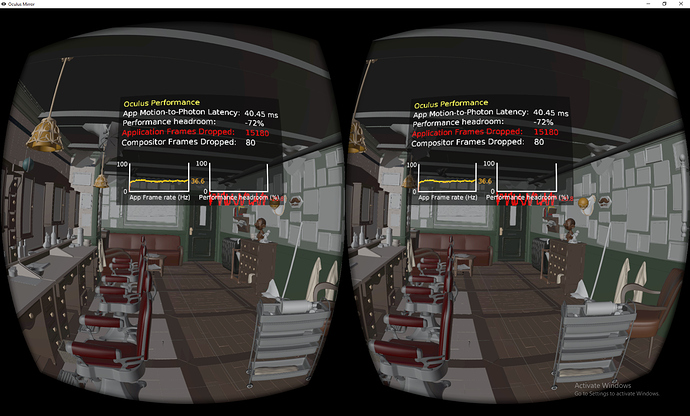

The barber shop never looked better!

Sadly, Oculus software update 1.39 broke the unofficial OpenXR support. It seems to report OpenXR 1.0, and our 0.90 loader (the latest publicly known version) is not super thrilled about that.

So OpenXR won’t be supported anymore? I hate Facebook!

It will be supported; the support is not even officially released yet. It was just changed to use the also unreleased OpenXR 1.0, which is not supported by the OpenXR functionality available to the public (and us). That is another hint that OpenXR 1.0 will be released soon, likely during SIGGRAPH.

So we just temporarily can’t use the Oculus runtime.

I’ll clarify what happened.

- We found some DLLs in the Oculus software folder that implemented OpenXR support and worked with the OpenXR SDK 0.90.

- Oculus never said anything about OpenXR support; we were just lucky to stumble upon those DLLs and were testing Blender with them.

- The latest Oculus software update bumped the OpenXR version used in those DLLs to 1.0, which no longer works with our 0.90 SDK.

So now we have to wait for Khronos to officially announce 1.0. Once that is done, we update our OpenXR SDK and all will (should) be good again.
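To make the mismatch a bit more concrete, here is a rough Python sketch. It assumes the 64-bit packing that `XR_MAKE_VERSION` uses in OpenXR 1.0 (16 bits major, 16 bits minor, 32 bits patch). The `is_compatible` rule (major versions must match) is a hypothetical simplification; the real loader's version negotiation may work differently.

```python
def make_version(major: int, minor: int, patch: int) -> int:
    """Pack a version the way XR_MAKE_VERSION does in OpenXR 1.0 (64-bit)."""
    return ((major & 0xFFFF) << 48) | ((minor & 0xFFFF) << 32) | (patch & 0xFFFFFFFF)


def version_major(version: int) -> int:
    """Extract the major component from a packed version."""
    return (version >> 48) & 0xFFFF


def version_minor(version: int) -> int:
    """Extract the minor component from a packed version."""
    return (version >> 32) & 0xFFFF


def is_compatible(loader_version: int, runtime_version: int) -> bool:
    # Hypothetical rule for illustration only: require identical major versions.
    return version_major(loader_version) == version_major(runtime_version)


LOADER_VERSION = make_version(0, 90, 0)   # the public 0.90 SDK loader
RUNTIME_VERSION = make_version(1, 0, 0)   # what the updated runtime reports

if __name__ == "__main__":
    print("compatible:", is_compatible(LOADER_VERSION, RUNTIME_VERSION))
```

Under that assumed rule the 0.90 loader rejects the 1.0 runtime, which matches what we are seeing.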

We were lucky we got early access. Too bad it broke, but no need to be upset with Oculus here.

Well that was fast. Oculus implementation (and a few others) apparently coming this week.

Well yeah, you could kinda see that one coming a mile away, happy to see it’s out though

The Last Third

We’re approaching the last third of the coding period. At this point I’d like to digress from my schedule and work on stuff that I find more important. Namely performance improvements and polish to get the branch into a mergeable state.

In the end we should have stable and well performing VR viewport rendering support. This would be the base from which we can work on a more rich VR experience. Possibly with the help from @makx and his MARUI-Plugin team.

Note that I’ve already done some work on performance. Last week we went from ~20 FPS to ~43 FPS in my benchmarks with the Classroom scene. Others have reported even bigger speedups.

The following explains a number of tasks I’d like to work on.

Perform VR Rendering on a Separate Thread

I see four reasons for this:

- OpenXR blocks execution to synchronize rendering with the HMD refresh rate. This would conflict with the rest of Blender, potentially causing lags.

- VR viewports should redraw continuously with updated view position and rotation, unlike the rest of Blender, where we try to redraw only when needed. The usual Blender main loop with all of its overhead can be avoided by giving the VR session its own, parallel draw loop.

- On a dedicated thread, we can keep a single OpenGL context alive, avoiding expensive context switches which we could not avoid on a single thread.

- With a bit more work (see below), viewports can draw entirely in parallel (at least the CPU side of it), pushing performance even further.

I already started work on this (b961b3f0c9) and am confident it can be finished soon.
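As a rough illustration of the idea (not the code in the branch), here is a minimal Python sketch of a draw loop on its own thread. `VRDrawLoop`, `_wait_frame` and `run_demo` are hypothetical names; the `time.sleep` call only stands in for a blocking runtime call such as `xrWaitFrame`, and the frame counter stands in for the actual drawing.

```python
import threading
import time


class VRDrawLoop:
    """Hypothetical VR session loop that blocks on its own thread only."""

    def __init__(self, refresh_hz: float = 90.0):
        self.frame_interval = 1.0 / refresh_hz
        self.frames_drawn = 0
        self._stop = threading.Event()
        self._thread = threading.Thread(target=self._run, daemon=True)

    def _wait_frame(self) -> None:
        # Stand-in for the blocking synchronization with the HMD refresh rate.
        time.sleep(self.frame_interval)

    def _run(self) -> None:
        while not self._stop.is_set():
            self._wait_frame()
            self.frames_drawn += 1  # placeholder for drawing both eyes

    def start(self) -> None:
        self._thread.start()

    def stop(self) -> None:
        self._stop.set()
        self._thread.join()


def run_demo(duration: float = 0.2):
    """Run the VR loop while the main thread keeps handling its own work."""
    loop = VRDrawLoop(refresh_hz=200.0)
    loop.start()
    main_ticks = 0
    deadline = time.monotonic() + duration
    while time.monotonic() < deadline:
        main_ticks += 1  # placeholder for regular event handling
        time.sleep(0.001)
    loop.stop()
    return loop.frames_drawn, main_ticks


if __name__ == "__main__":
    frames, ticks = run_demo()
    print(f"VR frames: {frames}, main loop ticks: {ticks}")
```

The point is only that the blocking wait happens on the VR thread, so the main loop keeps ticking at its own pace.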

Draw-Manager Concurrency

Get the CPU side of the viewport rendering ready for parallel execution.

From what I can tell, all that’s missing is making the global DST and batches per-thread data.

In general, this should improve viewport performance in cases where offscreen rendering already takes place on separate threads (e.g. Eevee light baking). Most importantly for us, it should minimize wait times when regular 3D views and VR sessions both want to draw.
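To sketch what "per-thread data" means here, a small Python analogy using `threading.local`. The names (`get_draw_state`, `draw_viewport`, the `"batches"` list) are made up for illustration and have nothing to do with the actual draw-manager structures; the assumption is simply that each drawing thread gets its own copy of what is currently one global state.

```python
import threading

# Each thread that touches this object sees its own independent attributes.
_thread_state = threading.local()


def get_draw_state() -> dict:
    """Return the calling thread's private draw state, creating it on first use."""
    state = getattr(_thread_state, "draw", None)
    if state is None:
        state = {"batches": []}
        _thread_state.draw = state
    return state


def draw_viewport(name: str, n_objects: int, results: dict) -> None:
    state = get_draw_state()
    for i in range(n_objects):
        # With one shared global list, two threads would interleave entries here.
        state["batches"].append(f"{name}:{i}")
    results[name] = list(state["batches"])


def draw_in_parallel() -> dict:
    results = {}
    threads = [
        threading.Thread(target=draw_viewport, args=("3d_view", 3, results)),
        threading.Thread(target=draw_viewport, args=("vr_session", 2, results)),
    ]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return results


if __name__ == "__main__":
    print(draw_in_parallel())
```

Each viewport ends up with only its own batches, without any locking around the state.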

Single Pass Drawing

We currently call viewport rendering for each eye separately, i.e. we do two pass drawing. The ideal thing to do would be drawing in a single pass, where each OpenGL draw call would use shaders to push pixels to both eyes. This would be quite some work though, and is not in scope of this project. We can however take a significant step towards it by letting every OpenGL call execute twice (once for each eye) with adjusted view matrices and render targets. This would only require one pass over all geometry to be drawn.

The 2.8 draw-manager already contains an abstraction, DRWView, which according to @fclem is perfectly suited for this.

So I could work on single pass (but multiple draw calls) drawing by using DRWView.
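A toy Python comparison of the two approaches, just to show the loop structure. It does not use DRWView or any Blender API; `Eye`, `draw_two_pass` and `draw_single_traversal` are hypothetical names, and a "draw call" is simply recorded as an (object, eye) pair.

```python
from dataclasses import dataclass


@dataclass
class Eye:
    name: str
    view_offset: float  # hypothetical horizontal offset standing in for a view matrix


def draw_two_pass(objects, eyes):
    """Current approach: walk all geometry once per eye."""
    traversals = 0
    calls = []
    for eye in eyes:
        traversals += 1
        for obj in objects:
            calls.append((obj, eye.name))
    return traversals, calls


def draw_single_traversal(objects, eyes):
    """Proposed step: walk geometry once, issue one draw call per eye for each object."""
    traversals = 1
    calls = []
    for obj in objects:
        for eye in eyes:
            calls.append((obj, eye.name))
    return traversals, calls


if __name__ == "__main__":
    objects = ["desk", "chair", "blackboard"]
    eyes = [Eye("left", -0.03), Eye("right", 0.03)]
    print(draw_two_pass(objects, eyes)[0], "traversals vs.",
          draw_single_traversal(objects, eyes)[0])
```

The number of draw calls stays the same; what is saved is the second walk over the scene and whatever per-pass setup comes with it.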

Address Remaining TODOs

T67083 lists a number of remaining TODOs for the project. They should probably all be tackled during GSoC.

I could use some feedback from other devs on the points made above:

- @fclem, is what I wrote above on draw-manager concurrency correct, or is there more work needed that I didn’t notice? Is this doable in a few days of work?

- @sergey it seems that for drawing on a separate thread I need to give it its own depsgraph. Only to ensure valid batches for the separate GPU context I think. Is that correct? Would that mean duplicated updates if so, or would the depsgraph only update batches and share the rest with other depsgraphs? I guess thanks to CoW, we just need to do correct tagging to avoid unnecessary workloads?

Also, I didn’t pay much attention to the increased memory load this all would bring, so if you see a serious issue, please let me know.

Monado OpenXR runtime crashes at swapchain creation (VK_ERROR_DEVICE_OUT_OF_MEMORY)

Mentioned it to the developers in the Monado discord and they had this to say:

Interesting crash, I have seen it with the WinMR headset when OpenHMD failed to parse the configuration.

It sounds like the driver is failing to read the config and reporting zero for screen size.

It’s an OpenHMD bug

It seems to be random so sometimes it just works for me

I have added some better error messages to catch that error condition again.

Upon testing Blender with Monado all I really get is this in the terminal, it doesn’t present anything on the HMD:

Connected to OpenXR runtime: Monado(XRT) by Collabora et al (Version 0.1.42)

Thanks for the hint, I didn’t know about this. I won’t work on such device specific optimizations during GSoC, but it’s interesting to look at this once the foundation is there and stable.

I checked but unfortunately this wasn’t the issue. Joined the discord channel too to check with Monado devs. There’s no clear outcome but suspicion is that it’s a limitation of Optimus support on Linux.

Regarding your issue - It’s weird that it simply does nothing, Blender should catch and report any errors (at least if it can - e.g. it can’t handle a driver crash). Try launching Blender with --debug-xr, you may get more info then.

https://developer.nvidia.com/vrworks

I figured the information above might provide some ideas and/or solutions to some of the issues listed in the link below.

What I’ve described is stuff we can do on the Blender side to get a great baseline performance for all supported GPUs. With VRWorks, we can push things even further for GPUs supporting it. It seems to be especially tailored for high-end devices.

I’d be amazed if the VRWorks license is compatible with ours (but I admit I didn’t bother to actually go check it).

Sorry for the late response. That’s great news!

I think I can find some time next week to make a plan to move the BlenderXR user interface elements over to your new OpenXR branch. I think you also already took a look at BlenderXR, so please share your impression.

I’d like to think about some bigger picture questions sooner than later:

- How will tools/operators be used in VR? Will we extend operators for VR execution? Or is it better to have own operators for VR, which execute general operators?

- How will VR UIs be defined? I would still prefer not to set one VR UI in stone, but to allow users to enable workflow specific UIs. E.g. a simplified UI for VR sculpting in a 101 template. This brings me back to my idea of Python/Add-on defined VR UIs.

- How will users set up VR settings? E.g. shading mode, base position, floor vs. eye level tracking origin, visible scene/view-layer, etc.

- …

In my opinion we should have a good idea on how to address these points before moving on much further.

These obviously have heavy influence on what you plan to do. Maybe a VR meeting would be a nice idea, you’d of course be very welcome to join. I’ll get in touch with you once I know more.

Using latest git versions of openhmd, monado, openxr. Clean recursive clone and build of your branch.

The only interesting thing is that upon quitting blender I get:

Error [SPEC | xrDestroySwapchain | VUID-xrDestroySwapchain-swapchain-parameter] : swapchain is not a valid XrSwapchain

Object[0] = 0x00007fde8f34c400

Error [SPEC | xrDestroySwapchain | VUID-xrDestroySwapchain-swapchain-parameter] : swapchain is not a valid XrSwapchain

Object[0] = 0x00007fde8f34ce00

Error [SPEC | xrDestroySpace | VUID-xrDestroySpace-space-parameter] : space is not a valid XrSpace

Object[0] = 0x00007fde8f34d800

Error [SPEC | xrDestroySession | VUID-xrDestroySession-session-parameter] : session is not a valid XrSession

Object[0] = 0x00007fdeba1fa400

Complete log: https://pastebin.com/raw/TwVTbQ9R

CMake output just to be careful: https://pastebin.com/raw/Fb4jAWyM

The dependency graph is designed to be fully decoupled from the main database and from other dependency graphs. Evaluation results cannot be shared across dependency graphs, so you will effectively double the amount of time needed to update the scene for a new frame.

For the best performance and memory usage (on both host and device sides) you want to share as much as possible. This includes both dependency graph evaluation and draw batches: you don’t want, as an example, to evaluate subsurf twice, and you do not want to push a high-poly mesh to the GPU twice.
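A toy Python model of that cost, under the assumption that each graph keeps its own private evaluation results. `Graph`, `EvalCounter` and `count_evaluations` are hypothetical and are not Blender's depsgraph API.

```python
class EvalCounter:
    """Counts how often the (pretend) expensive subsurf evaluation runs."""

    def __init__(self):
        self.subsurf_evaluations = 0

    def evaluate_subsurf(self, mesh: str) -> str:
        self.subsurf_evaluations += 1
        return f"{mesh}_subdivided"


class Graph:
    """Hypothetical stand-in for a dependency graph with private result storage."""

    def __init__(self, counter: EvalCounter):
        self.counter = counter
        self._results = {}

    def evaluated(self, mesh: str) -> str:
        # Results are cached per graph, never across graphs.
        if mesh not in self._results:
            self._results[mesh] = self.counter.evaluate_subsurf(mesh)
        return self._results[mesh]


def count_evaluations(shared: bool) -> int:
    counter = EvalCounter()
    main_graph = Graph(counter)
    vr_graph = main_graph if shared else Graph(counter)
    main_graph.evaluated("suzanne")  # regular 3D view
    vr_graph.evaluated("suzanne")    # VR session
    return counter.subsurf_evaluations


if __name__ == "__main__":
    print("separate graphs:", count_evaluations(shared=False))
    print("shared graph:", count_evaluations(shared=True))
```

With separate graphs the same mesh is evaluated once per graph; sharing one graph evaluates it once in total.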

P.S. To me it almost sounds like there are deeper unanswered design questions.