I’m just the messenger. If you want more information, talk to @HooglyBoogly.

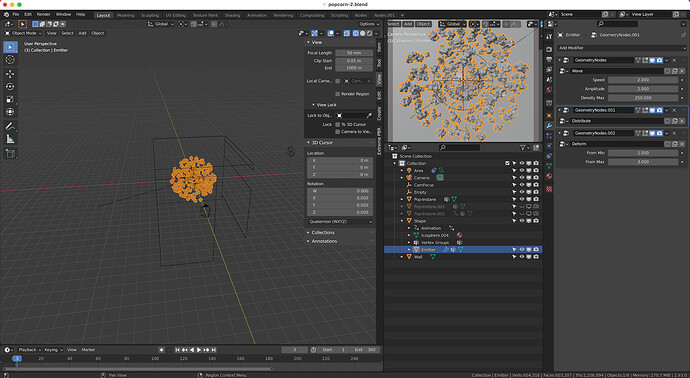

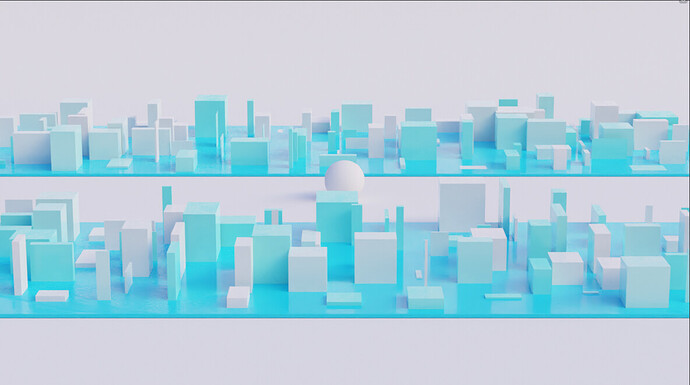

Hi all. First, I want to thank the team for the great work and progress on Geometry Nodes. I mostly use them for motion design, doing things like:

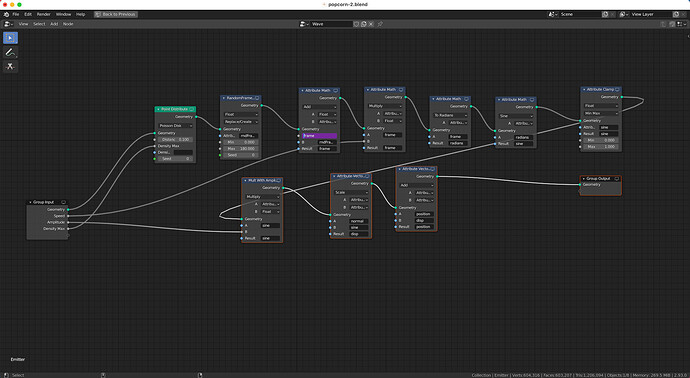

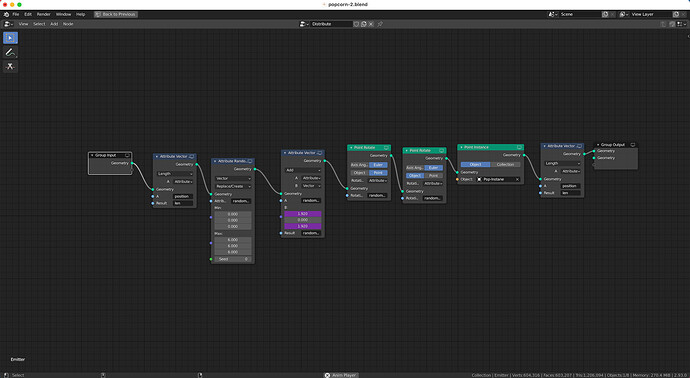

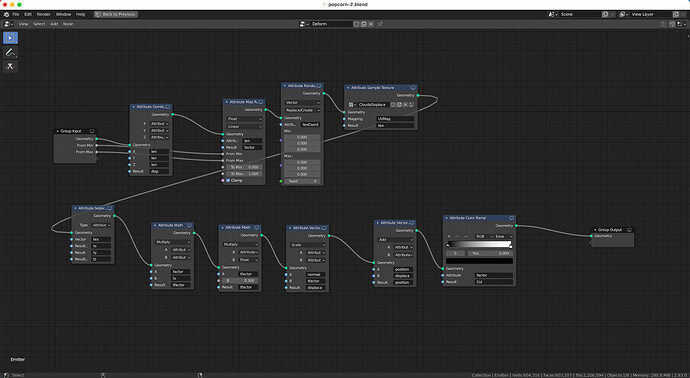

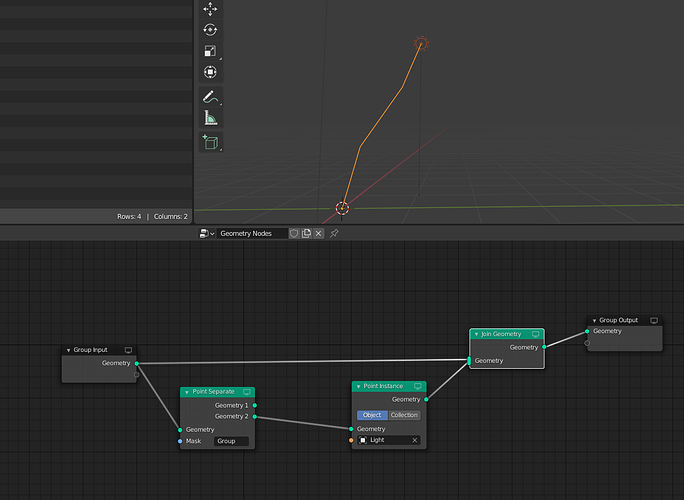

The setup is three Geometry Nodes modifiers, like this:

Now I have a question, because for most of my ideas I keep running into the same roadblock: the inability to transfer attributes from a point cloud to instances. For example, instances created by Point Instance can’t access any attributes of the point that was used to create them.

When the Attribute Transfer node appeared in 3.0 alpha, I thought it would perform that function, but it doesn’t. How difficult would it be to create a node similar to Attribute Transfer that takes the point cloud as a second input? Is that kind of functionality on the roadmap, and is it even feasible?

Thanks again, looking forward to seeing the next iterations of GN!

Medieval wall constructor. Work in progress.

Curve to Mesh and Mesh to Curve help a lot!

Still can’t figure out how to make the cutters more precise while using as few extra curves/meshes as possible…

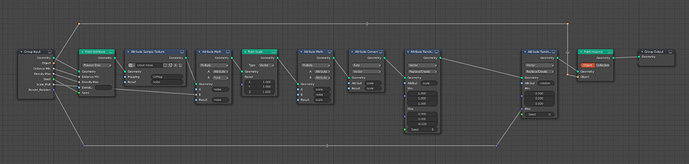

Work-in-progress raycast node:

Some shower thoughts on barycentric coordinates and general vertex mapping:

I can imagine future scenarios where outputting the barycentric coordinates from a mapping node such as Raycast or Attribute Transfer might be useful. They allow you to keep a mapping between two different geometries, which is often expensive to compute, and then re-evaluate it cheaply when the interpolated attribute data changes. If caching or conditional execution ever comes to Geometry Nodes, it might be worth exposing such data instead of computing it only internally.
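To make the caching idea concrete, here is a minimal Python sketch (plain Python, not Blender code; `barycentric` and `interpolate` are hypothetical names) that splits the work into an expensive, geometry-dependent step computed once and a cheap step that re-blends attribute values with the cached weights. It assumes the query point already lies in the triangle's plane.

```python
def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def barycentric(p, a, b, c):
    """Barycentric weights (wa, wb, wc) of point p in triangle (a, b, c).

    Assumes p lies in the triangle's plane and the triangle is not degenerate.
    This is the geometry-dependent part that is worth caching.
    """
    v0, v1, v2 = sub(b, a), sub(c, a), sub(p, a)
    d00, d01, d11 = dot(v0, v0), dot(v0, v1), dot(v1, v1)
    d20, d21 = dot(v2, v0), dot(v2, v1)
    denom = d00 * d11 - d01 * d01
    wb = (d11 * d20 - d01 * d21) / denom
    wc = (d00 * d21 - d01 * d20) / denom
    return (1.0 - wb - wc, wb, wc)


def interpolate(weights, corner_values):
    """Cheap re-evaluation: blend new attribute values with cached weights."""
    return sum(w * v for w, v in zip(weights, corner_values))


# Compute the mapping once...
weights = barycentric((0.25, 0.25, 0.0), (0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0))
# ...then reuse it whenever the attribute data changes.
print(interpolate(weights, (10.0, 20.0, 30.0)))  # 17.5
print(interpolate(weights, (0.0, 4.0, 8.0)))     # 3.0
```

Only `interpolate` has to run again when the attribute data changes; `barycentric` reruns only if the geometry itself moves.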

A further complication would be the data format: for a triangle mesh, the barycentric coordinates are only 3 values per vertex, which can be encoded either as floats on the loop domain or as a float3 on the point (vertex) domain. For general n-gons they would have to be floats on loops (in case the triangulation changes). And for point clouds there isn’t even a convenient matching domain. In general, the mapping is a sparse N x M (vertex-to-vertex) matrix, which is not easily encoded as a simple attribute layer without extra buffer support.
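A sketch of that general case, assuming a compressed-row (CSR) layout, which is one common way to store sparse matrices and not necessarily anything Blender does internally; `SparseMapping` is a hypothetical name. Each target element gets a variable-length row of (source index, weight) pairs, so a triangle hit, an n-gon hit and a nearest-point match can all live in the same mapping:

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass
class SparseMapping:
    """Sparse N x M weight matrix in compressed-row (CSR) form.

    Row r lists the source vertices (and weights) that target element r
    blends from. Rows may have any length, which is why a fixed-width
    per-vertex attribute layer cannot hold this mapping in general.
    """
    n_sources: int
    row_offsets: List[int]   # row r spans [row_offsets[r], row_offsets[r + 1])
    col_indices: List[int]   # source index of each stored weight
    weights: List[float]

    @classmethod
    def from_rows(cls, n_sources: int,
                  rows: Sequence[Sequence[Tuple[int, float]]]) -> "SparseMapping":
        offsets, cols, vals = [0], [], []
        for row in rows:
            for j, w in row:
                if not 0 <= j < n_sources:
                    raise IndexError(f"source index {j} out of range")
                cols.append(j)
                vals.append(w)
            offsets.append(len(cols))
        return cls(n_sources, offsets, cols, vals)

    def apply(self, source_values: Sequence[float]) -> List[float]:
        """Map a per-source attribute to the targets (matrix-vector product)."""
        if len(source_values) != self.n_sources:
            raise ValueError("attribute length does not match the source count")
        out = []
        for r in range(len(self.row_offsets) - 1):
            start, end = self.row_offsets[r], self.row_offsets[r + 1]
            out.append(sum(self.weights[k] * source_values[self.col_indices[k]]
                           for k in range(start, end)))
        return out


mapping = SparseMapping.from_rows(4, [
    [(0, 0.5), (1, 0.25), (2, 0.25)],              # hit inside a triangle
    [(0, 0.25), (1, 0.25), (2, 0.25), (3, 0.25)],  # hit at the centre of a quad (n-gon)
    [(3, 1.0)],                                     # nearest-point match
])
print(mapping.apply([10.0, 20.0, 30.0, 40.0]))  # [17.5, 25.0, 40.0]
```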

I’ve been playing with Crane et al.'s geometry processing methods as an add-on recently (github link), and someone asked whether these could work as geometry nodes. The answer is yes, but you would want to be smart about caching the expensive parts (computing sparse matrix operators like the gradient, Laplacian, etc.) and then reusing them.
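A minimal sketch of that caching pattern in plain Python. For brevity it builds the uniform (graph) Laplacian rather than the cotangent Laplacian that Crane et al.'s methods typically use, and it assumes the mesh topology can serve as a cache key via `functools.lru_cache`; `uniform_laplacian` and `apply_operator` are hypothetical names.

```python
from functools import lru_cache


@lru_cache(maxsize=8)
def uniform_laplacian(n_verts, edges):
    """Build the uniform graph Laplacian L = D - A for a mesh topology.

    `edges` must be a hashable tuple of (i, j) pairs, each undirected edge
    listed once, so the result can be cached per topology. Returned as one
    tuple of (column, value) pairs per row.
    """
    rows = [dict() for _ in range(n_verts)]
    for i, j in edges:
        for a, b in ((i, j), (j, i)):
            rows[a][b] = rows[a].get(b, 0.0) - 1.0  # adjacency entry
            rows[a][a] = rows[a].get(a, 0.0) + 1.0  # degree on the diagonal
    return tuple(tuple(sorted(row.items())) for row in rows)


def apply_operator(op, values):
    """Sparse matrix-vector product: the cheap step repeated per attribute."""
    return [sum(v * values[c] for c, v in row) for row in op]


edges = ((0, 1), (1, 2))           # a 3-vertex polyline
lap = uniform_laplacian(3, edges)  # assembled once per topology
print(apply_operator(lap, [0.0, 1.0, 4.0]))  # [-1.0, -2.0, 3.0]
print(apply_operator(lap, [5.0, 5.0, 5.0]))  # [0.0, 0.0, 0.0]
```

The operator is assembled once per topology (a second call with the same arguments returns the cached object), and every later evaluation is just a sparse matrix-vector product.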

Hello, I’m trying to add a Bevel modifier after the Geometry Nodes modifier, but it won’t convert the instances to real objects, and I couldn’t find a way to convert them inside the node tree either. Is there a way?

Shouldn’t there be a button on the Point Instance node to convert instances to a real mesh? What if I want to run a cloth or particle simulation on the output of the node tree?

Instances should be converted to real geometry automatically when you add any node after the Point Instance node, or add a modifier afterwards. The bevel might be clamped because of the smaller objects; try disabling the Clamp Overlap option.
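Conceptually, turning instances into real geometry means copying the instanced mesh once per instance, transforming its vertices, and offsetting face indices into one merged mesh. The sketch below illustrates that idea under simplifying assumptions (rotation about Z only, no attributes carried over; `realize_instances` is a hypothetical name) and is not Blender's actual implementation:

```python
import math


def transform_point(p, location, rotation_z, scale):
    """Scale, then rotate about Z, then translate (simplified instance transform)."""
    x, y, z = p[0] * scale[0], p[1] * scale[1], p[2] * scale[2]
    c, s = math.cos(rotation_z), math.sin(rotation_z)
    return (c * x - s * y + location[0], s * x + c * y + location[1], z + location[2])


def realize_instances(verts, faces, instances):
    """Copy the instanced mesh once per instance and merge into one mesh.

    `instances` is a list of (location, rotation_z, scale) tuples.
    Returns (vertices, faces) of a single, real mesh.
    """
    out_verts, out_faces = [], []
    for location, rotation_z, scale in instances:
        offset = len(out_verts)  # shift indices into the merged vertex list
        out_verts.extend(transform_point(v, location, rotation_z, scale) for v in verts)
        out_faces.extend(tuple(i + offset for i in face) for face in faces)
    return out_verts, out_faces


tri_verts = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
tri_faces = [(0, 1, 2)]
verts, faces = realize_instances(tri_verts, tri_faces, [
    ((0.0, 0.0, 0.0), 0.0, (1.0, 1.0, 1.0)),
    ((5.0, 0.0, 0.0), math.pi / 2, (2.0, 2.0, 2.0)),
])
print(len(verts), faces)  # 6 [(0, 1, 2), (3, 4, 5)]
```

It also shows why realized geometry is heavy: the vertex count grows with the number of instances multiplied by the source vertex count, whereas the instances themselves store only one transform each.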

Oh, my bad! I had some thin walls… Clamp Overlap was the problem.

When a Collection Info node feeds the first geometry input of a Boolean node in Difference mode, with an Object Info node as the “cutter”, do the collection objects lose their UVs?

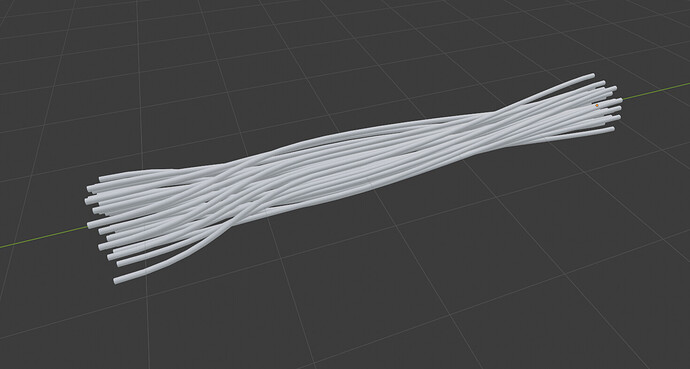

One of the first things I imagined using Geometry Nodes for was making a node network that generates large bundles of dense wires/cables from a single Bezier curve. Does anyone have any tips on how I might go about this? Is this kind of thing currently possible with Geometry Nodes? I tried a few different things, but I’m afraid I’m hopelessly lost.

Here’s a quick mockup that looks a bit like what I’m talking about:

Ouch, that user interface though. It looks like a DMV form to be filled out. I really hope it can be handled better; otherwise, using it will be a real pain.


Yeah, it’s a bit unwieldy. We’ve removed one input already (the “hit index”, which is just the triangle index on the mesh). There are some ideas for input lists, so you only add the attributes you actually need. Here’s the documentation revision, which should give you a better idea of the current state: D11620 Documentation for Raycast geometry node

Ultimately, I hope we can implement something like the socket proposal I made a while ago (shameless plug): Proposal for attribute socket types

Pretty cool. I’m already loving this raycast node! Is it possible to retrieve other (user-made) attributes from the target geometry as well, or is that a job for the Attribute Transfer node? By the way, aren’t those two (Attribute Transfer and Raycast) a bit overlapping? Attribute Transfer works only by proximity, AFAIK (D11037 Geometry Nodes: Initial Attribute Transfer node), but adding a raycasting method would basically make it a clone of your raycast node.

Yes, user attributes can be interpolated as well. “Target Attribute” is the name of the attribute you want to read from the mesh, “Hit Attribute” is the name of the output attribute. Eventually it should be possible to add more than one attribute to interpolate. The code supports that already, but we don’t have a solid UI mechanism for it yet.
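For illustration, here is a brute-force Python sketch of what a raycast with attribute interpolation does. It swaps in the standard Möller-Trumbore ray/triangle test and is not the node's actual code; `raycast_attribute` and its parameters are hypothetical names loosely mirroring the “Target Attribute” / “Hit Attribute” idea. The barycentric weights of the closest hit blend the target attribute's per-vertex values:

```python
def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])


def ray_triangle(origin, direction, a, b, c, eps=1e-12):
    """Moller-Trumbore test. Returns (t, u, v) on a hit, else None.

    u and v are the barycentric weights of b and c; a gets 1 - u - v.
    """
    e1, e2 = sub(b, a), sub(c, a)
    pvec = cross(direction, e2)
    det = dot(e1, pvec)
    if abs(det) < eps:
        return None  # ray is parallel to the triangle plane
    inv_det = 1.0 / det
    tvec = sub(origin, a)
    u = dot(tvec, pvec) * inv_det
    if u < 0.0 or u > 1.0:
        return None
    qvec = cross(tvec, e1)
    v = dot(direction, qvec) * inv_det
    if v < 0.0 or u + v > 1.0:
        return None
    t = dot(e2, qvec) * inv_det
    return (t, u, v) if t > eps else None  # ignore hits behind the origin


def raycast_attribute(origin, direction, verts, tris, target_attribute):
    """Closest hit over all triangles, plus the interpolated 'hit attribute'.

    Returns (is_hit, triangle_index, hit_value); brute force over triangles.
    """
    best = None
    for index, (i, j, k) in enumerate(tris):
        hit = ray_triangle(origin, direction, verts[i], verts[j], verts[k])
        if hit is not None and (best is None or hit[0] < best[0]):
            best = (hit[0], index, hit[1], hit[2])
    if best is None:
        return False, -1, None
    _, index, u, v = best
    i, j, k = tris[index]
    value = ((1.0 - u - v) * target_attribute[i]
             + u * target_attribute[j] + v * target_attribute[k])
    return True, index, value


verts = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
tris = [(0, 1, 2)]
weight = [10.0, 20.0, 30.0]  # a user attribute on the target mesh
print(raycast_attribute((0.25, 0.25, 1.0), (0.0, 0.0, -1.0), verts, tris, weight))
# (True, 0, 17.5)
print(raycast_attribute((2.0, 2.0, 1.0), (0.0, 0.0, -1.0), verts, tris, weight))
# (False, -1, None)
```

Interpolating several attributes at once would only mean repeating the last blend for each attribute, reusing the same hit and weights.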

You are correct that there is some overlap between the Attribute Transfer and Raycast nodes, in that they both interpolate attributes. But raycasting requires a mesh target (a surface to intersect with), while attribute transfer works with just points (anything where “proximity” can be defined). Personally, I prefer separate nodes over large behemoths that do everything and have a dozen mode switches.
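To illustrate the difference, proximity transfer needs nothing but point positions. A minimal nearest-point sketch (brute force; `nearest_transfer` is a hypothetical name, and a real implementation would likely use a spatial index such as a KD-tree or BVH):

```python
import math


def nearest_transfer(source_positions, source_values, target_positions):
    """Proximity transfer: each target takes the value of its nearest source point.

    Works on bare points (no faces needed). Brute force O(N * M);
    ties go to the lower source index.
    """
    if not source_positions:
        raise ValueError("need at least one source point")
    result = []
    for p in target_positions:
        _, nearest = min((math.dist(p, q), i) for i, q in enumerate(source_positions))
        result.append(source_values[nearest])
    return result


points = [(0.0, 0.0, 0.0), (10.0, 0.0, 0.0)]
colors = ["red", "blue"]
instances = [(1.0, 0.0, 0.0), (9.0, 1.0, 0.0), (4.9, 0.0, 0.0)]
print(nearest_transfer(points, colors, instances))  # ['red', 'blue', 'red']
```

A lookup like this could also cover the earlier request of reading point-cloud attributes on instances, as long as each instance sits on (or closest to) its source point.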

Indeed, raycasting needs a proper surface, I hadn’t thought of that. Yes, fair enough. I’m all for atomic nodes, I was just thinking out loud.

It doesn’t seem possible to use Point Distribute on a mesh that has only vertices and no faces. I can’t use the Point Instance node since it doesn’t have a density attribute… Does anyone have a solution for this?

You could generate a mesh from your point cloud with the Convex Hull node, for instance, or use the existing points for instancing?

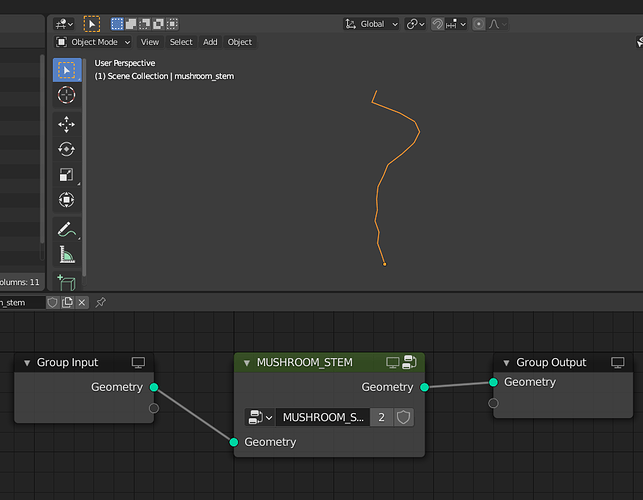

That will not work. I am basically trying to place a single object at the end of a line (initially made of 3 vertices, then subdivided) whose last vertex is in a vertex group named “tip”, and align it with that vertex’s normal.

I can place the object at the tip by duplicating the “stem” and removing all the vertices except the last one, but then I lose the proper normal direction and it just makes the whole idea more complicated than it needs to be.
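One way to sidestep the normal entirely: on a wire-only line there is no face to define a normal, so the direction of the last segment can serve instead. This sketch (a swapped-in technique, not necessarily what solved it here; all names are hypothetical) places an object at the last vertex and builds a rotation matrix that aims its local +Z axis along that segment, using Rodrigues' rotation formula:

```python
import math


def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    if length == 0.0:
        raise ValueError("zero-length vector")
    return tuple(c / length for c in v)


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])


def rotation_between(a, b):
    """Row-major 3x3 rotation matrix that turns direction a onto direction b.

    Uses Rodrigues' formula R = I + K + K^2 / (1 + cos), where K is the
    cross-product matrix of a x b; the antiparallel case is handled separately.
    """
    a, b = normalize(a), normalize(b)
    c = sum(x * y for x, y in zip(a, b))
    if c < -1.0 + 1e-9:
        # Opposite directions: rotate 180 degrees about an axis perpendicular to a.
        helper = (1.0, 0.0, 0.0) if abs(a[0]) < 0.9 else (0.0, 1.0, 0.0)
        n = normalize(cross(a, helper))
        return tuple(tuple(2.0 * n[i] * n[j] - (1.0 if i == j else 0.0) for j in range(3))
                     for i in range(3))
    v = cross(a, b)
    k = ((0.0, -v[2], v[1]), (v[2], 0.0, -v[0]), (-v[1], v[0], 0.0))
    k2 = tuple(tuple(sum(k[i][m] * k[m][j] for m in range(3)) for j in range(3))
               for i in range(3))
    f = 1.0 / (1.0 + c)
    return tuple(tuple((1.0 if i == j else 0.0) + k[i][j] + k2[i][j] * f for j in range(3))
                 for i in range(3))


def tip_transform(line_verts, up_axis=(0.0, 0.0, 1.0)):
    """Location and rotation for an object at the last vertex, with its local
    up axis aimed along the final segment of the line."""
    tip, before = line_verts[-1], line_verts[-2]
    direction = tuple(t - b for t, b in zip(tip, before))
    return tip, rotation_between(up_axis, direction)


def mat_vec(m, v):
    return tuple(sum(m[i][j] * v[j] for j in range(3)) for i in range(3))


location, rot = tip_transform([(0.0, 0.0, 0.0), (0.0, 0.0, 1.0), (1.0, 0.0, 1.0)])
print(location, mat_vec(rot, (0.0, 0.0, 1.0)))
```

Because it only needs the last two vertices, this keeps working after the line is subdivided, without duplicating the stem.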

That works, thank you!!

Hello

There’s a huge wall/limitation in Geometry Nodes: the inability to communicate between multiple Geometry Nodes modifiers.

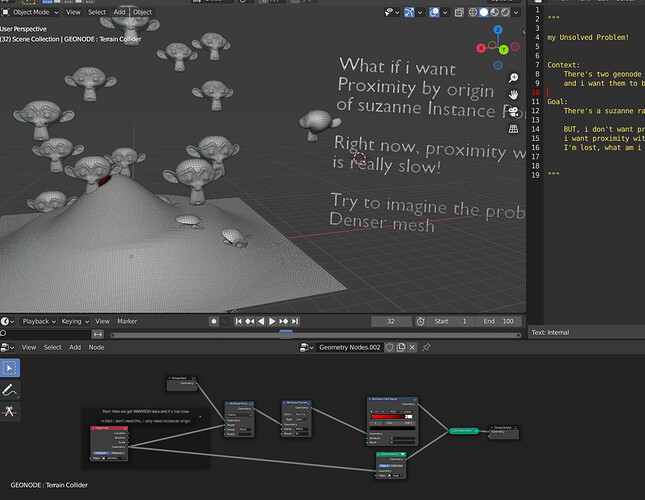

Here’s an example:

pasteall.org/blend/2a77308506674517ab7d57bb1ac76f11

In this .blend I have two objects with two distinct Geometry Nodes modifiers: one creates falling Suzannes, the other computes proximity to the Suzanne instances.

The problem is that it seems completely impossible to read the instances’ location/rotation/scale values when working from an external object.

All the Object Info node gives us is the applied mesh of all instances, which is extremely slow to use!

Note that this issue has already been brought up in the past.

It seems that the answer to this problem is “just put everything in one node tree”, which is a bad solution.

Notes about bad assumptions in Geometry Nodes (IMO):

In general, most of the limitations of Geometry Nodes appear because of the many assumptions about how users should use the nodes, which results in a lack of flexibility.

In the limitation above, the design assumes that users will always create one big node tree containing everything. That is far from ideal when working with multiple assets that interact with each other, or in a team. Ideally, you want to separate things into multiple objects where possible (see the workflow .blend above).

There are more bad assumptions. Another one I keep hitting: the design always assumes that users will scatter with the Point Distribute node! This is also a false assumption; emitting points from mesh vertices is a very common use case. These assumptions create limitations and problems down the line, such as attributes not being created (the id attribute is only generated by the Point Distribute node), attributes not being transferred correctly (after the Point Separate node, for example), or simply the fact that vertices cannot be converted to the point cloud domain.

(Note that this last example is far less impactful than the first one above.)