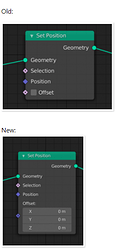

No, they get their own positions added to them, which makes sense if you consider that the Set Position node does not collapse all vertices to the mesh center. It means the default value of that position vector is not the zero vector but the original positions of the vertices.

Not sure what you mean.

If you add the zero vector to the position, you do not get zero (i.e. a collapse to the world origin).

It seems that you add the zero vector if unchecked, and vec(1,1,1) if checked.

Maybe there is a type mismatch for offset? (Bool instead of Vector)

– Edit –

I have now tested it with Suzanne.

It looks more like Suzanne got scaled. Displacing along the normal direction would look very different… strange.

If offset mode is enabled, the position input vector acts like an offset vector. I guess you were right, and I misunderstood the idea of the boolean offset.

It seems the ‘Position’ input defaults to the position attribute. It works if offset mode is disabled. If offset mode is enabled, the position attribute (the default value) is added to the position attribute itself, which scales the model by 2 about the object origin.
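A minimal Python sketch of the behaviour observed here, written as a plain model rather than Blender's API: `Vec3`, `set_position` and the "fall back to the current position" rule are hypothetical names describing what was seen in this thread, not Blender's actual implementation (which may have changed since).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Vec3:
    x: float
    y: float
    z: float

    def __add__(self, other: "Vec3") -> "Vec3":
        return Vec3(self.x + other.x, self.y + other.y, self.z + other.z)


def set_position(positions, position_input=None, offset_mode=False):
    """Hypothetical model of Set Position as observed in this thread.

    If nothing is connected to the position input, it falls back to the
    current position attribute (assumption based on the observed behaviour).
    """
    if position_input is None:
        position_input = positions  # default value = existing positions
    if offset_mode:
        # Offset mode: the input is added to the current position.
        return [p + v for p, v in zip(positions, position_input)]
    # Normal mode: the input replaces the position.
    return list(position_input)


verts = [Vec3(1.0, 0.0, 0.0), Vec3(0.0, 2.0, -1.0)]
print(set_position(verts))                    # unchanged
print(set_position(verts, offset_mode=True))  # every coordinate doubled
print(set_position(verts, [Vec3(0.0, 0.0, 0.0)] * 2, offset_mode=True))  # zero offset: unchanged
```

This is why adding the zero vector leaves the mesh in place, while relying on the default input in offset mode doubles every coordinate.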

@LudvikKoutny

Maybe something like this?

Well, it’s changed to a vector now.

I don’t have access to the PC where I made that test right now, but it’s just a combination of animated Voronoi and Noise texture displacement along the z axis, nothing really fancy.

This was by design, but it was changed today:

https://developer.blender.org/rBb3ca926aa8e1a2ea26dc5145cd361c211d786c63

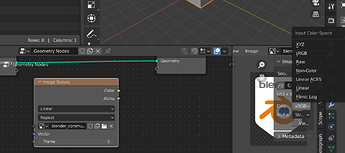

I find it a bit annoying that the image texture node does not have the color space options. The color space option is needed very often (setting it to Non-Color, for example, is a frequent task), so it feels strange to have to go to the Image Editor just to do that.
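Why Non-Color matters so often: for an image tagged as sRGB, pixel values are decoded through the sRGB transfer curve before use, which distorts data textures such as height maps. A small sketch, assuming the standard IEC 61966-2-1 sRGB curve (Blender actually goes through OpenColorIO, so treat this as an approximation of what happens):

```python
def srgb_to_linear(c: float) -> float:
    """Standard sRGB decoding of one channel value in [0, 1]."""
    if c <= 0.04045:
        return c / 12.92
    return ((c + 0.055) / 1.055) ** 2.4


# A height map stores 0.5 meaning "half the displacement distance".
stored = 0.5
print(srgb_to_linear(stored))  # about 0.214 if the image is treated as sRGB
print(stored)                  # stays 0.5 if the image is set to Non-Color
```

A mid-grey height value losing more than half its magnitude is exactly the kind of error that makes the color space setting something you reach for constantly.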

I may have discovered a solution to the big controversy…

can you do a mockup?

The image texture node proved to be more complicated than expected because it needed to expose the socket. Without that ability it would have behaved like the shader texture node. I expect that image handling will be improved in the future. As a workaround, you can set the color space option in the Image Editor.

I don’t quite get it. I don’t understand the logic of “no socket, so let’s not have it at all”. From a socket perspective, having or not having the color space options makes no difference right now; in both cases we just don’t have the socket. In terms of exposing the option to the modifier, including or not including it changes nothing at all. Leaving it out does not make it possible to expose it to the modifier; it just does not solve the problem. I don’t understand why this would be a reason for not having it. Having it right now does not prevent adding a socket for it in the future, does it?

Or maybe I am understanding the problem wrong?

Ah, anyway, it’s already in beta now, so it’s probably too late to argue about this.

One question

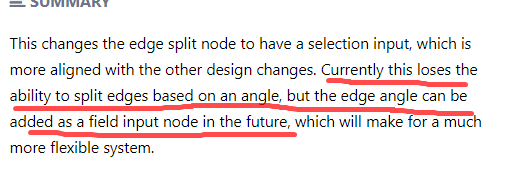

This is the commit message of the split edge node. Now that 3.0 is in beta and the select by edge angle node still isn’t there, does that mean the split edge node has a regression in 3.0?

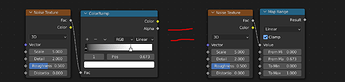

Currently there is no way to promote the color ramp widget and curve widget onto a node group or modifier public interface, which is an important missing feature for crafting reusable assets. I hope there will be a solution in the future.


It has been a problem for years. I asked Pablo about it once on Blender Today; if I remember correctly he said “This might be a good first task for new developers” or something like that. I recently started using the map range node in place of the color ramp node for this reason, though it only covers the use case of a black-and-white color ramp with just two handles. It seems they are planning a vector map range node for 3.1, which would make it possible to replace a colored color ramp, but the two-handle limit is still a problem.

I use map range a lot actually, but this node only does simple linear mapping, so no matter how they plan to improve it, it won’t be an equal replacement for the ramp or curve widget; the purposes of these nodes are different. Besides the fact that both widgets can perform much more complex mappings, they are also very important artistic tools that let users of a node group tweak the result without knowing any details of the implementation.
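To make the difference concrete, here is a minimal greyscale sketch, assuming linear interpolation only (it ignores the other interpolation modes both nodes offer, and RGBA colors). `map_range` and `ramp` are illustrative helpers, not Blender API. A clamped linear map range reproduces a two-handle black-to-white ramp, but a three-handle ramp has no single map range equivalent:

```python
from bisect import bisect_right


def map_range(value, from_min, from_max, to_min=0.0, to_max=1.0, clamp=True):
    """Linear remap of value from [from_min, from_max] to [to_min, to_max]."""
    t = (value - from_min) / (from_max - from_min)
    if clamp:
        t = min(max(t, 0.0), 1.0)
    return to_min + t * (to_max - to_min)


def ramp(value, stops):
    """Greyscale ramp with linear interpolation.

    stops: list of (position, value) pairs sorted by position.
    Inputs outside the first/last stop are held at that stop's value.
    """
    positions = [p for p, _ in stops]
    if value <= positions[0]:
        return stops[0][1]
    if value >= positions[-1]:
        return stops[-1][1]
    i = bisect_right(positions, value)  # index of the first stop to the right
    (p0, v0), (p1, v1) = stops[i - 1], stops[i]
    return v0 + (value - p0) / (p1 - p0) * (v1 - v0)


# Two handles, black at 0.3 and white at 0.7: one clamped map range is enough.
two_stops = [(0.3, 0.0), (0.7, 1.0)]
for x in (0.0, 0.4, 0.5, 0.9):
    assert abs(ramp(x, two_stops) - map_range(x, 0.3, 0.7)) < 1e-9

# Three handles (black, white, black): the output rises then falls, which no
# single linear map range can do, because a clamped linear map is monotonic.
three_stops = [(0.0, 0.0), (0.5, 1.0), (1.0, 0.0)]
print([ramp(x, three_stops) for x in (0.25, 0.5, 0.75)])  # [0.5, 1.0, 0.5]
```

And that is before counting curve widgets, where the user can draw arbitrary shapes by hand.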

To be frank, there are many things in geometry nodes that lack consistency and usability. There may not be a short-term solution, but I still hope there will be a thoughtful UX design to solve these problems some day.

Actually I think the difficulty here is the design aspect. How should the node work if it has sockets for widgets?

Color Ramp allows an arbitrary number of handles, while so far most nodes only have a fixed number of sockets, the exceptions being Group Input and Group Output. How should the node work when there are more than two handles? Does it just add sockets as you add more handles? Since it allows an arbitrary number of handles, the node could eventually get ridiculously long.

To be fair, this is not just GN; it has been the case for shader nodes as well.

There are already some industry solutions. For example, ICE treats the whole curve as a single value (think of it like an object) and promotes the curve widget to the enclosing compound automatically, and Houdini can not only export the widget but also extract its handles as an array. There are many ways to solve this problem, but since geometry nodes is so young, I don’t know what the geometry nodes team thinks about supporting more complex data structures like arrays (for now they haven’t even reached a consensus on some basic topics like attributes), so I can’t say which way is better; it’s up to the team to decide.
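One way to picture the "curve as a single value" idea, as a hypothetical Python sketch: `RampValue` and `node_group` are invented names, not Blender, ICE or Houdini API. The ramp passes through one input as a single object no matter how many handles it has, and its handles can still be read out as plain arrays, loosely in the spirit of the array approach mentioned above (how Houdini actually stores ramps is not modelled here).

```python
from bisect import bisect_right
from dataclasses import dataclass


@dataclass(frozen=True)
class RampValue:
    """Hypothetical ramp value: any number of handles, passed around as one object."""
    positions: tuple[float, ...]
    values: tuple[float, ...]

    def __post_init__(self):
        if not self.positions or len(self.positions) != len(self.values):
            raise ValueError("need at least one handle and matching lengths")
        if list(self.positions) != sorted(self.positions):
            raise ValueError("handle positions must be sorted")

    def evaluate(self, x: float) -> float:
        """Linear interpolation between handles, held constant past both ends."""
        ps, vs = self.positions, self.values
        if x <= ps[0]:
            return vs[0]
        if x >= ps[-1]:
            return vs[-1]
        i = bisect_right(ps, x)
        t = (x - ps[i - 1]) / (ps[i] - ps[i - 1])
        return vs[i - 1] + t * (vs[i] - vs[i - 1])


def node_group(factor: float, falloff: RampValue) -> float:
    """Hypothetical group with ONE ramp input socket, whatever the handle count."""
    return falloff.evaluate(factor)


simple = RampValue((0.0, 1.0), (0.0, 1.0))
bumpy = RampValue((0.0, 0.25, 0.5, 0.75, 1.0), (0.0, 1.0, 0.0, 1.0, 0.0))

# Same group signature for 2 handles or 5 handles.
print(node_group(0.5, simple), node_group(0.5, bumpy))

# The handles are still reachable as arrays, e.g. to drive or edit them elsewhere.
print(list(bumpy.positions), list(bumpy.values))
```

The design choice here is that the socket count stays fixed while the data inside the value grows, which sidesteps the "ridiculously long node" problem at the cost of needing a richer socket type.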

It’s not only curves and color ramps; there is also no way to control enums with node sockets. I’m pretty sure all of this will be figured out down the line.

Hey, I am currently playing around with instancing in GN. Does anybody know why viewport performance is so much faster (around 30x) when realizing the instances to geometry versus leaving them as pure instances? When rendering, it is of course the exact opposite.

I probably didn’t explain it fully. The way Blender stores image data, and how that image is used, requires more data than a socket currently stores. This extra data about how the image is used can be stored in the node, but that doesn’t work when sharing a node graph across multiple objects where you want to use a different image on each one. This is why this node is implemented differently from its shader node version and doesn’t have controls for color space etc.
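A toy Python model of that explanation, with every name (`Image`, `NodeGroup`, `effective_colorspace`) hypothetical and no claim about Blender's real data structures: a setting stored on a node inside a shared group is the same for every object using that group, whereas a setting that travels with the image value can differ per object.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Image:
    """Hypothetical image value: the color space travels with the image."""
    name: str
    colorspace: str


@dataclass(frozen=True)
class NodeGroup:
    """Hypothetical shared node group. A setting stored on a node inside it
    is shared by every object whose modifier uses this group."""
    node_colorspace: str


def effective_colorspace(group: NodeGroup, image: Image, per_node: bool) -> str:
    """Which color space ends up being used for a given object's image."""
    return group.node_colorspace if per_node else image.colorspace


group = NodeGroup(node_colorspace="sRGB")
albedo = Image("albedo.png", "sRGB")      # object A feeds a color texture
height = Image("height.png", "Non-Color")  # object B feeds a height map

# Setting stored on the node: both objects get "sRGB", so B's height map is decoded wrongly.
print([effective_colorspace(group, img, per_node=True) for img in (albedo, height)])
# Setting travelling with the image: each object keeps its own color space.
print([effective_colorspace(group, img, per_node=False) for img in (albedo, height)])
```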