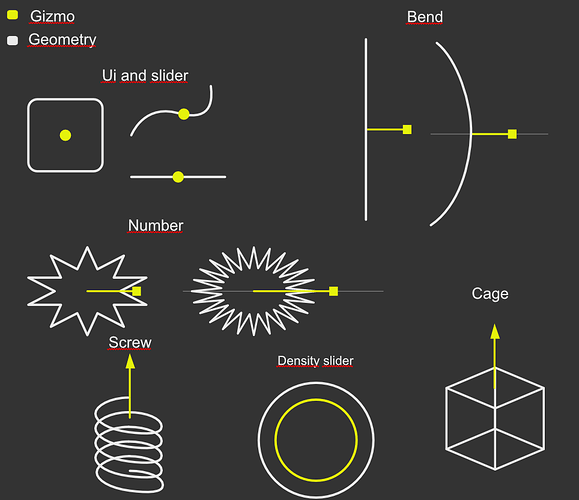

Thanks for posting this here. As you noticed already, custom gizmo shapes are out of scope for the first release, for the reasons mentioned in the original post. We don’t know yet whether we’ll support custom gizmo shapes in the future, or whether we’ll just add a few more built-in gizmos that can be combined in new ways while still feeling familiar.

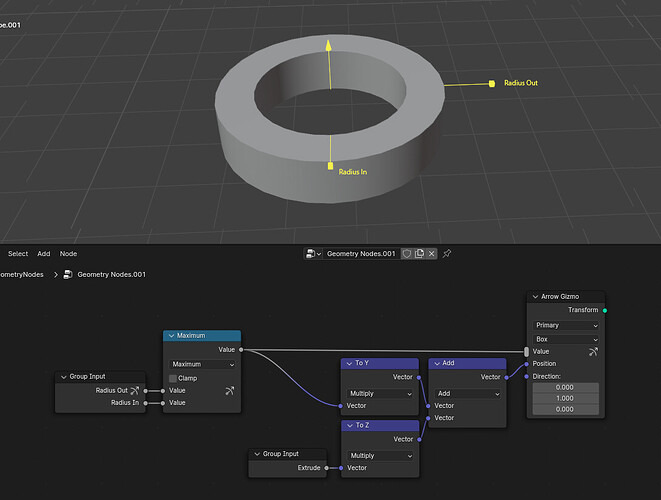

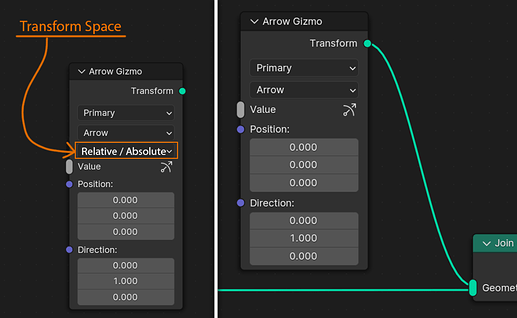

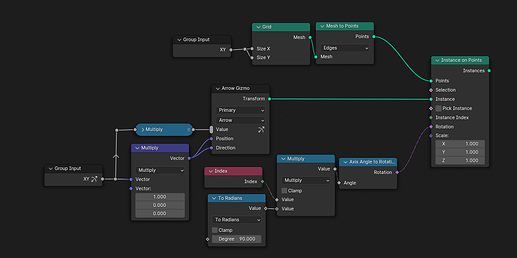

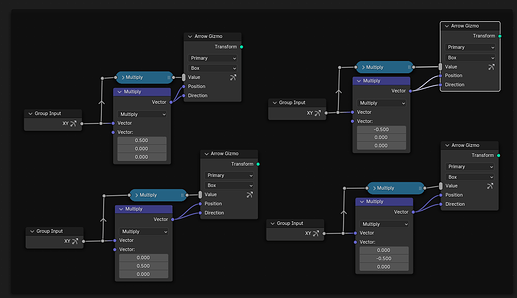

Your proposal looks interesting, but I think it misses an important point: in general, how does Blender know how mouse clicks and movements in the viewport translate into changes to the gizmo? Currently, you show how the gizmo geometry is generated from the values that the gizmo controls, but not how to recover those values when the gizmo is changed in the viewport.

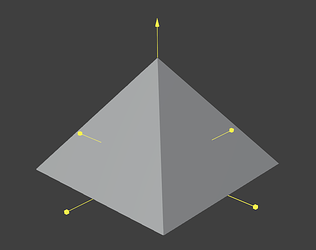

This can be detected automatically in simple cases, like just controlling the radius of a circle, but for that you could also just use a built-in gizmo. It would be good to have a more compelling example of a custom gizmo that is not as easy to build out of existing gizmo shapes.
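To illustrate the missing "reverse direction" in simple cases, here is a minimal sketch in plain Python, not Blender's API. All function names are hypothetical. For a circle, the inverse of "radius → circle" is just the distance from the center to the dragged point. For a generic scalar mapping, the value can only be recovered numerically if that mapping is continuous and monotonic; bisection is used here purely as an illustration.

```python
import math


def circle_radius_from_drag(center, dragged_point):
    """Hypothetical inverse of the forward mapping radius -> circle:
    the new radius is the distance from the center to the dragged point."""
    return math.dist(center, dragged_point)


def invert_monotonic(forward, target, lo, hi, tol=1e-9, max_iter=200):
    """Recover the input value v with forward(v) == target by bisection.

    Assumes `forward` is continuous and monotonically increasing on [lo, hi].
    If the mapping is not invertible (e.g. several values move the same
    handle), there is no unique answer, which is the hard general case."""
    if not (forward(lo) <= target <= forward(hi)):
        raise ValueError("target is outside the range the gizmo can reach")
    for _ in range(max_iter):
        mid = (lo + hi) / 2
        if forward(mid) < target:
            lo = mid  # the value we are looking for is in the upper half
        else:
            hi = mid  # the value we are looking for is in the lower half
        if hi - lo < tol:
            break
    return (lo + hi) / 2
```

For example, if a hypothetical handle sits at `2 * value + 1` along an axis and the user drags it to `7`, `invert_monotonic(lambda v: 2 * v + 1, 7.0, 0.0, 10.0)` recovers a value of approximately `3`. Once the handle position depends on several values at once, this kind of automatic inversion stops working.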

I hadn’t thought about it before, but I just noticed that this interaction design may also be related to modal node tools, where the node group handles the event processing (we have a rough design for that, but it’s still WIP).

It would be very useful if you could find a way to reliably reproduce the issue so that I can fix it.

Yes, that’s currently intentional. It often makes interaction with the gizmo easier and more predictable, especially if changes made with the gizmo also affect the curve that the gizmo should follow.

We have already talked about this kind of explicit curve-following and think it’s generally a good idea, but it’s a bit out of scope for now.

In the context of this thread, a gizmo is something the user interacts with. I think it’s reasonable to add what you describe at some point, but it’s out of scope for now. This feature shouldn’t really be related to how the modifier API works, though.

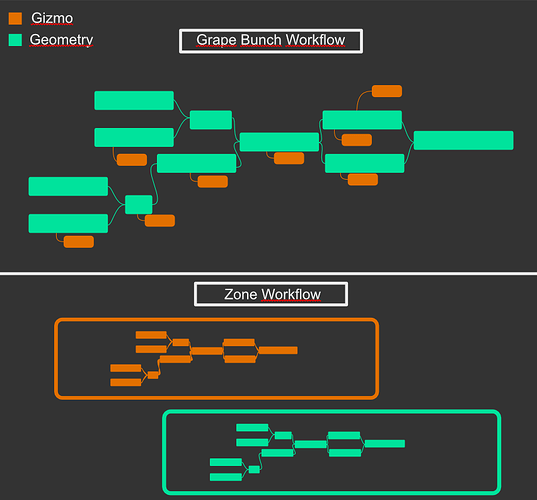

Yeah, as you found already, this solution isn’t very scalable, because there is not just a single geometry chain. Also, the gizmo wouldn’t even know at which point in the geometry chain it should start to take transforms into account.

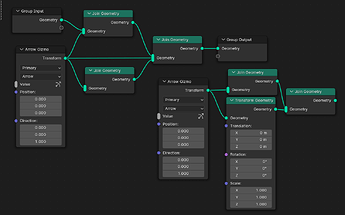

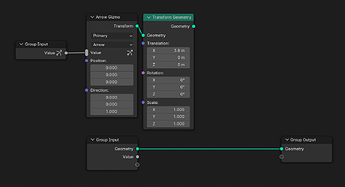

You should not, and you can’t without going into the node group. The first post describes when gizmos are active/visible in the viewport. You can only see gizmos that control Value nodes when the node group that contains that node is opened in some node editor. Someone just using the node group without entering it will never see the gizmo. I think this perfectly aligns with the existing concept that, from the outside, you can only edit values that are exposed as group inputs.

While I think it’s sometimes possible to not mix the gizmo stuff with the rest of the node group, it doesn’t really work in general, unless you want to duplicate potentially large parts of the node group. To me, this seems to make things more complex and less flexible.

Totally agree, the design intended something else. See my response above.

Not sure which “nature” you mean here. To me it feels fairly natural by now that gizmos that affect a geometry should be transformed together with that geometry, and for that they have to be linked in some way.
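As a rough illustration of why a gizmo has to be linked to the geometry's transform, here is a minimal sketch in plain Python, not Blender's API. The `Transform2D` class and helper names are hypothetical, and it is restricted to 2D similarity transforms for brevity. The gizmo handle is drawn by applying the geometry's transform, and a drag in the viewport has to be mapped back through the inverse transform before the controlled value can be updated.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Transform2D:
    """Hypothetical similarity transform: rotate by `angle`, scale
    uniformly by `scale`, then translate by `offset`."""
    angle: float = 0.0
    scale: float = 1.0
    offset: tuple = (0.0, 0.0)

    def apply(self, p):
        """Map a point from the geometry's local space to world space."""
        c, s = math.cos(self.angle), math.sin(self.angle)
        x, y = p
        return (self.scale * (c * x - s * y) + self.offset[0],
                self.scale * (s * x + c * y) + self.offset[1])

    def inverse_apply(self, p):
        """Map a world-space point (e.g. a mouse drag) back to local space."""
        c, s = math.cos(self.angle), math.sin(self.angle)
        x = (p[0] - self.offset[0]) / self.scale
        y = (p[1] - self.offset[1]) / self.scale
        # Rotating by -angle undoes the forward rotation.
        return (c * x + s * y, -s * x + c * y)


def handle_position(radius, transform):
    """World-space position of a radius handle placed at (radius, 0) locally."""
    return transform.apply((radius, 0.0))


def radius_from_drag(world_point, transform):
    """Recover the local radius from a world-space drag position."""
    return math.hypot(*transform.inverse_apply(world_point))
```

With `t = Transform2D(angle=math.pi / 2, scale=2.0, offset=(10.0, 0.0))`, a radius of `1.0` puts the handle at roughly `(10, 2)`, and dragging it to `(10, 4)` yields a radius of about `2.0`. Without knowing `t`, the gizmo could neither be drawn in the right place nor convert the drag back into a value.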