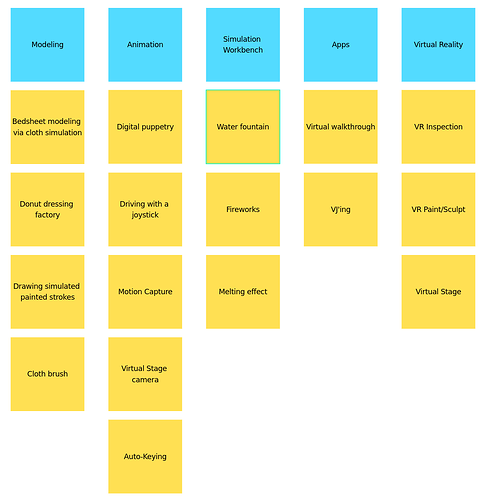

This workshop happened in the second week of November 2023 at the Blender headquarters in Amsterdam.

Present

- Bastien Montagne (assets)

- Dalai Felinto (2 days)

- Falk David (grease pencil)

- Hans Goudey

- Jacques Lucke

- Julian Eisel (assets)

- Lukas Tönne (4 days)

- Nathan Vegdahl (gizmos)

- Simon Thommes (4 days)

Notes

These are all the notes we took together during the various meetings over the week. There is also a blog post summarizing the different topics.

Volumes

Mesh to Density Grid, Density Grid to Mesh

- Partially filled boundary voxels mostly only make sense for density and not so much for e.g. temperature.

Mesh to SDF Grid

- “SDF” is just a math function, while a grid is a rasterized function.

- Also applies to Offset SDF and Union SDF.

- Exposing the boundary thickness (“bandwidth”) might be a good teaching moment.

Density to SDF Grid, SDF to Density Grid

- Whatever grid is passed into the former node is interpreted as density (0 is “empty”).

- Needs a threshold input.

- “Voxel Size” input requires logarithmic input slider to avoid crashing things too easily.

- This way we don’t really need the resolution input anymore which mainly exists to solve that problem.

- Could provide a node group that computes a “good” voxel size from a geometry (bounding box) and a resolution.

- Makes it easier to not have to implement the resolution behavior in all volume nodes.

- Even if we replace the “Voxel Size” input with a transform matrix later, it would still be good to have a slider to control the size in the node.

- On the other hand, not having a slider would make it more obvious that the same transform should be used in all volume nodes.
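The “good voxel size from a bounding box and a resolution” idea and the logarithmic slider can be sketched as follows. This is only an illustration: both function names, the choice of the longest bounding-box axis, and the slider limits are assumptions, not something decided in the meeting.

```python
def voxel_size_from_bounds(bounds_min, bounds_max, resolution):
    """Voxel size such that the longest bounding-box axis spans `resolution` voxels."""
    if resolution < 1:
        raise ValueError("resolution must be at least 1")
    extents = [hi - lo for lo, hi in zip(bounds_min, bounds_max)]
    longest = max(extents)
    if longest <= 0.0:
        raise ValueError("bounding box is empty")
    return longest / resolution


def log_slider_to_voxel_size(t, min_size=0.001, max_size=10.0):
    """Map a slider position t in [0, 1] to a voxel size on a logarithmic scale.

    The limits are made up; the point is that small sizes get most of the
    slider travel, so a small mouse movement cannot request a huge grid.
    """
    t = min(max(t, 0.0), 1.0)
    return min_size * (max_size / min_size) ** t


print(voxel_size_from_bounds((0, 0, 0), (2, 1, 4), 64))
print(log_slider_to_voxel_size(0.5))
```

With a helper like the first function available as a node group, the individual volume nodes would only need the “Voxel Size” input.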

Resample Grid only takes the transform of the reference grid into account.

- The reference grid should not be called Topology Grid then.

Simplify Grid, threshold vs. epsilon

- It’s a delta for comparisons.

- Check what name OpenVDB uses, same for Densify.

- Might need a special resampling node for SDFs.

- It has to make sure that the bandwidth is a few voxels large.

- A possible alternative to supporting grids in every function node could be to always use a Grid node when creating a new field.

- Keeps the behavior of the function nodes simpler.

- Has the benefit that the user can know for sure that there are no intermediate grids (which can use a lot of memory).

- The downside is that it makes simple math operations harder on grids because one always needs the extra Grid and Sample Grid node.

- We want function nodes to work similarly on grids and lists in the future, and for lists it seems more obvious that function nodes should be able to modify them directly.

Voxel Coordinates should be called Voxel Indices because “coordinates” tends to imply “positions”.

Sample Grid + Sample Grid Index

Advect Grid/Volume node input should be Offset instead of Velocity.

- “Velocity” indicates something with time.
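The difference between voxel indices and positions can be illustrated with a minimal sketch. It assumes a grid whose transform is only a uniform voxel size plus an origin; real grids carry a full transform, and the function names here are hypothetical.

```python
import math


def voxel_index_to_position(index, voxel_size, origin=(0.0, 0.0, 0.0)):
    """Integer voxel index -> position, for a uniform-scale grid transform."""
    return tuple(o + i * voxel_size for i, o in zip(index, origin))


def position_to_voxel_index(position, voxel_size, origin=(0.0, 0.0, 0.0)):
    """Position -> index of the voxel containing it (floor, so negatives work)."""
    return tuple(
        math.floor((p - o) / voxel_size) for p, o in zip(position, origin)
    )


print(voxel_index_to_position((2, 0, -1), 0.5))
print(position_to_voxel_index((1.2, 0.0, -0.1), 0.5))
```

Indices are integers that only become positions once the grid transform is applied, which is the reason for preferring the name Voxel Indices.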

Menu Switch

- How do we handle invalid states?

- Should a default value be used when the value is invalid (i.e. if the enum item was removed)

- Error messages would ideally be displayed in the UI (though that currently won’t work when the node is part of field evaluation)

- Capture and store

- Capture can work, since the enum type can be propagated from the right to the left. But since store has no menu output, there is no way for the type to propagate (without the left-to-right propagation mentioned below). The capture node could be supported, but it may be safer to avoid that for now.

- Left to right propagation

- This could be helpful for nodes that only have menu inputs (and no outputs) (like the compare node for menu sockets, or the viewer node). But it needs a bit more use case motivation and it could be added later if necessary.

- Menu attributes

- It would be very nice to have a menu attribute as well, at least in the long term. But mixing runtime and static analysis in the node tree could be difficult and raises more design questions.

- This attribute type isn’t supported by other formats.

- The mapping between stored attribute integer values and menu values should probably be controlled by the user.

- Configurable identifier strings and integers for items

- This could be helpful for more complex production bug-fixing, etc.

- Both are relatively easily-added features, but to keep things simpler, for now we can do without them.
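The invalid-state fallback and the user-controlled mapping between stored integers and menu items could look roughly like this. The `MenuItem` type, its fields, and the error string are all hypothetical; they only illustrate the options discussed above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MenuItem:
    identifier: str  # stable identifier string (hypothetical, user-configurable)
    value: int       # integer stored in an attribute (hypothetical)
    name: str        # label shown in the UI


def resolve_menu_value(items, stored_value, default_identifier):
    """Return (item, error). Falls back to the default item when the stored
    integer no longer matches any item, e.g. because the item was removed."""
    by_value = {item.value: item for item in items}
    item = by_value.get(stored_value)
    if item is not None:
        return item, None
    by_identifier = {item.identifier: item for item in items}
    default = by_identifier[default_identifier]
    return default, f"Menu value {stored_value} no longer exists, using '{default.name}'"


items = [MenuItem("GRID", 0, "Grid"), MenuItem("CIRCLE", 1, "Circle")]
print(resolve_menu_value(items, 1, "GRID"))
print(resolve_menu_value(items, 7, "GRID"))
```

The returned error string stands in for the message that would ideally be displayed in the UI.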

Gizmos

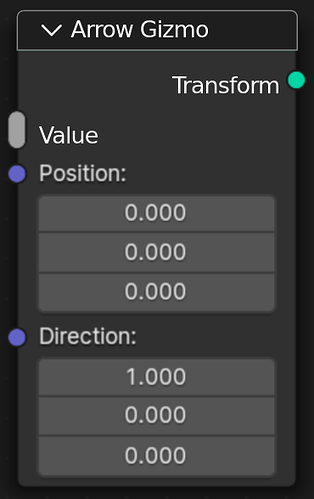

- The gizmo is a “UI controller” that’s attached to a group input, constant node, or the modifier input.

- Currently the prototype uses a “Gizmo Factor” node to control the derivative, i.e. how the input affects the value. The generic math node should be used instead in a limited way.

- A bad gizmo connection should give a link error.

- The gizmo should respect the range of the input.

- Gizmos should support snapping, though that is a separate issue.

- The “Gizmo” output can be called “Transform”

- The gizmo node headers should be a different color like black (the output/input class)

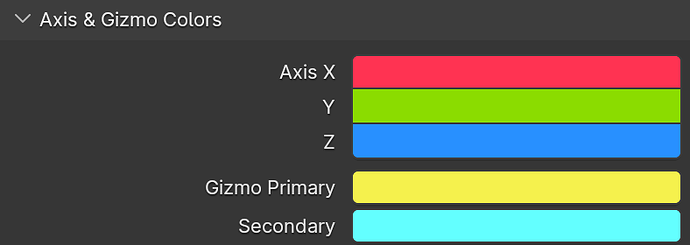

- Color

- In the gizmo node, users can pick a color from the gizmo theme colors (X, Y, Z, Primary, Secondary).

- Putting the color in the gizmo node does break composability a bit, but that’s a simpler approach than putting it elsewhere.

- It should somehow be clear that a socket (or node group?) can be controlled by a gizmo. A new gizmo icon can be added to the node header. Pablo can be contacted for a final decision here.

- It would be useful to have a gizmo node that has a curve input to be used as a “follow path constraint.”

- Expose shape options for Arrow/Dial gizmo.

- Check why arrow gizmo is thinner than arrows in translate tool.

- It’s not clear whether showing the gizmos for the first instance only is good enough. Other options are:

- Not showing the gizmos for instances.

- Showing them for all instances.

- Show the gizmos for the instance closest to 3d cursor or view center.
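How a gizmo drag might be mapped back to the controlled input can be sketched as below. It assumes the derivative means “change of the gizmo value per unit change of the input”, which is one possible reading of the notes; the function and parameter names are hypothetical.

```python
def apply_gizmo_drag(value, drag_delta, derivative=1.0, value_min=None, value_max=None):
    """Update an input value from a gizmo drag.

    derivative: assumed to be d(gizmo value) / d(input value), so the input
    changes by drag_delta / derivative. The result respects the input's range.
    """
    if derivative == 0.0:
        # The gizmo does not react to this input, so leave the value alone.
        return value
    new_value = value + drag_delta / derivative
    if value_min is not None:
        new_value = max(new_value, value_min)
    if value_max is not None:
        new_value = min(new_value, value_max)
    return new_value


# A gizmo showing a diameter while the input is a radius: derivative 2.
print(apply_gizmo_drag(1.0, 0.5, derivative=2.0))
print(apply_gizmo_drag(1.0, 10.0, value_min=0.0, value_max=5.0))
```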

Baking

- In 4.0 we added support for baking individual simulations

- The next step is to update the bake node branch. The baking functionality is basically the same as for simulations.

- Showing some controls in the node itself seems essential. But cluttering the UI with too many options should be avoided.

- There should be a button in the node itself to bake the current single frame, to make rebaking quick.

- It needs to be possible to rebake with a single click so that the node tree isn’t evaluated twice

- The bake settings are only displayed in the node editor side panel though, to avoid cluttering the node interface

- A “Still” / “Frame Range” enum controls which frames to bake on

- Extrapolation is not displayed in the “Still” mode because it always happens. In “Frame Range” mode, there is an extrapolation option.

- At some point a “Linear Extrapolation” feature would be helpful.

- The current bake status can be displayed as a node overlay, similar to how we display node timings

- An existing patch adds visualization for evaluated nodes, which is helpful to show users the effect of baking.

- Graying out would look better, but the problem is that newly added nodes would be grayed out. To solve this, nodes that aren’t connected to the output or viewer nodes would be an exception.

- Graying out should look different than muting. 25% would look more “editable” than muting, but maybe a tint is necessary.

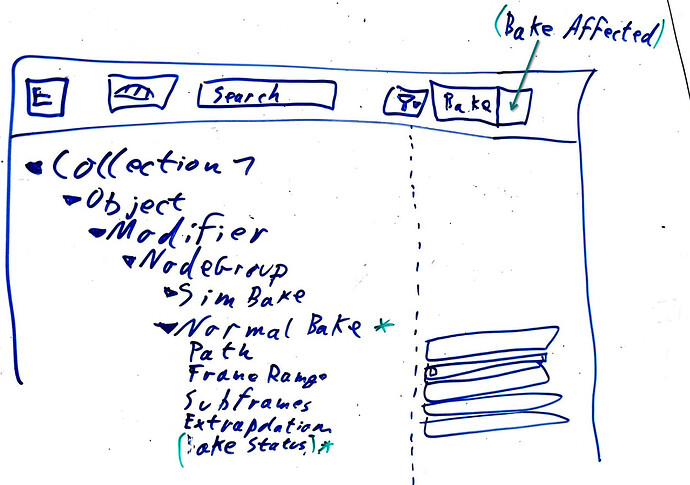

- Outliner

- Control over some things in a centralized place would be helpful

- Bake path

- Frame range

- Subframes

- Extrapolation

- Bake operator

- The outliner gets a new “Bakes” view mode, potentially called the “Bakery”

- The hierarchy can be similar to the data API view, where each setting gets its own row

- Bakes are displayed in the collection/object/modifier/node hierarchy, similar to the “View Layer” view mode

- Operators could change multiple bakes at the same time

- The bake operator gets a special button in the editor header that bakes the selection

- A “Select Affected Bakes” operator could select all bakes that depend on a selected bake.

- But we shouldn’t rely on selections too much, since dependencies can change without the user realizing it, so a “Bake Affected” operator would be good too.

- If you change a setting somewhere, how do you know what to bake?

- Selection syncing could work the same way as the existing “View Layer” mode, just syncing to objects rather than individual bakes.

- The selection status for bakes would have to be different than the node selection (stored in the modifier instead), which would make that lower level of syncing confusing.

- Affecting properties of more than one bake at a time

- “Copy to Selected” in the context menus copies properties to all selected bakes.

- For “groups” of properties like the frame range settings, there can be specific operators that affect all selected bakes.

- The main unresolved question is whether the bake control should happen in a new outliner view mode or whether the existing view layer mode should be extended.

- Functionally there would be a lot of duplication between the two modes, and fitting them together has benefits for consistency and flexibility.

- Requiring more configuration and cluttering the outliner with bake settings are potential downsides of reusing the existing view mode though.

- The decision depends on a bigger picture overall design for the outliner, so the UI team should be involved.

- Bake settings in group and/or modifier

- It would be good to control baking inside of node groups.

- We don’t want to force people to go inside of the node group or go to the outliner to change settings. It might be okay for some functionality to only be available in the outliner though.

- Often settings should be shared between multiple bakes inside of a node group. It’s not clear if those groups should be created by the user or the node group author though, or if they even really need to exist.

- A bake button in the group node bakes all simulations on the inside of the group.

- Bake names

- Names could be stored on the node as a “default name”

- This is controlled by the node group author

- It’s probably necessary to also control the name per context, because all the bakes in a modifier might be displayed in a flat list without their hierarchy.

- This is controlled by the user of the node group

- If this per-context name is empty, the default name would be used

- If the bake name affected the simulation/bake node’s bake path, it would need to be stored. So it seems okay to start adding names without changing bake paths.

- Packing bakes

- Bakes aren’t data-blocks, but we can also pack non-data-blocks.

- The difference is that we have directories rather than paths.

- The implementation may be tricky.

- Bake paths would be passed around in geometry nodes as strings for the “Import Bake” node. But packed bakes don’t really have a file path.

- We might need a new socket type for “Bake Reference”

- Bakes aren’t ID data-blocks because we have a bake per node per use of the node

- It should be possible to bake directly into packed data and read from it. That might often be the preferred way of working.

- It should be possible to see (and change) whether a path is packed wherever the path can be seen.

- The topic is still unresolved.
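The bake name fallback from the “Bake names” notes above is simple enough to sketch. The function names and the pair-based data layout are assumptions made for illustration only.

```python
def resolve_bake_name(default_name, context_name=""):
    """Use the per-context name (set by the node group user) when it is not
    empty, otherwise the default name stored on the node by the author."""
    return context_name or default_name


def flat_bake_list(bakes):
    """Names for all bakes of one modifier, shown without their hierarchy.

    bakes: list of (default_name, context_name) pairs (hypothetical layout).
    """
    return [resolve_bake_name(default, context) for default, context in bakes]


print(flat_bake_list([("Bake", "Hair Cache"), ("Bake", "")]))
```

Two bakes sharing the default name “Bake” only stay distinguishable in a flat list if one of them gets a per-context name, which is why the per-context name seems necessary.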

Subframes

- Leave the responsibility for limiting the re-evaluation of frames to the user, but give analytic insight and utilities to improve performance

- Maybe line graph in timeline to see how many times a frame is evaluated

Asset Data-Block Handling

This topic is quite big and has fairly far reaching consequences for all of data block management in Blender. This needs more discussion with the stakeholders.

- See the “Asset Deduplication Proposal” below

- “Essentials” needs an OS-agnostic “file path” to facilitate deduplication (this is necessary because the library path needs to be the same for data-block deduplication to happen)

- Embedded data-blocks (including the essentials) are never re-read from the source file.

- When appending an embedded data block, it should be embedded into the new files.

- Embedded data blocks with the same library path are candidates for automatic deduplication when the data blocks are identical.

- The check for whether data blocks are identical has to be conservative. Some specific kinds of changes can be allowed (e.g. changing node selection).
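A conservative deduplication pass along these lines could be sketched as follows. The dictionary layout, the `node_selection` key, and the use of a SHA-256 hash over JSON are all assumptions for illustration; how Blender would actually compare data-blocks was left open.

```python
import hashlib
import json

# Changes that may differ between otherwise identical data-blocks (hypothetical).
IGNORED_KEYS = {"node_selection"}


def content_hash(data_block):
    """Hash everything except explicitly allowed differences.

    Being conservative means that any key not listed in IGNORED_KEYS makes
    two data-blocks count as different.
    """
    relevant = {
        key: value
        for key, value in data_block["content"].items()
        if key not in IGNORED_KEYS
    }
    payload = json.dumps(relevant, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def deduplicate(embedded_blocks):
    """Keep one data-block per (library path, content hash) pair."""
    unique = {}
    for block in embedded_blocks:
        key = (block["library_path"], content_hash(block))
        unique.setdefault(key, block)
    return list(unique.values())


blocks = [
    {"library_path": "essentials/hair.blend", "content": {"value": 1, "node_selection": [0]}},
    {"library_path": "essentials/hair.blend", "content": {"value": 1, "node_selection": [2]}},
    {"library_path": "project/hair.blend", "content": {"value": 1, "node_selection": [0]}},
]
print(len(deduplicate(blocks)))
```

The third block is kept separately because its library path differs, even though its content is the same.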

Dynamic Socket Visibilities

- Automatic hiding of sockets we know for sure aren’t used seems like a good place to start.

- The issue with non-automatic calculation is that the user could hide sockets that are actually still used.

- Graying out sockets instead of hiding them would probably be safer, more consistent, and more helpful. Misconfigured “use status” wouldn’t be as big of a deal, and this would work better with panels as well, solving the “Boolean in panel header” topic.

- An option on the input could control whether to gray it out or hide it

- Some input settings control inherent functionality that doesn’t change regularly and hiding should be part of the design of the node

- Completely unused sockets with no links connected inside could theoretically have special handling. They are used often for mockups, for example.

- Input use status could depend on which outputs are used. To avoid graying out all inputs in new nodes, we would have a special case for when no outputs are used.

- However, this conflicts with other methods of showing evaluation status, and it would probably be better to avoid this complexity. The use status of inputs should be based on all outputs.
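Basing the use status of inputs on all outputs can be sketched like this. The dependency map is assumed to come from some analysis of the node group for its current configuration; its format and the function names are hypothetical.

```python
def used_inputs(output_dependencies):
    """Inputs that at least one output depends on.

    output_dependencies: maps each output name to the input names it depends
    on (hypothetical result of an automatic analysis).
    """
    used = set()
    for inputs in output_dependencies.values():
        used.update(inputs)
    return used


def inputs_to_gray_out(all_inputs, output_dependencies):
    """Inputs that no output uses, in their original order."""
    used = used_inputs(output_dependencies)
    return [name for name in all_inputs if name not in used]


dependencies = {"Mesh": ["Geometry", "Subdivisions"], "Curve": ["Geometry"]}
print(inputs_to_gray_out(["Geometry", "Subdivisions", "Seed"], dependencies))
```

Because every output counts regardless of whether it is linked, a newly added node with no used outputs needs no special case.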

Asset Menu Paths

- Currently asset library organization and menu organization are coupled, which makes both things much less flexible.

- As an example, currently in projects the geometry nodes add menu might have a “Nodes > Geometry Nodes” submenu, because that’s how users want to organize their libraries.

- The asset authors should have control over where the assets are displayed in the UI rather than users, to avoid requiring users to configure the UI just to get easy access to features.

- Should all assets in the same catalog be in the same menu?

- Options for storage

- Don’t change anything

- Store a menu path per asset data-block

- Store a menu path

- Just add a “Menu Level” option to catalogs to stop them from adding another level of hierarchy. The catalog tree gets an “edit mode” that adds a checkbox to the right of each catalog.

Replacement-Based Procedural Modelling

- Add border face merge collision as selection output instead of solving it in the same node with an input

- Removes complexity from the node itself, adds utility to identify merged geometry, use-cases didn’t seem compelling enough to include this in the node itself

- Add utilities to contort shape into the standard space

- different deformation/interpolation options

- alleviates need for the user to know about the exact system for the chosen standard n-gon space

Wildcard Socket

- General agreement about the usefulness of the socket and the shape is more or less okay

Rainbow Socket

- Actually rainbow sockets might work, but link colors are trickier. Maybe they will go back to the old gray color. Needs discussion with Pablo.

Realtime Mode

- Dalai has been iterating on the realtime mode design

- The pragmatic approach

- Have a separate realtime clock

- The “simulation time” and animation time can be locked together or unlocked

- Realtime mode as a mode

- The mode is something you enter where simulation is always active (though it can be reset or stepped through manually)

- No automatic simulation cache is stored

- Auto keyframing of inputs and subframes

- “Reset” and undo are related, because resetting should also apply to sculpting done while in the mode.

- Animation clock reset also triggers a “realtime reset”

- Baking can be turned on. Either the final state is stored as one baked frame (or to original data?), or while the scene clock is running, every frame can be baked.

- Triggering object animation in realtime mode is an open question

- Many of the use cases only require modal node tools.

Modal Node Tools

- A simulation zone allows keeping data from the last execution

- A new panel in the node group side panel is where the possible modal actions are configured

- Each modal action item is a keymap item and references a group input (initially just booleans)

- Multiple keymap items can map to the same group input

- Operation presets could be helpful as well

- A “Finished” group output is special and defines when the operator shouldn’t execute anymore

- Template node groups are an important way to make this more usable

- An “Is Interactive” checkbox controls whether to run the group without event inputs

- “Escape” is a hard-coded cancel operation that resets to the state when the operator starts
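The interplay of keymap items, boolean group inputs, the “Finished” output, and the hard-coded “Escape” cancel can be sketched as a small event loop. Everything here is hypothetical: the event names, the `evaluate_group` callback standing in for the node group, and the state dictionary standing in for data kept by a simulation zone.

```python
def run_modal_tool(evaluate_group, keymap, events, initial_state):
    """Drive a modal node tool.

    keymap: maps event names to boolean group input names; several events may
    map to the same input.
    evaluate_group(state, inputs) -> (new_state, finished)
    """
    state = dict(initial_state)
    input_names = set(keymap.values())
    for event in events:
        if event == "ESC":
            # Hard-coded cancel: reset to the state from when the operator started.
            return dict(initial_state), "CANCELLED"
        inputs = {name: False for name in input_names}
        if event in keymap:
            inputs[keymap[event]] = True
        # The state carries data over from the last execution.
        state, finished = evaluate_group(state, inputs)
        if finished:
            return state, "FINISHED"
    return state, "RUNNING"


def count_grow(state, inputs):
    """Example group: count 'Grow' actions, finish on 'Confirm'."""
    new_state = dict(state)
    if inputs["Grow"]:
        new_state["steps"] += 1
    return new_state, inputs["Confirm"]


keymap = {"G": "Grow", "W": "Grow", "RET": "Confirm"}
print(run_modal_tool(count_grow, keymap, ["G", "W", "RET"], {"steps": 0}))
print(run_modal_tool(count_grow, keymap, ["G", "ESC"], {"steps": 0}))
```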

Grease Pencil

- The “Grease Pencil is Curves” design in geometry nodes isn’t quite right, since grease pencil is really a group of separate drawings

- The “curve nodes act implicitly on grease pencil layers” design can get us very far, but it can’t do everything.

- We still need a conversion node that takes a layer selection and converts to curves

- The node can create curve instances or curves, based on a “Flatten Layers” option

- The node is called “Grease Pencil to Curves”

- Geometries should get names, so they can correspond more directly to layers

- There could be a “Set Name” node eventually but it isn’t needed for now

- Viewing instance geometry in the spreadsheet will also be helpful

- The instance references are displayed in a hierarchy, along with a user count

- Sampling nodes

- For now it seems okay if we just don’t support grease pencil in nodes that view an entire geometry

- The flattening is a consistent way to make this work, and the behavior remains explicit

- The design considers layers and instances to be more or less equivalent in this way.

- Applying modifiers

- At every keyframe, evaluate modifiers. Put evaluated layers that correspond to existing layers in the active drawings. For new procedurally generated layers, add a new layer and a new keyframe

- Do all evaluation first, then add layers and keyframes afterwards.

- More options might be necessary, to control whether every keyframe is affected or not.

Interactivity Option

- There have been many requests for an “Is Edit Mode” node, and controlling the interactivity/performance/quality level of procedural operations is important. The “Is Viewport” node is also related.

- An “Interactivity Level” input node may be helpful (with three levels: “Interactive”, “Viewport”, and “Render”).

- Integrating this with group inputs is another option that’s potentially more flexible.

- This could be exposed to higher-level node groups in the same way as tool-specific nodes potentially would be, e.g. by implicitly exposing the Selection as a socket.

Asset Deduplication Proposal

This proposal was written during one meeting. It should become a standalone proposal eventually, but for now it is included in these meeting notes.

The introduction of “Append and Re-use” for assets made production files cluttered with duplicated versions of the same assets (e.g., essential node groups). That happens when linking different files which have appended/reused the same assets. That leads to the following problems:

- Namespace pollution.

- Changes not being propagated to them.

On the flip side, it made local changes easy.

To address these problems we want Blender to deduplicate identical data-blocks:

- It is up to the implementation to decide how to determine whether a data-block is identical. If non-relevant changes (e.g., node position) can’t be told apart from relevant changes (values) we err on the side of considering them different.

- This should be handled automatically by Blender, which internally will need to find a way to tell different versions of the same asset apart (internal versioning, hash, …).

We introduce a new way to bring a data-block into a file: Embedding:

- It works similarly to linking, where users have to make the data-block local before changing its properties.

- It preserves a “link” to the original data-block (and its version/hash/…) so it gives users the option to update an asset.

- If the embedded relation is severed (e.g., when using an asset from outside the essentials on a render farm) the file is still fully valid (it contains a full embedded copy of the original data-block).

Pain points:

- The logic to determine whether the datablocks are identical may be expensive.

- This may be too close to the concept of variants and versions for IDs, and thus could be tackled together.

- This should be budgeted as a project within the Blender planning.

- What it is called and how it relates to linking need to be clear and well communicated.

Naming

- Instead of “Embedding”, it could be called an “Asset data-block”.