Sorry for the uninformed question but couldn’t the effect you’re looking for be accomplished better with a transmission shader rather than SSS?

I haven't looked very deeply into the whole subsurface scattering topic, but I must say the difference between Cycles in v2.93.5 and the current build is rather striking and, to me, somewhat unexpected.

I was re-rendering a picture of a toadstool my daughter made in v2.93, and in the current build it is much darker and the subsurface translucency hardly seems to exist. The colors also seem much more muted, but I think that's a consequence of the SSS being much weaker.

Maybe the new system is more realistic, I don't know, but I do know it means tweaking or changing all my old nature scenes. A way to get a more backwards-compatible amount of SSS would be nice, if at all possible.

Did you check if it was world AO?

There is a bug in Blender 3.0 where materials using Christensen-Burley render incorrectly if the object is scaled but the scale is not applied. You might be experiencing this issue.

That’s it. With the scale applied it renders the same (eyeballing it) as v2.93.5.

@leomid

I tried that, but it doesn't work even with a transmission shader; it gets even worse, because there is even less scattering…

As I said before, Christensen-Burley works great for translucent plastic materials, but unfortunately in 3.0 it has far too much noise, so much that even with Cycles X rendering takes longer than with the old Cycles (2.93.x).

@slowburn

I can't show any pictures of the objects I visualize for my client here; I signed an NDA. There is a lot of stuff lying around that would show the problem perfectly, but I can't share it.

I am very far away from home at the moment, so I could use the handlebars of my bicycle as an example.

I would have to walk into town with a flashlight and look for a suitable object, but that is probably not necessary: I think it is best and most convincing if everyone does the experiment themselves. As I said, it also works with a rectangular, translucent pencil eraser (turn the block and look at the edges).

You just need something with lamellas or a fine profile, or at least an object that has edges.

You can also use the test object from your SSS bug report (the cylinder with knobs).

Just give the material more translucency/transparency and put the lamp directly behind the object, exactly opposite the camera. The front side of the object should actually glow, because it is translucent/transparent, but Random Walk absorbs the light almost completely, so very little light gets through (unlike in the eraser test).

You can increase the Subsurface value to 1 or 2, or higher, but this does not solve the problem satisfactorily. Raising the radius values does not help either (more light then reaches the front, but the result is no longer realistic…).

As I said, Christensen-Burley does an excellent job, but unfortunately it has far too much noise in 3.0…

Hi.

I have been doing some testing with the OIDN Denoise node and noticed that I repeatedly make the following mistake.

I forget that the "Denoise" checkbox for final renders is enabled in the Render tab, and I plug the "Image" output into the Denoise node, so I end up denoising an image that has already been denoised.

To make this less confusing and avoid oversights like mine, I suggest that enabling "Denoising Data" should make a "Noisy Image" output available in the Render Layers node, and that the Denoise node's "Image" input be renamed to "Noisy Image". That way we would always feed the noisy image into the Denoise node (Noisy Image <–> Noisy Image). The Render Layers "Image" output would keep whatever was chosen in the Render tab settings and would no longer be used for the Denoise node.

I asked Brecht earlier about the difference between the Render tab "Denoise" checkbox and the Denoise node; he said they should be the same, and neither should have higher quality than the other. So from now on we should generally use the Render tab denoising for renders, and the Denoise node would be more for denoising imported EXRs or similar, I guess. That way mistakes like this would not occur as often, I think.

With Render tab denoising you don't have granular control over denoising individual passes; with the compositor you do.

The problem is applying the denoising twice, not that the existing denoise options give different results.

I just downloaded the latest beta and I can’t find a checkbox for a Position pass. Has it been removed?

Regarding the AA stuff: I agree it's nice to have the option to output data passes with and without AA, but if we can only choose one, the position pass should definitely have no AA. Most tools that work with position passes produce edge artifacts when pixel values are blended like that.
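To illustrate the edge problem, here is a minimal sketch, assuming an edge pixel that is half covered by a foreground surface and half by a distant background, with AA filtering modeled as a simple coverage-weighted average (real Cycles pixel filters are weighted differently, and the positions are made-up values). The filtered position lands on neither surface, so any tool that uses it to place or select geometry gets a bogus point along the silhouette.

```python
# Sketch only: models AA filtering as a coverage-weighted average of positions.
# The positions below are hypothetical, chosen to make the effect obvious.

def aa_filter(samples):
    """Average (position, weight) samples the way a box AA filter would."""
    total = sum(w for _, w in samples)
    return tuple(sum(p[i] * w for p, w in samples) / total for i in range(3))

foreground = (0.0, 0.0, 1.0)    # point on the object, 1 unit from the camera
background = (0.0, 0.0, 100.0)  # point on a far wall, 100 units away

# Edge pixel: half of the coverage hits each surface.
edge_pixel = aa_filter([(foreground, 0.5), (background, 0.5)])
print(edge_pixel)  # (0.0, 0.0, 50.5): a point floating between both surfaces
```

Colors can be averaged meaningfully at an edge; positions cannot, which is why an unfiltered position pass is usually the safer single choice.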

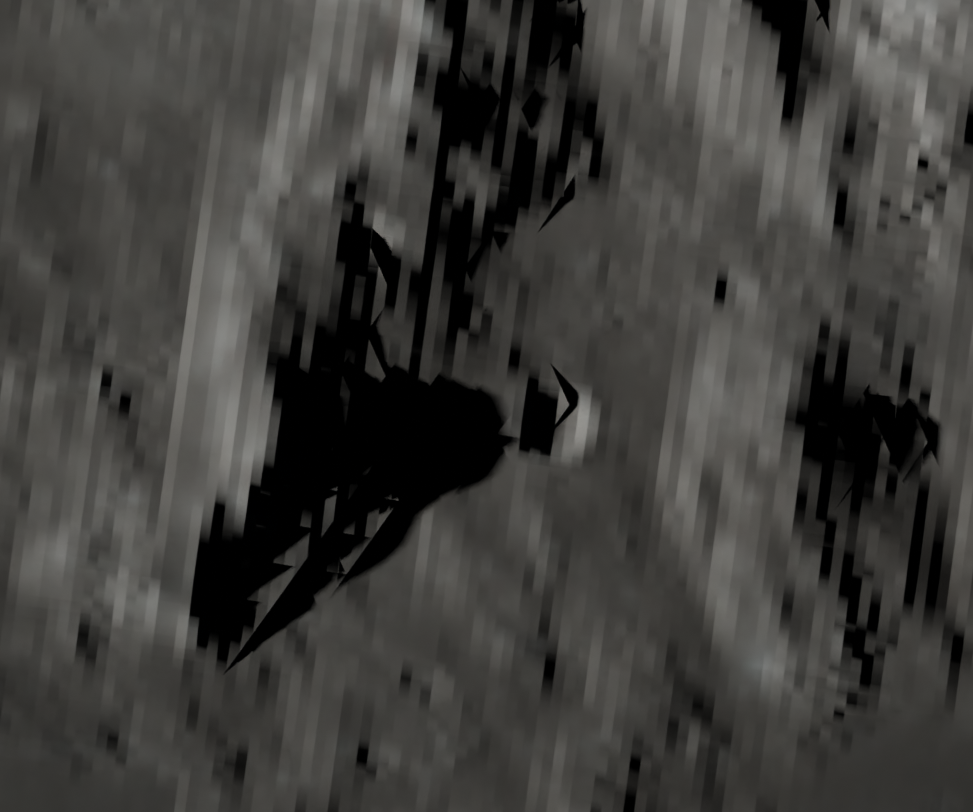

Here's a bug I found with displacement and shadows. Cycles X seems to behave very strangely with shadows compared to the old Cycles. I don't really have any idea why.

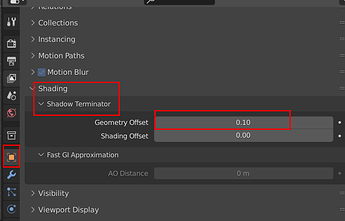

Probably has to do with this:

It’s for countering the shadow terminator problem (though it does not completely solve it).

0 would produce the same result as 2.93, but a higher value should generally produce better results.

0.1 is enough for the majority of cases, so it's the default, but I believe 1 should produce the best results, although some artifacts may still remain.

Here is an example using the ANT Landscape add-on's default mountain under the default point light.

In addition to what @silex wrote, sometimes you may want to reintroduce some noise into the perfectly smooth final render, and you can only do that by compositing.

Yes, Brecht really made my day… can't wait to try it.

Thank you Brecht… you rock.

It would be very cool to have a denoise percentage slider (a blend between the noisy and the denoised image) in the render "Denoise" UI. From other renderers (Corona, V-Ray) I learned that an image that is only 90% denoised looks better than a perfect 100%. I have a lot of render layers and multiple batched scenes, and I don't want to bother with Blender's compositing and its own outputs just for that.
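What such a slider would compute is, most likely, a plain linear blend per pixel channel. The sketch below assumes pixels are given as flat lists of float channel values, and `blend_denoise` is a hypothetical name, not an existing Blender function. As far as I know, the same result can be had today in the compositor with a Mix node between the noisy and denoised images, which is exactly the extra setup the request wants to avoid.

```python
# Hypothetical helper: linear blend between the raw and the denoised render.
# amount = 1.0 -> fully denoised, amount = 0.0 -> raw noisy render.

def blend_denoise(noisy, denoised, amount):
    """Mix two equally sized lists of channel values by `amount` in [0, 1]."""
    if not 0.0 <= amount <= 1.0:
        raise ValueError("amount must be in [0, 1]")
    if len(noisy) != len(denoised):
        raise ValueError("images must have the same number of channel values")
    # noisy + (denoised - noisy) * amount keeps 10% of the noise at amount=0.9
    return [n + (d - n) * amount for n, d in zip(noisy, denoised)]

# Usage: keep 10% of the original noise, as in the "90% denoised" workflow.
noisy_pixels = [0.2, 0.8, 0.5]
denoised_pixels = [0.4, 0.6, 0.5]
result = blend_denoise(noisy_pixels, denoised_pixels, 0.9)
```

Because the blend is linear, it cannot reintroduce more noise than the original render had; it only scales how much of it survives.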

I think we should log this as a bug anyway, at least to record it as an issue. Can you create a report if there isn't one already?

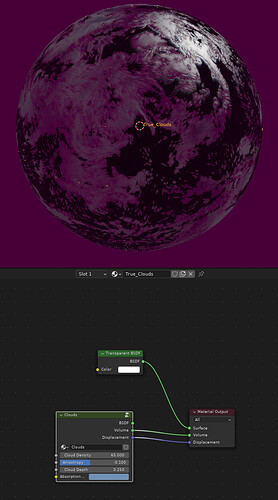

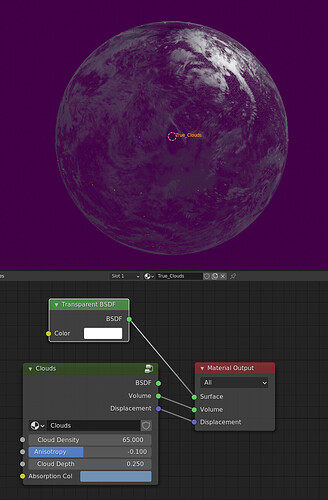

I haven't seen anything in the release notes so far, but volumes appear to render quite a bit darker than in 2.93. Is there something in the Cycles X changes that explains this, or would this be considered a bug?

EDIT: it looks like mixing translucency and volume doesn't work in the 3.0 beta.

Compared to:

Hello @brecht and @sergey

I tested tile rendering of a very high-resolution image (19.8k x 10.8k px) on a CPU, rendered with the 3.1.0 master branch, hash a2f0f982710d.

With the default cube and the image size set to 19800x10800 px, there are massive memory spikes while rendering passes, and even bigger spikes when reading from the buffers after tiles are done or rendering has finished.

Of the passes tested so far, Cryptomatte is the most memory-intensive. With the default cube scene and all three Cryptomatte options checked, RAM usage peaks at 100 GB!

All other passes combined, without Cryptomatte, peaked at 180 GB of RAM.
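For scale, here is a back-of-envelope estimate of a single full-frame buffer at this resolution. It assumes 4 channels (RGBA) of 32-bit floats per pass, and for Cryptomatte the default of 6 levels, packed as two (ID, coverage) pairs per RGBA pass, for each of the three types (object, material, asset). Cycles' internal buffer layout may differ, so treat these as rough lower bounds, not measurements.

```python
# Rough full-frame buffer sizes; assumptions are listed in the comments.
WIDTH, HEIGHT = 19800, 10800
BYTES_PER_RGBA_FLOAT_PIXEL = 4 * 4  # assumption: 4 channels x 4-byte float

def pass_bytes(num_rgba_passes):
    """Bytes needed to hold `num_rgba_passes` full-frame RGBA float buffers."""
    return WIDTH * HEIGHT * BYTES_PER_RGBA_FLOAT_PIXEL * num_rgba_passes

CRYPTO_LEVELS = 6  # assumption: default Cryptomatte levels
CRYPTO_TYPES = 3   # object, material, asset
# Two (ID, coverage) pairs fit in one RGBA pass, so levels are halved (rounded up).
crypto_passes = CRYPTO_TYPES * ((CRYPTO_LEVELS + 1) // 2)

print(f"one RGBA pass: {pass_bytes(1) / 1e9:.2f} GB")              # 3.42 GB
print(f"cryptomatte:   {pass_bytes(crypto_passes) / 1e9:.2f} GB")  # 30.79 GB
```

Under these assumptions one copy of all Cryptomatte buffers is about 31 GB, so a 100 GB peak would imply several full-frame copies existing at the same time; that is an inference from the arithmetic, not a diagnosis of what Cycles actually does.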

I really hope this can be optimized. Previously I was able to render far more complicated scenes at very high resolution with the same amount of RAM. Now the same scene crashes Blender due to running out of memory after the first tile is finished.

On a side note: while rendering on a 3970X, I didn't see the performance regression reported here by @JuanGea

https://developer.blender.org/T92601

So maybe this regression depends on the CPU architecture. The only time I saw any serious dips in CPU usage was when a lot of passes were checked. The dips happened mostly in the gap between one tile finishing and the next one starting.