I’ve been trying to get to the bottom of a problem that I (and others, judging by forum posts and bug reports) face when using modified surface normals in materials. The resulting reports are usually something along the lines of “broken normal map shading” or “bump mapping looks off in cycles but not in eevee”.

Here’s a list of normal-map-related issues and forum posts, just from a quick search:

#126904 - Cycles: Normal Maps appear "flat" with low resolution mesh - blender - Blender Projects

#131605 - Cycles normal shading issue in assets with baked normal maps - blender - Blender Projects

#74367 - Bump Map and Normal Map causes flat shading. - blender - Blender Projects

#95729 - cycles normal shading issue with normalmaps and heightmaps - blender - Blender Projects

Normal map artifacts in cycles but not eevee - Materials and Textures - Blender Artists Community

Blender use normal map to render strange shadows in cycles, but everything is normal in eevee - Lighting and Rendering - Blender Artists Community

I would like to start by explaining what I think is going on, and why I think this is an important problem to solve.

Since this touches upon a core aspect of Cycles, I probably won’t be able to tackle the problem myself, but I might be able to do some experimentation in code to get closer to a solution.

Tangent-space normal maps are a clever way to keep surface detail high and poly count low, while still allowing the geometric surface to be deformed. In a perfect dot(n, l) world they work nearly flawlessly. In a path-traced world, the trick can fall apart: issues arise when the shading normal (the one read from the normal map) points in a noticeably different direction than the geometric normal. A ray reflected about the shading normal can then end up pointing into the actual surface, intersect it, and create shading artifacts.
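To make the failure case concrete, here is a minimal sketch in plain Python (not Cycles code). It mirrors the view direction about the shading normal and checks whether the result still points away from the geometric surface. The function names and the example vectors are my own, chosen only for illustration.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def normalize(v):
    length = math.sqrt(dot(v, v))
    return tuple(x / length for x in v)


def reflect(to_viewer, normal):
    """Mirror the direction pointing towards the viewer about `normal`."""
    d = dot(normal, to_viewer)
    return tuple(2.0 * d * n - w for n, w in zip(normal, to_viewer))


def reflection_leaves_surface(geometric_normal, shading_normal, to_viewer):
    """True if the mirrored ray stays above the geometric surface."""
    reflected = reflect(to_viewer, shading_normal)
    return dot(reflected, geometric_normal) > 0.0


# Flat low-poly face pointing up, viewed at 45 degrees (illustrative values).
geometric_normal = (0.0, 0.0, 1.0)
to_viewer = normalize((1.0, 0.0, 1.0))

# Normal-map normals tilted away from the viewer by a small and a large amount.
mild_normal = normalize((-0.1, 0.0, 1.0))
strong_normal = normalize((-0.5, 0.0, 1.0))

mild_ok = reflection_leaves_surface(geometric_normal, mild_normal, to_viewer)
strong_ok = reflection_leaves_surface(geometric_normal, strong_normal, to_viewer)
print(mild_ok, strong_ok)
```

With the mild tilt the reflected ray leaves the surface as expected. With the strong tilt it points below the geometric surface, which is the situation a path tracer has to handle somehow (by clamping, correcting, or discarding the sample), while a pure dot(n, l) shader never notices.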

Despite this, they are still crucial in many workflows, especially in photogrammetry. If you are on a tight performance budget, a strongly decimated mesh with a heavy normal map can go a long way.

I would like to argue that the current implementation is insufficient.

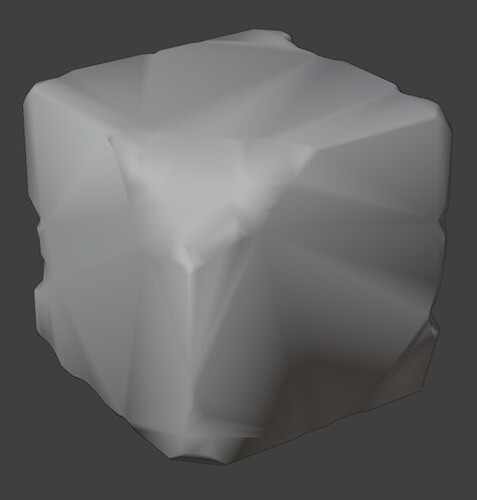

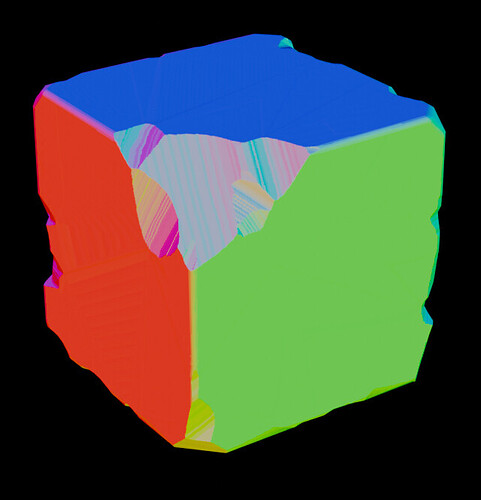

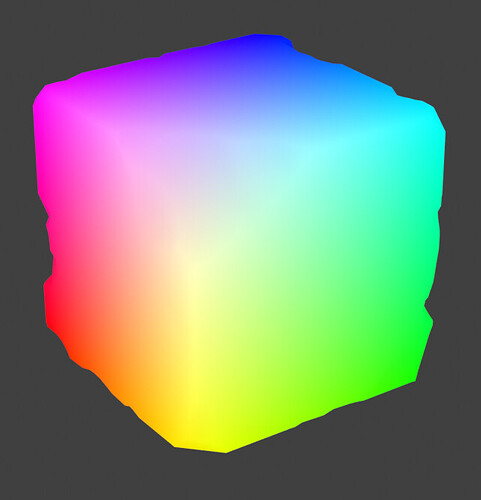

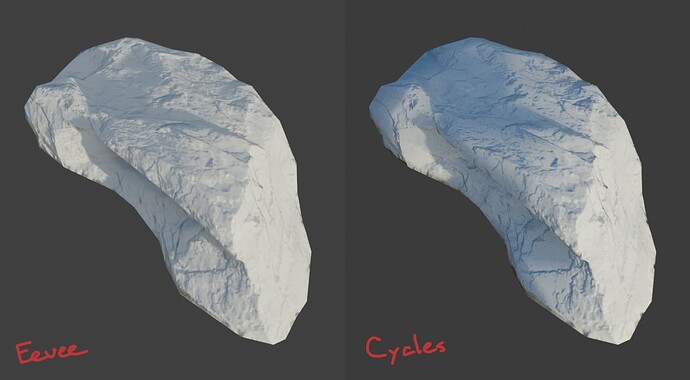

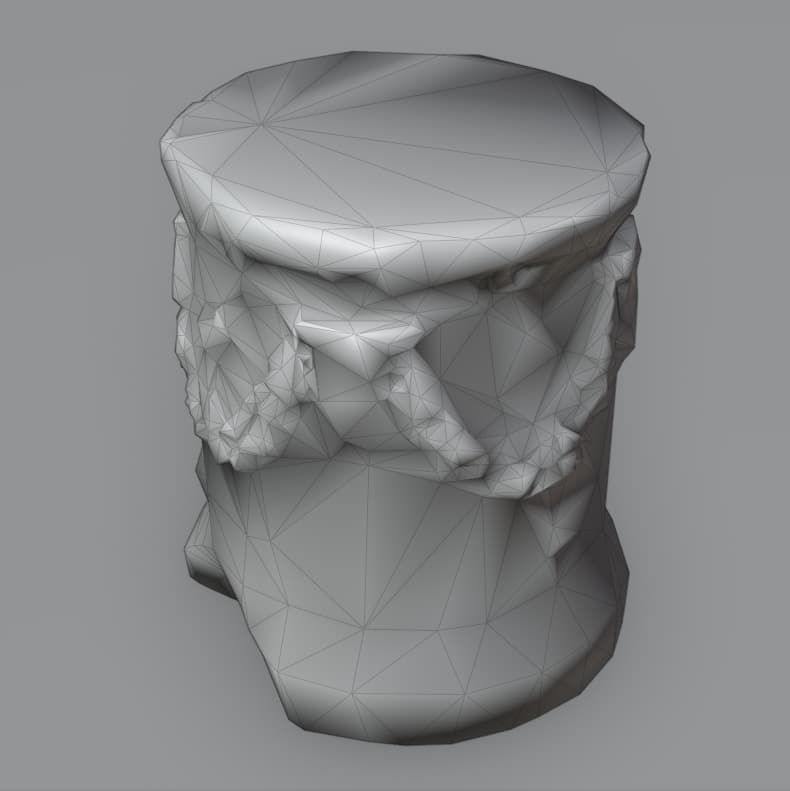

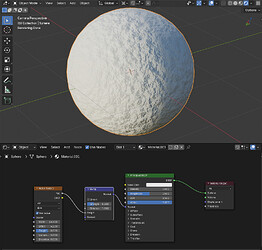

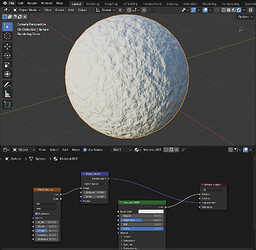

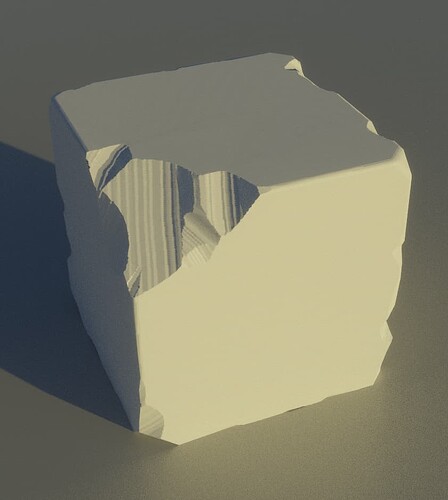

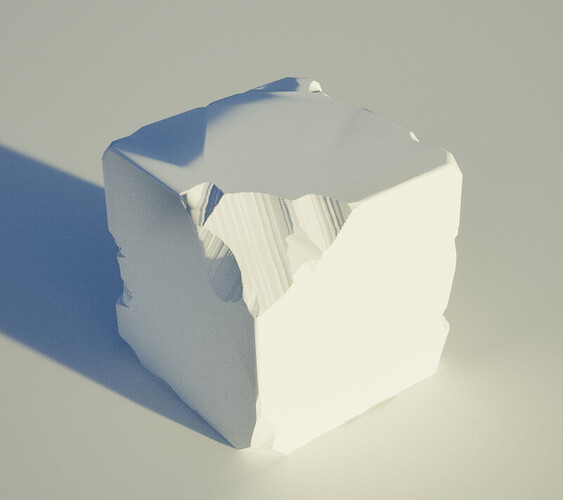

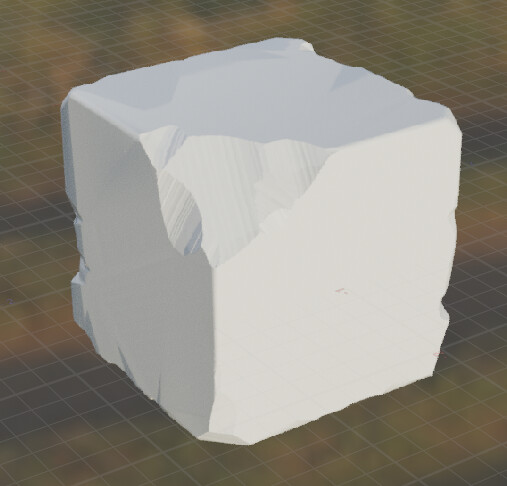

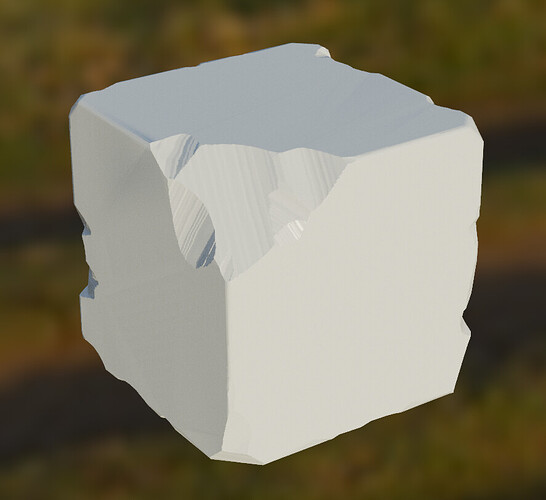

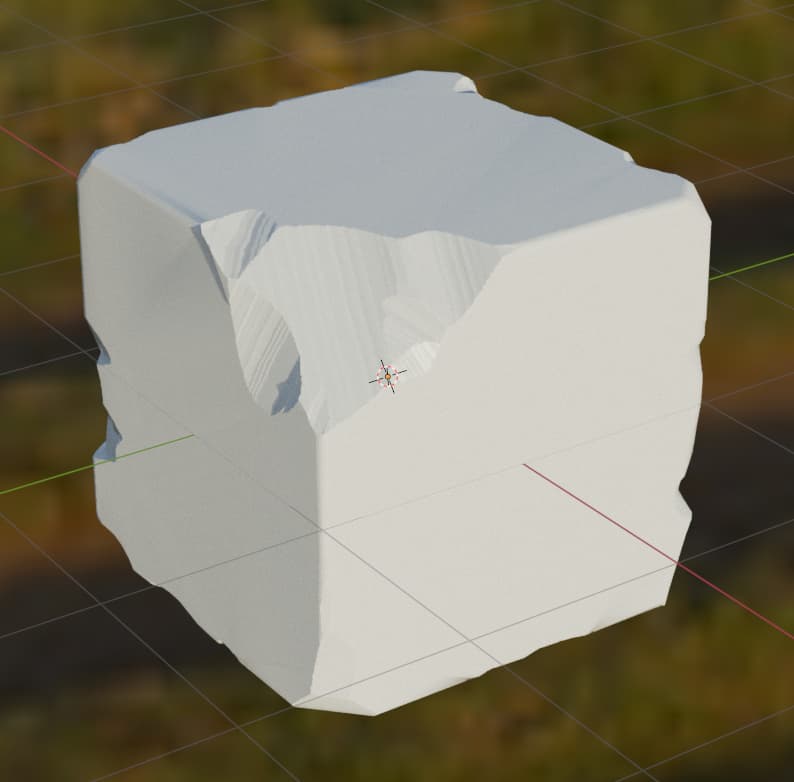

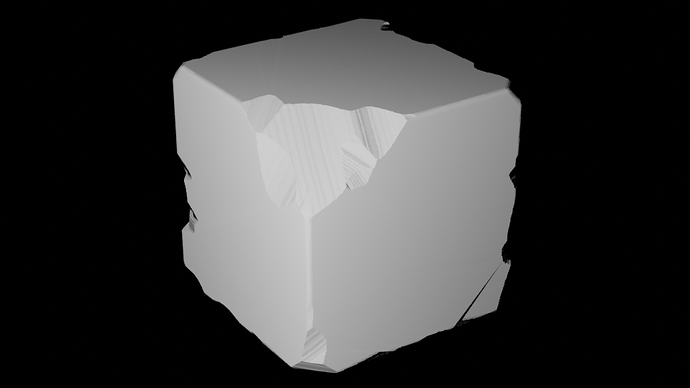

To demonstrate the issue, I have made the following example. It’s a high-poly mesh baked onto a decimated version of itself. A bit of an extreme example, and not best practice, but not unlike what might come out of a photoscan. The blend file can be found here.

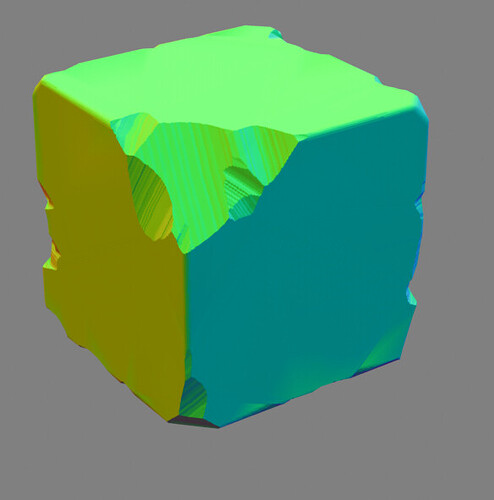

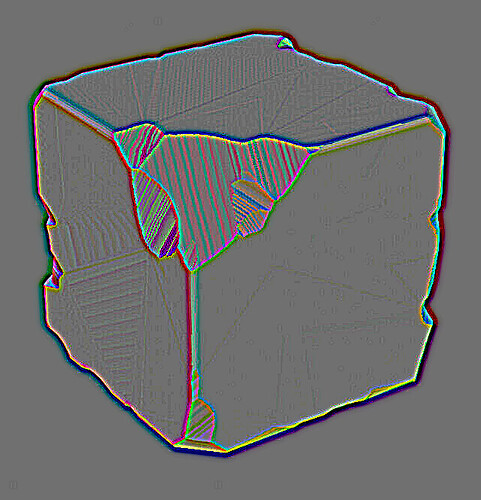

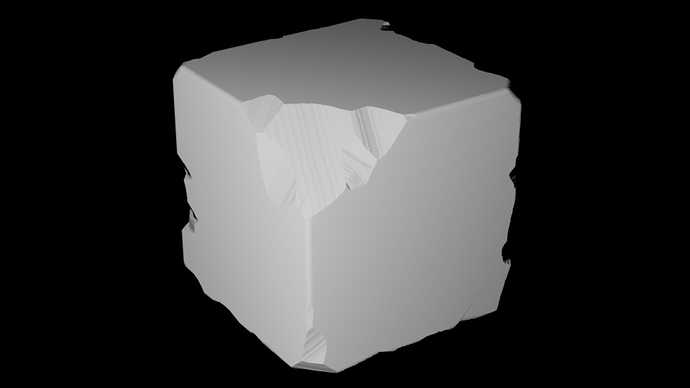

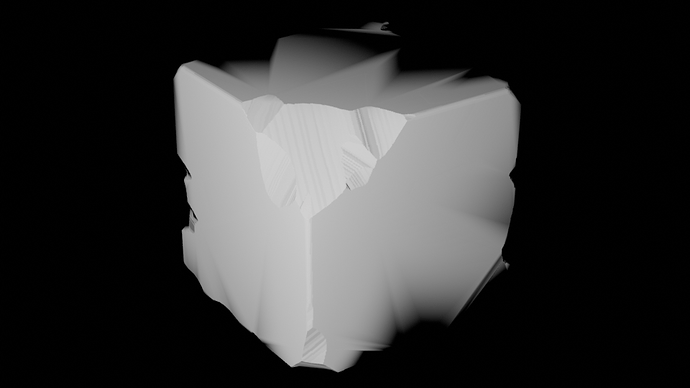

The normal and position passes look clean. Yet the shading result looks wrong, and while this is currently by design, I think it would be worthwhile to reconsider this design so that it aligns better with real-world scenarios.

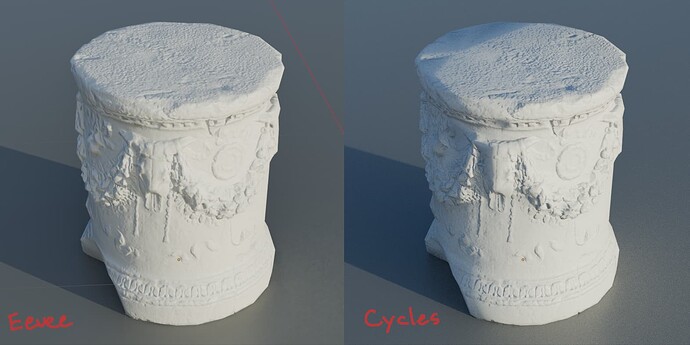

To demonstrate that this is not a hypothetical issue, here’s an example with a photogrammetry asset from Polyhaven:

(notice the lack of definition of surface details)

And from Quixel:

(notice the artifact-y stripes along the top)

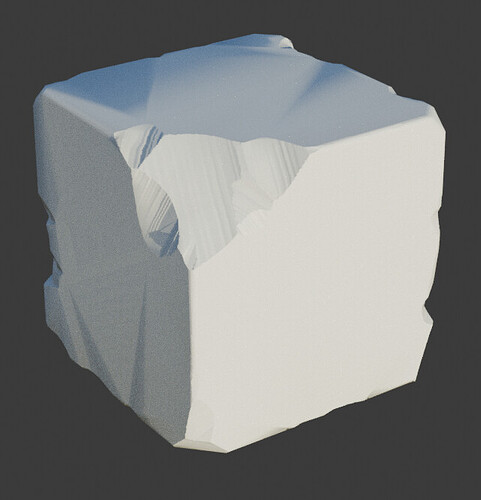

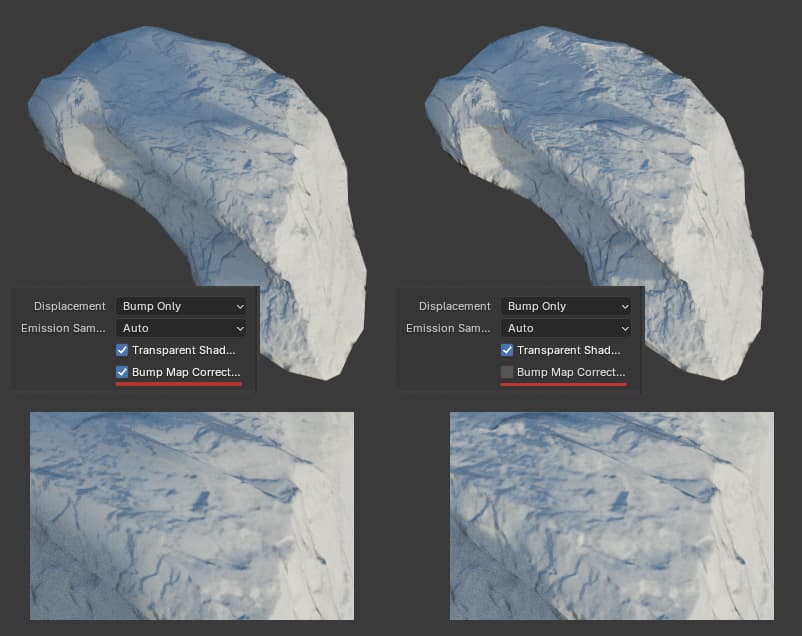

The Cycles result suffers from two problems:

First, Bump Map Correction. A recently added toggle allows us to disable this correction. With the option enabled, the applied normal map looks wrong to me: the surface is only ever darkened, where normals point away from the light source. With the option disabled, the surface looks more natural, with highlights where the normals are bent towards the light.
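As a rough illustration of why such a correction can only darken, here is a sketch of the bump shadowing factor described in Chiang et al., "Taming the Shadow Terminator" (2019). That Cycles' Bump Map Correction uses this exact formula is an assumption on my part; the function name and test vectors are made up for the example.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def normalize(v):
    length = math.sqrt(dot(v, v))
    return tuple(x / length for x in v)


def bump_shadowing_term(geometric_normal, shading_normal, to_light):
    """Attenuation factor in [0, 1]; assumed form, taken from the paper."""
    denom = dot(shading_normal, to_light) * dot(geometric_normal, shading_normal)
    if denom == 0.0:
        return 0.0
    g = dot(geometric_normal, to_light) / denom
    if g >= 1.0:
        return 1.0  # no correction applied
    if g < 0.0:
        return 0.0  # fully shadowed
    return -g ** 3 + g ** 2 + g  # smooth falloff between 0 and 1


geometric_normal = (0.0, 0.0, 1.0)
to_light = normalize((1.0, 0.0, 0.1))  # light grazing the real geometry

toward_light = normalize((0.5, 0.0, 1.0))   # normal map bends towards the light
unperturbed = geometric_normal              # normal map leaves the normal alone

factor_toward = bump_shadowing_term(geometric_normal, toward_light, to_light)
factor_plain = bump_shadowing_term(geometric_normal, unperturbed, to_light)
print(factor_toward, factor_plain)
```

The factor never exceeds 1, so it can suppress a highlight that the normal map would otherwise produce, but it can never add one. In this example the normal bent towards a grazing light is attenuated noticeably, while the unperturbed normal is left alone.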

This is only part of the solution though. The second problem, possibly related to the shadow terminator, causes dark spots to show up where vertex normals deviate strongly, as shown on the top of this object. This can be mitigated by splitting the mesh by edge and baking an object-space normal map instead. That is not a viable solution for users in practice, but it shows what the result could look like.

I’m diving into the Cycles source to see if I can spin up a proof of concept for a fix : )