Firstly, apologies: I’m long-winded, which is one reason I post so sparingly.

Yeah, the bump node is really not very easy to use, I can confirm that much. For one, “distance” isn’t immediately clear: in practice it has a similar effect to strength, and new users struggle to tell the two apart. They seem to mostly be two adjustment sliders: strength flattens the result toward neutral grey, and distance increases the output intensity of the normal map values.
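To make the difference between the two sliders concrete, here is a rough sketch of how they appear to interact. It assumes distance scales the height gradient and strength blends between the flat normal and the perturbed one; `bump_normal` and its parameters are hypothetical names for illustration, not Cycles code.

```python
import math

def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def bump_normal(dh_du, dh_dv, strength, distance):
    """Tangent-space normal from a height gradient (hypothetical helper).

    Assumption: distance scales the height gradient, and strength blends
    between the flat normal (0, 0, 1) and the fully perturbed normal.
    """
    flat = (0.0, 0.0, 1.0)
    perturbed = normalize((-distance * dh_du, -distance * dh_dv, 1.0))
    s = min(max(strength, 0.0), 1.0)  # keep the blend factor in [0, 1]
    blended = tuple(s * p + (1.0 - s) * f for p, f in zip(perturbed, flat))
    return normalize(blended)
```

In this model, strength 0 always returns the flat normal, while raising distance tilts the normal further. That overlap is probably why the two sliders feel so similar in practice.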

I have an interest in the various parts of Blender that “are suboptimal but known to have few true solutions”, so I’ve been following this for a good while, finding little workarounds here and there (none of them easy to pull off or a reasonable amount of work).

The discussion seems to be going back and forth between “this is an issue that multiple biased solutions exist for” and “this is known and intended behavior; it’s a limitation of normal maps, and other path tracers do the same thing”. I don’t think either viewpoint should be taken as gospel. These things should be left to the user to decide. That’s why we have boxes to enable biased things, and why the software already has some “biased but almost unbiased” defaults.

Notably, the suggestion to “just use the displacement input and ignore normals in things like the Principled shader” is, to me, just as restrictive as Alaska’s geometry normal replacement, which breaks down once normal maps are used with multiple shaders.

We should begin discussing functional alternatives, solutions, and algorithms to solve these things. They’re issues in path tracing at large, but they have easy biased solutions, and there are probably hard or unoptimized unbiased solutions to be found.

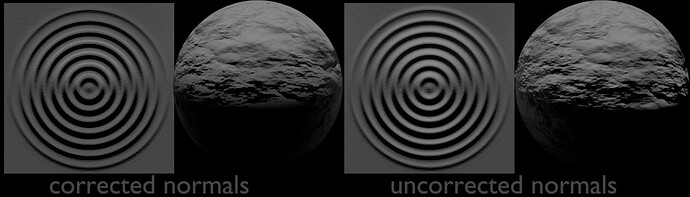

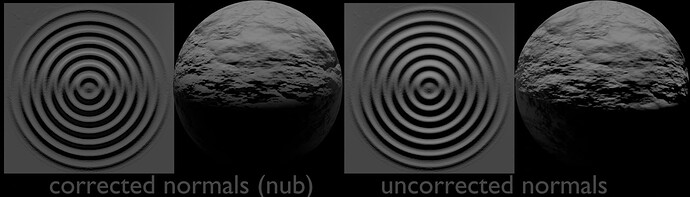

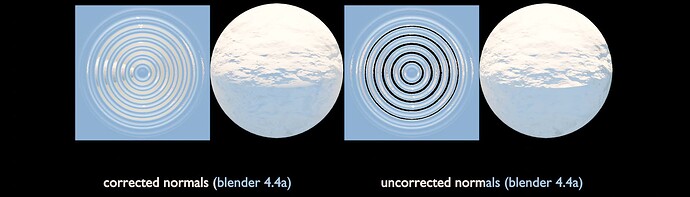

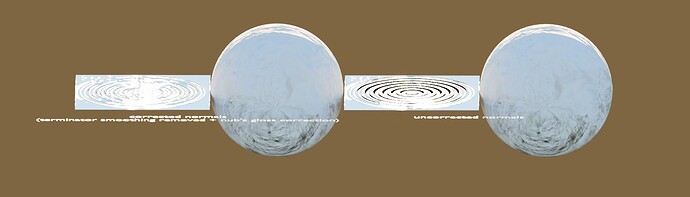

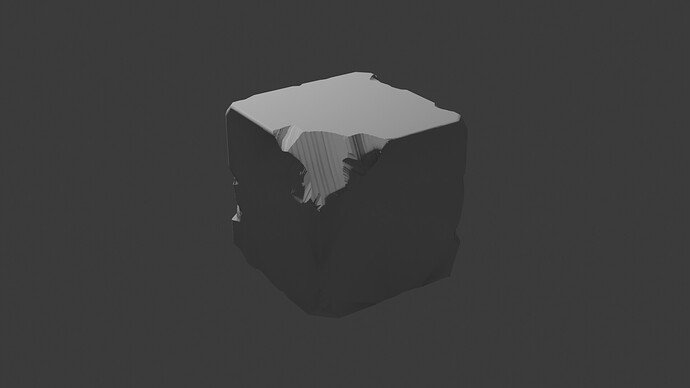

At the moment, it seems Blender’s solution is to limit the angle at which rays may reflect, to ensure normal maps don’t send rays through meshes. Anything past a certain angle seems to simply be given a bad normal. That works for things that don’t have deep normals to begin with, for non-ideal geometry, and for very detailed normals that interact with a gentle terminator.
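As an illustration of what this kind of correction can look like, here is a minimal sketch that bends the shading normal toward the geometric normal until the mirrored ray points away from the surface. It is one possible approach and not Cycles’ actual algorithm; `correct_normal`, `min_cos`, and the bisection are assumptions made for the example, and it assumes the shading normal is not exactly opposite the geometric normal.

```python
import math

def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def reflect(incoming, normal):
    """Mirror an incoming direction (pointing toward the surface) about normal."""
    d = dot(incoming, normal)
    return tuple(i - 2.0 * d * n for i, n in zip(incoming, normal))

def blend(shading_n, geom_n, t):
    """t = 0 gives the shading normal, t = 1 gives the geometric normal."""
    return normalize(tuple((1.0 - t) * s + t * g for s, g in zip(shading_n, geom_n)))

def correct_normal(incoming, shading_n, geom_n, min_cos=0.01, steps=32):
    """Bend shading_n toward geom_n until the reflection leaves the surface."""
    if dot(reflect(incoming, shading_n), geom_n) >= min_cos:
        return shading_n  # reflection already points away from the mesh
    lo, hi = 0.0, 1.0
    for _ in range(steps):  # bisect for a small blend factor that is valid
        mid = 0.5 * (lo + hi)
        if dot(reflect(incoming, blend(shading_n, geom_n, mid)), geom_n) >= min_cos:
            hi = mid
        else:
            lo = mid
    return blend(shading_n, geom_n, hi)

incoming = normalize((1.0, 0.0, -0.2))   # grazing ray travelling along +x
geom_n = (0.0, 0.0, 1.0)
steep_n = normalize((1.0, 0.0, 1.0))     # normal map tilts the normal by 45 degrees
fixed_n = correct_normal(incoming, steep_n, geom_n)
```

Here `steep_n` reflects the ray into the mesh, so the sketch replaces it with a flatter normal. That is exactly the “correction” that throws away the detail the normal map was meant to add.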

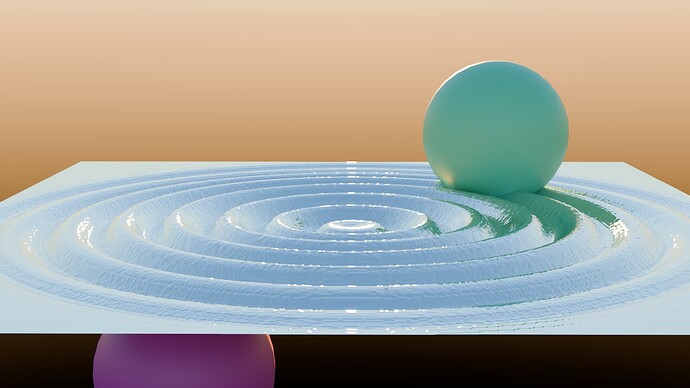

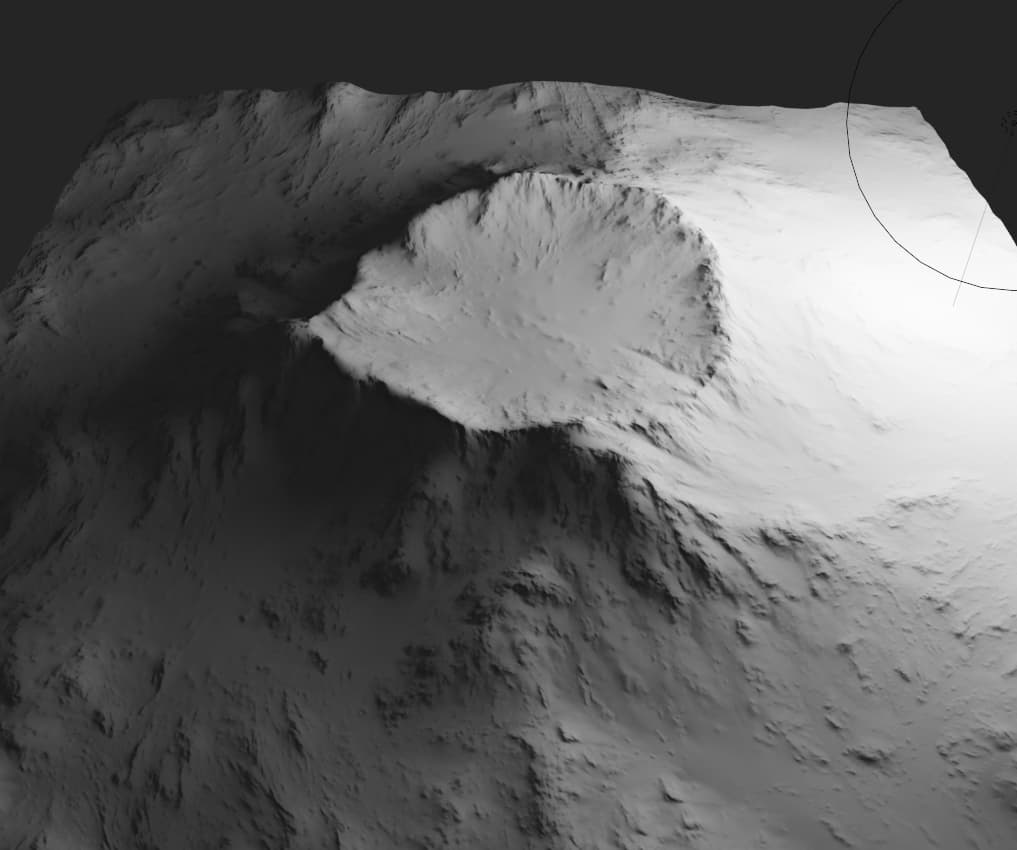

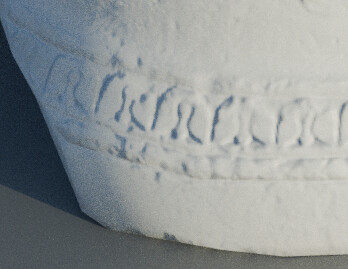

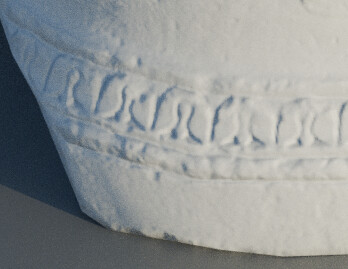

…but while this solution works for things like dents, wood grain, and uneven paint, normals are clearly used for everything from stucco-style walls and tree bark to engravings and panel lining, all of which tend to exceed the 30-degree angle that seems to trigger “correction”.

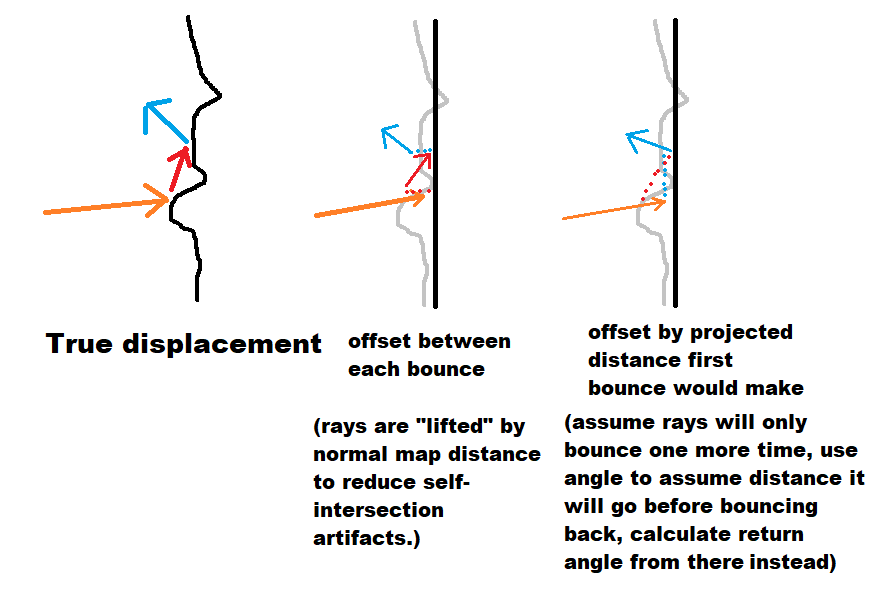

For example, a trick that games with a path-traced mode use is ray offsetting. It’s not unlike what Cycles does for volumes or baking. Basically, when a ray intersects, you pull the ray up above the surface a little, and then allow it to continue. If it was going to self-intersect, it will now hit the surface again. The actual texel it hits will likely be incorrect, but it will be in the correct direction, and because a shallower bounce angle will go farther, it should have some semblance of coherence. There are a number of variations, so I’ll just draw some of them.

Offsetting between each bounce is pretty simple, but it can result in light leakage or black spots where things are too close to each other. First-bounce projection does not have that issue but, as the name implies, cannot manage the more extreme cases with 2+ bounces that result from very vertical normal maps. Both would probably be noisy, too.
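The basic offsetting idea can be sketched on a flat surface at z = 0. This is only a toy model under stated assumptions: the function names and the 0.01 offset are made up for illustration, and a real renderer would intersect the actual scene geometry rather than a plane.

```python
def offset_bounce_origin(hit_point, geom_n, offset):
    """Lift the bounce ray's origin off the surface along the geometric normal."""
    return tuple(p + offset * n for p, n in zip(hit_point, geom_n))

def rehit_flat_surface(origin, direction):
    """Return where a ray re-hits the plane z = 0, or None if it escapes upward.

    The direction does not need to be normalized for this intersection.
    """
    if direction[2] >= 0.0:
        return None
    t = -origin[2] / direction[2]
    return tuple(o + t * d for o, d in zip(origin, direction))

hit = (0.0, 0.0, 0.0)
up = (0.0, 0.0, 1.0)
origin = offset_bounce_origin(hit, up, 0.01)

# Two bounce directions that a steep normal map has pushed below the surface.
steep = rehit_flat_surface(origin, (1.0, 0.0, -1.0))
shallow = rehit_flat_surface(origin, (1.0, 0.0, -0.1))
```

With the same offset, the shallow bounce lands about ten times farther from the original hit than the steep one, which is the rough coherence described above. The offset size also controls how far light can leak past nearby geometry.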

Another idea I’ve mentally toyed with is adding displacement data to the alpha channel. That would theoretically give Blender the data it needs to treat a normal as a displacement, but we need a new bake workflow before we play with that.
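For illustration only, packing a height value next to the normal could look something like the sketch below. The 8-bit RGBA layout and the function names are hypothetical; nothing like this exists in Blender’s bake workflow today.

```python
import math

def pack_texel(normal, height):
    """Pack a unit normal (RGB) and a height in [0, 1] (alpha) into 8-bit RGBA."""
    r, g, b = (round((c * 0.5 + 0.5) * 255) for c in normal)
    a = round(min(max(height, 0.0), 1.0) * 255)
    return (r, g, b, a)

def unpack_texel(texel):
    """Recover an approximate unit normal and height from an RGBA texel."""
    r, g, b, a = texel
    n = tuple(c / 255 * 2.0 - 1.0 for c in (r, g, b))
    length = math.sqrt(sum(c * c for c in n))
    return tuple(c / length for c in n), a / 255

texel = pack_texel((0.6, 0.0, 0.8), 0.25)
normal, height = unpack_texel(texel)
```

The round trip loses a little precision to quantization, and 8 bits of height is coarse, so a real format would likely want a higher bit depth.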

I don’t think this is a legitimate worry, and I mean that in the best way. “Normal correction” and normal maps in general already inject bias, so I think the most responsible thing to do at this point is to ensure normal maps work as close to intended as possible, even if they inject some bias and aren’t totally accurate.

I really want to see your ideas described or in action, and, as in this Stack Overflow answer, there do seem to be acceptable approximations. I can use displacement, but not for everything. That would be far too heavy: most users don’t have giant render farms and need to use the CPU to handle displacement. Using a normal map should in itself signal that an approximation is acceptable.

But most importantly:

There are solutions out there, ones that don’t by default involve “correcting” a user’s intentional choice of material, normal map, or geometry normals,

and for that reason, I think normal correction should be disabled by default.