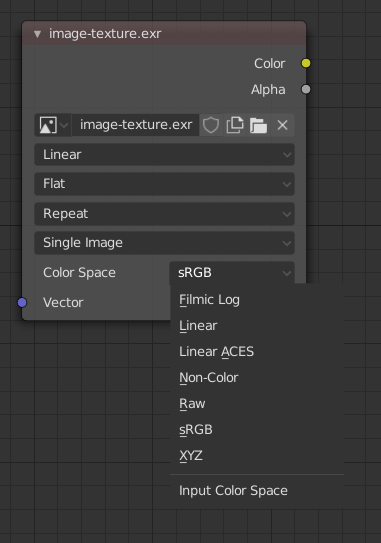

In the material image texture nodes there are options for Linear, Non-Color and RAW color spaces. I was wondering - what’s the difference? I understand that Non-Color and Linear are pretty much the same, except that Non-Color is never treated as color, to avoid any possible color transforms. But why is there a RAW option? It seems to me it’s unnecessary and only causes confusion. Am I missing something?

Whatever you choose here as the color space is just a semantic label for the data stored in the texture channels; it defines how to interpret that data. Non-Color is meant for storing e.g. vector components in the channels. Raw is meant for color values that should be treated “as is”.

Aren’t vector components stored in an image as Non-Color Data meant to be treated ‘as is’? Having inconsistency in color management settings and then in image texture nodes is probably confusing for new users. Why have different names for the same thing duplicated? As if color management was too easy to understand as it is…

If your image is saved as a high dynamic range image like .EXR etc., then the color space for your image should be changed to RAW. If it’s set to Linear, I don’t think you can take advantage of the high dynamic range, so things during compositing, like color grading, won’t be as effective. Furthermore, some files are saved in 32 bit format, like normals, which need to be set to RAW to be read correctly.

This is not true, as far as I know. 32bit EXR files are saved without any color transforms. I experience this in my work daily - I like the Filmic transform; however, if I save my renders as EXR it is not applied, as 32bit EXRs are saved with linear data in them.

Ok, I see what you mean. Yes, currently both are treated “as is”, but they are more precise descriptions of what’s stored inside the image, and Blender may use that info. In terms of their current effect on how Blender treats the data, they are identical, yes. But it’s like calling margarine butter.

Yes I think for 32bit that is true.

Really? Then what is the point in saving them as EXR if it’s just going to be linear? Isn’t the point to store more data for compositing? Honest question.

You get a whole lot more data with 32 bits for editing, which avoids quality loss with extreme edits (not many tools can do that, though), as well as the possibility to do compositing externally with render passes. Render passes cannot go through any color transforms if they are to work properly: they need to be linear values and should only go through color transforms after compositing, so you would run into issues if you did it with other formats or a lower bit depth. You can also do color grading outside Blender with the whole data coming out of the render process, even though 16 bits should really be enough for that in the vast majority of cases. It is a way to save all the data that the render process generates, unchanged. On top of that, the OpenEXR format is just amazing as well - see: https://blender.stackexchange.com/questions/148231/what-image-format-encodes-the-fastest-or-at-least-faster-png-is-too-slow and https://www.exr-io.com/features/ It supports Cryptomatte and you can use it in Photoshop! That alone literally saves me hundreds of hours of work.

Sure, I know that. What I’m confused about is that I thought Linear was restricted to 8 bit, and RAW could be 16-32 bit. So if you had a 16 bit EXR image but set the color space to Linear, it wouldn’t read information in the 16 bit range? Or am I terribly wrong on this?

Linear and gamma-corrected are the opposites here. Raw says nothing about how the values inside have to be interpreted.

Information in 8 bits is not usually saved as linear values (it can be, but that would not be a good idea in most cases). Instead it is saved according to the way human eyes work (sRGB, gamma 2.2 and so on…). We see dark tones better: more of the dynamic range we perceive is dark. So if the data is saved linearly and only 8 bits are saved, we lose information in the tones we see best and keep information we don’t distinguish well. When an 8 bit linear image is then converted to the color space of the display we are viewing it on (mostly sRGB), the image loses a lot of quality. That should be true with 16 bits to some extent as well, even though 16 bits is a lot more.
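To put rough numbers on this, here is a small Python sketch. It assumes the standard piecewise sRGB transfer function and an arbitrary cutoff of 10% linear intensity for what counts as "dark"; the function names are made up for illustration. It counts how many of the 256 codes in an 8 bit channel describe that dark range with linear storage versus sRGB storage.

```python
def srgb_decode(encoded: float) -> float:
    """sRGB-encoded value (0..1) -> linear light (0..1), standard piecewise curve."""
    if encoded <= 0.04045:
        return encoded / 12.92
    return ((encoded + 0.055) / 1.055) ** 2.4


def dark_codes_linear(threshold: float) -> int:
    """8-bit codes below `threshold` when the file stores linear values directly."""
    return sum(1 for code in range(256) if code / 255 < threshold)


def dark_codes_srgb(threshold: float) -> int:
    """8-bit codes whose decoded linear value is below `threshold` for sRGB storage."""
    return sum(1 for code in range(256) if srgb_decode(code / 255) < threshold)


DARK = 0.1  # assumption: "dark" means below 10% linear intensity

linear_count = dark_codes_linear(DARK)
srgb_count = dark_codes_srgb(DARK)
print(f"linear storage: {linear_count} of 256 codes describe dark tones")
print(f"sRGB storage:   {srgb_count} of 256 codes describe dark tones")
```

Under these assumptions the sRGB-encoded channel spends more than three times as many codes on the dark range, which is why 8 bit images are normally not stored linearly.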

This is a huge topic… It’s hard to talk about it without going off topic. I just wanted to ask about this RAW and Non-Color duplication on the developer forums, either to get clarification from a developer or to draw somebody’s attention to the fact that this is an area that needs improvement to make it clear and user friendly for new Blender users. It seems to me the RAW option shouldn’t be there. I think it confuses people without any serious reason.

Yeah, sorry, my knowledge could be better and I was trying to clear up things I didn’t understand, which is off topic. But I’m still pretty sure there is a difference between Non-Color and RAW. I’ve heard professionals say that if you save normals in the 8-16 bit range you should set the color space to Non-Color, but if it’s 32 bit you should set it to RAW. I would link a source, but it’s from a paid course on CG Cookie about making a door.

With 8 bits per channel we can store 256 different intensity values. Because that isn’t really much, the space was stretched and squished in different areas by the gamma correction to make better use of these values. But the problem is that simple math like adding no longer works. Linear space values have not been changed; they are still completely linear. A higher bit depth allows up to 4 billion intensities per channel (at 32 bits). That way we can much better store the difference between e.g. a room light and the sun. In 8 bits that would not be possible. So linear space is much better for mathematical operations like those done in rendering, and a high bit depth gives us the room to store a wide range of values. To my understanding, Raw and Non-Color are just there to indicate whether there is color in there or not. I would leave it in there. It’s just a descriptor.
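A quick way to see the "adding no longer works" part is the Python sketch below. It assumes the standard piecewise sRGB curve as the gamma correction (a plain gamma 2.2 would show the same effect), and the two light values are made-up numbers.

```python
def srgb_encode(linear: float) -> float:
    """Linear light (0..1) -> sRGB-encoded value (0..1)."""
    if linear <= 0.0031308:
        return 12.92 * linear
    return 1.055 * linear ** (1 / 2.4) - 0.055


def srgb_decode(encoded: float) -> float:
    """sRGB-encoded value (0..1) -> linear light (0..1)."""
    if encoded <= 0.04045:
        return encoded / 12.92
    return ((encoded + 0.055) / 1.055) ** 2.4


# Hypothetical contributions of two lights to one pixel, in linear light.
light_a = 0.2
light_b = 0.2

# Correct: light adds up in linear space.
sum_linear = light_a + light_b

# Incorrect: adding the gamma-corrected values, then converting back to linear.
sum_encoded = srgb_encode(light_a) + srgb_encode(light_b)
sum_encoded_as_linear = srgb_decode(sum_encoded)

print(f"added in linear space:          {sum_linear:.3f}")
print(f"added in gamma-corrected space: {sum_encoded_as_linear:.3f}")
```

With these numbers, adding the encoded values gives a pixel more than twice as bright as the physically correct sum, which is why rendering and compositing math is done on linear values.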

Ok sorry I’m just going to pick your brains one last time. Isn’t sRGB only capable of being in 8-16 bit depth? I’m fairly certain there is no 32-bit sRGB right? In Photoshop, for instance, if you bring in a 32 bit exr it will ask you for permission to convert it to something lower, right?

As for non-color isn’t it the same, only 8-16 bit depth? That’s why we need raw which goes to 32 bit? I just really need to make sure I’m not incorrect in my assumptions here. Or does non-color go up to 32?

sRGB is a definition of a color space. I’d say the bit depth has nothing to do with that. So there should be a 32bit sRGB space, just more fine-grained. But sRGB was extensively used to describe the capabilities of monitors. Those have a LUT with a custom bit depth that is indeed much lower than 32 bits per channel. But in theory this should not be restricted either.
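As a rough illustration of "same color space, just more fine-grained", the Python sketch below stores one already-encoded value at two bit depths. It assumes plain unsigned integer storage with made-up helper names; 32 bit EXR files store floating point values instead, which this sketch does not cover.

```python
def levels(bits: int) -> int:
    """Number of distinct codes per channel for an unsigned integer of `bits` bits."""
    return 2 ** bits


def step_size(bits: int) -> float:
    """Distance between neighbouring codes when 0..1 is spread over all codes."""
    return 1 / (levels(bits) - 1)


def quantize(value: float, bits: int) -> float:
    """Store `value` (0..1) as the nearest integer code, then read it back."""
    top = levels(bits) - 1
    return round(value * top) / top


encoded = 0.5  # some sRGB-encoded channel value; the curve is the same at any bit depth

for bits in (8, 16):
    stored = quantize(encoded, bits)
    print(f"{bits:>2} bit: {levels(bits)} levels, step {step_size(bits):.7f}, "
          f"stored as {stored:.7f}")
```

The transfer curve never enters the picture here: the bit depth only changes how finely the encoded value is sampled.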

But let me take a look.

I know bit depth and color space are different, but I’m fairly certain some color spaces are most commonly associated with certain bit depths, no? I know sRGB is commonly associated with 8-16, and OpenEXR is associated with 16-32-64. You could get a 32 bit depth with sRGB, but it’s not common, I don’t think. It has to do with picking the right tools for a specific job, right? 32 bit EXR is good for compositing because you get a bunch of channels saved in the file and you have a ton of color information, so 8-bit EXR, while possible, is beside the point, right?

Yes, that’s all correct. You just have to distinguish between a file format and a color space. An image file format also has other aspects that determine whether it fits a problem: color depth, compression (lossy or lossless), computational complexity of the compression algorithm used, alpha channel support, layer support, licensing terms, how common it is, whether hardware acceleration for decoding exists, and much more. sRGB is a color space, linear or gamma-corrected are features of a color space, and OpenEXR is a file format.

Right, I see that now.

This makes sense to me now. Thank you!

So back to the original question - am I the only one who thinks having the same thing called “RAW” and “Non-Color” in the Color Space list of the image texture node makes no sense at all and only confuses people, for no serious reason, about a subject that is already complex?