I am using a Surface Book 2 with touch screen and pen enabled.

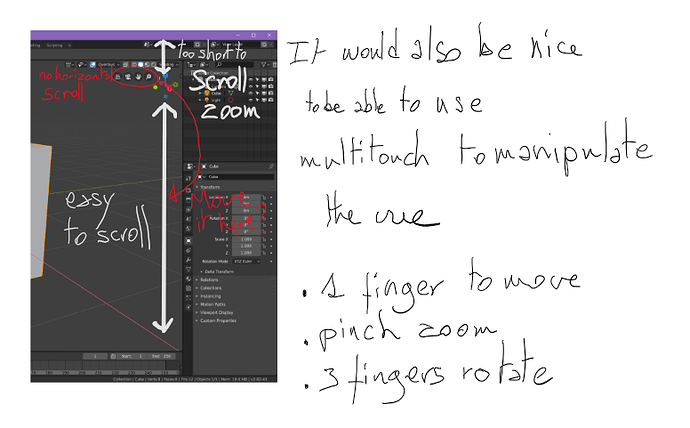

I couldn’t find a way to manipulate the view with fingers.

An option should be added to rotate, zoom, and move the view with fingers, and to manipulate vertices or objects with the pen.

In my opinion, the 3D view buttons are not well placed for touch devices.

With a mouse the scrolling becomes infinite, so there is no problem, but with touch or pen the screen borders limit the range of movement.

This is something I would also really appreciate. It depends on how the touch interactions have been built into Blender: whether they just emulate a cursor or whether there is special handling of touch input. I’ve worked with touch-driven UI, and the math gets quite complicated quite fast.

I find it funny to read that multitouch is difficult to implement, when we are talking about such a customized program with functionality as crazy as 3D compositing with multiple render engines.

But I can understand…

Why exactly is it so difficult?

Isn’t it just counting how many fingers touch the screen, measuring the distance between the points, and adding some event listeners?

As a web developer I could do it, but in Python I don’t know where to start.

And I do not really understand where this code should be written.

Inside the binary or are there some .py files I could play with?

As far as I’m aware, Blender isn’t written in an event-driven paradigm. This is one layer of complexity to work around. Another is how the touch API is exposed to Blender. On the web it is easy because each browser vendor has written a bunch of code to make our lives easier, interfacing with the OS’s APIs and ensuring the data is all consistent with the JS specification. In Blender, I’m not sure; this sort of platform-independent API might not exist.

There are also implementation details. Getting just the basic functionality might not be too hard, but there is a lot to consider. Do you want to also allow touch and drag for box-select in order to be in line with mouse controls? What if your gesture starts on a clickable element within the 3D viewport? Do you click it? What if the gesture only moves a few pixels before ending? Do you enact a click? Where? Where the gesture started, or where it ended, or maybe somewhere in the middle? What happens if you start with one finger and then add another finger? Do you start scaling? Do you scale by the distance between the fingers and translate by the difference in the average location? What if the second finger is outside the 3D viewport? What about if it is over a button? When you’re scaling, should you scale such that the middle of the screen stays where it is? The center of the touches? The 3D cursor? Should that be configurable? Same issue when rotating. What do you rotate around?
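To make a couple of those questions concrete, here is a rough sketch in plain Python of the arithmetic only. It is not Blender code and does not touch any Blender API. The names `Point`, `classify_single_touch`, `two_finger_delta`, and the `TAP_SLOP_PX` threshold are all made up for illustration, and the choices baked in (tap if the finger moved less than a few pixels, scale by the ratio of finger distances, pan by the shift of the midpoint) are just one possible set of answers.

```python
import math
from dataclasses import dataclass
from typing import Tuple

# Hypothetical threshold: a touch that moves no more than this many pixels
# is treated as a tap rather than a drag. The value is an assumption.
TAP_SLOP_PX = 8.0


@dataclass(frozen=True)
class Point:
    x: float
    y: float


def distance(a: Point, b: Point) -> float:
    """Euclidean distance between two touch points, in pixels."""
    return math.hypot(b.x - a.x, b.y - a.y)


def midpoint(a: Point, b: Point) -> Point:
    """Average location of two touch points."""
    return Point((a.x + b.x) / 2.0, (a.y + b.y) / 2.0)


def classify_single_touch(start: Point, end: Point) -> str:
    """Return 'tap' if the finger barely moved, otherwise 'drag'."""
    return "tap" if distance(start, end) <= TAP_SLOP_PX else "drag"


def two_finger_delta(
    prev: Tuple[Point, Point], curr: Tuple[Point, Point]
) -> Tuple[float, float, float]:
    """Return (scale, pan_x, pan_y) between two samples of a two-finger gesture.

    scale is the ratio of the current finger distance to the previous one;
    pan is how far the midpoint of the two fingers moved.
    """
    prev_dist = distance(prev[0], prev[1])
    curr_dist = distance(curr[0], curr[1])
    # Avoid dividing by zero if both fingers reported the same position.
    scale = curr_dist / prev_dist if prev_dist > 0 else 1.0
    prev_mid = midpoint(prev[0], prev[1])
    curr_mid = midpoint(curr[0], curr[1])
    return scale, curr_mid.x - prev_mid.x, curr_mid.y - prev_mid.y
```

Even this tiny version dodges most of the hard parts: it says nothing about what is under the fingers, which point the scale is anchored to, or what happens when the finger count changes mid-gesture.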

I’ve built a multi-touch interaction on the web and faced similar issues. It isn’t impossible, but it certainly isn’t trivial. There are just a lot of questions to answer.

I’m also not sure where this would be in the source code. I think this really belongs as part of an entire touch-friendly interface redesign/redevelopment. Currently, swiping to scroll just toggles expandable sections, tapping twice within about a second simulates a click followed by a ‘hover’, expandable tools in the workbench are entirely inaccessible by touch, etc. That being said, if anyone has any pointers on where to look, I might take a crack at it some time and see how far I get.

Me too! Where to start? Which file, please?

Let’s do it, Smilebags!