The sky color is “more correct” in a sense in the spectral image, though it’s a super crude approximation regardless. As said, that’s not entirely comparable because I’m using features to achieve this which I can’t even really attempt to match in the RGB version. If I actually use the same material colors, there’s gonna be less of a difference (though not none).

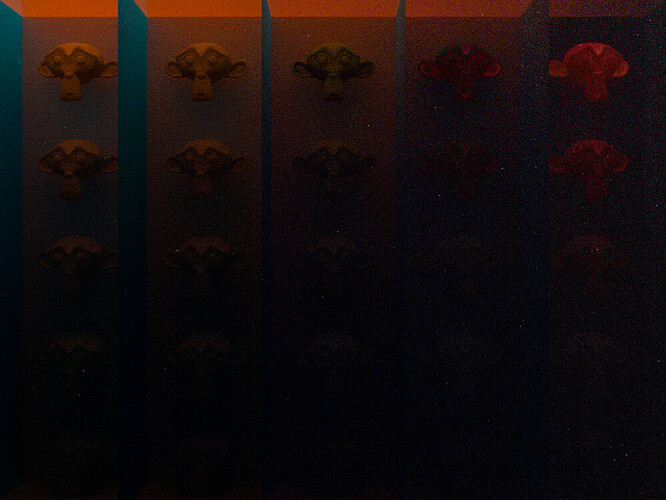

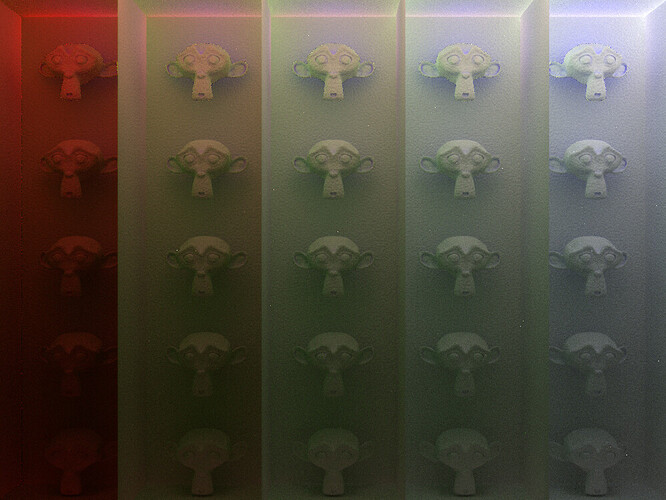

Ok here is a fair three way comparison using sun lamps. Two of these images you have already seen:

All of them use the Rayleigh scattering spectrum (at least approximately) as the scattering spectrum.

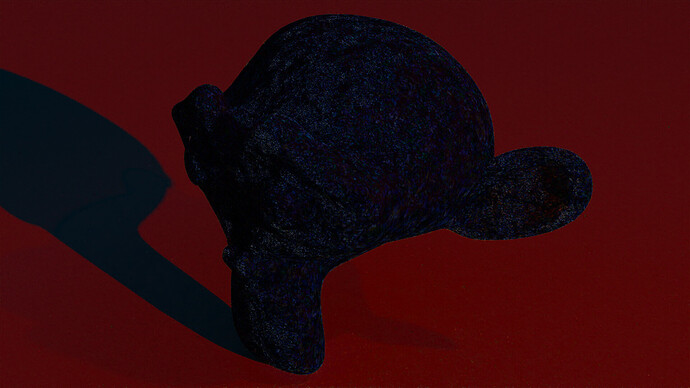

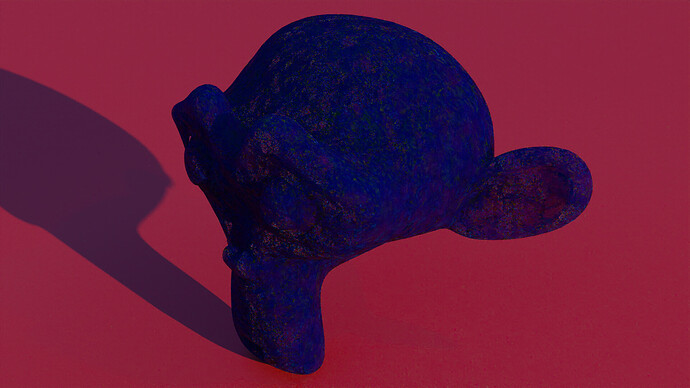

Pure RGB:

Spectral (backward compatible):

Spectral:

It’s clear that the spectral version, even with backward compatible materials, is slightly paler. The reason is probably, at least partially, that the orange ground color still manages to reflect more of the blue light than in the case of RGB rendering. Using actual spectra for the colors only makes that more pronounced though, as can be seen in the third image.

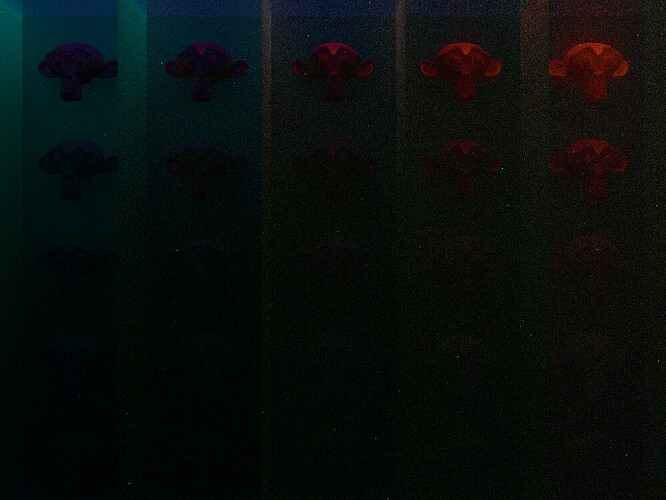

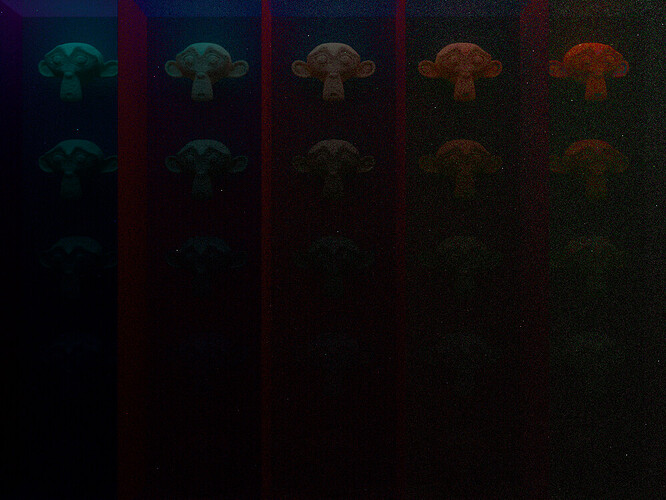

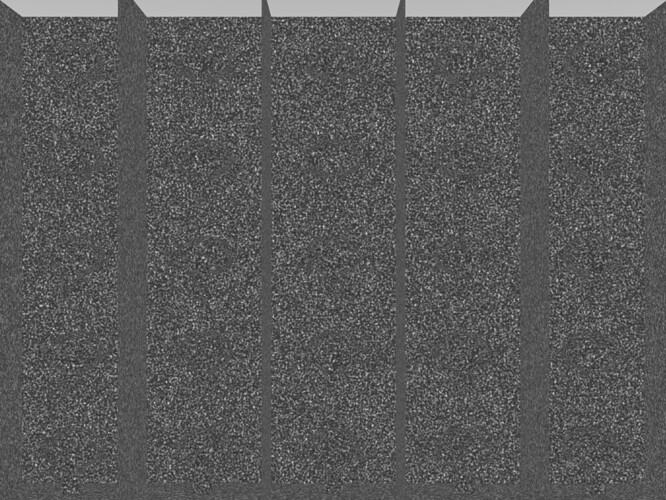

And here are the three way comparisons of these to make the differences even more obvious:

Diff RGB - Spec backward:

Diff Spec - Spec backward:

Diff RGB - Spec:

Here you can see that, if you use backward compatible materials (i.e. only RGB colors), Suzanne is actually really close. The differences are pretty even between R G B with actually quite a bit of difference in the green and pretty little in the red. She’s pretty dark too. Though not actually super close to black.

Meanwhile, the ground plane is way different. And clearly primarily in the red. So the difference is mostly NOT that there is more blue reflected (as might be expected due to spectral overlap) but somehow that red is missing. And I think that part is wrong.

The question is where exactly that difference lies tho. It may in fact somehow be an issue with current Cycles.

Meanwhile the two Diffs that involve the spectral (non-backwards compatible) materials both look quite similar, suggesting that these spectra have a bigger influence on the appearance than the fact that it’s rendered using more than three lights. That part is encouraging and expected.

The differences I can see suggest that Spectral also is slightly closer to itself than to RGB Cycles. (The Spec - Spec backward image is slightly darker than the RGB - Spec one.) There also seems to be a hint more red in that one. And very noticeably, one of the shadows, the one from the sky lamp, just doesn’t show up at all in the Spec - Spec backward image, while it’s still discernible in the third image. There also don’t seem to be any green noise spots, or at least they are much rarer / weaker.

Anyway, main takeaway is that Spectral contains significantly less red than RGB Cycles does.

I wouldn’t spend much time on the Sky Texture (Nishita) at this point. Actually I’m figuring out a way to implement Multiple Scattering for the next Sky Texture and maybe also make it instantly compatible with this Spectral branch. We will see, I’m still in the learning process. Meanwhile, again, don’t spend much time on the actual sky model, really I would leave it RGB as is, like the other 2 models.

To be clear, while some of the above tests used the sky texture, in these last few I “faked” a sky by simply using a sun-colored sun lamp with a small angle and a sky-colored sun lamp with a wide (but not too wide) angle. (Something seems wrong with going for the full 180°: the light just renders WAY darker then. I’m guessing it’s an importance sampling issue? It works fine with 120°, and it’s not just a Spectral Cycles problem.)

A somewhat similar experiment. Here the only light source is the glowing star thing. It’s placed in a scattering medium with the Rayleigh scattering spectrum (or an approximation). The color of the star is the 5777K blackbody spectrum (or RGB equivalent) approximating the sun.

(The ground plane is just a regular light grey 0.8 diffuse/ white 1.0 glossy material so it should have no influence on the scene’s hues)

- RGB render is regular cycles (view transform is Standard to make it comparable)

- Spectral match is the exact same scene but in Spectral Cycles

- Spectral scatter only makes the scattering volume spectral

- Spectral star only makes the emission volume spectral

- Spectral full makes both volumes spectral

RGB render:

Spectral match:

Spectral scatter:

Spectral star:

Spectral full:

Quite interesting and a little unintuitive how these colors come together:

- As it turns out, the more realistic scattering spectrum has a big influence on the emitter’s apparent color, shifting it far more towards saturated orange,

- whereas the more realistic blackbody emitter has a big influence on the surrounding colors shifting them far more towards blue.

The reason for the second fact is simple enough: The RGB-approximated blackbody emitter simply doesn’t have a whole lot of blue to scatter away compared to the true spectrum. So of course it could never show up in the distance.

And I suppose similarly, the natural Rayleigh spectrum is more capable of affecting the emitter? It’s a bit more mysterious, to me at least, how that works, but it’s clearly quite important to the overall image.
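For what it’s worth, here is a rough sketch of the two ingredients in this experiment: Planck’s law for the 5777K emitter and the λ⁻⁴ falloff of Rayleigh scattering. It only looks at how much direct light survives a trip through the medium (Beer-Lambert). The optical depth of 1.0 at 550 nm and the three sample wavelengths are assumptions picked for illustration, not values from the scene, and none of this is code from the Spectral branch.

```python
import math

H = 6.62607015e-34   # Planck constant, J*s
C = 2.99792458e8     # speed of light, m/s
K_B = 1.380649e-23   # Boltzmann constant, J/K


def planck(wavelength_nm, temperature_k):
    """Blackbody spectral radiance (W * sr^-1 * m^-3) at one wavelength."""
    lam = wavelength_nm * 1e-9
    exponent = H * C / (lam * K_B * temperature_k)
    return (2.0 * H * C ** 2 / lam ** 5) / math.expm1(exponent)


def rayleigh_relative(wavelength_nm, reference_nm=550.0):
    """Rayleigh scattering strength relative to the reference wavelength."""
    return (reference_nm / wavelength_nm) ** 4


def transmitted(wavelength_nm, temperature_k, optical_depth_at_ref):
    """Direct light left after crossing the medium (Beer-Lambert law)."""
    tau = optical_depth_at_ref * rayleigh_relative(wavelength_nm)
    return planck(wavelength_nm, temperature_k) * math.exp(-tau)


# Hypothetical optical depth of 1.0 at 550 nm, chosen only for illustration.
for nm in (450.0, 550.0, 650.0):
    emitted = planck(nm, 5777.0)
    left = transmitted(nm, 5777.0, optical_depth_at_ref=1.0)
    print(nm, f"scatter x{rayleigh_relative(nm):.2f}", f"kept {left / emitted:.2f}")
```

Short wavelengths are removed from the direct beam much faster than long ones, so the emitter itself drifts towards orange while the scattered-out blue ends up in the surroundings. How strongly that shows depends on how much blue the emitter had to begin with.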

Either way this is a great showcase of how different the results might get using this workflow.

Finally, here is a diff between the RGB version and Spectral match, showing where the differences lie in these renders.

It’s actually very close, especially further away from the star where scattered light dominates. Where there is more direct light, the discrepancies are once again greatest in the red channel but it’s at least a bit more even than previous tests suggested. In the raw images RGB and Spectral match you can clearly see that the star looks a good deal more saturated in the spectral version. This might have to do with the inherently smoother scattering spectrum from RGB upsampling. It’s not as smooth as the Rayleigh spectrum, but it’s smoother than the three lamps spectrum.

In an effort to better understand the color differences between Spectral and regular Cycles as it stands right now, I repeated the Suzanne In A Box experiments with the latest version and also created Diffs for all of them.

All of these images use the Standard view transform rather than Filmic to make them more comparable. Using Filmic would make them look better in principle though.

Additionally, I had a look at various render layers and as has been noted before, there are pretty large discrepancies in some of them.

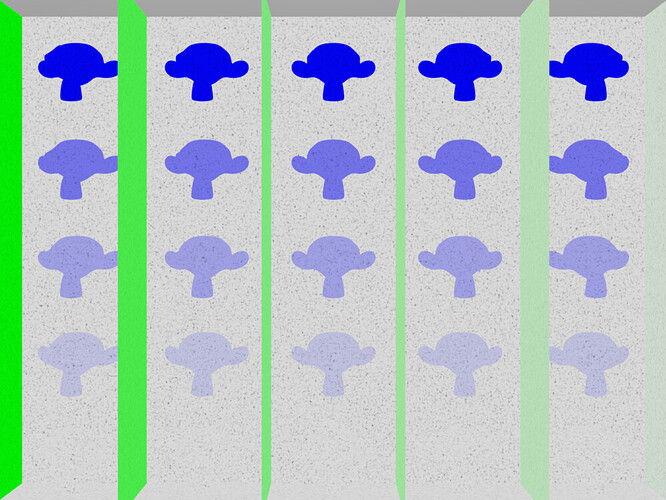

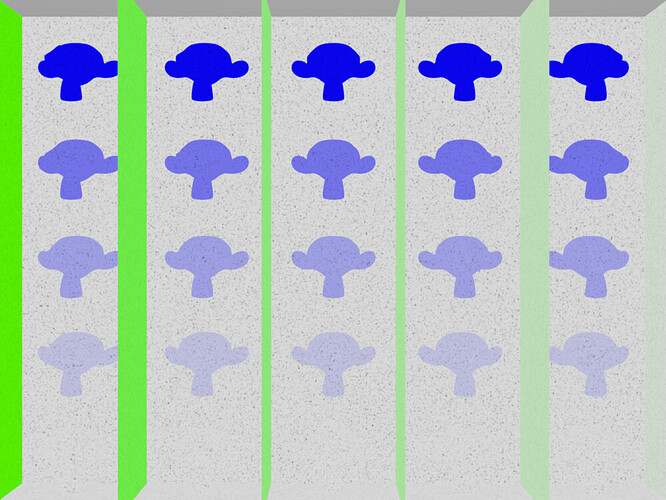

In the following, there are two versions (RGB and Spectral) plus their difference in six variants corresponding to either the ceiling lighting, the side-walls, or the Suzannes being either red, green, or blue.

Here goes:

- Emitter Blue, Walls Green, Suzanne Red:

- RGB:

- Spectral:

- Diff:

- Emitter Blue, Walls Red, Suzanne Green:

- RGB:

- Spectral:

- Diff:

- Emitter Green, Walls Blue, Suzanne Red:

- RGB:

- Spectral:

- Diff:

- Emitter Green, Walls Red, Suzanne Blue:

- RGB:

- Spectral:

- Diff:

- Emitter Red, Walls Blue, Suzanne Green:

- RGB:

- Spectral:

- Diff:

- Emitter Red, Walls Green, Suzanne Blue:

- RGB:

- Spectral:

- Diff:

So, entirely as expected, there are differences in all three channels.

And looking at, for instance, the three rightmost Suzannes in the top row of the first set (there are many more examples) it also seems clear that a lot of the differences are, in particular, in the indirect lighting, which is also expected.

However, comparing all the diffs, it seems to me it’s pretty clear that the biggest differences, even in very varied situations, tend to be in the red channel.
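To put a number on that impression rather than eyeballing the diffs, one could compute the mean absolute difference per channel. This is a minimal sketch, assuming both renders are already loaded as nested lists of `(r, g, b)` floats in the same color space; the function name and the tiny sample data are made up for illustration.

```python
def channel_mean_abs_diff(img_a, img_b):
    """Mean absolute difference per channel between two equally sized images.

    Images are rows of pixels, each pixel an (r, g, b) tuple of floats.
    Both images must use the same color space and view transform.
    """
    totals = [0.0, 0.0, 0.0]
    count = 0
    for row_a, row_b in zip(img_a, img_b):
        for px_a, px_b in zip(row_a, row_b):
            for c in range(3):
                totals[c] += abs(px_a[c] - px_b[c])
            count += 1
    if count == 0:
        raise ValueError("images must contain at least one pixel")
    return tuple(t / count for t in totals)


# Tiny made-up 1x2 "renders", only to show the call; not real data.
rgb_render = [[(0.50, 0.40, 0.30), (0.20, 0.20, 0.20)]]
spectral_render = [[(0.40, 0.39, 0.30), (0.15, 0.20, 0.21)]]
diff_r, diff_g, diff_b = channel_mean_abs_diff(rgb_render, spectral_render)
print(f"R {diff_r:.4f}  G {diff_g:.4f}  B {diff_b:.4f}")
```

Running this over each of the six sets would show whether red really is consistently the largest, or whether it only looks that way.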

It would be great to compare specifically the difference as it appears in direct vs. indirect light for both diffuse and specular rays. Unfortunately, those passes appear to currently be broken in the spectral branch. Here is just one example, for the sixth set (which seems to have the lowest difference of them all):

- Diffuse Color:

- RGB:

- Spectral:

- Diff:

- Diffuse Direct Light:

- RGB:

- Spectral:

- Diff:

- Diffuse Indirect Light:

- RGB:

- Spectral:

- Diff:

- Glossy Color:

- RGB:

- Spectral:

- Diff:

- Glossy Direct Light:

- RGB:

- Spectral:

- Diff:

- Glossy Indirect Light:

- RGB:

- Spectral:

- Diff:

As you can see, the Diffuse and Glossy base colors are pretty close in each. In fact the two glossy ones are identical. Though the difference of Diffuse Colors reveals that there is quite significantly more red in the spectral version, as well as a slight difference in blue.

And all the Direct/Indirect light passes in Spectral are completely wrong. They lack color, and the top row of Diffuse Indirect Lighting, as well as the leftmost wall, just completely break. It looks like high saturation colors aren’t quite correctly normalized right now.

(Note, for the most saturated portions, I set one channel for each of these colors except for the emitter to 0.9 and the other two to 0.001 in order to avoid what might be tiny rounding errors when it comes to values close to 0 or 1. So I don’t think this is an issue of exploding wavelengths or ones that go negative or something like that.)

What an awesome comprehensive test! It’s great to see that the Spectral one consistently shows more retained brightness in saturated indirect light. It makes sense that there’s the most difference where at least one aspect (light or material) is quite saturated.

It’s clear that the passes are completely jumbled up right now. Not sure what’s going on there since I believe I mapped everything to what it was supposed to be mapped to, but I’m sure I’ve just made a silly mistake somewhere in the code responsible for populating the passes.

I’m encouraged that there seems to be very little difference in noise performance, both luminance and colour noise seem indistinguishable from each other (permitting differences due to significantly varied light levels).

Noise isn’t quite identical, but yeah it’s very close. Almost surprisingly so, even.

The fireflies are in different spots (using the same seed) and I think (though this is relatively anecdotal) spectral rendering actually performs slightly better in that regard. It appears as if there are fewer noticeable hotspots. To be fair, though, this scene produces very few of those in general, so it might well be pretty much random and about equal in that regard.

Yep, the noise pattern should be similar but maybe marginally different in some cases. I would expect fireflies which have a relatively neutral colour to resolve at least as quickly as RGB cycles, but very narrow spectra (without making use of the custom wavelength importance) will likely result in somewhat more noise than RGB. Much more thorough and quantitative testing would have to be done to properly compare noise performance. For example, the RMS of the difference between a ground truth render and a noisy render might be a decent starting point.
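As a starting point for that kind of measurement, here is a minimal RMS error sketch. It assumes the ground truth (for example, a render with a very high sample count) and the noisy render are available as nested lists of float pixels; the function name and sample values are hypothetical.

```python
import math


def rmse(reference, noisy):
    """Root-mean-square error over all pixels and channels of two images.

    Images are rows of pixels, each pixel a tuple of float channel values.
    """
    total = 0.0
    count = 0
    for row_ref, row_noisy in zip(reference, noisy):
        for px_ref, px_noisy in zip(row_ref, row_noisy):
            for ref, val in zip(px_ref, px_noisy):
                total += (ref - val) ** 2
                count += 1
    if count == 0:
        raise ValueError("images must contain at least one pixel")
    return math.sqrt(total / count)


# Made-up single-pixel images, only to show the call.
ground_truth = [[(0.5, 0.5, 0.5)]]
noisy_render = [[(0.6, 0.5, 0.4)]]
print(f"RMSE: {rmse(ground_truth, noisy_render):.4f}")
```

To compare engines fairly, each noisy render would be measured against a ground truth from the same engine, since the converged RGB and spectral images are expected to differ.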

I’m not sure that’s even entirely fair. RGB can’t even distinguish between narrow and wide spectra in the first place. How would you even compare?

I guess the closest you could get to is to light your entire scene with spectra that are as narrow as possible but approximate sRGB? (Which would be weird in that sRGB inherently doesn’t correspond to monochromatic light sources)

I think the current heuristic of going “if we can see it more, it’s more relevant” is a good one for 99% of scenes at least. If your scene really needs to rely on a crazy narrow spectrum to sample from (perhaps because you are simulating laser light or something), I’d guess the way to go there is to actually change the sampling spectrum as is already possible.

That’s a great point. We can only compare them in cases where there’s a meaningful comparison to make. Scenes built with spectral in mind from the start might come with some more subjective benefits which are hard to quantify.

Fixing up the passes is my next task. Thanks again for the updated test.

Perhaps stating the obvious, but it would probably be good to start accumulating a real render test suite in the same vein as what already exists for Cycles/Eevee sooner rather than later (for instance, there are tests for those engines that check the various passes). Almost certain that spectral would not be accepted as a contribution, being so large and fundamental, without the corresponding set of tests to continuously verify it.

The current suite is large, but very bland (in the amount of color and light used). Would probably still have to follow its lead (in spirit at least) and create similar tests. Spectral changes, I think, would still be apparent with a similar light, color, and sample count setup as the existing tests.

Certainly the current suite could essentially be reused and then added to. I think the 25 Suzannes scene I used above is a good candidate for that. Although it could probably be made to render faster.

And if the old suite is reused, currently it’d sound the alarm “THERE ARE DIFFERENCES HERE!” when, for once, that is entirely intended. It’s tricky to suss out which part of the change is spectral effects and which part may be actual bugs. - I still think there is something slightly iffy with the red channel at least. It just consistently has the largest errors (in that the differences in that channel compared with regular Cycles are the largest)

But anyway, yes, I concur: A solid rendering test suite for establishing the ground truth would be excellent. In fact it’d probably be worth it to go through every single issue or potential issue found in this thread thus far and make at least one test scene for that specific issue.

Oh for sure it wouldn’t be for comparisons with the existing set of checked in images, you would have a completely new set of images used for comparisons. What I mean by “follow what’s there currently” is to roughly use the same set of scenes and setups (or equivalent) to ensure spectral is doing the right thing in similar situations.

It’s not to ensure it matches with rgb in most situations, it’s to ensure that when you make spectral changes it doesn’t break pre-existing spectral results as well as forcing you to consider things like render passes etc.

And yeah, the existing tests use such small resolution, samples, geometry because they need to execute really quickly. The larger tests are better suited as “demo” or “benchmark” scenes.

Would it make sense to reuse those scenes in a test with other spectral engines out there?

I’d like to see what Luxcore outputs with those 25 suzanne setups, for example.

That would be interesting to see indeed. IIRC LuxCore actually isn’t spectral anymore, they moved away from being a spectral render engine when they renamed it to LuxCore, but if there are any other spectral engines out there that people have access to, I’d love to see the results.

I could probably change the scene to be better for that. As it stands, the way I did the materials doesn’t quite work for this though.

One thing I did was to add a plane in front of the boxes, which is invisible to the camera. These are actually closed rooms! And removing that plane makes a big difference in the appearance, since a lot less light would be reflected back. A lot of it would escape into the black void on the outside.

In Cycles, accomplishing this is very easy (just make it transparent if your ray is a camera ray) but I’m not sure how capable of that other renderers are.

I haven’t super looked into this yet but in principle I should be able to get it to work on Mitsuba 2, right?

I wish there was a ready version I could just simply use for rendering straightforwardly rather than having to build it myself tho.

Also on LuxCore, the first Google result yielded this forum thread: “Spectral rendering” on the LuxCoreRender Forums.

Turns out we are being watched. Hi there, lurking LuxCore folks!

The very first concern is increased render time. On that note, Spectral rendering is indeed slower than regular Cycles. And there are two separate effects there, I think.

- It takes slightly more computations to process any given ray (although this seems to be rather minor)

- in particular highly saturated, dark colors are often already dropped in regular Cycles, deemed minimally impactful to the render result. BUT Spectrally there may actually be quite a lot of remaining energy. So the cutoff tends to happen later.

I think the biggest time impact will be from that. It’s slower to render such scenes, literally because there is more visual detail. So that extra rendertime goes to good use.
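A toy calculation of why the cutoff would happen later: saturated red light bouncing off a saturated green wall. The RGB triples match the 0.9 / 0.001 values used in the Suzanne test, but the smooth spectral curves below are made-up Gaussian bumps, not the spectra the branch actually produces when upsampling RGB, so treat the numbers as illustrative only.

```python
import math


def bump(wavelength_nm, center_nm, width_nm=40.0, floor=0.001, peak=0.9):
    """Made-up smooth spectrum: a Gaussian bump above a small floor."""
    g = math.exp(-0.5 * ((wavelength_nm - center_nm) / width_nm) ** 2)
    return floor + (peak - floor) * g


# RGB case: the channels barely overlap, so the product is almost zero.
red_rgb = (0.9, 0.001, 0.001)
green_rgb = (0.001, 0.9, 0.001)
rgb_throughput = tuple(r * g for r, g in zip(red_rgb, green_rgb))

# Spectral case: the same bounce with overlapping hypothetical spectra.
wavelengths = range(380, 731, 5)
spectral_throughput = [bump(w, 620.0) * bump(w, 540.0) for w in wavelengths]
mean_spectral = sum(spectral_throughput) / len(spectral_throughput)

print("RGB max channel:", max(rgb_throughput))
print("spectral mean:  ", round(mean_spectral, 4))
```

With curves like these, the region where the two spectra overlap keeps far more energy than any RGB channel does, so a termination heuristic based on remaining throughput would keep such paths alive for longer.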

This effect is very noticeable in this Suzannes scene: I set the render pattern from Right to Left so it’d start with the least saturated part and go to the most saturated part.

Regular Cycles speeds up quite solidly as it reaches the left edge.

And Spectral Cycles also speeds up. But not by as much. Simply because there is more indirect light to go around (and still appreciably impact the scene - this is, after all, the exact situation where the differences are gonna be the greatest)

Meanwhile the noise concerns seem to be very much addressed by the Hero Wavelength algorithm that’s at work here. It’s clear from the Suzanne test (btw I rendered at 1024 samples and deliberately did not use denoise - I even turned off the post-processing dithering that’s there by default to get maximum comparability) that the noise ends up being about the same.

Hi kram1032, I recently read Veach’s dissertation “Robust Monte Carlo Methods for Light Transport Simulation” where (amongst others) chapter 5.2 page 143 and chapter 6.2 mentions that light changes wavelength when crossing a dielectric interface and how to compensate the BSDFs for this effect. Also, Veach mentions in chapter 4.5 page 115 footnote 10 that spectral sampling should happen in frequency-space, rather than wavelength space. Could this explain the too-red issue?

I don’t think that should matter: Those are effects that would be false in a tricolor renderer as well as in a spectral renderer if neither of them compensates for this. I’d think they’d be false in the same way.

The example concerning BSDFs isn’t related to sampling specific wavelengths as far as I can tell, but rather about the material definitions. Changes to that would have to be made at a different point than what the current Spectral Renderer project does. (It may become relevant later, once new BSDFs are making use of the spectral data, such as for Complex IOR materials)

Also, this is from 1997. I suspect the state of the art has changed a lot since then. (For one, wavelength sampling is done through the hero wavelength algorithm which is much newer. AFAIK it has guarantees to converge to the right result)
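For readers who haven’t run into it, the wavelength selection step of hero wavelength sampling can be sketched roughly like this: one “hero” wavelength is picked uniformly at random, and the remaining wavelengths are placed at equal spacing from it, wrapping around the visible range. The 380-730 nm range and the count of 4 are assumptions for the sketch, not necessarily what the Spectral branch uses, and this leaves out how the per-wavelength results are weighted and combined.

```python
import random

LAMBDA_MIN = 380.0  # assumed lower bound of the sampled range, in nm
LAMBDA_MAX = 730.0  # assumed upper bound of the sampled range, in nm


def hero_wavelengths(u, count=4):
    """Return `count` wavelengths for one path.

    `u` is a uniform random number in [0, 1) that selects the hero
    wavelength; the others are rotated copies at equal spacing.
    """
    span = LAMBDA_MAX - LAMBDA_MIN
    hero_offset = u * span
    return [
        LAMBDA_MIN + (hero_offset + j * span / count) % span
        for j in range(count)
    ]


print(hero_wavelengths(random.random()))
```

Because every wavelength in the range is equally likely to land in any of the slots, each path covers the spectrum evenly, which helps keep color noise down.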