Thanks for the heads up. I’ll still test it anyway. I can just reuse the albedo, normal and beauty passes needed for the OptiX temporal denoiser to get the OIDN results.

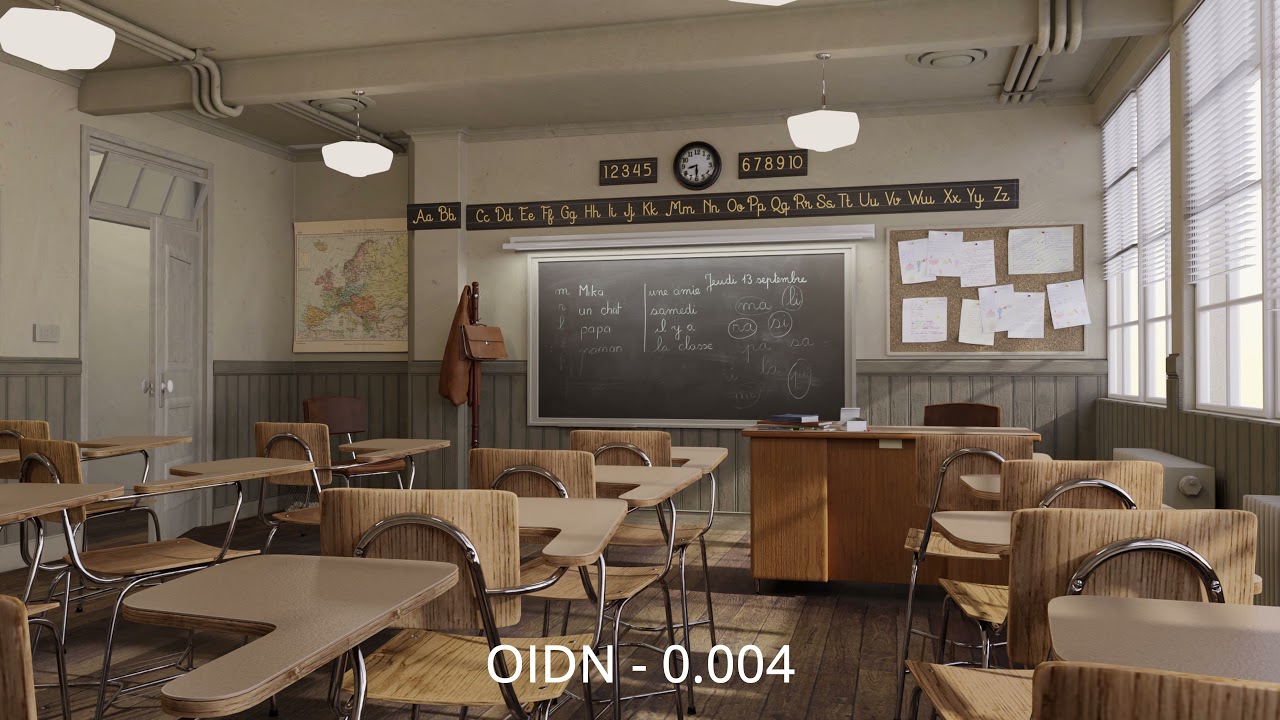

@deadpin and anyone else interested. I rendered out the classroom animation at various noise thresholds and tested the temporal stability of OIDN, OptiX, and OptiX temporal denoising at each of the noise thresholds.

The end bit of the video is my own personal opinion. You may have a different opinion from mine, and that’s fine.

Also, watch in 4k if you can. The renders aren’t in 4k, they’re 1080p, but the video was upscaled to 4k as 4K videos on YouTube have higher bandwidth than lower resolutions.

Also sorry for any spelling mistakes or grammatical errors.

Note: The sample count for these renders was 2000 with adaptive sampling turned on.

Hmm, what was your Render sample count set to for this – looks like 128 still in the video?

[Edit] Answered below

Sorry, I should have included that information.

The scene was rendered at 2000 samples initially with adaptive sampling turned on. I will edit this into the original comment.

I did save the “debug sample count” pass if you’d like me to run them through a python script to find out the distribution? I’ll just need to know what the python script is and how to use it.

Ah, cool. Interesting result for sure. It’ll provide a good starting point for other scenes, I think. And yeah, the results are quite acceptable at the levels you mention. The new OptiX does seem to give a slight uplift in results too, so at least there’s that.

Only thing left is to at least confirm the motion vector / flow items. When this is integrated in blender proper who knows how it’ll work. For instance, enabling camera motion blur disables the ability to output the motion path pass. So there’s that little hurdle to get past at least too

I can provide the script over on blender.chat probably later tonight. Don’t want to muddy the thread too much.

Yeah, it increases temporal stability, but a little nitpick I have is that it reduces detail. For some people this will be fine (for myself it’s fine: most of the animations I render in Blender are posted online and get compressed, so this small loss in detail doesn’t really matter). For others it could be the deciding factor between using a temporal denoiser and using a normal denoiser, potentially with a higher sample count.

–

I’m fairly sure I’ve figured out the format. The only thing I’m unsure about is if the magnitude of the flow pass generated by Cycles is the same as what OptiX expects.

I was planning on submitting a patch so people could make custom builds of Blender with the (hopefully) correct flow pass for OptiX temporal, but I have basically zero knowledge in C++ and couldn’t figure out what parts of the code I needed to modify to get the results I want.

If you or anyone else wishes to take a look at it, here’s what I believe needs to be done:

At the moment the motion vectors pass in Blender produces a “file” with all four “colour” channels used, RGBA. OptiX only cares about the information from the Red and Green channels, but Cycles seems to produce them in reverse to what OptiX expects. So to fix this, you need to take the Red and Green channels of the motion vectors and multiply them by -1 (or, in a more “correct” manner, compute the motion vectors in reverse). OptiX may also require that the Blue and Alpha channels are removed or set to 0 and 1 respectively. I’m not 100% sure on this part (but I did this anyway through compositing for my tests just to be safe).
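As a rough illustration of the channel changes described above, here is a minimal Python sketch. It works on plain tuples rather than real EXR data, and `to_optix_flow` is a hypothetical helper name, not something from Blender or OptiX. Whether OptiX really needs Blue and Alpha set to 0 and 1 is still the unconfirmed "just to be safe" step.

```python
def to_optix_flow(pixels):
    """Convert Cycles-style vector pixels to the layout described above.

    `pixels` is a list of (r, g, b, a) float tuples. Red and Green are
    negated; Blue and Alpha are forced to 0 and 1 (unconfirmed safety step).
    """
    return [(-r, -g, 0.0, 1.0) for (r, g, _b, _a) in pixels]


# Two made-up pixels standing in for a Cycles vector pass.
cycles_vectors = [(2.5, -1.0, 0.3, 0.7), (1.5, 4.0, -2.0, 1.0)]
optix_flow = to_optix_flow(cycles_vectors)
print(optix_flow)
```

In practice the same operation was done in the compositor for the tests; the sketch only shows the arithmetic per pixel.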

Along with this “multiply by -1” thing, for the patch to be accepted by the Blender Foundation I’m pretty sure the “vector blur node” and such in the compositor will need to be adapted to understand the new format.

I have a better write up on the differences between Cycles and OptiX motion vectors here: Taking a look at OptiX 7.3 temporal denoising for Cycles

Just a heads up for everyone, I’m planning to do another temporal denoising test with all the different denoisers, but on a scene with a moving and rotating camera and moving and rotating objects. It probably won’t make much of a difference, but I thought I’d check it out anyway.

I also plan to do more tests with static and animated seeds. It may take a while to get all the renders done.

If anyone wants to know what the scene is, it’s a modification of the “Danish mood” scene from Luxcore examples page.

I finally got OptiX temporal denoising working on Linux (The driver update required is now officially available on my distribution). As such, I have written myself a python script to help with creating the commands required to denoise animations with and without the temporal information.

The script isn’t perfect: there are quite a lot of hard-coded things, and there are areas where you can break the code easily. It’s also designed with Linux and its file structure in mind. As such, it probably doesn’t work on Windows. I’m ignoring macOS as OptiX 7.3 isn’t available on macOS.

It can be made more user friendly by giving it a GUI and skipping the last step (the last step is: “Here’s the command I generated for you, copy and paste it into a terminal”). But I basically wrote up this entire script based on the knowledge I found from Google searches over the last day, so as you can probably guess I’m inexperienced with Python and probably won’t be doing that myself.

To run this script, save it to a Python file. I personally run it in the terminal with:

python3 '/PATH/TO/SCRIPT'

Here it is in a .txt format. Just change the format to .py and it should work:

OptiX command generation script.txt (2.8 KB)

Here’s a video giving an example of how I use it:

correct_answer1 = 0
correct_answer2 = 0
print("Hello and welcome to the OptiX standalone denoiser assistant")
print("Let's start off by collecting information needed for denoising")
print()
print()
print()
optix = input("Please enter the path to the OptiX denoiser: ")
print()
beauty = input("Please enter the path to the Noisy pass: ")
print()
albedo = input("Please enter the path to the Albedo pass: ")
print()
normal = input("Please enter the path to the Normal pass: ")
print()
output_path = input("Please enter the path to the Output folder: ")
output_path = output_path[:-2]
print()
output_name = input("Please enter the name of the Output file (E.G. Denoised): ")
print()
while correct_answer1 < 1:
    animation = input("Are you denoising a animation? (y/n) ")
    print()
    if animation == "y":
        correct_answer1 = 1
        beauty = beauty[:-10]
        albedo = albedo[:-10]
        normal = normal[:-10]
        start = input("Please enter the number of the First frame in the animation (E.G. 20): ")
        start = int(start)
        print()
        end = input("Please enter the number of the Last frame in the animation (E.G. 150): ")
        end = int(end)
        print()
        while correct_answer2 < 1:
            temporal = input("Is the animation being denoised with Temporal Denoising? (y/n) ")
            print()
            if temporal == "y":
                correct_answer2 = 1
                flow = input("Please enter the path to the Flow pass: ")
                flow = flow[:-10]
                print()
                print("Copy the command below and paste it into a terminal:")
                print()
                print()
                print()
                print(fr"{optix}-F {start}-{end} -a {albedo}++++.exr' -n {normal}++++.exr' -f {flow}++++.exr' -o {output_path}/{output_name}++++.exr' {beauty}++++.exr'")
            elif temporal == "n":
                correct_answer2 = 1
                print("Copy the commands below and paste it into a .sh file and run it:")
                print()
                print()
                print()
                while start < end+1:
                    if start < 10:
                        print(fr"{optix}-a {albedo}000{start}.exr' -n {normal}000{start}.exr' -o {output_path}/{output_name}000{start}.exr' {beauty}000{start}.exr'")
                        start = start+1
                    elif start < 100:
                        print(fr"{optix}-a {albedo}00{start}.exr' -n {normal}00{start}.exr' -o {output_path}/{output_name}00{start}.exr' {beauty}00{start}.exr'")
                        start = start+1
                    elif start < 1000:
                        print(fr"{optix}-a {albedo}0{start}.exr' -n {normal}0{start}.exr' -o {output_path}/{output_name}0{start}.exr' {beauty}0{start}.exr'")
                        start = start+1
                    elif start < 10000:
                        print(fr"{optix}-a {albedo}{start}.exr' -n {normal}{start}.exr' -o {output_path}/{output_name}{start}.exr' {beauty}{start}.exr'")
                        start = start+1
    elif animation == "n":
        correct_answer1 = 1
        print("Copy the command below and paste it into a terminal:")
        print()
        print()
        print()
        print(fr"{optix}-a {albedo}-n {normal}-o {output_path}/{output_name}.exr' {beauty}")
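The non-temporal branch of the script pads frame numbers by hand with an if/elif chain. A possible simplification, sketched here with a hypothetical helper called `frame_filename`, is to let Python's format specification do the zero padding. It assumes the four-digit frame numbering the script already relies on.

```python
def frame_filename(prefix, frame, digits=4, extension=".exr"):
    """Return a zero-padded frame file name, e.g. 'Noisy0007.exr'."""
    # The nested {digits} sets the minimum width; wider numbers are not truncated.
    return f"{prefix}{frame:0{digits}d}{extension}"


names = [frame_filename("Noisy", f) for f in (7, 42, 150)]
print(names)
```

This also avoids the case where a frame number of 10000 or more matches none of the branches.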

Edit:

I’ve updated the script to better suit my needs.

It’s still probably Linux only, and now on Linux there’s support for outputting the command straight to a terminal (in some situations), however it’s limited to terminals that map to “x-terminal-emulator” which I believe is dictated by your distribution? This code should be a bit more robust than the old one and offers better efficiency for one of the things I like to do (denoise multiple animations by changing a small number of variables)

import os #Import os functions for accessing the terminal later

#Set some default values for variables
optix_path = "NO_PATH"
beauty_org = "NO_PATH"
albedo_org = "NO_PATH"
normal_org = "NO_PATH"
flow_org = "NO_PATH"
out_path_org = "NO_PATH"
out_name = "NO_NAME"
is_animation = "NO"
is_temporal = "NO"
start_frame_str = "0"
end_frame_str = "0"
out_to_term = "NO"
start_frame = 0
start_length = 1 #Digits in start_frame; set here so (g)enerate works before (s) has been used
end_frame = 0
exit = 0

print("Hello and welcome to the OptiX standalone denoiser assistant.\nLet's start off by collecting information needed for denoising. \n")

while exit == 0: #This creates a while loop to make sure the script only exits when the user decides.
    is_digit_test_exit = 0 #This resets a value so the script can loop without issue.
    power_of_ten = 10
    print (f"\nop - OptiX Path: {optix_path}\nb - Beauty Path: {beauty_org}\na - Albedo Path: {albedo_org}\nn - Normal Path: {normal_org}\nf - Flow Path: {flow_org}\no - Output Path: {out_path_org}\nna - Output File Name: {out_name}\nan - Is Animation: {is_animation}\nt - Uses Temporal Information: {is_temporal}\ns - Start Frame: {start_frame}\ne - End Frame: {end_frame}\nc - Output Command to Terminal: {out_to_term}\n")
    change_variable = input("Would you like to change any of these variables, (g)enerate a command, or (q)uit? ")
    print("\n" * 100) #Clears the screen to remove distractions
    if change_variable == "op":
        optix_path = input("Please input the OptiX path: ")
        optix_path = optix_path.strip()
    if change_variable == "b":
        beauty_org = input("Please input the Beauty path: ")
        beauty_org = beauty_org.strip()
    if change_variable == "a":
        albedo_org = input("Please input the Albedo path: ")
        albedo_org = albedo_org.strip()
    if change_variable == "n":
        normal_org = input("Please input the Normal path: ")
        normal_org = normal_org.strip()
    if change_variable == "f":
        flow_org = input("Please input the Flow path: ")
        flow_org = flow_org.strip()
    if change_variable == "o":
        out_path_org = input("Please input the Output folder path: ")
        out_path_org = out_path_org.strip()
        out_path_org = out_path_org.rstrip(" '")
    if change_variable == "na":
        out_name = input("Please input the file Name: ")
    if change_variable == "an":
        if is_animation == "NO":
            is_animation = "YES"
        else:
            is_animation = "NO"
            is_temporal = "NO" #Temporal information can NOT be used without animation data
    if change_variable == "t":
        if is_temporal == "NO":
            is_temporal = "YES"
            is_animation = "YES" #Temporal information can NOT be used without it being a animation
        else:
            is_temporal = "NO"
    if change_variable == "s":
        while is_digit_test_exit == 0:
            start_frame_str = input("Please input the first frame of the animation: ")
            is_digit_test = str(start_frame_str.isdigit())
            if is_digit_test == "True":
                start_frame = int(start_frame_str)
                start_length = len(str(start_frame))
                is_digit_test_exit = 1
            else:
                print("\n\n\nSorry the input value is not valid, try again.\n")
    if change_variable == "e":
        while is_digit_test_exit == 0:
            end_frame_str = input("Please input the last frame of the animation: ")
            is_digit_test = str(end_frame_str.isdigit())
            if is_digit_test == "True":
                end_frame = int(end_frame_str)
                is_digit_test_exit = 1
            else:
                print("\n\n\nSorry the input value is not valid, try again.\n")
    if change_variable == "c":
        if out_to_term == "NO":
            out_to_term = "YES"
        else:
            out_to_term = "NO"
    if change_variable == "g":
        beauty = beauty_org.rstrip(" .exr'")
        albedo = albedo_org.rstrip(" .exr'")
        normal = normal_org.rstrip(" .exr'")
        flow = flow_org.rstrip(" .exr'")
        digit_test_exit = 0
        digit_count = 0
        pluses = ""
        while digit_test_exit == 0: # while loop for counting the number of digits on the end of the files
            temp_character_saver = beauty[-1]
            is_digit_test = str(temp_character_saver.isdigit())
            if is_digit_test == "True":
                digit_count = digit_count + 1
                beauty = beauty[:-1]
                albedo = albedo[:-1]
                normal = normal[:-1]
                pluses = pluses + "+"
            else:
                digit_test_exit = 1
        digit_test_exit = 0
        if is_animation == "YES" and is_temporal == "YES":
            if start_frame >= end_frame:
                wait = input("Sorry, it seems the input frame range is invalid. Try again. (PRESS ENTER TO PROCEED)")
            if start_frame < end_frame:
                flow = flow[:-digit_count]
                command = fr"{optix_path} -F {start_frame}-{end_frame} -a {albedo}{pluses}.exr' -n {normal}{pluses}.exr' -f {flow}{pluses}.exr' -o {out_path_org}/{out_name}{pluses}.exr' {beauty}{pluses}.exr'"
                if out_to_term == "YES":
                    os.system(fr'x-terminal-emulator -e "{command}"')
                    #os.system(fr'gnome-terminal -e "{command}"')
                if out_to_term == "NO":
                    print(command)
                    wait = input("\nCopy the command above into a terminal to run it - (PRESS ENTER TO PROCEED)")
        if is_animation == "YES" and is_temporal == "NO":
            if start_frame >= end_frame:
                wait = input("Sorry, it seems the input frame range is invalid. Try again. (PRESS ENTER TO PROCEED)")
            if start_frame < end_frame:
                zeros = "0" * digit_count
                zeros = zeros[:-start_length]
                power_of_ten = 10 ** start_length
                command = fr"{optix_path} -a {albedo}{zeros}{start_frame}.exr' -n {normal}{zeros}{start_frame}.exr' -o {out_path_org}/{out_name}{zeros}{start_frame}.exr' {beauty}{zeros}{start_frame}.exr'"
                current_frame = start_frame + 1
                while current_frame < end_frame+1:
                    if current_frame < power_of_ten:
                        command = command + fr" && {optix_path} -a {albedo}{zeros}{current_frame}.exr' -n {normal}{zeros}{current_frame}.exr' -o {out_path_org}/{out_name}{zeros}{current_frame}.exr' {beauty}{zeros}{current_frame}.exr'"
                        current_frame = current_frame + 1
                    elif current_frame == power_of_ten: #elif so a frame past end_frame is never appended
                        zeros = zeros[:-1]
                        command = command + fr" && {optix_path} -a {albedo}{zeros}{current_frame}.exr' -n {normal}{zeros}{current_frame}.exr' -o {out_path_org}/{out_name}{zeros}{current_frame}.exr' {beauty}{zeros}{current_frame}.exr'"
                        power_of_ten = power_of_ten * 10
                        current_frame = current_frame + 1
                print(command)
                if out_to_term == "YES":
                    wait = input("\nSorry, outputting this command to a terminal is not supported\nPlease copy the command above into a terminal to run it - (PRESS ENTER TO PROCEED)")
                if out_to_term == "NO":
                    wait = input("\nCopy the command above into a terminal to run it - (PRESS ENTER TO PROCEED)")
        if is_animation == "NO" and is_temporal == "NO":
            command = fr"{optix_path} -a {albedo_org} -n {normal_org} -o {out_path_org}/{out_name}.exr' {beauty_org}"
            if out_to_term == "YES":
                os.system(fr'x-terminal-emulator -e "{command}"')
                #os.system(fr'gnome-terminal -e "{command}"')
            if out_to_term == "NO":
                print(command)
                wait = input("\nCopy the command above into a terminal to run it - (PRESS ENTER TO PROCEED)") #This is used to generate a stopping point
    if change_variable == "q":
        print ("Quitting...")
        exit = 1

It would probably be very easy to do a Python version that is OS-agnostic, or at least compatible.

Probably would be easy to do that. Especially with a GUI that calls up the built in file browsers from various operating systems (and desktop environments) along with general calls for a terminal. But my python knowledge is limited… so… at the moment I’m sticking with what works for me, even if that reduces the usability for others.
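One way to get closer to an OS-agnostic version, without a GUI, is to build the command as a list of arguments instead of a hand-quoted string. The sketch below is only an illustration: `build_temporal_command` and the example paths are hypothetical, and the flags (`-F`, `-a`, `-n`, `-f`, `-o`) and `+` frame placeholders are simply copied from the command the script prints, not taken from the denoiser's documentation.

```python
import shlex
from pathlib import Path


def build_temporal_command(optix, beauty, albedo, normal, flow,
                           out_dir, out_name, start, end, digits=4):
    """Build the argument list for one temporal denoise run.

    Each pass argument is the file prefix without the frame number,
    e.g. 'renders/Noisy' for 'renders/Noisy0001.exr'.
    """
    pluses = "+" * digits  # frame-number placeholder, as in the script's output

    def seq(prefix):
        return f"{prefix}{pluses}.exr"

    return [
        str(optix),
        "-F", f"{start}-{end}",
        "-a", seq(albedo),
        "-n", seq(normal),
        "-f", seq(flow),
        "-o", str(Path(out_dir) / seq(out_name)),
        seq(beauty),
    ]


# Placeholder paths; replace them with your own files.
args = build_temporal_command(
    "optixDenoiser", "renders/Noisy", "renders/Albedo", "renders/Normal",
    "renders/Flow", "denoised", "Denoised", start=1, end=150,
)
print(shlex.join(args))  # POSIX-style quoting, for display only
```

Passing the list straight to `subprocess.run(args)` would avoid shell quoting entirely, which is the part most likely to differ between Linux and Windows.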

One thing I was thinking about was potentially making a Blender add-on that allows the setup of OptiX temporal denoising inside Blender (until the feature is officially implemented). That way people don’t have to run a separate app/script and the GUI stuff is all handled by Blender. However, once again my knowledge of python is limited and as such I’m not sure I can make it without a lot of research and/or guidance.

It’s been a while but I’ve finally got around to reviewing the results for this Danish mood scene plus one with lots of foliage.

I’m sorry there’s no video to go with this comment, I find the process of making the videos a bit tedious. But here’s the results:

- For the scene with foliage, I’m just ignoring the results. I believe either the motion vectors were broken or the movement between frames was too large to produce good results with temporal denoising. On top of that, the image is already kind of mushy because I’m denoising a lot of really finely detailed objects.

- With the Danish mood scene, a lot of it lacks detail (e.g. the white wall), and as a result the temporal denoiser did a good job in those regions. Shadowed regions seem to have more issues with the temporal denoiser, even if they’re on low-detail surfaces (like the white walls). I did run tests rendering the scene with static and animated seeds, and in my personal opinion the static seed produced a better result in this scene.

As for what noise threshold you should use for OptiX standard vs OptiX temporal in this scene? I didn’t look into it that much, but I can tell you a noise threshold of 0.008 still isn’t that great, you’ll want to go for something lower like 0.004 or 0.002.

Good news! Patrick Mours is working on integrating OptiX temporal denoising into Blender:

https://developer.blender.org/D11442

Hi,

Thank you all for the great tips about running the OptiX 7.3 temporal denoiser.

I am new to Blender and am surely doing something wrong but actually I can’t get it to work properly using the compositor route described by @Alaska.

As far as I can tell from the code of OptiX 7.3, all the EXR files that are being passed as an input to the binary are getting their channel header extracted and matched according to the following pattern:

- `A` for channel `A`

- `R` for channel `R`

- `G` for channel `G`

- `B` for channel `B`

However when rendering EXR files for each layer, I end up with files having the following headers:

- Beauty: `Image.A,Image.B,Image.R,Image.G`

- Flow: `Image.X,Image.Y,Image.Z`

- Albedo: `Image.A,Image.B,Image.R,Image.G`

- Normal: `Image.X,Image.Y,Image.Z`

Leading to OptiX crashing, telling me that it can not load the R channel (could be any other channel).

Basically for both Beauty and Normal, modifying the code to make it look for Image.{Channel} instead of {Channel} then recompiling seems to do the trick. However I feel like I did something wrong and those headers should have a proper name. Also, for Flow and Normal, I have absolutely no idea how to make them match the RGB channels OptiX is looking for.
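To make the naming mismatch concrete, here is a small Python sketch that maps the Blender channel names listed above onto bare `R`/`G`/`B`/`A` names. It only manipulates strings and does not read or write EXR files. `optix_channel_name` is a hypothetical helper, and treating `X`/`Y`/`Z` as stand-ins for `R`/`G`/`B` is an assumption, not something confirmed by the OptiX code.

```python
def optix_channel_name(blender_name):
    """Map a name such as 'Image.R' or 'Image.X' to a bare channel name."""
    component = blender_name.rsplit(".", 1)[-1]  # drop the 'Image.' prefix
    xyz_to_rgb = {"X": "R", "Y": "G", "Z": "B"}  # assumption, see lead-in
    return xyz_to_rgb.get(component, component)


beauty_channels = ["Image.A", "Image.B", "Image.R", "Image.G"]
flow_channels = ["Image.X", "Image.Y", "Image.Z"]
beauty_mapped = [optix_channel_name(c) for c in beauty_channels]
flow_mapped = [optix_channel_name(c) for c in flow_channels]
print(beauty_mapped)
print(flow_mapped)
```

If the mapping turns out to be right, the actual renaming would still need an EXR tool or a change in how Blender writes the files.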

Did you face the same issues? If so, how did you manage to run OptiX properly from Blender’s output?

Thanks in advance

I personally did not run into this issue and to be perfectly honest, I don’t have enough knowledge to help you with the specific issue you’re facing.

However, I can assist in pointing you in the right direction in doing this the “official” way. As @YAFU has pointed out, Patrick Mours (from Nvidia) is adding OptiX temporal denoising officially to Cycles-X.

This means you can use OptiX temporal denoising the “official” way. To do this you need to build a custom version of Blender by following these steps:

1. Set up a Blender build environment by following the guides on this site: Building Blender - Blender Developer Wiki

2. Follow the steps for setting up CUDA and OptiX when building Blender (make sure you pick CUDA version 11.X and OptiX 7.3 or higher): Building Blender/CUDA - Blender Developer Wiki

3. Make sure Blender builds correctly and that OptiX rendering in Cycles specifically is working.

4. Switch to the Cycles-X branch of Blender by running this command in the Blender source code folder: `git checkout cycles-x`

5. Wait for that to process, then do a completely clean build of Blender. The easiest way is to delete your old build folder and build again with `make`. Make sure you once again enable CUDA and OptiX as required.

6. Apply the patch provided by Patrick Mours. There are various ways of doing it: by hand, `git apply`, etc. I personally recommend `arc patch`. There’s a page explaining how to set up `arc` here (I also had to search around online for some extra help. In the end I just installed `arcanist` from my Linux distribution’s package manager and that worked): Arcanist Quick Start

6(a). Assuming you went with the `arc` route of applying the patch and you have everything set up, apply the patch using the command `arc patch D11442`. It produced an error for me as `arc` was a little confused about stuff, but I went and manually fixed that issue.

7. After you’ve applied the patch, build Blender again. You should now have a version of Blender with the OptiX temporal denoiser available through the Python console. Then follow the guide for using the new denoiser described in the patch notes: D11442 Cycles X: Add OptiX temporal denoising support

I’m sorry if this is a bit of a mess. Just ask if you need any help. I probably won’t be able to help in some areas, but hopefully others can help or maybe I can point you in the right direction.

Thank you for your answer. And also for sharing your screenshot about composition, it helped me a lot to get started.

I assume this would definitely be the best way to do it, as using OptiX from outside Blender is not really something I’m looking for in the long run.

Actually your description is pretty clear. I’m gonna give it a try today and see what comes out of it. If it’s all about patching and building a custom release it should be ok. Hopefully this patch is gonna get merged soon enough.

I will let you know if I get something to work properly. Thanks a lot once again !

As a side note, if you need help with building Blender, you’re probably more likely to get a quick response out of someone over in the “Building Blender” chat channel over here (you sign into it using the same account as the one for this site): blender.chat

However, you can still post any questions here if you want.

In theory, setting up a build environment for Blender is relatively straightforward if you follow the guide. The thing I had the largest issue with while doing my first compile last year was CUDA and OptiX.

Part of setting up CUDA and OptiX requires modifying the cmake file for that Blender build. I initially tried to do this by opening the cmake file in a text editor but couldn’t figure out how to get it to work. It turns out there’s an easier way to do it, using a tool known as “cmake gui”. It was available on my Linux distribution, but it may be different for you.

This issue may be Linux specific:

The other issue I had was to do with CUDA complaining about the wrong GCC version installed. There are two ways around this, install the gcc version CUDA expects or modify CUDA to stop complaining. At the moment CUDA 11.X is designed to be best used with gcc 9 and 10 (not sure about the latest CUDA 11.4). Blender is designed to be compiled with GCC 9.3.X. I personally went down the route of installing GCC 9.3.X and using that. However, if you want to go down the route of modifying CUDA to not complain about a higher gcc version, then you can do so by modifying a file located here: /usr/local/cuda/include/crt/host_config.h (if you can’t find it, try searching for it in other directories)

Search for the region containing this (searching for the term gcc finds it quickly):

#error -- unsupported GNU version! gcc versions later than 10 are not supported! The nvcc flag '-allow-unsupported-compiler' can be used to override this version check; however, using an unsupported host compiler may cause compilation failure or incorrect run time execution. Use at your own risk.

#endif /* __GNUC__ > 10 */

and modify the end bit so it says something like:

#endif /* __GNUC__ > 20 */

(just change that last value to a value higher than your GCC version)

I hope everything goes well.

Hi, I have been stuck on Blender motion vectors for a long time. They are one of the important pieces of data I need for my final project. The motion vectors I get have very large values, ranging almost from -max_width to +max_width. I have tried almost everything I could find on the internet. I am hoping some of you have answers.

I believe the values produced by motion vectors are measured in pixels of movement. So a large value like 100 means that, between two frames, that specific part of the scene has moved 100 pixels. So it’s possible that’s the issue you’re facing.
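If the values really are pixel displacements, dividing by the image size puts them on a scale that is easier to judge. The following Python sketch assumes exactly that; `describe_motion` and the example numbers are made up for illustration, and it does not read the vector pass itself.

```python
import math


def describe_motion(dx, dy, width, height):
    """Return the speed in pixels and the displacement as a fraction of the frame."""
    return {
        "pixels": math.hypot(dx, dy),   # length of the displacement in pixels
        "fraction_x": dx / width,       # horizontal move relative to image width
        "fraction_y": dy / height,      # vertical move relative to image height
    }


# Hypothetical vector: 96 px right and 54 px in the negative y direction at 1080p.
info = describe_motion(dx=96.0, dy=-54.0, width=1920, height=1080)
print(info)
```

Values close to the full image width would then show up as fractions near 1, which would point to very fast motion or a broken pass rather than a normal frame-to-frame move.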

Another possible cause is that you’re experiencing a bug. Can’t be sure about this without access to your file.

If you’re using the temporal denoising patch (⚙ D11442 Cycles X: Add OptiX temporal denoising support) to gain access to those motion vectors, it’s possible that you’re also experiencing a bug related to that.

Can I be a bit cheeky and post my tutorial on doing temporal denoising using motion vectors here?

It might be relevant to the discussion, as it’s a technique I’ve used on several feature films with great success.

Doing spatial denoising first, then temporal denoising by averaging or taking the median of 3 or 5 frames, works very well for a lot of shots, but it fails in specific areas.
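For readers who want to see the temporal step in isolation, here is a minimal Python sketch of a per-pixel median over a 3-frame window (`radius=1`; use `radius=2` for 5 frames). It deliberately leaves out the motion-vector alignment that the tutorial relies on, so it is only meaningful where pixels do not move between frames. `temporal_median` and the sample values are hypothetical.

```python
from statistics import median


def temporal_median(frames, index, radius=1):
    """Median-filter frame `index` over a window of up to 2*radius + 1 frames.

    `frames` is a list of equally sized lists of pixel values (one channel).
    The window is clamped at the start and end of the sequence.
    """
    lo = max(0, index - radius)
    hi = min(len(frames), index + radius + 1)
    window = frames[lo:hi]
    # zip(*window) groups the values of the same pixel across the window.
    return [median(values) for values in zip(*window)]


frames = [
    [0.10, 0.50, 0.90],
    [0.12, 0.95, 0.88],  # middle frame has a noisy outlier in pixel 1
    [0.11, 0.52, 0.91],
]
filtered = temporal_median(frames, index=1)
print(filtered)
```

The outlier in the middle frame is replaced by a value from a neighbouring frame, which is the stabilising effect; on moving content the same step would smear detail unless the frames are aligned first.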

Thought it might be useful for the discussion.

Now that ⚙ D11442 Cycles X: Add OptiX temporal denoising support has landed in master, has anyone here tested it yet?

I’m not a coder (not even Python) and I could get it to denoise single frames “by hand” and so far they look good. Can some Python-savvy guy post a commandline to denoise a whole sequence?

And while we’re here: Temporal denoising requires a motion vector pass which can only be enabled and written to EXRs if rendered motion blur is off. How would one use temporal denoising on motion blurred sequences?