I just thought I’d add some information here that’s a combination of what I’ve found out myself and what I’ve read from others.

- The motion vector pass produced by Cycles contains two sets of motion vectors. This is mentioned in the Blender manual and in Tobias Weis’ article on generating computer vision data from Cycles’ motion vectors.

Vector:

Motion vectors for the Vector Blur node. The four components consist of 2D vectors giving the motion towards the next and previous frame position in pixel space.

–

Source: Blender manual Passes - Blender 4.2 Manual

Important information: The flow-values are saved in the R/G-channel of the output-image, where the R-channel contains the movement of each pixel in x-direction, the G-channel the y-direction. The offsets are encoded from the current to the previous frame, so the flow-information from the very first frame will not yield usable values. Also, in blender the y-axis points upwards. (The B/A-channels contain the offsets from the next to the current frame).

–

Source: Tobias Weis’ article Groundtruth data for Computer Vision with Blender – Dr. Tobias M. Weis

Checking this in Blender, it seems to be true. To simplify and explain things, here’s a quick summary:

The motion vectors generated by Blender are 2D motion vectors. Each one describes how far a pixel moves along the X and Y axes between frames, measured in pixels. These X and Y components are saved to the motion vector pass using colour channels:

Red=X axis (Horizontal motion)

Green=Y axis (Vertical motion)

Blue=X axis (Horizontal motion)

Alpha=Y axis (Vertical motion)

Two sets of X and Y values are saved: one (Red/Green) describes the motion vectors from the current frame to the previous frame, and the other (Blue/Alpha) describes the motion vectors from the next frame to the current frame.
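To make the channel layout concrete, here is a minimal sketch of splitting one Vector-pass pixel into its two 2D vectors. It assumes the layout described above (R/G = current → previous, B/A = next → current); `split_vector_pixel` and `VectorPassPixel` are hypothetical names for illustration, and reading the EXR itself isn’t covered.

```python
from typing import NamedTuple, Tuple

Vec2 = Tuple[float, float]


class VectorPassPixel(NamedTuple):
    """The two 2D vectors packed into one RGBA pixel of the Vector pass.

    Assumed layout (from the manual / Tobias Weis' article):
    R/G = offset from the current frame to the previous frame,
    B/A = offset from the next frame to the current frame.
    """
    to_previous: Vec2
    from_next: Vec2


def split_vector_pixel(rgba: Tuple[float, float, float, float]) -> VectorPassPixel:
    r, g, b, a = rgba
    return VectorPassPixel(to_previous=(r, g), from_next=(b, a))


# Made-up values. Under the assumed layout, a point moving at a constant
# 3 px right and 1 px up per frame would give (-3, -1) in both sets.
pixel = split_vector_pixel((-3.0, -1.0, -3.0, -1.0))
print(pixel.to_previous)  # (-3.0, -1.0)
print(pixel.from_next)    # (-3.0, -1.0)
```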

Note: The motion vectors can contain negative values (e.g. -10) to describe movement in the opposite direction, as well as values well above 1. Hence it is important to save the motion vector pass in a floating-point image format, such as EXR.
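As a rough illustration of why an 8-bit format doesn’t work, here is a simplified model (an assumption: it ignores colour management and dithering) of what happens to motion values when a channel is clamped to [0, 1] and quantised to 0..255, as in an 8-bit PNG:

```python
def store_8bit(value: float) -> int:
    """Simplified model of writing one channel to an 8-bit format such as PNG:
    clamp to [0, 1], then quantise to an integer in 0..255.
    Colour management and dithering are ignored."""
    clamped = min(max(value, 0.0), 1.0)
    return round(clamped * 255)


def load_8bit(stored: int) -> float:
    """Read an 8-bit channel value back as a float in [0, 1]."""
    return stored / 255


for original in (-10.0, 3.5):
    print(original, "->", load_8bit(store_8bit(original)))
# -10.0 -> 0.0  (the sign and magnitude of the motion are lost)
# 3.5 -> 1.0    (the magnitude is clipped)
```

A float EXR channel stores both values unchanged, which is why the pass needs a float format.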

- OptiX temporal denoising expects a 2D motion vector pass, and judging from the example scene provided by Nvidia, the 2D vectors are saved to the Red and Green channels of the EXR files.

Red=X Axis (Horizontal motion)

Green=Y Axis (Vertical motion)

This lines up with Cycles. So why do the motion vectors behave differently?

Because of one thing. According to the OptiX documentation, OptiX expects the motion vector pass to describe motion from the previous frame to the current frame. Cycles provides motion vectors that describe motion from the current frame to the previous frame (plus an extra set for the next frame). In theory the “plus extra” part (Blue and Alpha channels) should be ignored by OptiX, since it only needs the Red and Green channels, so that should be a non-issue. But the fact that the direction of the motion vectors is reversed is an issue: all the motion produced by Cycles is backwards (according to OptiX).
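The reversal can be written out explicitly. Let $\mathbf{p}_{\text{cur}}$ be a pixel’s position in the current frame and $\mathbf{p}_{\text{prev}}$ the position of the same surface point in the previous frame, and take the channel conventions at face value as read above (this is the interpretation in this post, not something checked against the Cycles source):

$$
\mathbf{v}_{RG} = \mathbf{p}_{\text{prev}} - \mathbf{p}_{\text{cur}} \qquad \text{(Cycles, Red/Green)}
$$

$$
\mathbf{f} = \mathbf{p}_{\text{cur}} - \mathbf{p}_{\text{prev}} = -\mathbf{v}_{RG} \qquad \text{(OptiX flow)}
$$

Under this reading only the sign changes; the magnitude $\lVert \mathbf{f} \rVert = \lVert \mathbf{v}_{RG} \rVert$ stays the same.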

In temporal denoising modes, pixels in the two-dimensional flow image represents the motion from the previous to the current frame for that pixel. Pixels in the flow image can be in one of two formats:

- OPTIX_PIXEL_FORMAT_FLOAT2 (two floats, XY)

- OPTIX_PIXEL_FORMAT_HALF2 (two halfs, XY)

–

Source: Nvidia OptiX documentation: https://raytracing-docs.nvidia.com/optix7/guide/index.html#ai_denoiser#temporal-denoising-modes
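For reference, here is a sketch of what the two quoted pixel formats look like as raw bytes: FLOAT2 is two 32-bit floats per pixel (8 bytes) and HALF2 is two 16-bit floats (4 bytes). It assumes tightly packed, row-major, little-endian data with no row padding; as far as I know, OptiX 7 describes the actual strides through the `pixelStrideInBytes` and `rowStrideInBytes` fields of `OptixImage2D`, and the real buffer lives on the GPU. `pack_flow` is a hypothetical helper, not part of any API.

```python
import struct
from typing import Sequence, Tuple

Vec2 = Tuple[float, float]


def pack_flow(flow: Sequence[Vec2], half: bool = False) -> bytes:
    """Pack row-major (x, y) flow vectors into a tightly packed buffer.

    half=False -> two 32-bit floats per pixel (FLOAT2 layout, 8 bytes/pixel)
    half=True  -> two 16-bit floats per pixel (HALF2 layout, 4 bytes/pixel)
    Assumes little-endian data and no padding between rows.
    """
    # "f" is a 32-bit float, "e" is a 16-bit (half-precision) float.
    fmt = "<%d%s" % (2 * len(flow), "e" if half else "f")
    flat = [component for xy in flow for component in xy]
    return struct.pack(fmt, *flat)


buf = pack_flow([(1.0, 2.0), (3.0, -4.0)], half=True)
print(len(buf))  # 8
```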

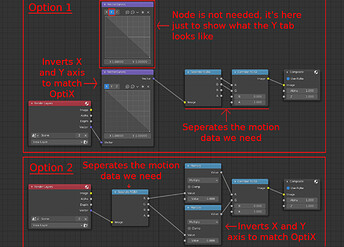

So, how do we fix this issue? I believe it’s as simple as flipping the sign of the X and Y values in the current-to-previous-frame motion vectors and saving that. In the process, I’m also going to discard the next-frame motion vectors, since OptiX doesn’t need them and I want to be on the safe side. This can be done in a few ways, but here are two that appear to work:

The results from these appear to match the general format expected by OptiX. The last thing I’m unsure about is whether the magnitude is correct, but I believe it is.
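The same transformation can be sketched in code, independently of how it’s done inside Blender. This is a minimal sketch assuming, as above, that R/G hold the current → previous offset: it negates R/G to get previous → current flow, drops B/A, and leaves magnitudes unchanged. `cycles_vector_to_optix_flow` is a hypothetical name, and whether the Y component also needs adjusting for Blender’s upward-pointing y-axis is not checked here.

```python
from typing import List, Sequence, Tuple

RGBA = Tuple[float, float, float, float]
Vec2 = Tuple[float, float]


def cycles_vector_to_optix_flow(vector_pass: Sequence[RGBA]) -> List[Vec2]:
    """Convert Vector pass pixels into previous -> current flow vectors.

    Assumes R/G store the offset from the current frame to the previous
    frame, so negating them gives the motion from the previous frame to
    the current frame. B/A (next-frame motion) are dropped because only
    two channels are needed. Magnitudes are left unchanged.
    """
    return [(-r, -g) for r, g, _b, _a in vector_pass]


# Made-up pixel: R/G say the previous position was 3 px left and 1 px down.
print(cycles_vector_to_optix_flow([(-3.0, -1.0, -3.0, -1.0)]))  # [(3.0, 1.0)]
```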

–

I believe I have run some denoising tests using these new motion vectors (I’ve done so many tests I can’t be 100% sure) and I didn’t notice much of a difference. I’ll give it another try soon.

On another side note, the OptiX documentation says this about temporal denoising:

The OptiX SDK provides the OptixDenoiser sample which could be used to verify properly specified flow vectors. When invoked with -z, the tool looks up the position in the previous frame of each pixel using the flow vector. The pixel value from the previous frame position is looked up in the current frame position and written. When denoising a sequence with the -z option, frame N with motion applied should look similar to frame N+1 in the noisy input sequence. There should be no major jumps when comparing these images, just shading differences as well as differences due to disocclusion and so forth.

–

Source: Nvidia OptiX documentation: https://raytracing-docs.nvidia.com/optix7/guide/index.html#ai_denoiser#temporal-denoising-modes
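The check described in that quote can be approximated outside the SDK. The sketch below is not the OptixDenoiser sample’s code; it is a hypothetical pure-Python re-implementation of the idea as quoted: for each pixel p of frame N+1, fetch frame N at p minus the flow (nearest neighbour) and write it at p, then compare the result with frame N+1. It assumes single-channel images indexed `image[y][x]` and flow whose y component follows the row index; with Blender’s upward y-axis that may require negating y first.

```python
from typing import List, Tuple

Image = List[List[float]]                # image[y][x], single channel
Flow = List[List[Tuple[float, float]]]   # flow[y][x] = (fx, fy), previous -> current


def warp_previous_to_current(prev: Image, flow: Flow) -> Image:
    """For each pixel p, fetch prev at p - flow[p] (nearest neighbour) and
    write it at p. Lookups that fall outside the image leave 0.0."""
    height, width = len(prev), len(prev[0])
    out = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            fx, fy = flow[y][x]
            px, py = round(x - fx), round(y - fy)
            if 0 <= px < width and 0 <= py < height:
                out[y][x] = prev[py][px]
    return out


def mean_abs_diff(a: Image, b: Image) -> float:
    """Average absolute per-pixel difference between two same-sized images."""
    total = sum(abs(va - vb) for ra, rb in zip(a, b) for va, vb in zip(ra, rb))
    return total / (len(a) * len(a[0]))


# Made-up 1x4 example: a bright pixel moves one pixel to the right.
prev = [[0.0, 1.0, 0.0, 0.0]]   # frame N
cur = [[0.0, 0.0, 1.0, 0.0]]    # frame N+1
right = [[(1.0, 0.0)] * 4]      # correct previous -> current flow
left = [[(-1.0, 0.0)] * 4]      # same flow with the sign reversed
print(mean_abs_diff(warp_previous_to_current(prev, right), cur))  # 0.0
print(mean_abs_diff(warp_previous_to_current(prev, left), cur))   # 0.5
```

With correctly oriented flow the warped frame N matches frame N+1; with reversed vectors the bright pixel lands in the wrong place, which is the kind of “major jump” the documentation says to look for.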

But the most important part to me is this:

When denoising a sequence with the -z option, frame N with motion applied should look similar to frame N+1 in the noisy input sequence.

Does this mean the noisy input should have very little variance between the previous frame and the current frame? As in, should the animated seed be turned off? Or does it mean that the overall noise should be low so the differences between the previous and current frame are small? If it’s the latter, that would explain why we don’t get great results. 100 samples still produce a lot of noise, which might cause the denoiser to decide that the frames are too different and therefore not apply the temporal information?

Or am I just misinterpreting the wording, and it’s basically trying to say “temporal stability will be increased when using temporal denoising”?