Back on track with the thread’s main purpose (begging for Cycles features), I have one request:

We all know that the “AO Bounce” trick from the Simplify panel has now turned into the more noble “Fast GI Approximation” feature, which is great. Even greater would be to have more control over it.

I mean a dedicated “AO Distance”, separate from the World one.

Also, the ability to use a different world for it could be cool, even if you could get by with just filtering light paths in the world shader.

Hey, OptiX temporal denoising integration in Blender, to get results easily without complex user settings, is also a Cycles request.

Just testing to see if this is something feasible and if it is worth it.

Another one, about Cycles being renewed and its target being:

a “small studio” production render engine, that sits between a render engine with a focus on complete realism, and a totally programmable and customizable Renderman style engine.

I was wondering if volumetrics could be one of those things that can be completely fake and yet work well. As seen in Eevee and in most realtime engines out there, fake volumetrics work really well visually and of course require a lot less calculation time. So, wouldn’t it be cool if we could choose between Fake and Real volumes?

They are in Eevee and most realtime engines because both Eevee and “most realtime engines” are Ray Tracer and Rasterizer hybrids. Cycles is a pure path tracer. Changing it into a rasterizer hybrid just for the purpose of fake rasterized volumetrics would require fundamental change to the renderer architecture which would come with a lot of drawbacks.

Second of all, you are citing a literally 10-year-old article. As in, if a kid had been born when this article was published, the kid would have turned 10 a few days ago. That’s such a long time for direction and goals to change that a significant portion of that article is probably no longer valid. Cycles is currently on its way to becoming much more than just a “small studio” production render engine.

It could already be used on some triple-A production workloads, and it has only a few components missing before it could be used on almost all of them (volumetric motion blur, a proper shadow catcher…)

I do get the appeal of rasterized volumetrics. They just render so fast compared to ray traced ones. But it just makes more sense to render them in the hybrid renderers than trying to turn Cycles into one at this point.

Here is an extreme test of OptiX temporal denoising with only 50 samples in the Classroom scene. Note that higher sample counts should be used in production for this to be really useful. I don’t have good enough hardware to test high sample counts in a short time.

@Alaska, if you want, all this discussion about OptiX temporal denoising could be moved to a new thread.

EDIT:

I’m going to delete my video, a better demo here:

Edit: I’ve created a new thread: https://devtalk.blender.org/t/taking-a-look-at-optix-7-3-temporal-denoising-for-cycles

Moving the discussion to another thread might be a good idea? Not really sure.

Also, I took a look at things and my suggestion from previous comments (Cycles may be using the “wrong” motion vector format) is probably incorrect. The motion vector directions from the OptiX temporal denoising sample seem to line up with what Blender/Cycles expect so I believe Cycles motion vectors should work with OptiX.

I’m going to give classroom a try myself, with 50 samples and maybe even 100 samples.

May also experiment with other things.

I am worried this test is most likely wrong. There are quite a few temporally stable denoising solutions which actually test noise variance between frames to determine what is noise and what is actual image data. But for that to happen correctly, the noise seed has to be different each frame, as if it was shot on real film by a real camera. Otherwise, it’s much harder for the denoising algorithm to determine whether the artifacts sliding along with the camera are noise or scene detail.
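To make that argument concrete, here is a toy sketch in plain Python. It is not how OptiX works internally: a plain average over frames is swapped in as the simplest possible temporal filter, and `render_pixel` is a hypothetical stand-in for one noisy pixel of a static part of the scene. With a locked seed every frame carries the same noise, so averaging over time removes nothing; with a per-frame seed the error should shrink roughly with the square root of the number of frames.

```python
import random
import statistics

def render_pixel(true_value, seed, noise=0.2):
    """Hypothetical stand-in for one noisy, low-sample pixel estimate."""
    rng = random.Random(seed)
    return true_value + rng.gauss(0.0, noise)

def temporal_average_error(num_frames, animated_seed, true_value=0.5, trials=2000):
    """RMS error of a plain average over num_frames frames of a static pixel."""
    squared_errors = []
    for trial in range(trials):
        base_seed = trial * 1000
        frames = [
            # Animated seed: a new seed per frame. Static seed: the same one every frame.
            render_pixel(true_value, base_seed + (frame if animated_seed else 0))
            for frame in range(num_frames)
        ]
        estimate = statistics.fmean(frames)
        squared_errors.append((estimate - true_value) ** 2)
    return statistics.fmean(squared_errors) ** 0.5

static_err = temporal_average_error(8, animated_seed=False)
animated_err = temporal_average_error(8, animated_seed=True)
print(f"static seed RMS error:   {static_err:.3f}")
print(f"animated seed RMS error: {animated_err:.3f}")
```

A real temporal denoiser also has to deal with motion, which this sketch ignores entirely, so it only illustrates why a locked noise pattern could make the job harder.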

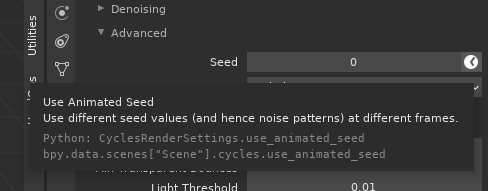

In your test, it’s apparent that you have your noise pattern locked, which I suspect decreases efficiency of the temporal denoising. I could be wrong, but I would still suggest you do the test again with this enabled:

Note: I’m not YAFU.

I’m planning to do a test with both animated noise and non-animated noise. Will report back with results and probably a video in a few hours.

(I did not share the results from my previous tests because the animations were really short: I was rendering 3 animations at once, all with long render times, and wanted to test OptiX temporal denoising quickly.) However, since the “Classroom” scene is relatively quick to render, I should be able to generate a long animation quickly.

The Classroom scene has a random noise pattern by default (animated the old-school way; there was no little button for a random seed back then). I have tried both static and random noise patterns, and I have not found much difference in the OptiX temporal denoising results (at least in my limited test at just 50 samples).

The mistake in the video was my fault. When I created the video in Kdenlive I got confused and used the noisy output with the static noise pattern, but all the other comparisons there use the random noise pattern.

If you have fast hardware, a test with a sample count suitable for production would be good, where the scene without a denoiser applied contains very little noise, only residual noise. From my test people may come to believe that there are not many differences and that the temporal denoiser is not doing a very good job, but I think it is doing a good job; we just can’t ask for miracles with only 50 samples.
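For anyone scripting these comparisons rather than clicking the button, here is a minimal sketch of toggling the animated seed from Python. `scene.cycles.use_animated_seed` is the Cycles property behind the little clock button next to Seed; the `toggle_animated_seed` helper and the stand-in scene object are my own additions so the snippet also runs outside Blender.

```python
from types import SimpleNamespace

def toggle_animated_seed(scene, animated=True):
    """Switch Cycles between a per-frame seed and a locked noise pattern."""
    scene.cycles.use_animated_seed = animated
    return scene.cycles.use_animated_seed

try:
    import bpy  # only importable inside Blender
    scene = bpy.context.scene
except ImportError:
    # Hypothetical stand-in so the sketch can be tried outside Blender.
    scene = SimpleNamespace(cycles=SimpleNamespace(use_animated_seed=False))

toggle_animated_seed(scene, True)
print("animated seed:", scene.cycles.use_animated_seed)
```

Rendering the same frame range once with `True` and once with `False` gives the two noise variants being compared here.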

By the way, I still wonder if the “flow” output we are using is correct.

Edit:

One important thing when you render with the OptiX denoiser enabled in the Render tab and then want to compare against the animation without temporal denoising: remember to enable Color+Albedo+Normal in the Layers tab.

By the way, I think that maybe it would be better if that option is moved to the Render tab as it is for Viewport.

That’s interesting. I’d expect it to make at least some difference

I think the messages about the NLM denoiser being planned for deprecation should have stayed in the Cycles requests thread.

Sorry if that was not possible and I complicated things by requesting to move OptiX temporal denoising discussion to another thread.

Anyway, I couldn’t reach a definitive conclusion about the advantages of random versus static noise patterns with my limited test at only 50 samples.

So I just rendered out a set of four animations of the classroom at 100 samples

- Static seed

- Animated seed

- Static seed (but the camera is rotated)

- Animated seed (but the camera is rotated)

If you’re wondering why I ran those last two tests (rotated camera), it’s because I read something while looking at the documentation for Nvidia’s Iray and it gave me the random thought to give this a try.

After I’ve denoised these scenes (plus some other tests where the flow pass is just black), I will probably post results and re-render the scene at 300 samples overnight (it’s late here, so I’ll go to bed soon).

Sorry, @MetinSeven changed the topics over. Maybe they could help?

So that you don’t waste time figuring it out like I did: in the Classroom scene, “Compositing” is disabled in the Output tab under Post Processing.

In my case, I eliminated default Compositing nodes from the Classroom scene, and only configured the necessary output layers for the test there.
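A small sketch of doing that setup from Python, in case it saves someone the same hunt. `scene.render.use_compositing` is the Post Processing checkbox mentioned above and `view_layer.use_pass_vector` enables the Vector pass; treating the Vector pass as the thing to feed in as “flow” is an assumption on my part, and the `prepare_denoise_test` helper and stand-in objects are only there so the snippet runs outside Blender too.

```python
from types import SimpleNamespace

def prepare_denoise_test(scene, view_layer):
    """Enable compositing and the Vector pass on a scene and view layer."""
    scene.render.use_compositing = True  # Output tab > Post Processing > Compositing
    view_layer.use_pass_vector = True    # Vector pass (assumed source of the flow data)
    return scene.render.use_compositing, view_layer.use_pass_vector

try:
    import bpy  # only importable inside Blender
    scene, view_layer = bpy.context.scene, bpy.context.view_layer
except ImportError:
    # Hypothetical stand-ins so the sketch can be tried outside Blender.
    scene = SimpleNamespace(render=SimpleNamespace(use_compositing=False))
    view_layer = SimpleNamespace(use_pass_vector=False)

result = prepare_denoise_test(scene, view_layer)
print("compositing, vector pass:", result)
```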

Hi @YAFU,

The denoising sub-discussion started to lead its own life in the general Cycles Requests thread, causing a bit too much noise there (pun intended). I think it’s more convenient for the Cycles devs to read the denoising posts in this separate thread than as a sub-discussion being tucked away into the many pages of the general Cycles Requests thread.

Thanks for the heads up. I keep forgetting a lot of the demo scenes have compositing disabled.

Okay, it turns out the tests are taking longer than expected. I hope to have results soon… but I created a bunch of little off-shoot tests as I went (and I now have 100GB of EXRs from 20 different denoising tests).

If you’re wondering what the tests are, they are as follows:

- Static noise

- Static noise with the camera rotated

- Animated noise

- Animated noise with the camera rotated

With each of these I rendered the scene with:

- OIDN (Colour+Albedo+Normal)

- OptiX 7.2 built into Blender (Colour+Albedo+Normal)

- OptiX 7.3 temporal denoising

- OptiX 7.3 temporal denoising but the flow pass is black (to see what happens without flow)

- OptiX 7.3 temporal denoising but the flow pass is just a transparent image (just in case black means something in flow data and OptiX can see transparency?)
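The two lists above multiply out to 4 × 5 = 20 combinations, which matches the 20 tests mentioned earlier. A short sketch that enumerates them (the labels are just shorthand for the list items, not file names or settings from the actual renders):

```python
from itertools import product

scene_variants = [
    "static noise",
    "static noise, camera rotated",
    "animated noise",
    "animated noise, camera rotated",
]
denoisers = [
    "OIDN (Colour+Albedo+Normal)",
    "OptiX 7.2 built into Blender (Colour+Albedo+Normal)",
    "OptiX 7.3 temporal",
    "OptiX 7.3 temporal, black flow pass",
    "OptiX 7.3 temporal, transparent flow pass",
]

# Every scene variant is paired with every denoiser setup.
test_matrix = list(product(scene_variants, denoisers))
for index, (variant, denoiser) in enumerate(test_matrix, start=1):
    print(f"{index:2d}. {variant} | {denoiser}")
print(len(test_matrix), "tests in total")
```

With about 100GB of EXRs across those 20 tests, that averages out to roughly 5GB per test.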

I probably won’t share the video for many of these tests, they’re primarily there for my own observation. But I’ll share results soon (hopefully…)

I’ve just started rendering the 300 sample version of the scene. Will post information (and a video?) when it’s finished.

Sorry, my video editing software keeps crashing and it’s getting really late. I’m unable to share a video now, so I’ll just write up a quick few notes:

- Temporally denoising the animation with black or transparent flow data didn’t seem to have much of an impact on the temporal denoising. It may be more apparent in different scenes with different sample counts and amounts of motion.

- My test with the rotated camera didn’t show any noticeable changes. I didn’t expect anything noticeable to happen with it but I just wanted to test to make sure.

- As you would guess, OIDN and standard OptiX are not temporally stable at 100 samples at 1920x1080 in this classroom scene. OptiX temporal denoising helps, but there are still areas of temporal instability. Hopefully the 300 sample render will help?

No problem. There is no rush.

Thanks for your tests and notes!