Guys, relax a bit

I think like 15 different people sent me links to this video over the last two days. While it definitely is an interesting paper, I think the video is waay too optimistic and uncritical.

Yes, from a research standpoint this is a great algorithm. But reading through the threads about it, everyone seems to think that it is the holy grail of rendering that will give you perfect images within seconds and magically solve everything that is wrong with renderers. That is NOT the case.

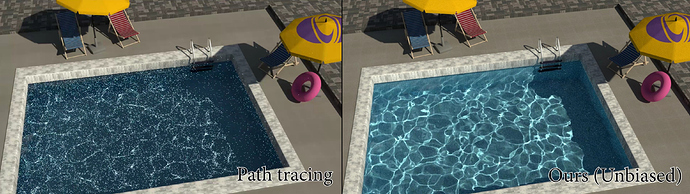

First of all: this is only about caustics. Nothing else. It will not make any difference to anything that is not a caustic. If you currently have caustics turned off in Cycles, this won’t make anything faster or less noisy.

Beyond that, this algorithm is far from practical for production rendering. Look at the example scenes: notice how they consist almost entirely of objects that cast caustics? That’s because this algorithm works by picking a point on an object and then trying to construct a specular manifold path connecting it to a light source. That’s easy if your scene contains only caustic-casting objects, but not so much in a realistic use case.
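To make “construct a specular manifold connecting to a light source” a bit more concrete, here is a heavily simplified 2D toy, not the paper’s actual solver: one curved mirror, one shading point and one point light, all with made-up coordinates. Starting from a seed point on the mirror, a Newton iteration moves along the surface until the half-vector lines up with the surface normal (the law of reflection). If it doesn’t converge, or converges to a point outside the mirror, the connection fails and the work is wasted. The names (`manifold_walk`, `constraint`, `extent`, the curvature `K`) are hypothetical and only exist for this illustration.

```python
import math

# Hypothetical 2D toy scene: a convex mirror y = K * u^2 spanning |u| <= extent,
# a shading point X and a point light L, both above the mirror.
X = (-1.0, 2.0)
L = (2.0, 3.0)
K = -0.2  # assumed curvature of the mirror


def surface(u):
    """Point on the mirror curve at parameter u."""
    return (u, K * u * u)


def normalize(v):
    n = math.hypot(v[0], v[1])
    return (v[0] / n, v[1] / n)


def tangent(u):
    """Unit tangent of the mirror curve at parameter u."""
    return normalize((1.0, 2.0 * K * u))


def constraint(u):
    """Reflection constraint: the (unnormalized) half-vector between the
    directions towards X and towards L must have no tangential component."""
    p = surface(u)
    wx = normalize((X[0] - p[0], X[1] - p[1]))
    wl = normalize((L[0] - p[0], L[1] - p[1]))
    h = (wx[0] + wl[0], wx[1] + wl[1])
    t = tangent(u)
    return h[0] * t[0] + h[1] * t[1]


def manifold_walk(u_seed, extent=1.0, max_iters=20, tol=1e-10, eps=1e-6):
    """Newton iteration from a seed point on the mirror.

    Returns the parameter u of a valid reflection point, or None if the
    walk fails (no convergence, zero derivative, or point off the mirror).
    """
    u = u_seed
    for _ in range(max_iters):
        c = constraint(u)
        if abs(c) < tol:
            return u if abs(u) <= extent else None
        # Central finite difference instead of the analytic derivative.
        dc = (constraint(u + eps) - constraint(u - eps)) / (2.0 * eps)
        if dc == 0.0:
            return None
        u -= c / dc
    return None


u = manifold_walk(0.0)
print("reflection point:", surface(u) if u is not None else "connection failed")
```

Even in this toy, every shading point pays for several constraint evaluations before it knows whether a caustic path exists at all. A real implementation has to do this in 3D, through chains of refractive and reflective surfaces, and then still check visibility.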

For example, take a look at Figure 14 in the paper. It’s an equal-time comparison, and you’ll find that it shows 4000 samples of plain PT vs. 50 or so samples of SMS. That means that each SMS path is roughly 80x as expensive as a classic path (4000 / 50).

“Yeah, but the image is soo much cleaner at 50 samples, right?” Well, the caustic is. However, if this was e.g. a glass sitting on a table in a full ArchViz scene, the caustic would look great while the remainder of the scene would look, well, like it’s been rendered with 50 samples instead of 4000. Remember, SMS only helps with caustics.
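The arithmetic behind this, as a small sketch: at equal time, the sample-count ratio tells you how expensive one SMS path is compared to a plain path, and since Monte Carlo error scales with 1/sqrt(samples), it also tells you how much noisier everything that SMS doesn’t help with will be. This assumes the non-caustic parts are rendered exactly as before, so their per-sample variance is unchanged; the function names are just for illustration.

```python
import math


def equal_time_cost_ratio(baseline_spp, technique_spp):
    """At equal render time: how many baseline paths one technique path costs."""
    return baseline_spp / technique_spp


def noise_ratio(baseline_spp, technique_spp):
    """Monte Carlo standard error scales as 1/sqrt(spp), so this is how much
    noisier the technique's image is in regions it does not improve."""
    return math.sqrt(baseline_spp / technique_spp)


cost = equal_time_cost_ratio(4000, 50)
noise = noise_ratio(4000, 50)
print(f"one SMS path costs ~{cost:.0f} plain paths")  # prints ~80
print(f"non-caustic regions: ~{noise:.1f}x the noise (std. dev.)")  # prints ~8.9x
```

So in the ArchViz example, the table, walls and everything else would have almost 9x the noise (standard deviation) of the 4000-sample render.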

And on top of that, it seems like some people completely missed the “equal-time” part and now think that every render will look good in 10 seconds because it says “50 samples” and 50 samples are fast with Cycles’ current algorithm…

Even the paper says this:

> Determining when to use our method is another important aspect for future investigation. Attempting many connections that are ultimately unsuccessful can consume a large amount of computation.

That is what I described above: the glass on the table would only cast a noticeable caustic onto the table, but how is the algorithm supposed to know that? So it always has to try to connect a specular manifold through the glass, which will fail in 99% of the cases.
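A rough back-of-the-envelope model of what that failure rate means, as a sketch with purely hypothetical numbers (the 99% figure is the estimate from above, the attempt count and per-attempt cost are made up): if only 1% of connection attempts succeed, 99% of the connection work is thrown away, and you pay for about 100 attempts per useful caustic contribution.

```python
import math


def connection_budget(attempts, success_rate, cost_per_attempt):
    """Split the work spent on manifold connection attempts into total and
    wasted parts. All inputs are hypothetical; success_rate is the fraction
    of attempts that find a valid specular path to a light."""
    total = attempts * cost_per_attempt
    wasted = total * (1.0 - success_rate)
    attempts_per_success = 1.0 / success_rate if success_rate > 0 else math.inf
    return total, wasted, attempts_per_success


total, wasted, per_success = connection_budget(
    attempts=1_000_000, success_rate=0.01, cost_per_attempt=1.0
)
print(f"wasted fraction: {wasted / total:.0%}, attempts per success: {per_success:.0f}")
```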

Also, note that the paper explicitly describes this as a “path sampling technique”, not as a rendering algorithm, because the authors themselves know that this is “only” a building block for future research and not something that is immediately usable.

Building blocks like this are important, but turning them into a practical rendering algorithm is another massive challenge. It’s much more likely that it will first be used for much more limited applications. For example, I remember reading about some company having custom integrator code for light transport in eyes, since caustics etc. are important to get the look just right for VFX. That’s a much more limited and therefore more practical use case, so there’s a good chance that whoever is working on that is taking a close look at this paper right now.

It’s not a question of how hard some algorithm is - I could easily implement a basic form of this, or SPPM, or VCM, or whatever in Cycles in a week or so. The problem is that Cycles is a production renderer, and that comes with expectations.

Everything we include has to work with live preview rendering, GPU rendering, ray visibility options, arbitrary surface materials, arbitrary light materials, volumetrics, DoF and motion blur, tiled rendering, denoising and so on - that’s not even close to the full list. Every single point I mentioned above is a complication/challenge when implementing VCM, for example.

It’s honestly impressive to see just how much attention this one paper got from this video. There are many more papers out there showing comparable improvements for some special case, but they didn’t get nearly the same attention.

Don’t get me wrong, the paper is impressive and a great achievement. And I like the YouTube channel in question, but one clear problem is that it’s purely hype and not a pragmatic look at the topic. The number of times I’ve explained over the last few days why we can’t “just implement this and be the most advanced renderer in the world 100 lightyears ahead of everyone else”…

So, here’s a somewhat lengthy explanation that people will hopefully see.