Based on the amount of confusion the mesh “scale” mechanics have caused me personally and thousands of other people, I can safely say that it is the worst thing that has happened to Blender. So why not get rid of it?

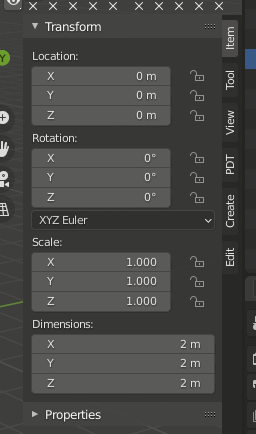

Let’s say we have a cube 1m x 1m x 1m.

When you increase the width of that cube to 2m, the only thing that should happen is that the cube now has the following properties: 2m x 1m x 1m. That makes perfect sense, right? But instead Blender creates some weird abstraction called “scale” that is monkey-wrenched onto the mesh data in some magical way that no one understands (judging by the amount of confusion it has raised on the internet).
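For anyone trying to follow what is going on here, below is a rough sketch of how the two numbers seem to relate. It is plain Python, not the `bpy` API: `SketchObject`, `mesh_size`, and `dimensions` are hypothetical names made up for illustration. The assumption is that the size shown in object mode is the bounding box of the raw mesh multiplied by the object's scale, while the mesh data itself stays untouched.

```python
# Hypothetical stand-in for a Blender object; this is NOT the bpy API.
from dataclasses import dataclass


@dataclass
class SketchObject:
    verts: list                      # mesh data: local-space vertex coordinates
    scale: tuple = (1.0, 1.0, 1.0)   # object-level scale, stored separately

    def mesh_size(self):
        """Bounding-box size of the raw mesh data, ignoring object scale."""
        return tuple(
            max(v[i] for v in self.verts) - min(v[i] for v in self.verts)
            for i in range(3)
        )

    def dimensions(self):
        """Assumed object-mode size: mesh size multiplied by object scale."""
        return tuple(abs(s) * d for s, d in zip(self.scale, self.mesh_size()))


# A 1m x 1m x 1m cube centred on the origin.
cube_verts = [
    (x, y, z)
    for x in (-0.5, 0.5)
    for y in (-0.5, 0.5)
    for z in (-0.5, 0.5)
]
cube = SketchObject(cube_verts)

# Widening the cube in object mode changes the scale, not the vertices.
cube.scale = (2.0, 1.0, 1.0)
print("mesh size:", cube.mesh_size())
print("dimensions:", cube.dimensions())
```

Under that assumption the same cube carries two different sizes at once, which would explain why tools that read the mesh data and tools that read the object disagree.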

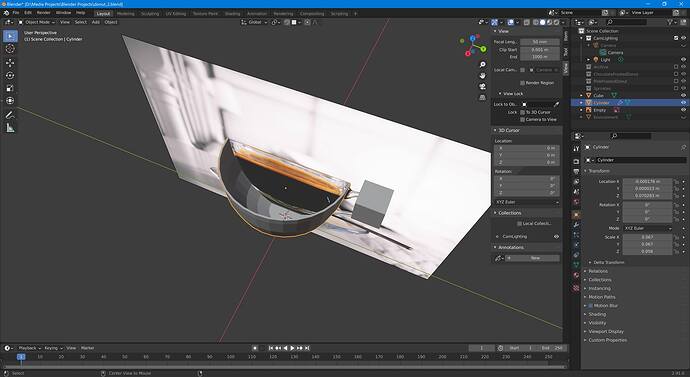

I wanted to use Blender for precise engineering, but I gave up after spending several hours figuring out why some of the meshes in my project had the wrong properties and other meshes didn’t.

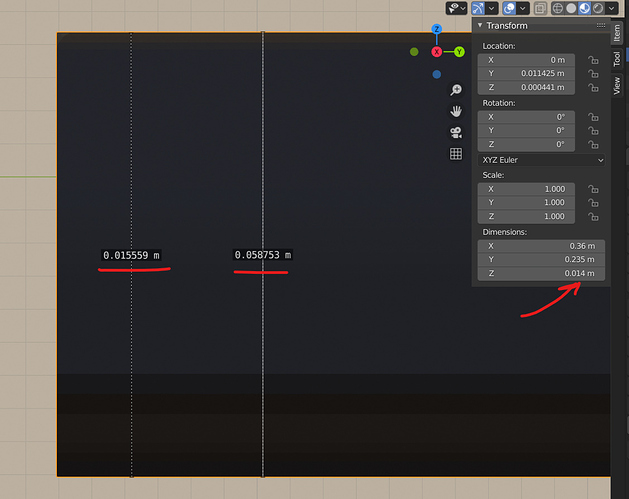

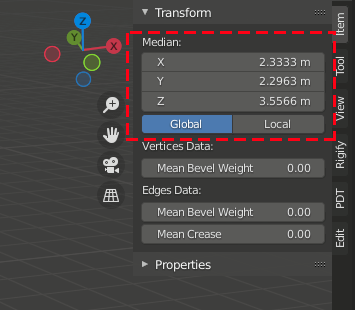

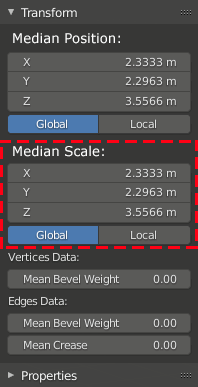

I mean, explain this nonsense:

This image demonstrates why I think this “feature” should be removed from Blender. I added a ruler in object mode and then another one in edit mode, and I don’t know which one to believe. This block should be about 0.015m in height, but after manipulating different properties of the object it now shows different, confusing numbers everywhere.

I don’t know why we have to do these magic manipulations, but I applied the scale to every object after manipulating it, and it helped in some cases but didn’t do anything in others. And how am I supposed to trace it back to the beginning and figure out how this abstraction works and what I need to do to fix this problem that shouldn’t exist in the first place?
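As far as I can tell, “applying” the scale (Object > Apply > Scale) bakes the object scale into the vertex coordinates and resets the scale to 1. Here is a minimal sketch of that idea in plain Python. It is not Blender's actual implementation: `apply_scale` and `mesh_size` are hypothetical helpers, and the 0.015 value is just the block height from the example above, used as an illustrative number.

```python
# Hypothetical helpers for illustration; this is NOT Blender's implementation.

def mesh_size(verts):
    """Bounding-box size of a list of (x, y, z) vertices."""
    return tuple(
        max(v[i] for v in verts) - min(v[i] for v in verts)
        for i in range(3)
    )


def apply_scale(verts, scale):
    """Bake the object scale into the vertices and return a reset scale."""
    baked = [tuple(c * s for c, s in zip(v, scale)) for v in verts]
    return baked, (1.0, 1.0, 1.0)


# A unit cube whose object scale squashes it to 0.015 along Z.
verts = [
    (x, y, z)
    for x in (-0.5, 0.5)
    for y in (-0.5, 0.5)
    for z in (-0.5, 0.5)
]
scale = (1.0, 1.0, 0.015)

size_before = mesh_size(verts)            # raw mesh still measures as a unit cube
verts, scale = apply_scale(verts, scale)  # bake scale into the mesh data
size_after = mesh_size(verts)             # raw mesh now matches the visible size

print("before:", size_before)
print("after:", size_after, "scale:", scale)
```

If this model is right, applying the scale only changes anything for objects whose scale is not already 1, which might be why it appears to help in some cases and do nothing in others.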

When I change the size of a mesh I should expect Blender to just change the size of that mesh. So why is Blender creating a magical unicorn instead? And why do we all have to spend hours learning how to use that magical unicorn?

If I want to “scale” a 1m x 1m x 1m cube and make it twice as big, I should change its width, length, and height to 2m x 2m x 2m, and after that all the modifiers, textures, UVs, measuring tools(!), etc. should simply update and reflect that change, right? If I change the length property of a mesh, Blender should change the length of that mesh. It cannot be any easier.

I love Blender, but this feature makes me angry both as a developer and as a Blender user.

Pros of removing “scale” mechanics from Blender:

- Objects will have precise properties in any context, without any invisible magic layers on top of them

- It will simplify the codebase and increase the rate of development

- It will remove an unnecessary, confusing layer of abstraction and decrease the number of bugs

- It will save developers and digital artists millions of hours figuring out how this layer of abstraction works

- Blender will become usable for precise engineering