To demonstrate both of the features I’d like to suggest for Blender, here’s Quixel’s Jack McKelvie creating a beautiful rendition of Halo’s Blood Gulch in Unreal Engine 4 using them:

Create Halo’s Blood Gulch in UE4

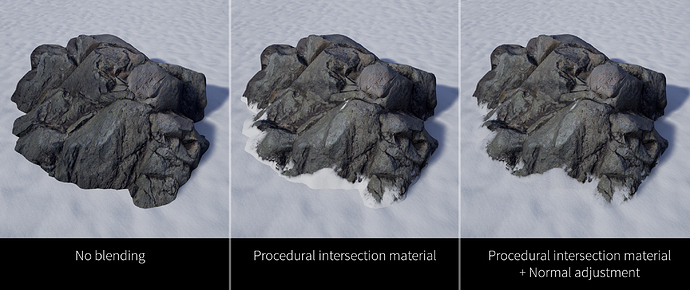

1) Pixel Depth Offset

When two meshes intersect, this adds dynamic material blending and normal smoothing across the seam for a natural transition. McKelvie uses it numerous times in the video: for grass and dirt intersecting the ground, the bunker intersecting the ground, the mountains intersecting the Halo ring, the cliffs intersecting each other, and more.

If this were implemented in Blender, it would make it simple to create outdoor environments with natural weathering and accumulation, without obvious hard edges. We’d also need controls to adjust the blending amount separately for materials and for normals.
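To make the idea of separate blend controls concrete, here’s a minimal Python sketch. To be clear, this is not how Unreal implements it. I’m assuming a simplified model where the shader somehow knows the distance from the shaded point to the other surface, and every name here (`intersection_blend`, `blend_surface`, `material_blend`, `normal_blend`) is made up for illustration.

```python
def smoothstep(edge0, edge1, x):
    """Standard smoothstep: 0 at or below edge0, 1 at or above edge1."""
    t = max(0.0, min(1.0, (x - edge0) / (edge1 - edge0)))
    return t * t * (3.0 - 2.0 * t)


def intersection_blend(distance, blend_distance):
    """Weight of the *other* surface's look at this point.

    1.0 right at the seam, fading smoothly to 0.0 at blend_distance.
    A blend_distance of 0 means no blending at all (today's hard edge).
    """
    if blend_distance <= 0.0:
        return 0.0
    return 1.0 - smoothstep(0.0, blend_distance, distance)


def lerp(a, b, t):
    """Component-wise linear interpolation between two tuples."""
    return tuple(x + (y - x) * t for x, y in zip(a, b))


def normalize(v):
    """Return v scaled to unit length (v unchanged if it has zero length)."""
    length = sum(c * c for c in v) ** 0.5
    return v if length == 0.0 else tuple(c / length for c in v)


def blend_surface(color, normal, other_color, other_normal, distance,
                  material_blend=0.5, normal_blend=0.5):
    """Blend color and normal toward the other surface near the seam.

    material_blend and normal_blend are the two hypothetical knobs: the
    distance over which each property fades back to the mesh's own look.
    """
    w_color = intersection_blend(distance, material_blend)
    w_normal = intersection_blend(distance, normal_blend)
    blended_color = lerp(color, other_color, w_color)
    blended_normal = normalize(lerp(normal, other_normal, w_normal))
    return blended_color, blended_normal
```

With something like this, a rock poking out of the ground would take on the ground’s color and normal right at the seam and fade back to its own material over the chosen distance.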

I think this is critical for Eevee’s lasting success. Real-time homemade CG films could well be the future of independent moviemaking. Environments need to be believable for this vision to be realized.

2) Layered Material Painting

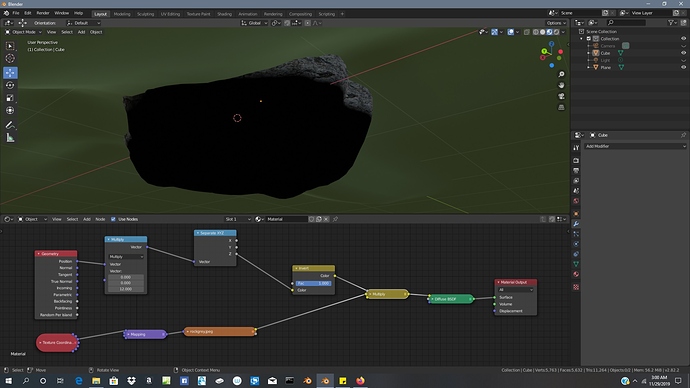

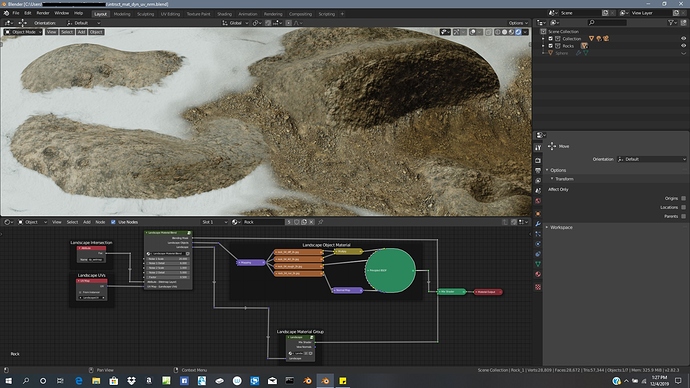

Simple enough concept. For a single mesh, the shader engine can layer materials (or layer the node groups within a single material), so that in texture painting mode you can arbitrarily paint those materials/groups across the surface of the mesh, such as for a landscape.
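As a rough mental model of what I mean by layering, here’s a small Python sketch. It assumes each layer has its own painted mask and that layers are composited bottom to top, which is the same arithmetic a chain of two-input mixes performs. The function names are hypothetical, not an existing Blender API.

```python
def layer_weights(masks):
    """Final contribution of each layer at one texel.

    masks[i] is the painted mask value (0..1) of layer i. Layer 0 is the
    base layer; its mask is ignored and it is treated as fully covering.
    The returned weights always sum to 1.
    """
    weights = [1.0]
    for m in masks[1:]:
        m = max(0.0, min(1.0, m))
        # Painting a new layer on top scales down everything beneath it.
        weights = [w * (1.0 - m) for w in weights]
        weights.append(m)
    return weights


def composite(colors, masks):
    """Blend per-layer RGB colors using the painted masks."""
    weights = layer_weights(masks)
    return tuple(sum(w * c[i] for w, c in zip(weights, colors))
                 for i in range(3))
```

The point of a layer node would be that the user only ever touches the masks by painting, and the engine handles this bookkeeping for however many layers exist.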

Right now, this is ridiculously difficult in Blender, requiring a cascade of two-input Mix Shader nodes, each with its own map plugged into the Factor input. In the following video, we see that Unreal allows shader groups to be compiled into a dynamically expanding layer node, which then exposes all of those layers for direct painting onto the surface, with blending techniques such as Height Lerp:

Texturing a Landscape in UE4 (Unreal Engine 4)
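For anyone unfamiliar with height-based blending, here’s a minimal Python sketch in the spirit of Height Lerp. It is not Unreal’s exact formula, just one simple way to let a layer’s height map shape the transition. The function name and the `contrast` parameter are my own assumptions.

```python
def height_lerp_weight(mask, height, contrast=0.2):
    """Weight (0..1) of the painted layer at one texel.

    mask:     how much of the layer was painted here (0..1)
    height:   the layer's height map value here (0..1)
    contrast: width of the transition; smaller values give a sharper edge

    At mask 0 the layer is hidden everywhere, at mask 1 it is shown
    everywhere, and in between its highest parts appear first.
    """
    contrast = max(contrast, 1e-6)  # avoid division by zero
    # Height above which the layer starts to show; slides down as mask grows.
    threshold = (1.0 - mask) * (1.0 + contrast) - contrast
    return max(0.0, min(1.0, (height - threshold) / contrast))
```

Compared with a plain linear fade, a half-painted stroke here reveals the tall parts of the layer’s height map first and leaves the low parts hidden, instead of a uniform 50% mix.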

This would allow much, MUCH simpler handmade terrain creation.

__

These two features are, in my opinion, critical to Blender and Eevee competing with Unreal Engine in the real-time CG film space. Seeing that Blood Gulch rendition, I’m sure many Blender users would be happy to see more scenes like that, both as wallpapers and as potential environments in which characters can interact for film and TV.

P.S. – Weight painting vertices to place particle objects is nowhere near as simple or performant as McKelvie painting the grass in that scene. Why can’t we have that?