Hello everyone,

I’m BD3D. For almost 7 months I’ve been working nearly every day with Blender’s hair particle system, writing a commercial Python add-on called “Scatter”. It is meant to facilitate and automate the scattering workflow for artists, a bit like what Hard-Ops does for hard-surface modeling, but for scattering.

Since the hair system will be redesigned soon, I’m creating this topic to highlight flaws of the current hair system, mainly in the area of hair distribution. I hope these flaws can be corrected in the new design, as that would benefit everyone.

1// Particle Hair’s Biggest Flaw: Inaccurate Positions in the Viewport.

One of the most problematic things about the current hair system is its inaccuracy.

If anything influences the hair density (for example a vertex group or, as in the image below, a texture), the hair positions displayed in the viewport are wrong.

Note that the display is only refreshed when:

- toggling edit mode on/off on the emitter
- changing the active material of the particle emitter
- hiding/unhiding a particle system

(Even from Python, the particle matrix API is affected by this bug.)

Let’s make sure that the viewport is accurate all the time.

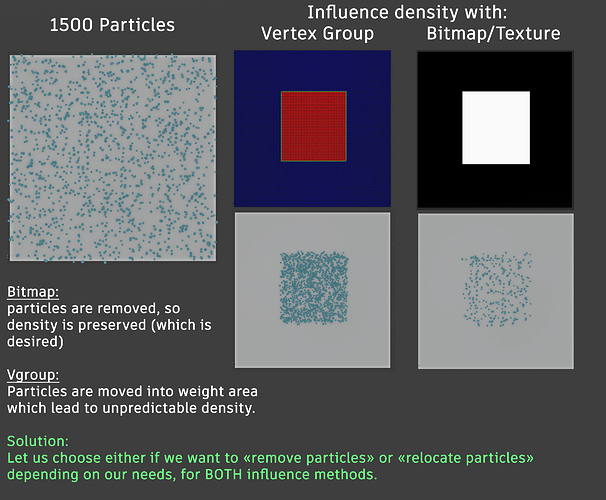
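To make “accurate all the time” concrete, here is a minimal sketch assuming a simple input-keyed cache. `InputKeyedCache` and `toy_positions` are hypothetical names, not Blender API, and this is not how Blender’s depsgraph is implemented. The idea: particle positions are a pure function of their inputs, so if the density weights are part of the cache key, repainting them always triggers a recompute and stale positions can never be displayed.

```python
import random
from typing import Any, Callable, Hashable, Optional, Tuple


class InputKeyedCache:
    """Hypothetical cache: recomputes whenever ANY input changes,
    including the density influence (vertex group or texture weights)."""

    def __init__(self, compute: Callable[..., Any]):
        self._compute = compute
        self._key: Optional[Tuple[Hashable, ...]] = None
        self._value: Any = None
        self.recomputes = 0  # counts how often the result was rebuilt

    def get(self, *inputs: Hashable) -> Any:
        # Any difference in the inputs (seed, weights, ...) invalidates the cache.
        if inputs != self._key:
            self._value = self._compute(*inputs)
            self._key = inputs
            self.recomputes += 1
        return self._value


def toy_positions(seed: int, weights: Tuple[float, ...]) -> list:
    """Toy stand-in for distribution: keep cell i with probability weights[i]."""
    rng = random.Random(seed)
    return [i for i, w in enumerate(weights) if rng.random() < w]
```

From the outside, the refresh problem described above looks as if the density influence were missing from such a key, so that only unrelated events (edit mode, material change, hide/unhide) rebuild the positions.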

2// Vgroup/Texture Density Inconsistencies.

When artists want to control the distribution area non-destructively, they can either use a hand-painted vertex group or create a procedural texture.

But here’s the problem: the two solutions have different distribution behaviors. The texture removes particles, while the vertex group relocates them. Let’s add an option to choose one behavior or the other for BOTH of them (or get rid of the relocation behavior for vertex groups, as it mostly annoys users in both grooming and scattering workflows).

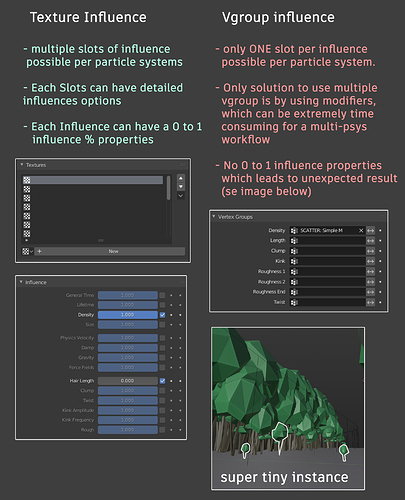
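The difference between the two behaviors can be illustrated with a toy model on the unit square. This is a minimal sketch, not Blender’s actual distribution code: `distribute_remove` and `distribute_relocate` are hypothetical names, and the density is assumed to be any function returning a weight in [0, 1].

```python
import random
from typing import Callable, List, Tuple

Point = Tuple[float, float]
Density = Callable[[float, float], float]  # returns a weight in [0, 1]


def distribute_remove(count: int, seed: int, density: Density) -> List[Point]:
    """'Remove' behavior: scatter `count` uniform candidates, then drop each
    one with probability 1 - weight. Low-weight areas lose particles, so the
    final count shrinks."""
    rng = random.Random(seed)
    kept = []
    for _ in range(count):
        x, y = rng.random(), rng.random()
        if rng.random() < density(x, y):
            kept.append((x, y))
    return kept


def distribute_relocate(count: int, seed: int, density: Density,
                        max_tries: int = 100_000) -> List[Point]:
    """'Relocate' behavior: keep drawing candidates (rejection sampling) until
    `count` are accepted, so the full count is packed into weighted areas."""
    rng = random.Random(seed)
    kept = []
    tries = 0
    while len(kept) < count and tries < max_tries:
        tries += 1
        x, y = rng.random(), rng.random()
        if rng.random() < density(x, y):
            kept.append((x, y))
    return kept
```

With the same density map, the first function returns fewer particles than requested, while the second returns the full count squeezed into the weighted area. The proposed option amounts to letting the user pick either behavior for either influence source.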

3// Vgroup/Texture General Inconsistencies.

Whether the user chooses a texture or a vertex group as an influence, the basic principle stays the same: areas with a weight of 1 (white areas) have full influence, which diminishes gradually down to no influence in areas with a weight of 0 (black areas), and this holds for each of the influence types available per particle system.

So why aren’t the workflow and properties offered for these two influence methods the same?

The influence properties should always be unified when possible, as the task is nearly identical for both methods.
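As a sketch of what unification could look like, assuming a hypothetical `Influence` type: both a vertex group and a texture reduce to a function returning a weight in [0, 1] for a point, so properties such as a factor or an invert toggle only need to be defined once. `vertex_group_sampler` and `checker_texture` are illustrative stand-ins, not Blender API.

```python
import math
from dataclasses import dataclass
from typing import Callable, Sequence, Tuple

Point = Tuple[float, float, float]


@dataclass
class Influence:
    """Hypothetical unified influence: identical properties whether the
    weight comes from a vertex group or from a texture."""
    sample: Callable[[Point], float]  # weight (vgroup) or intensity (texture)
    factor: float = 1.0
    invert: bool = False

    def evaluate(self, p: Point) -> float:
        w = min(max(self.sample(p), 0.0), 1.0)  # clamp to [0, 1]
        if self.invert:
            w = 1.0 - w
        return w * self.factor


def vertex_group_sampler(vertices: Sequence[Point],
                         weights: Sequence[float]) -> Callable[[Point], float]:
    """Stand-in for a vertex group: weight of the nearest vertex."""
    def sample(p: Point) -> float:
        nearest = min(range(len(vertices)),
                      key=lambda k: sum((a - b) ** 2 for a, b in zip(vertices[k], p)))
        return weights[nearest]
    return sample


def checker_texture(p: Point) -> float:
    """Stand-in for a procedural texture: white (1) / black (0) checker."""
    return 1.0 if (math.floor(p[0]) + math.floor(p[1])) % 2 == 0 else 0.0
```

Under this design, a particle system could hold one such `Influence` per influence type (density, length, and so on), and the UI would expose the same fields regardless of the source.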

4// A Few Words about the Link between Hair Combing and Scattering.

This topic is mainly aimed at the hair system in general, leaving scattering aside, except for a few things to say about the link between scattering and hair particles.

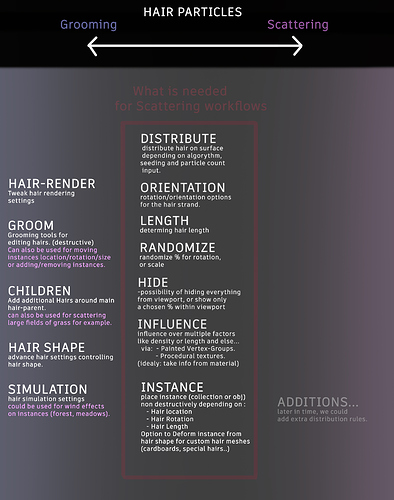

Some people want the hair particle system to be used exclusively for creating fur and hair, and nothing else. I think this is a bad idea for multiple reasons:

- The hair system needs a scattering engine (to distribute the hair procedurally on surfaces and to control orientation, scale, distribution algorithm and seed, randomization…).
- The hair system needs to support instancing anyway (for hair cards, custom hair meshes…).
- All flexibility would be lost. A non-flexible system is a bad idea in a tool such as Blender. Blender is about “blending”: tons of people use it in an extremely wide variety of ways, and new features that are not flexible enough to be used in different ways don’t make much sense in a melting-pot tool like Blender.
- Creating a new scattering workflow designed for non-destructive object instancing would amount to re-creating a stripped-down copy of the current hair distribution engine. As the graph in this section shows, the scattering engine used by the hair system can serve a scattering workflow without any problem whatsoever. The current hair scattering engine already works quite well for simple scattering workflows, except perhaps for the instance rotation settings, which may need more precise options.

- Possible solution:

make the hair distribution engine its own universal engine, for example a modifier or a node (or even just some API) whose only purpose is to distribute any type of object, from a [location, rotation, scale] matrix, onto a desired target surface according to a set of distribution rules.
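A minimal sketch of such a universal engine, assuming the target surface is given as a list of triangles and the rules are a hypothetical `Rules` dataclass (count, seed, scale range, random Z rotation). It picks triangles weighted by area, samples a uniform point inside each using barycentric coordinates, and returns one (location, rotation, scale) entry per instance, which a modifier or node could then turn into a matrix. None of these names exist in Blender.

```python
import math
import random
from dataclasses import dataclass
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Triangle = Tuple[Vec3, Vec3, Vec3]
Instance = Tuple[Vec3, Vec3, float]  # (location, euler rotation, uniform scale)


@dataclass
class Rules:
    """Hypothetical set of distribution rules."""
    count: int
    seed: int = 0
    scale_min: float = 1.0
    scale_max: float = 1.0
    random_z_rotation: bool = True


def _area(t: Triangle) -> float:
    # Half the length of the cross product of two edges.
    (ax, ay, az), (bx, by, bz), (cx, cy, cz) = t
    ux, uy, uz = bx - ax, by - ay, bz - az
    vx, vy, vz = cx - ax, cy - ay, cz - az
    cross = (uy * vz - uz * vy, uz * vx - ux * vz, ux * vy - uy * vx)
    return 0.5 * math.sqrt(sum(c * c for c in cross))


def _point_in_triangle(t: Triangle, rng: random.Random) -> Vec3:
    # Uniform sampling with the square-root barycentric trick.
    s, r2 = math.sqrt(rng.random()), rng.random()
    u, v, w = 1.0 - s, s * (1.0 - r2), s * r2
    a, b, c = t
    return tuple(u * a[i] + v * b[i] + w * c[i] for i in range(3))


def scatter(triangles: Sequence[Triangle], rules: Rules) -> List[Instance]:
    rng = random.Random(rules.seed)  # same seed -> same layout
    areas = [_area(t) for t in triangles]
    result = []
    for _ in range(rules.count):
        tri = rng.choices(triangles, weights=areas, k=1)[0]  # area-weighted pick
        location = _point_in_triangle(tri, rng)
        rot_z = rng.uniform(0.0, 2.0 * math.pi) if rules.random_z_rotation else 0.0
        scale = rng.uniform(rules.scale_min, rules.scale_max)
        result.append((location, (0.0, 0.0, rot_z), scale))
    return result
```

Because the output is plain data, the same engine could feed hair strands, instanced meshes, or any other object type; density influences like those discussed above would plug in as an extra rule.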


Best,

May I tag @DanielBystedt @sebbas?

Related:

https://developer.blender.org/T68981