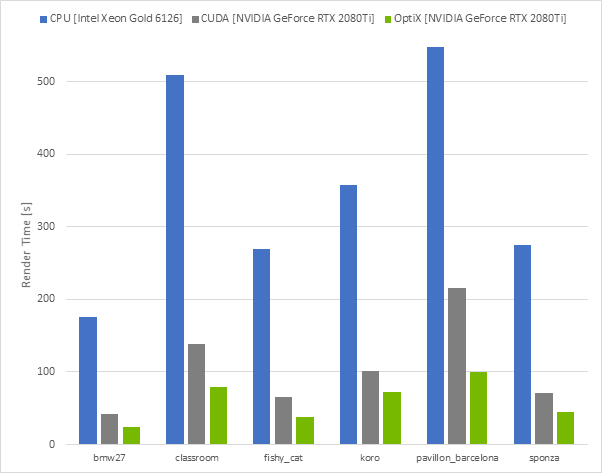

When reading the article [Accelerating Cycles using NVIDIA RTX — Blender Developers Blog](Accelerating Cycles using NVIDIA RTX), I took a look at the performance chart below:

Then I noticed that in every benchmark, rendering on RTX hardware (OptiX) still takes many seconds to complete.

Take bmw27, for example, which is the fastest to render:

It renders in ~45 seconds in CUDA, and in ~22 seconds in OptiX.

23 seconds is a really big difference when rendering something (considering it might be much bigger for longer renders), and I’m really happy RTX support is arriving for Blender.

However, my immediate reaction was that the OptiX times were below my expectations. But why?

Because with the same card, the so-called “RTX games” can run at 60 frames per second (1920 x 1080).

That means in 1 second, 60 images (frames) are rendered.

Yes, I’m aware these RTX games (except Quake RTX) use the hybrid approach (rasterization + ray tracing), so each game uses RTX hardware for specific purposes (Shadow of the Tomb Raider for shadows, Battlefield V for reflections, and so on…).

However, if we take the game Metro Exodus as an example, it uses real-time ray tracing for global illumination (which I believe is a compute-intensive task).

Yet it still averages more than 60 fps (1920 x 1080):

Source: Metro Exodus PC Performance Explored Including Ray Tracing

Also, there’s the NVIDIA Reflections demo, which can render around 48 frames in just 1 second (1920 x 1080):

That means:

- the ray-traced tech demo renders around 48 frames per second
- ray-traced game scenes render 64 frames per second (worst cases, according to the previous wccftech link)
- OptiX-powered Cycles renders 1 frame every ~22 seconds for the bmw27 demo
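
To put that gap in numbers, here is a quick back-of-the-envelope sketch using only the approximate figures quoted above. The function names are made up for illustration, and the comparison ignores that the workloads are very different (resolution, samples per pixel, scene complexity), so treat the ratios as rough orders of magnitude rather than a benchmark.

```python
# Approximate figures quoted in this post (not measured by me).
CYCLES_OPTIX_SECONDS = 22.0   # bmw27, one frame, OptiX
CYCLES_CUDA_SECONDS = 45.0    # bmw27, one frame, CUDA
DEMO_FPS = 48.0               # NVIDIA Reflections demo
GAME_FPS = 64.0               # Metro Exodus, worst case cited


def frame_time_ms(fps: float) -> float:
    """Time budget per frame, in milliseconds, at a given frame rate."""
    return 1000.0 / fps


def frames_in(seconds: float, fps: float) -> float:
    """How many real-time frames fit in the time of one offline render."""
    return seconds * fps


if __name__ == "__main__":
    print(f"OptiX vs CUDA speedup: {CYCLES_CUDA_SECONDS / CYCLES_OPTIX_SECONDS:.2f}x")
    print(f"Demo frame budget: {frame_time_ms(DEMO_FPS):.2f} ms")
    print(f"Game frame budget: {frame_time_ms(GAME_FPS):.3f} ms")
    print(f"Demo frames per Cycles frame: {frames_in(CYCLES_OPTIX_SECONDS, DEMO_FPS):.0f}")
    print(f"Game frames per Cycles frame: {frames_in(CYCLES_OPTIX_SECONDS, GAME_FPS):.0f}")
```

So in the time Cycles with OptiX renders one bmw27 frame, the demo would produce roughly 1,000 frames and the game roughly 1,400.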

My only guess is that the games and demos use ray tracing sparingly, and yet some of them (like the Reflections demo) look very realistic.

Is OptiX the same technology behind the demos and games? Or do they use a different approach?

I’d like to understand why both performances are so different, assuming both Metro Exodus and demos make use of ray tracing to some perceptible extent.

Thanks