I’m excited that texturing in Blender will get some love, but after reading this discussion I am deeply concerned. I’m really, really sorry for the harsh words, but unfortunately, in my opinion, this is a flawed design.

The problem with this proposal is that it tries to solve multiple different things at once. As a result, it may end up as the sum of all the limitations of various systems while being extremely hard to implement and maintain.

I think further discussion doesn’t matter unless we address these two quotes:

This may sound like a great feature, but in reality it’s an extreme limitation that will backfire. Hardwiring bakes and textures to meshes basically eliminates the ‘high-poly to low-poly’ workflow and other standard workflows (tiled textures, trim sheets, atlases). We are talking about the majority of texturing use cases in games and a significant chunk of animation/film production.

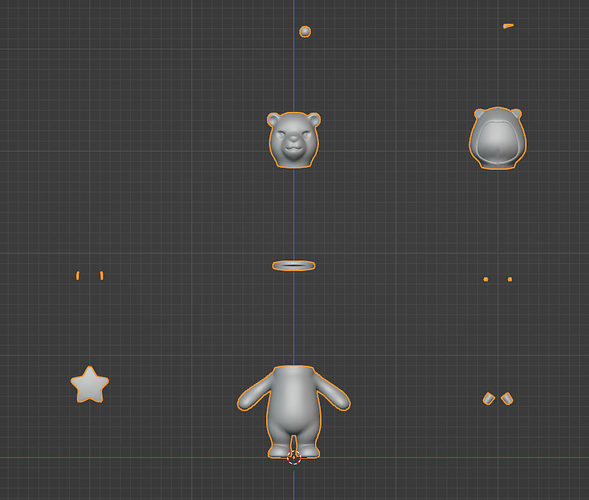

In a typical ‘high-poly to low-poly’ workflow there are many variants of the low-poly mesh. They share the same UVs, but their geometry is prepared for different stages of the pipeline.

To bake correct data maps (normals, AO, curvature…) we have to deal with rays overlapping on surfaces in close proximity and with intersections between geometry. It’s a craft; no baking system can, or ever will be able to, solve this automatically. We use “exploded” low-poly meshes and move the high-poly parts to the corresponding positions. Some programs allow using multiple low-poly meshes whose names match the high-poly meshes, so moving geometry is not needed. We often use cage meshes too. All of those meshes are used to create a single Texture Set or a few of them.
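To make the name-matching idea concrete, here is a minimal sketch of how such pairing could work. It assumes a hypothetical `_low`/`_high` suffix convention and a hypothetical `pair_by_name` helper; it is not any specific program’s implementation, just an illustration that each low-poly part only receives rays from the high-poly part sharing its base name, so nothing has to be exploded.

```python
from collections import defaultdict

# Hypothetical suffix convention; real tools typically let you configure these.
LOW_SUFFIX = "_low"
HIGH_SUFFIX = "_high"


def pair_by_name(mesh_names):
    """Group mesh names by base name, so a low-poly part is baked only
    against the high-poly part(s) that share its base name."""
    groups = defaultdict(lambda: {"low": [], "high": []})
    for name in mesh_names:
        if name.endswith(LOW_SUFFIX):
            groups[name[: -len(LOW_SUFFIX)]]["low"].append(name)
        elif name.endswith(HIGH_SUFFIX):
            groups[name[: -len(HIGH_SUFFIX)]]["high"].append(name)
        # Names with neither suffix (e.g. a cage) are ignored here.
    # Keep only groups that have both a low-poly and a high-poly side.
    return {base: pair for base, pair in groups.items() if pair["low"] and pair["high"]}
```

For example, `["barrel_low", "barrel_high", "lid_low", "lid_high", "stray_high", "cage"]` yields groups for `barrel` and `lid`; `stray_high` has no low-poly partner and is dropped.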

In texture authoring we arrange the low-poly geometry so we can see surfaces that are hard to reach and conveniently paint masks, select geometry, etc. (Note the duplicates sharing UVs.)

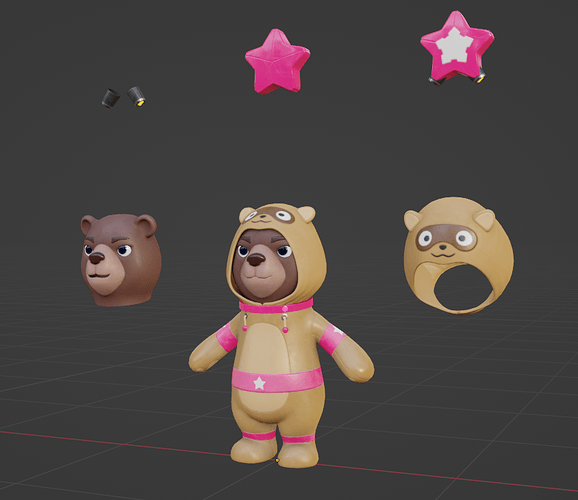

Final asset assembly may involve duplicating parts, geometry for different shaders, additional geometry with project-shared materials, detail maps applied in shaders, etc.

Those are the basics of a modern texturing workflow, nothing fancy at all.

And yet none of this will be possible with the proposed system if we hardwire textures to meshes.
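To illustrate the alternative, here is a minimal hypothetical data model (the `TextureSet` and `MeshUsage` classes and the `meshes_using` helper are invented for this example, not taken from the proposal or from Blender) in which texture sets live in a shared UV space and meshes only reference them. That one indirection is what lets bake, cage, paint-layout and final meshes all point at the same textures.

```python
from dataclasses import dataclass, field


@dataclass
class TextureSet:
    """Images authored in one shared UV layout; owned by no mesh."""
    name: str
    uv_map: str                                 # name of the shared UV layout
    images: dict = field(default_factory=dict)  # channel name -> image path


@dataclass
class MeshUsage:
    """A mesh that references a texture set; many meshes may share one."""
    mesh: str
    role: str         # e.g. "bake_low", "cage", "paint", "final"
    texture_set: str  # a reference by name, not ownership


def meshes_using(texture_set_name, usages):
    """Return every mesh that references the given texture set."""
    return [u.mesh for u in usages if u.texture_set == texture_set_name]
```

With one `TextureSet("hull", uv_map="UVMap")` and four `MeshUsage` entries (exploded bake mesh, cage, paint layout, final assembly) all pointing at `"hull"`, deleting or duplicating any single mesh leaves the textures untouched.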

I’m all for questioning workflows and researching novel approaches, but that’s not the case here. The current proposal doesn’t address today’s workflows (from other programs) and doesn’t present better alternatives.

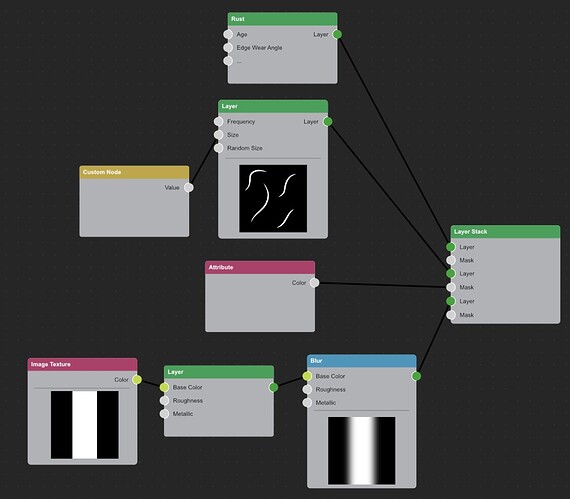

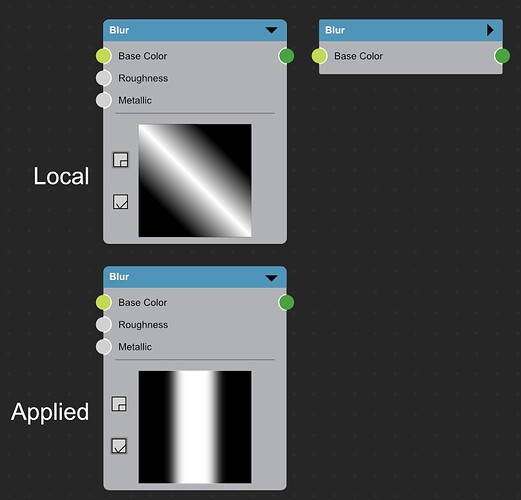

I think we need to step back and rethink the goals of this project. The layers functionality is fantastic, but apart from that, the system currently looks more like some kind of image caching solution for improved rendering speed than an actual texturing toolkit.

Again, sorry for being such a downer; frustration is probably speaking through me. I’ve been researching texturing applications and workflows a lot over the past 11 years, and I’m not particularly happy with their current state. I’d be in heaven if Blender challenged the status quo. Can I help with that?