In the mockups you can see that masks and modifiers are just nodes; they could be any kind of built-in node or custom node group.

See my reply here:

I understood that part. To be clear, what I mean is that the current way of working (which only one program allows) is to stack masks, and modifiers of those masks, inside a texture’s mask to achieve the desired effect. All of this happens inside the stack, not in the nodes (where, as I said, I understand that everything is possible).

Is this covered in the current design?

To give an example, this is my actual file with procedural textures. All of those masks and modifiers are only for the mask itself; they are not for the texture (or PBR channels).

If we cannot do this with the proposed design, it will oblige users to use nodes. Or does the design have a solution for this?

No screenshots like that please:

The plan is to support adding masks and modifiers from the layer stack UI. Such a system would not be limited to a handful of nodes; it should also be possible to add user-created node groups from there. Likely there would be some kind of asset tagging to indicate which node groups are suitable as masks or modifiers, to avoid seeing a long list of irrelevant node groups.
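As a rough illustration of the tagging idea, here is a minimal sketch in plain Python, not Blender's API. The `NodeGroupAsset` class, the `groups_for_role` helper, and the tag names `mask` and `modifier` are all hypothetical; the actual design may look quite different.

```python
from dataclasses import dataclass, field


@dataclass
class NodeGroupAsset:
    """Hypothetical stand-in for a node group asset with user-assigned tags."""
    name: str
    tags: set = field(default_factory=set)


def groups_for_role(assets, role):
    """Return the names of assets tagged with `role`, sorted for a stable menu order."""
    return sorted(asset.name for asset in assets if role in asset.tags)


# Example asset library (made-up names, purely for illustration).
assets = [
    NodeGroupAsset("Edge Wear", {"mask"}),
    NodeGroupAsset("Blur", {"modifier"}),
    NodeGroupAsset("Levels", {"modifier", "mask"}),
    NodeGroupAsset("Hair Shader Utility"),  # untagged, so it never shows up
]

mask_menu = groups_for_role(assets, "mask")
modifier_menu = groups_for_role(assets, "modifier")
```

With tags like these, the "add mask" menu in the layer stack would only list `Edge Wear` and `Levels`, and untagged utility groups would stay out of the way.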

There’s no specific design for stacking masks or modifiers on masks, it’s something we thought about but it would be interesting to see ideas for what the corresponding texture nodes would look like.

There should be an easy way to bake all textures in a scene, for users as well as exporters and renderers that need them.

As an occasional Blender user who uses Blender to create assets to be used in other software (mostly “Enscape” assets for Revit), I think this is very important. Ideally the exporters (I mainly use a .gltf workflow) could be updated to include a “bake textures” checkbox, so that we can use procedural textures while setting up assets and still have a very simple workflow to get these exported together with the asset.

Not sure how feasible that would be? Could it be made foolproof enough that users could download procedural textures online and include them in their exports by just checking a box in the exporter, without ever having to actually understand the node setup of the texture?

I think this thread and the proposal would benefit from a slightly more elaborate mockup. Just integrating the current mockup into the native Blender UI would be much better.

Maybe even writing a simple manual for basic actions like: painting a PBR material, baking, adding a layer stack to the Shader Node, adding a layer stack to the Geometry Node, etc.

On another note, maybe some type of baking or caching can be included in the node that requires a baked texture. So internally the blur and the filter nodes would be composed of a Bake Node and a Blur Node/Filter Node.

When only an explanatory warning is visible to the user.

I think that we need to differentiate

and maybe

In my opinion textures and shaders need to be unified and me

I would replace the layer stack with the concept of modifiers. Let me explain: if every geometry nodes setup is a modifier, every modifier can be seen as a layer of the geometry. In the same way, every shader nodes setup is a material, and every material is a layer of the geometry. Only the concept of layers flows from bottom to top, the opposite of modifiers. Masks, blends, effects and bakes are modifiers applied to the underlying layer, as in the Video Sequence Editor.

Overall I’m very happy with this plan. Two questions though:

Do you see texture nodes and shader nodes existing in one editor? I’m asking primarily UI-wise.

Procedural textures need to be baked before using the Blur node. This could be indicated with a red dot or outline on the layer, stack, or blur node, for example. My argument for this unification is that users have less interface to manage with one Shader/Texture editor and its representation in Properties.

And if baking can be done in the form of nodes in the same editor, this design could also simplify the baking workflow and solve the dilemma of where the baking/caching UI should live.

My second question is about Attribute workflow. In the blogpost mockup there is an Attribute node present. If I understand this correctly it implies that attributes made with Geometry Nodes will be possible to use in Texture Editor too?

My question is how you see attribute flow direction in the future. Could it be possible to ‘send’ attribute from Texture/Shader Editor to Geometry Nodes too for example?

If you tab into the Texture Channels shader node, you would be able to edit the texture nodes right there without switching editors.

I think a single shader node graph with the BSDF nodes, layer nodes and bake nodes is going to be quite hard to learn and set up correctly. You end up with rules about what you can put in a shader node group for it to be reusable as a texture, when and where you add bake nodes, which channels each layer should consist of, etc. It’s difficult to build a user-friendly and high-level UI when there is little enforced structure to the graph.

With this design we are trying to make it so that just naturally by the way things are set up, you could one-click bake all the channels. Maybe that type of thing is possible with bake nodes too, but it’s not clear to me what the UI would be.

There could be a Texture Channels geometry node similar to the one in shader nodes. That would reference a texture datablock, and then you could use the channels as fields.

Sounds good. This wasn’t clear from reading the blog post.

I’d disagree about setting up a node graph. To me it’s way easier than navigating through dozens of sub-panels in texturing apps. But I get the point about setting rules. Tabbing into the Texture Channels node from the shader editor fills the need I had in mind, so no further questions.

The simplest way I can think of is putting baking nodes automatically after or before certain texture/layer/layer stack nodes if the user hits bake in stack properties. The content of the node could be identical to what is shown in properties.

This could allow for separate workflows if someone wants to use only nodes or only properties panel for work.

We don’t know what the baking UI will look like, so what I wrote is a shot in the dark.

Do you plan to put baking in separate Properties tab or keep it within Layer Stack one?

That’s great!

Thanks for clarifications.

Maybe RCS’s number one request will finally be fulfilled for this project: Right-Click Select — Blender Community

Baking panel could be moved from Rendering Properties to Output Properties tab.

And more Baking panels corresponding to user baking requests could be added to this tab or to the View Layers tab (which could be renamed Render Requests).

Will texture layers be able to promote things such as float curves and their UI? Currently RGB/float curves and colour ramps cannot be edited or promoted outside of the current shader node groups; you have to edit them inside. I have wanted the ability to promote curves/colour ramps as group inputs in regular shader nodes for a while… this may be off-topic.

I assume the texture layers panel UI will only have values that you can change and no curves promoted into the stack and you would have to jump into the nodegroup to edit them.

I really like the overall layered textures design ideas so far. It seems well thought out.

This is very exciting, I like the general idea!

Bake button on texture node

One thing that would seem interesting to me is that if you have the option to bake textures, it would be like a switch on the (master) procedural/ baked texture node. Visually, I think it should be like Apple’s on/ off button in the node’s header bar, perhaps it should also affect the node’s outline.

When toggled off, the procedural mode is used, when toggled on, you will be prompted to save the baked textures to disk. But, if you toggle the switch off afterwards, you would return to the procedural workflow state. This does mean that the (master) texture node should remember:

Additionally, a single click to bake would imply that all texture nodes are switched over to their baked state. The button for this should then be on the output node or somewhere in the UI, as opposed to on the nodes themselves. Although I can imagine you would be able to hook up a bunch of texture nodes to a bake node, which then bakes the result for the nodes that were connected to it and switches them to baked mode.

The idea behind this is that you can easily switch between the baked and procedural mode at all times. This could be useful for speeding up the workflow by having a shorter computation time when baked. It is also useful for showing Blur-like operations in the material preview. Furthermore, you can easily get back to the procedural mode to tweak the material later down the road and easily re-bake. Any operation that would destroy the possibility to switch between baked and procedural should then (adjustable in user preferences) display a prompt asking for confirmation before proceeding.
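To make the switching behaviour concrete, here is a small sketch in plain Python. `TextureNodeState` and its fields are hypothetical, and it assumes that remembering the bake path is enough to return to either mode; a real node would likely need to track more than this.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class TextureNodeState:
    """Hypothetical state a (master) texture node could keep for the bake switch."""
    baked: bool = False
    baked_path: Optional[str] = None

    def toggle_on(self, path: str) -> None:
        # Switching on records where the baked textures were saved and uses them.
        self.baked_path = path
        self.baked = True

    def toggle_off(self) -> None:
        # Switching off returns to procedural evaluation, but keeps the path
        # so that a later re-bake can write to the same location.
        self.baked = False

    def source(self) -> str:
        # What the node would evaluate in its current mode.
        if self.baked and self.baked_path is not None:
            return self.baked_path
        return "procedural"


node = TextureNodeState()
node.toggle_on("//bakes/wall")   # path is a made-up example
after_bake = node.source()
node.toggle_off()
after_revert = node.source()
```

The point of the sketch is only that toggling off does not discard anything, so going back and forth stays cheap.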

Also, by linking in the baked textures, it is possible to let a texture artist work on the texturing, bake out the result and have another member of the team load in just the textures by pointing to a file path that contains all of the textures, preferably with a “_Socketname” like indication.
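For the linking step, something along these lines could match files to sockets by suffix. This is only a sketch in plain Python; `textures_by_socket` is a hypothetical helper, and the file and socket names are made up.

```python
from pathlib import PurePosixPath


def textures_by_socket(filenames, sockets):
    """Map each socket name to the file whose stem ends in "_<socket>"."""
    found = {}
    for filename in filenames:
        stem = PurePosixPath(filename).stem  # file name without extension
        for socket in sockets:
            if stem.endswith("_" + socket):
                found[socket] = filename
    return found


files = ["wall_BaseColor.png", "wall_Roughness.png", "notes.txt"]
linked = textures_by_socket(files, ["BaseColor", "Roughness", "Normal"])
```

Sockets without a matching file (here `Normal`) are simply left out, so the loader could fall back to the default value or warn the user.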

Texture stack

Also +1 for editing the texture painting through a stack. Any Krita or PS user will appreciate that it’s there, and it should preferably work the same way (albeit with procedural layer options, aka ‘Smart Materials’).

Linked layers in the texture stack

An important aspect to consider is linked layers in the texture stack. In a complex case, you may want to create one mask and use it to drive multiple subsequent layers in the stack. For example, you may want to mask out the road from the pavement and later on use the inverse mask to create procedural manholes on the road. Being able to link these nodes on the fly means:

Conceptually, this is the equivalent of using Krita’s clone layer system, but then also for properties of a layer, rather than only cloning a layer.

Stack/ nodes

For showing the nodes as opposed to the stack in the panel, there could be a toggle to switch view modes, much like how you change from solid to wireframe in the 3D Viewport.

Also, I think it’s good to consolidate texture nodes and shader nodes. The way I imagine interoperability to work, if this makes sense, is to have a top layer header “Principled BSDF” that cannot be altered by the user. Much like plugging in the texture node directly into the shader node, but then visualised inside the layer stack. Any layer below the shader header would then naturally reside inside a top-level folder; user folders would then be subfolders of the shader folder.

If you want to create a more complex material, you would have several of these top level shader folders. The contents of the folder are then equivalent to input parameters, so the things you would want to affect as a user. The way they are wired up, would not be apparent, for that you would switch to the node view (e.g. create a mix shader node and hook up the two top-level ‘folder’ shaders to it and have the mix shader node connected to the output socket).

Actually, the idea of tabbing into the shader folder might be interesting. If you do so, you would only see the shader hierarchy and associated input/ output sockets.

Can’t tell you what a difference this will make for our studio

General thoughts

Is making a single texture datablock for many uses a good idea?

Yes, if conceptually that’s what it is, don’t try to paper over it.

Are there better ways to integrate textures into materials than through a node?

Nodes are slow and a bit clumsy; again it comes down to speed. Subtopic:

“Is it worth having a type of material that uses a texture datablock directly and has no nodes at all, and potentially even embeds the texture nodes so there is no separate datablock?”

Yes, in addition to a full node this would be helpful for when you just want a *&!$ texture

With this system, the users can now do the same thing both in texture and shader nodes.

Yes, that aligns with game engines, where you can alter baked textures in the shader system. No problem and again conceptual simplicity

What is a good baking workflow in a scene with potentially many objects with many texture layers? … how can we make a good workflow in Blender where users must know to bake textures before moving on to the next object?

Show the image in a thumbnail on the node. Important for usability and debugging. Since the bakes are just textures themselves, when not baked show them as a named but empty thumbnail.

Otherwise a single workspace with all of these elements is what is needed. Perhaps generalize the “Image Editor” to be “Texture Editor”. For the modes “View”, “Paint” and “Mask” add “Nodes” (or something). Add buttons in obvious places to perform the baking, exporting and other options.

Some textures can remain procedural while others must be baked to work at all.

Not generally a problem in other such applications. Regardless, if you have a thumbnail for the layer, you could simply omit that for pure procedural textures, or you can indicate it by how the thumbnail is shown (e.g. bold border for baked textures)

How do we define what a modifier is in the layer stack?

Not sure I follow, but if I understand it, the properties for the layer should make it clear

Can we improve on the name of the Texture datablock or the new planned nodes?

It isn’t clear to me yet what a “Texture datablock” is, but that may just be a matter of studying the notes and thread. Regardless, using the word “Texture” is probably best as that is fairly standard for this context.

“conflicts like blur node operating only on baked data”, is it even possible to add a “blur” gradient thing to procedurals at all ?

No. I was theorizing about combining texture and shader editor in one and how to solve potential conflicts.

The typical workflow is actually to bake once at twice the resolution of your output textures. In practice a good approach is to set your final bake density as you want it (4k for a typical 2k texture output), and it would be great to have a ‘quick bake’ button which does it at 1/2 or 1/4 of that, for folks with slower GPUs. We don’t bake in Blender due to the present limitations; I assume (hope!!!) it has GPU baking.
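The arithmetic is simple, but for clarity here is a sketch of what a ‘quick bake’ setting would compute. `bake_resolution` and its parameters are hypothetical names, and textures are assumed to be square.

```python
def bake_resolution(output_size, supersample=2, quick_divisor=1):
    """Return the bake size in pixels for a square texture.

    output_size   -- final exported texture size, e.g. 2048 for "2k"
    supersample   -- bake at this multiple of the output size (2 = twice)
    quick_divisor -- 1 for a full bake, 2 or 4 for a quick preview bake
    """
    if quick_divisor not in (1, 2, 4):
        raise ValueError("quick_divisor must be 1, 2 or 4")
    return (output_size * supersample) // quick_divisor


full = bake_resolution(2048)                      # 4k bake for a 2k output
quick_half = bake_resolution(2048, quick_divisor=2)
quick_quarter = bake_resolution(2048, quick_divisor=4)
```

So for a 2k output the full bake is 4096 pixels, and the quick bakes are 2048 and 1024 pixels.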

What is a good baking workflow in a scene with potentially many objects with many texture layers?

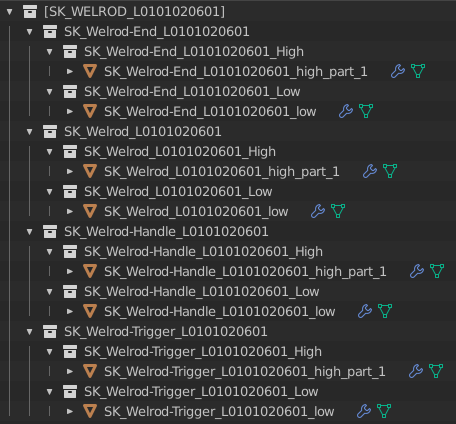

For AAA or portfolio work it needs to support separated parts of one object to avoid intersection errors. This is also important for final asset export (e.g. a weapon that needs a separate trigger for in-game motion). Other tools use a scene hierarchy for this; Blender can support this via the present collection system. For example, this uses our in-house naming scheme, but the tags (high/low) allow for automatic pickup for high-to-low baking. This is important to make it easy and fast to work with.

Caging is important - I don’t think Blender has an automatic caging system? Or does it … anyhow, if you support tags of some form (high, low) also support ‘cage’ for automatic pickup.

On the high/low/cage tags, you might be tempted to put these in a prop, but the problem is that, as you can see above, you will often have many parts to a bake. Multiply that by 2 for the high, and maybe more than that for the cage. Add all the bakes and textures, and the assets explode. Naming conventions are important, so supporting the _high/_low/_cage nomenclature and collection hierarchy might seem clumsy or restrictive, but you can see how this becomes important.
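As an illustration of automatic pickup, a name-based grouping could look like the sketch below. It is plain Python operating on object names only; `group_bake_parts` is a hypothetical helper, and the example names are made up rather than taken from our scheme.

```python
from collections import defaultdict

# Suffixes from the _high/_low/_cage convention described above.
SUFFIXES = ("_high", "_low", "_cage")


def group_bake_parts(object_names):
    """Group object names into bake sets keyed by their shared base name."""
    groups = defaultdict(dict)
    for name in object_names:
        for suffix in SUFFIXES:
            if name.lower().endswith(suffix):
                base = name[: -len(suffix)]
                groups[base][suffix[1:]] = name  # key is "high", "low" or "cage"
                break
    return dict(groups)


names = ["trigger_low", "trigger_high", "trigger_cage",
         "body_low", "body_high", "lamp"]
parts = group_bake_parts(names)
```

Each part then bakes only against its own high and cage meshes, which is what avoids the intersection errors, and objects without a suffix (like `lamp`) are ignored.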

Also, on naming conventions for the output textures, you’d want a _N for a normal texture, for example. And I’m not sure it’s mentioned, but channel packing must be supported (e.g. _ARM is a single RGB output texture consisting of AO in red, Roughness in green and Metalness in blue).
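Channel packing itself is straightforward. Here is a minimal sketch in plain Python, assuming the common layout where the letters of ARM map to the R, G and B channels in order (AO, Roughness, Metalness). `pack_arm` is a hypothetical helper working on nested lists of 0-255 values rather than real image buffers.

```python
def pack_arm(ao, roughness, metalness):
    """Pack three equally sized grayscale images into one image of RGB tuples.

    Each input is a list of rows, each row a list of 0-255 integers.
    """
    if not (len(ao) == len(roughness) == len(metalness)):
        raise ValueError("all channels must have the same height")
    packed = []
    for ao_row, rough_row, metal_row in zip(ao, roughness, metalness):
        if not (len(ao_row) == len(rough_row) == len(metal_row)):
            raise ValueError("all channels must have the same width")
        # One (R, G, B) tuple per pixel: AO, Roughness, Metalness.
        packed.append(list(zip(ao_row, rough_row, metal_row)))
    return packed


# A 1x2 pixel example with made-up values.
arm = pack_arm([[255, 0]], [[128, 64]], [[0, 255]])
```

An exporter would then write the result out with the `_ARM` suffix instead of three separate grayscale files.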

Otherwise, as any house painter will tell you the biggest work and most important part of any job is the prep work. The final painting (= texturing) is the easy part, and the part that gets the wives to love you

In this case that means the modeling but, more importantly, the UV work. Blender’s UV system needs work; the only way to do real work is by purchasing 3rd party addons (Zen UV and UVPackmaster being standouts). This is where most of the time is spent: texturing typically takes less than a quarter of the time spent on modeling and UV prep. So improvements to UV, and tight integration with that system, are important.

But having a real texturing system built in will make a world of difference; exporting back and forth to third party tools eats a lot of time. Being able to easily model, UV, bake and do procedural PBR texturing in Blender will be an amazing improvement to our workflow.

Is the Cycles team concurrently looking at improving the baking back end? Can we have simultaneous EEVEE and Cycles usage? That would be the dream, maybe later.

The design has baking in the Texture Channels node, which in principle should be able to bake anything except for light. It’s not in the texture nodes themselves, but in the shader nodes, because we want to keep texture reusable in different meshes.

So I have concerns with how the current design mixes procedural textures evaluated on the fly with ones that get rasterized down to pixels, as it seems to underestimate the differences between workflows and the requirements of rasterized textures (e.g. tileability by default, resampling resolutions throughout the graph, etc.).

In the case of textures being reusable in different meshes, I think that comes down to how it’s authored. Similarly, Geometry Nodes can reference absolute scene objects. If the geometry sockets for the bakers are exposed as inputs of the graph, then different objects can be baked, atlases reused, etc.

In the texturing process baking is something that needs to be artist directed and exposed at any level and for any purpose it can be.

Texture artists would want to bake trim sheets, foliage atlases, or tileable geometry down to planes and use those pixels on objects throughout the scene. They would also likely want or need to tweak the bake for perfect results: editing “cages” (per-vertex ray distances) and skews, and employing a variety of baking strategies to get the perfect texture bake for a specific asset.

Baking textures is a lot more than just caching the surface properties at a given texel.

Reusability by texture artists for raster textures is expected to come in the form of tileables/trims/atlases or “smart materials” (reusable node groups that accept and are hooked up to baked inputs).

Reusing a texture in its entirety isn’t always feasible, especially when it has had details hand painted/stenciled onto it.

And as mentioned in discussion above, baking results and some settings should probably be per mesh.

Oftentimes textures encompass entire scenes or require special setups for each mesh for each texture set applied. Per-vertex/texel settings such as skew and max ray distance would also be very useful for tricky bakes.

So assuming that, what is the argument for putting it inside the texture nodes, what would be the advantages?

Being able to embed and use procedural geometry directly in the texture graph. Say you want to make a tileable texture with a bunch of 3D scatters (bolts, coins, pebbles, tangled wires, ivy, etc.), or create ornamental details, panels, trims, and so on. All of these could then be baked down or rendered to pixels and used in the texture. Current industry workflows buckle when incorporating 3D models into procedural texturing, requiring either a lot of in-between steps or settling for cumbersome 2.5D heightfield workarounds. Being able, at any given point in a texture, to let the artist jump in and work with geometry nodes and bake or render the result down to pixels, all inside a texture graph that can be shared, parametric, and reused without polluting the scene space with messy baking setups, would be a massive card up Blender’s sleeve.