I re-read it and took some time to process it. It looks really solid, and I think it would work. Here are some thoughts on the closing questions:

Is making a single texture datablock for many uses a good idea? Or does it make the workflow too fuzzy? Is there a better alternative? If not, how would filtering relevant texture assets work exactly?

Yes, I’d leave it up to users. Even without modern tools like the Asset Browser, naming conventions could be used. Now that we have sophisticated tools like the Asset Browser, users have a choice between naming conventions, tagging and categorizing. That seems sufficient.

It’s actually even better, since, for example, you can use the same texture datablock to displace a mesh in GN and to texture it in the Material Editor, with the same texture and the same mapping coordinates. Right now, in order to do that, you need to keep two separate systems manually in sync.

Are there better ways to integrate textures into materials than through a node? Is it worth having a type of material that uses a texture datablock directly and has no nodes at all, and potentially even embeds the texture nodes so there is no separate datablock?

While simplicity is almost always desirable, I can think of a rare scenario where someone has a Texture Nodes (TN) datablock and wants to ray switch (a light-path-based mix), for example, the diffuse channel before it goes into the material. Since the Light Path node won’t be available inside TN, materials that use datablocks directly would make TN essentially incompatible with nodes like Light Path.

With this system, the users can now do the same thing both in texture and shader nodes. How does a user decide to pick one or the other, is there anything that can be done to guide this?

I suspect that right now, most users actually want to do what Texture Nodes are supposed to do. While they are mixing shaders, they more often than not intend to mix only the channels of the PBR shader, since that’s the workflow people expect from other apps like SP too. The reason they use shader mixing and not channel mixing is that the current system doesn’t allow the latter natively, so it requires some ugly workarounds resulting in very messy node networks.

There should be a general rule of thumb: Are you 100% sure you need to mix actual materials, and do you understand the difference between material mixing and material channel/attribute mixing?

- Yes: Use Shader Editor

- No: Use Texture Nodes Editor

- Unsure: Use Texture Nodes Editor

I was thinking about the fact that it will be a rather awkward situation that both TN and the Shader Editor will have large sets of nodes which overlap. Initially, my idea was to actually reduce the number of nodes available in the Shader Editor, so people do those things more properly in TN, but then I realized that doing that would once again remove the workflows that rely on nodes which won’t be supported inside TN, such as Light Path.

So the best course of action here seems to be to have both the Shader Editor and TN be able to do almost the same things, and to re-evaluate the situation after a few versions to see if people still need all of the nodes in the Shader Editor, or if there are some workflows that practically no one uses.

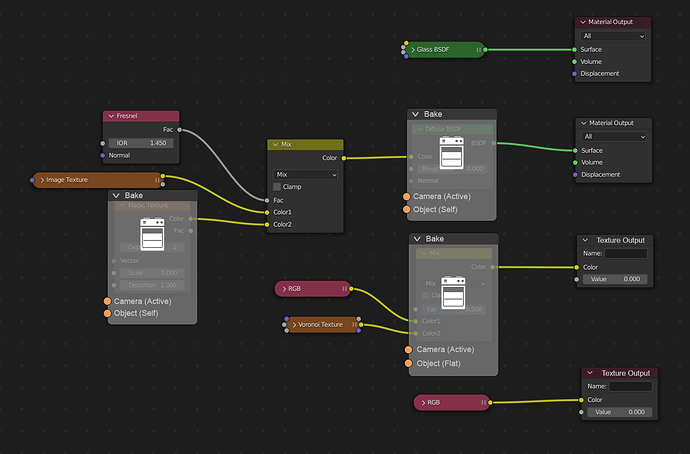

What is a good baking workflow in a scene with potentially many objects with many texture layers? In a dedicated texturing application there is a clear export step, but how can we make a good workflow in Blender where users must know to bake textures before moving on to the next object?

This is relatively clear. The ON/OFF state of the texture baking process should be stored on the object. The filename and output path of the baked textures should be stored in the material or TN datablock.

Then, the global render settings button to bake the textures, when clicked, should iterate over all scene objects, check whether each has a material with a bake output and is enabled (set to perform the bake), and bake it. Baking all of the scene objects should be a matter of a single click, and the user decides what should be baked and when by turning the bake state on and off on a per-object basis.

The texture baking file output system should support expressions, for example: "Assets/Textures/[objname]_[channel]_[resolution].png" so that the very same TN can be used on multiple objects and output unique textures for each:

Chassis_BaseColor_4096.png

Wheels_Normal_1024.png

(This is just an example, obviously it will have to be thought through more).
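To make the expression idea a bit more concrete, here is a minimal Python sketch of how such a path could be expanded. The function name `expand_bake_path` and the decision to raise an error on unknown placeholders are my own assumptions for illustration; only the `[name]` placeholder syntax comes from the example above.

```python
import re

# Placeholder syntax taken from the example above: [name].
_PLACEHOLDER = re.compile(r"\[(\w+)\]")


def expand_bake_path(template: str, values: dict) -> str:
    """Replace each [key] in the template with str(values[key]).

    Hypothetical helper: unknown placeholders raise KeyError so that a
    typo in the template does not silently produce a wrong file name.
    """
    def substitute(match):
        key = match.group(1)
        if key not in values:
            raise KeyError(f"unknown placeholder [{key}]")
        return str(values[key])

    return _PLACEHOLDER.sub(substitute, template)


template = "Assets/Textures/[objname]_[channel]_[resolution].png"
path = expand_bake_path(
    template,
    {"objname": "Wheels", "channel": "Normal", "resolution": 1024},
)
print(path)  # Assets/Textures/Wheels_Normal_1024.png
```

Failing loudly on an unknown placeholder is just one option; falling back to leaving the text untouched would also be defensible.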

Here’s a practical example. The scene has Objects 1, 2, 3, 4, 5 and 6, and three materials with TN: A, B and C.

Object 1 has Material A, whose TN is set up to bake a “Color.png” texture. No expressions are used in the filename. Object 1 occupies the left half of the 0-1 UV square.

Object 2 also has Material A with the same TN datablock. Object 2 occupies the right half of the 0-1 UV square.

Object 3 has Material B, whose TN is set up to bake [objname]_Color.png. Object 3’s UVs occupy the entire 0-1 UV square.

Object 4 also has Material B with the same TN datablock. Object 4’s UVs occupy the entire 0-1 UV square.

Object 5 has Material C, whose TN is set up to bake a “Displacement.png” texture. Object 5’s UVs occupy the entire 0-1 UV square.

Object 6 also has Material C. Object 6’s UVs occupy the entire 0-1 UV square. Object 6 has the baking node fully set up, but its baking state is disabled.

Clicking the Bake button would produce the following:

- Color.png texture where Object 1’s baked color map occupies the left half of the texture and Object 2’s baked color map occupies the right half.

- Object_3_Color.png file with the baked color map of Object 3.

- Object_4_Color.png file with the baked color map of Object 4.

- Displacement.png file with the baked map of Object 5.

- Object 6 was not baked and did not overwrite the bake of Object 5, because the bake toggle was disabled on it.
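The example above can be sketched in plain Python to check that the rules give the expected files. Everything here is hypothetical (the `Material` and `SceneObject` classes, the `plan_bakes` function, and replacing spaces with underscores in object names); it only models which objects end up in which file, not the baking itself.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional


@dataclass
class Material:
    name: str
    bake_filename: Optional[str]  # None means no bake output is configured


@dataclass
class SceneObject:
    name: str
    material: Material
    bake_enabled: bool = True  # the per-object ON/OFF bake state


def plan_bakes(objects):
    """Map each output file name to the names of the objects baked into it."""
    plan = defaultdict(list)
    for obj in objects:
        filename = obj.material.bake_filename
        if not obj.bake_enabled or filename is None:
            continue  # skipped: bake disabled or no bake output
        # Only [objname] is expanded in this sketch; replacing spaces with
        # underscores is an assumption made to match the file names above.
        resolved = filename.replace("[objname]", obj.name.replace(" ", "_"))
        plan[resolved].append(obj.name)
    return dict(plan)


mat_a = Material("A", "Color.png")
mat_b = Material("B", "[objname]_Color.png")
mat_c = Material("C", "Displacement.png")

scene = [
    SceneObject("Object 1", mat_a),
    SceneObject("Object 2", mat_a),
    SceneObject("Object 3", mat_b),
    SceneObject("Object 4", mat_b),
    SceneObject("Object 5", mat_c),
    SceneObject("Object 6", mat_c, bake_enabled=False),
]

for filename, names in plan_bakes(scene).items():
    print(filename, "<-", ", ".join(names))
# Color.png <- Object 1, Object 2
# Object_3_Color.png <- Object 3
# Object_4_Color.png <- Object 4
# Displacement.png <- Object 5
```

Grouping by resolved file name first also makes it easy to see when several objects share one texture, as Objects 1 and 2 do here.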

Some textures can remain procedural while others must be baked to work at all. How can we communicate this well to users? Is it a matter of having a Procedural / Baked switch on textures that can be manually controlled, or is there more to it?

I do not have a clear answer to this yet, but what I’d say is crucial is to distinguish two different classes of baking: Input and Output.

Input baking is generating texture maps, such as convex and concave area masks (curvature and occlusion), to be used to drive procedural effects in materials.

Output baking is baking and collapsing the final visual result of the materials into a texture usable both outside and inside of Blender.

While both of these use cases could share features, it’s important to recognize that they are distinct processes with distinct purposes and therefore require different workflows.

I am thinking about the possibility of having a “Cache” node usable only inside TN. The cache node would have the following parameters:

- RGB, Vector or Float input socket (selectable)

- Resolution

- UV Channel

- Output path

Resolution would define the resolution of the cached texture.

UV channel would define the UV channel to bake the input into.

Output path would define optional storage of the texture outside of the .blend file. If left unspecified, the texture would be stored within the file.

The cache node would work such that anything plugged into it would be cached and baked into the texture.

The drawback of this workflow would be that some nodes that absolutely require caching in some contexts, such as Ambient Occlusion or Blur, would simply not work out of the box, and would show a warning message that they require a Cache node.

The benefit would be that the caching points are more explicit, so that the user does not unknowingly cache a lot more texture states than they intended, running out of GPU memory fast.
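To give a rough sense of how quickly cached textures add up, here is a small Python sketch of the Cache node parameters with a memory estimate. The `CacheNode` class, the channel counts per socket type, the 32-bit float storage, and the example output path are all assumptions on my side, not part of the proposal.

```python
from dataclasses import dataclass
from typing import Optional

# Assumed channel counts per socket type; the proposal does not specify them.
CHANNELS = {"RGB": 3, "VECTOR": 3, "FLOAT": 1}


@dataclass
class CacheNode:
    socket_type: str                   # "RGB", "VECTOR" or "FLOAT"
    resolution: int                    # square texture: resolution x resolution
    uv_channel: str                    # name of the UV channel to bake into
    output_path: Optional[str] = None  # None: store inside the .blend file

    def memory_bytes(self, bytes_per_channel: int = 4) -> int:
        """Uncompressed size, assuming 32-bit float storage by default."""
        pixels = self.resolution ** 2
        return pixels * CHANNELS[self.socket_type] * bytes_per_channel


nodes = [
    CacheNode("RGB", 4096, "UVMap"),
    CacheNode("FLOAT", 4096, "UVMap"),
    # Hypothetical external path, for illustration only.
    CacheNode("VECTOR", 2048, "UVMap", "Assets/Textures/cache_vector.exr"),
]

total = sum(node.memory_bytes() for node in nodes)
print(total / 2**20, "MiB")  # 304.0 MiB
```

Under these assumptions, just three explicit caches already take about 304 MiB uncompressed, which is why I think keeping the caching points visible to the user matters.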

Can we improve on the name of the Texture datablock or the new planned nodes?

Since we have Geometry Nodes, and we are planning Particle Nodes, “Texture Nodes” is the obvious choice.