That makes more sense now, thank you for the explanation.

We could imagine not using the term “layer”, and call it “texture” instead. So you might have “Compose Texture”, “Decompose Texture” and “Texture Stack” nodes.

I’m not sure if that’s overloading the term too much or if it’s helpful to try to emphasize that a texture can feed into another texture like that.

Yeah, I think ‘texture’ is already way overloaded. I just don’t like Layer because it conjures up old 2D software when that’s not really what they are at all. I think Graph or Filter would work better, but I don’t want to argue over this at all, I’m just trying to get a clear picture of the design right now. I’ll get used to Layer soon enough if it ends up called that.

But, for example, is the layer stack only for the “shader” type, or can we plug in RGB sockets to combine several textures into one with a single alpha?

There’s this in the post:

“Blending, masks and modifiers simultaneously affect all channels in a layer.”

I expect this is a typo? Blending modes would be per-layer-channel, not per-layer, yes?

Because let’s say you’re doing a flaking paint layer. You’d want to Add the Height channel, Mix the color channel, and maybe Multiply or Subtract the Roughness channel in such a case.
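To make the flaking paint case concrete, here is a minimal sketch in plain Python of per-channel blending for a single texel. The channel names, the `BLEND_OPS` table and `blend_layer` are hypothetical and only illustrate the idea; they are not part of the proposed design or of any Blender API.

```python
# Hypothetical sketch: each channel of a layer is blended with its own mode.
# Values are single floats per channel for brevity (one texel, one component).

BLEND_OPS = {
    "MIX": lambda base, top, fac: base * (1.0 - fac) + top * fac,
    "ADD": lambda base, top, fac: base + top * fac,
    "MULTIPLY": lambda base, top, fac: base * (1.0 - fac + top * fac),
    "SUBTRACT": lambda base, top, fac: base - top * fac,
}


def blend_layer(base, top, modes, fac=1.0):
    """Blend `top` over `base`, channel by channel, using `modes[channel]`."""
    result = dict(base)
    for channel, value in top.items():
        # Channels without an explicit mode fall back to a plain mix.
        op = BLEND_OPS[modes.get(channel, "MIX")]
        result[channel] = op(base.get(channel, 0.0), value, fac)
    return result


base = {"color": 0.5, "height": 0.25, "roughness": 0.5}
paint = {"color": 1.0, "height": 0.5, "roughness": 0.5}
flaking_paint_modes = {"color": "MIX", "height": "ADD", "roughness": "MULTIPLY"}

blended = blend_layer(base, paint, flaking_paint_modes)
```

With these inputs the color is replaced, the height is raised by the paint thickness, and the roughness is scaled down, which a single blend mode shared by all channels could not express.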

In fact, the blending modes should be user-editable Layers themselves, for example if you wanted a special mode for blending Height, or if you decided Reoriented Normal Mapping is how you want to blend normal maps.
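As an illustration of why a normal channel may want its own blend mode, here is a small sketch of Reoriented Normal Mapping, following the formulation published by Barré-Brisebois and Hill as I understand it. It assumes both inputs are unit tangent-space normals already unpacked to the [-1, 1] range; the function name is made up for this example.

```python
import math


def reoriented_normal_blend(base, detail):
    """Blend two unit tangent-space normals (components in [-1, 1]).

    The detail normal is reoriented so that it follows the base normal
    instead of being averaged with it.
    """
    t = (base[0], base[1], base[2] + 1.0)
    u = (-detail[0], -detail[1], detail[2])
    d = t[0] * u[0] + t[1] * u[1] + t[2] * u[2]
    r = tuple(t[i] * d - u[i] * t[2] for i in range(3))
    length = math.sqrt(sum(c * c for c in r))
    return tuple(c / length for c in r)


flat = (0.0, 0.0, 1.0)
tilted = (0.6, 0.0, 0.8)

# A flat base leaves the detail normal unchanged, and a flat detail
# leaves the base normal unchanged.
detail_over_flat = reoriented_normal_blend(flat, tilted)
flat_over_tilted = reoriented_normal_blend(tilted, flat)
```

Neither a Mix nor an Add on the raw color values gives this behavior, which is the argument for letting a blend mode be a small graph of its own.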

Edit: Wait, now I’m confused again, I was thinking of the layers in… a certain popular texture painting app, where all you deal with is plain images instead of Layers as graphs. Haha. Now that I think about it, in the Blender version, the blending mode would be defined by the nodes in the Layer, right?

Certainly not all channels should be affected exactly the same way. What I was imagining was meta blend modes, that affect each channel in the appropriate way for some purpose. Manually setting up blend modes for each channel would be tedious and error prone, though probably should be possible.
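One way to picture such meta blend modes is as presets that expand into one blend mode per channel, with optional manual overrides. The sketch below is purely illustrative: the preset names, the channel names and `resolve_blend_modes` are assumptions made up for this example, not anything from the design.

```python
# Hypothetical presets: one meta blend mode expands to a mode per channel.
META_BLEND_MODES = {
    "PAINT_OVER": {"color": "MIX", "height": "ADD", "roughness": "MIX"},
    "DIRT": {"color": "MULTIPLY", "height": "ADD", "roughness": "ADD"},
}


def resolve_blend_modes(meta_mode, channels, overrides=None):
    """Return the blend mode to use for each channel of a layer.

    Manual overrides win over the preset, and channels the preset does
    not mention fall back to a plain mix.
    """
    preset = META_BLEND_MODES[meta_mode]
    overrides = overrides or {}
    return {ch: overrides.get(ch, preset.get(ch, "MIX")) for ch in channels}


modes = resolve_blend_modes(
    "DIRT",
    ["color", "height", "metallic"],
    overrides={"height": "MIX"},
)
```

This keeps the common case to a single choice per layer while still allowing per-channel control where it is needed.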

The blend mode would be defined in the Layer Stack node.

Oh I see. That makes sense. And these meta-blend-modes would be user-definable as another node graph, right?

I’m absolutely loving the concept of having a single node to use in the shader editor that contains all channels, all layers, everything, making it very easy to create a one-click “bake all” button to bake all textures everywhere. This is a fantastic idea and would be a major user experience and workflow improvement for Blender. Having simple drag and drop layers, layer modifiers, and mixing painted with procedural is also very exciting. This, combined with a brush system that allows painting to multiple texture channels at one time with different effects, would allow for very quickly and easily painting complex details into the surface of materials and baking the results into the textures to use in real-time applications like game engines.

I don’t think I’m overstating it when I say this one change would potentially catapult Blender’s popularity forward into the world of game asset creation by effectively integrating ZBrush/Substance Painter/Maya workflows together seamlessly.

I think there may be some details to adjust along the way, but the thrust of this concept is fantastic in my opinion, can’t wait to see it become a reality.

I particularly like the option to use a layer stack, but to mix it with a node graph for some layers. That’s mixing power with usability.

In a sense it’s similar to the modifier system. Sometimes you just want a bevel, so being able to just click ‘add modifier’, ‘bevel’ is handy. Sometimes you want to create a complex node-based system, in which case geometry nodes is there. It’s nice to have that balance between both.

Overall: Brilliant concept Brecht, I can’t wait to see it happen, very very excited for this.

Maybe? I’m not sure where the line is where we make the layer stack more advanced vs. requiring nodes to be used instead.

Speaking of terminology, what are you thinking of calling this new system?

" * What is a good baking workflow in a scene with potentially many objects with many texture layers? In a dedicated texturing application there is a clear export step, but how can we make a good workflow in Blender where users must know to bake textures before moving on to the next object?"

Ideally, Blender should have input/picker fields so that you can designate your baking objects to make it absolutely clear and simple to see what information is being baked. None of this bake to active or from selection to selection… Perhaps it might even be worth creating a dedicated baking viewport that lists all the data for baking or even a new properties tab dedicated just for baking (instead of burying it in render properties).

Great work on the initial design Brecht and the team!

I have been using Blender for 17 years and this is really a fantastic step forward. For me this was the last thing missing.

I noticed one thing in the node graph’s Layer Stack output node. I expect the many mask inputs to the node would result in clutter.

I would recommend combining the mask with the layer so the mask and the custom node setup could be grouped and stored in the asset browser. Think of how a Photoshop layer has its mask attached.

I hope my comment is clear; it is difficult to describe. If it is unclear I can provide a quick mockup.

The visual layer stack that can be reordered in the texture window would be better kept as slim as possible, to encourage users to build large layer stacks.

This would improve user success and save VFX departments time scrolling. There as well, group the mask with the actual layer.

Thank you for taking the time to go through user suggestions.

Instead of using tags or catalogs, we could have a node that saves any color input as a texture. We can then reference that node in GN, Shaders, Particles, etc.

We can also do all this in the compositor, where we can output our images to an “Image_out” node that we can bring into the shader, GN, etc.

Okay, now one more question for further clarification:

Will the new Texture Nodes be limited to just custom texture creation, or could it be used to shade materials as well (up to some extent), just like Shader Nodes?

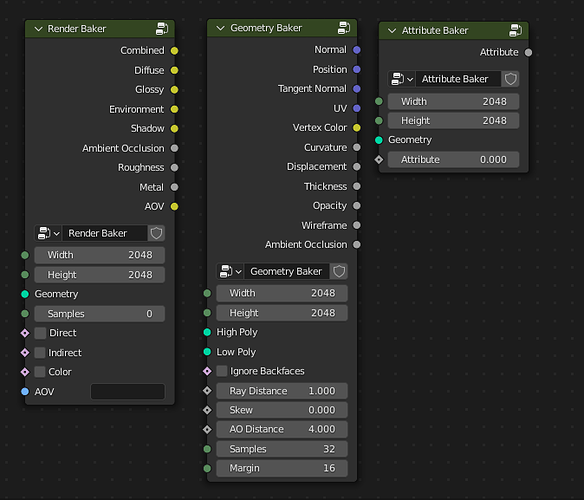

Thinking of it how would we feel about making baking a part of the texture nodes?

(Just a mockup; it wouldn’t have to be separated into three different nodes like this. I separated it by use case to avoid too much clogging and to split it into concepts/use cases. Plenty of room for improvement.)

I feel this would blend in more into the concept of “everything nodes” rather than just “texture nodes=procedural or image textures” as it could invite baking/rendering procedural 3D models directly into the texturing process rather than relying on scene setups. If that is too far outside the scope that is understandable.

The fact that rendering/baking is a process that takes a while would likely need to be solved UX wise anyways in geometry nodes when features such as simulations, quadriflow, etc make their way in.

Basic texture nodes that would be generally useful in Blender:

- Wood types (including pine, oak, and radial).

- Concrete, stone, stone wall patterns (baked natural stone patterns), floor tile patterns.

- Weathering patterns, moss, rust.

- Veins, skin, and tree-like patterns.

- Scratches, radial normal.

- Spider webs and hexagon structures, i.e. basic pattern shapes.

- Dust and dirt → maybe aware of orientation and location, since dirt gets into tiny places, holes, etc.

- Repeating wallpaper patterns (aware of how wallpaper really repeats).

- Rasters; think of all the rasters used in printed comic books.

- Hatches; think of how a person drawing hatches levels of darkness with a single pen color (random lines, ordered lines, dots, small patterns, crosses, etc.).

- Cell-like patterns resembling cells in nature (Voronoi).

- Ink scratches (i.e. like marking something almost black with a pen, for PBR)

- Is making a single texture datablock for many uses a good idea?

Yes, Please

- Are there better ways to integrate textures into materials than through a node? Is it worth having a type of material that uses a texture datablock directly and has no nodes at all, and potentially even embeds the texture nodes so there is no separate datablock?

I don’t see a better way

- With this system, the users can now do the same thing both in texture and shader nodes. How does a user decide to pick one or the other, is there anything that can be done to guide this?

Users will naturally use texture nodes instead of shader nodes for major things and only use shader nodes for high-level development.

- What is a good baking workflow in a scene with potentially many objects with many texture layers? In a dedicated texturing application there is a clear export step, but how can we make a good workflow in Blender where users must know to bake textures before moving on to the next object?

Nodes are the best way to bake, and since baking the basic maps is necessary, this will be necessary to have.

I think it is important to keep the “bake” concept (when you bake maps to use in the texture nodes or to export) separate from the “bake” that is used inside Blender in the node tree. Maybe with a concept where you can “freeze” or “consolidate” the nodes.

It’s just Blender’s texturing system, doesn’t really have a name.

The current design only covers the case where you bake textures on the same object. What would the shader and texture setup look like on both objects? Maybe some system where you edit textures on the high poly object, and then on the low poly object you have a node that transfers the same texture channels from the high poly object? It would be interesting to see a proposal for how that works exactly.

I think layers can have an Alpha channel, which would act like a mask by itself already. The idea for the Mask inputs was for masks that are not part of the asset, which I think is common? Say for example you want to paint a bit of rust into some specific part of the model.

Of course all this is possible with node setups also, but the expectation was that masks specific to a material are common enough that it’s worth having in the layer stack this way.
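A rough sketch of how a layer's own alpha and a separate stack-level mask could combine, for a single channel and a single texel. The tuple layout and `composite_stack` are invented for illustration and say nothing about how the actual Layer Stack node would be implemented.

```python
def composite_stack(layers):
    """Composite layers bottom to top for one channel at one texel.

    Each layer is (value, alpha, mask): `alpha` belongs to the layer
    asset itself, `mask` is painted per material in the stack.
    """
    result = 0.0
    for value, alpha, mask in layers:
        # The layer only shows where both its alpha and the mask allow it.
        coverage = alpha * mask
        result = result * (1.0 - coverage) + value * coverage
    return result


metal = (0.25, 1.0, 1.0)         # fully opaque base layer, no masking
rust_painted = (1.0, 0.5, 0.5)   # rust asset with alpha, half masked in
rust_masked_out = (1.0, 0.5, 0.0)

with_rust = composite_stack([metal, rust_painted])
without_rust = composite_stack([metal, rust_masked_out])
```

The rust asset stays reusable because its alpha travels with it, while the painted mask stays with the material that uses it.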

The current design is not for texture (or compositor) nodes to output an image, because we want to be able to use the same texture on different meshes. That means we can’t actually produce an image from just the texture nodes, we also need it to adapt to the mesh each time.
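A toy sketch of that distinction: the texture is treated as a function of surface position, and an image only exists once it is evaluated for a particular mesh. Everything here (`stripes`, `bake`, the per-texel position grids) is a hypothetical stand-in, not the design's data model.

```python
def stripes(position):
    """A procedural 'texture': a function of surface position, not an image."""
    x, _, _ = position
    return 1.0 if int(x * 4) % 2 == 0 else 0.0


def bake(texture, texel_positions):
    """Evaluate the texture at the surface position each texel maps to.

    `texel_positions` stands in for what a mesh and its UV map provide:
    one surface position per texel of the output image.
    """
    return [[texture(p) for p in row] for row in texel_positions]


# Two meshes with the same image layout but different geometry.
mesh_a = [[(0.0, 0.0, 0.0), (0.25, 0.0, 0.0)],
          [(0.5, 0.0, 0.0), (0.75, 0.0, 0.0)]]
mesh_b = [[(0.0, 0.0, 0.0), (0.5, 0.0, 0.0)],
          [(1.0, 0.0, 0.0), (1.5, 0.0, 0.0)]]

image_a = bake(stripes, mesh_a)
image_b = bake(stripes, mesh_b)
```

The same texture yields two different images, so an image output belongs to the texture plus mesh pair rather than to the texture nodes alone.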

“Shading” is a broad term. I think what’s possible should be clear based on the list of overlapping shader nodes that I gave?

The design has baking in the Texture Channels node, which in principle should be able to bake anything except for light. It’s not in the texture nodes themselves, but in the shader nodes, because we want to keep textures reusable across different meshes. And as mentioned in the discussion above, baking results and some settings should probably be per mesh.

So assuming that, what is the argument for putting it inside the texture nodes, what would be the advantages?

Talking about the stack: from what I have understood, the concept of the texture stack is still very fuzzy in the proposed design, and I see many flaws in it. Not only do we need procedural textures, we also need

- procedural modifiers

- procedural masks

And both need to be usable in the stack. Otherwise the stack will be practically useless, because in the end you would have to go to the nodes to configure anything. Any user of procedural textures knows that 90% of the procedural work is in the masks, not so much in the texture.

And there is another thing that I think I understood and that seems to me to be a problem, namely that

“Blending, masks and modifiers simultaneously affect all channels in a layer.”

Does this mean that if we have a multiply for diffuse, it is a multiply for all channels? Because that would be a very big problem.