full EXR file format support.

- read/write support for UINT32 textures.

- read/write support for metadata (full text files).

- all compression options.

https://openexr.readthedocs.io/en/latest/TechnicalIntroduction.html

If you check the example node graph in the blog post, there is no single node that both generates the scratches and mixes them with the previous layer. These are separate nodes.

Hand painted images and attributes are part of this, it’s certainly not fully procedural.

@brecht what are you thinking about the granularity of the nodes? For example, there are several ways you can create an edge finder in the shader editor, but there’s no explicit ‘Find Edge’ node. For texturing, do you expect there will be a collection of primitives, or will there be higher level nodes (edge, curvature etc.), or a combination of both?

I guess it also depends on what you consider primitive or advanced, an edge finder might be a fundamental node in texturing.

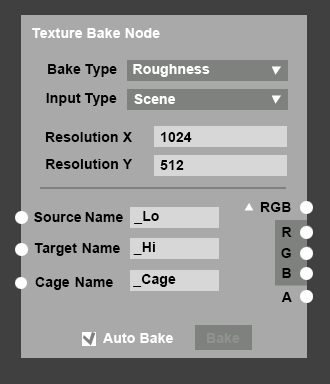

Hi there, I’ve been skimming through this topic and made a quick mockup of how I would like to bake with Blender using nodes:

There would be a bake node which lets you choose the type of bake you want to do and what type of input you want to use. The result would be saved in a cache folder so the artist can use it as a direct input for the next node. If the user wants to use it somewhere else or even combine it into an ARM map, they would use a File Path node.

The first example bakes an ambient occlusion map and uses collections as inputs.

There could be an additional dropdown for choosing “Match by: object name, material, etc.” to prevent ray intersections without the need for exploded meshes. This makes it flexible enough to use one setup to bake normal maps as well as ambient occlusion.

The input type could also be the whole scene; the user then just has to define what to look for, like _Hi, _Lo, _Cage or something. The user would still have to match the names of the different meshes.

Lastly, of course, there could be just a single mesh for each input.
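To make the suffix matching idea concrete, here is a minimal sketch in plain Python (no Blender API involved). The `_Hi`, `_Lo` and `_Cage` suffixes come from the post above; the function names and the rule that a set needs at least a high and a low poly mesh are assumptions made for illustration.

```python
from collections import defaultdict

# Suffix convention from the post above; the role names are hypothetical.
SUFFIXES = {"_Hi": "high", "_Lo": "low", "_Cage": "cage"}


def group_by_suffix(names, suffixes=SUFFIXES):
    """Group mesh names into bake sets keyed by their shared base name."""
    groups = defaultdict(dict)
    for name in names:
        for suffix, role in suffixes.items():
            if name.endswith(suffix):
                base = name[: -len(suffix)]
                groups[base].setdefault(role, []).append(name)
                break  # a name matches at most one suffix
    return dict(groups)


def complete_sets(groups):
    """Keep only the sets that have at least a high and a low poly mesh."""
    return {
        base: roles
        for base, roles in groups.items()
        if "high" in roles and "low" in roles
    }
```

Names without a known suffix are simply ignored, which is why the user would still have to keep the base names of matching meshes identical.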

This is just a mockup, and I know there are details missing like the number of rays etc., and I could clean up the layout more, but I was just wondering if this is the type of workflow that would be possible?

Agama Material seems really nice to use. Could it serve as a design reference?

Built-in nodes will be similar to shader nodes in terms of how low level they are. The plan is to integrate node group assets more closely in the UI in menus and search, and bundle more higher level nodes with Blender that way.

The current design handles this differently, with an Image datablock that has the configuration for saving all texture channels, and low/high poly settings on the object.

As I’ve asked about early in this topic, it would be interesting to understand why using nodes for this would be better, what problem they address.

The advantage of doing it at the Image datablock level is that this type of configuration is not specific to baking, and then can also be used for loading textures, image display and tools in the image editor, saving to a multilayer EXR, etc. The advantage of doing it all in the Texture Channels node is that changing from Procedural to Baked is just a simple switch, and there’s no need to set up separate nodes for wiring up and channel unpacking these baked textures to the Principled BSDF.

The reason to have high/low poly baking settings at the object level is to be able to bake multiple objects into a single image texture, with each having different settings.

Yes, agreed - I like the mockup that Lau-Paladin Studios did, but it’s too granular. It would be a PITA to have to set up nodes for each type of bake separately (Roughness, AO, Diffuse, Normal, …). Basically way too many nodes to deal with for each texture; better to have them all bundled together at a higher level.

Well it seems to me like the design has settled out, though I’ll admit I’m still somewhat unclear on it, a precise example would be great. But otherwise what is the plan now Brecht? You mentioned a rough timeline above, any thoughts on how it will go from here?

It’s going to be a few months before Blender developers start working on this, maybe May or June. The focus right now is on texture painting.

If a volunteer wants to start working on some part of this I can give pointers, but otherwise I don’t expect much activity until then.

Another thing to add to the texture bake node proposal to maybe keep in mind:

The Bake node should for sure also support collection input instead of only meshes. I also don’t completely understand what we need the suffixes for if the meshes are connected to a node. It might be better to group the high, low and cage meshes in collections instead of individual mesh inputs. Splitting into many sub-meshes is rather common, I think.

I am not opposed to the suffix solution at all, either. Most bakers use it to distinguish meshes, but it might have to be a more Blender-wide or at least baker-wide setting. Otherwise, if your workflow diverges from the standard, you’d have to input it every time, or hook it up with string nodes to possibly many different bakers, because ultimately many meshes might have to be baked onto one combined texture map in the end again.

Also, UDIM baking is a thing to consider. That’s something I rarely see done right. Even the best commercial bakers still seem to have problems with overlaps and UDIM tiling.
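For reference, the usual UDIM numbering convention maps a UV coordinate to a tile as 1001 plus the column plus ten times the row, with ten columns per row. A minimal sketch of that mapping is below; the function name is hypothetical, and how coordinates that sit exactly on a tile border are assigned is an assumption here (they go to the upper tile), which is one place where bakers can disagree.

```python
import math


def udim_tile(u, v):
    """Return the UDIM tile number containing the UV coordinate (u, v)."""
    col = math.floor(u)
    row = math.floor(v)
    # The UDIM grid is 10 tiles wide and starts at the UV origin.
    if not (0 <= col < 10) or row < 0:
        raise ValueError("UV coordinate is outside the UDIM grid")
    return 1001 + col + 10 * row
```

A baker has to split or clamp every UV island against these tile borders, so an island that straddles two tiles is a typical source of the overlap problems mentioned above.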

I thought I’d add some thoughts to this design feedback thread. I am not in any way dead set that this should be the way to go. Treat my input as an input to the discussion.

Defining objects to bake

First off, I’m thinking about how to define which objects belong to the same texture space (i.e. mostly non-overlapping UVs that will be baked to an image texture). In some other applications this is called a “texture set”.

In the example below, objects are baked per material. (The UV layout is very rough.)

I guess that baking from “selected to active” is outside of the discussion here, but I think that using both collections and objects to define which source object should bake to which target object could be useful. Here is a link to a quickstart video for my addon “Bystedts blender baker” that could give some more info on how I think about baking from low poly to high poly.

Where do textures live?

I’m sure that Brecht has a good idea of how to best solve this issue, but I thought I’d give my 2 cents.

If objects that should be baked with texture nodes are defined by their assigned material, it feels logical to link the texture or textures to the material itself. If the material has zero users, the textures would not have any use and therefore be deleted from the blender file unless they are stored on disk as separate texture(s).

Brecht mentioned multilayer EXR as a format to bake to, which sounds like a good standard format. However, a lot of external render engines and game engines do not support multilayer EXR files, so it would be great to have an option to write to other file formats (which would likely result in one image texture per channel).

Being able to pack images to the blender file is a great way when sharing files via the internet, so it would be great to support that just for packing. Painting on multi layer exr files would likely still require unpacking the images first.

Resolution per material

If we define which objects belong together when baking by material assignment, it makes sense to set the bake resolution in the material settings.

The proposal mentions other file formats than EXR:

The Image datablock would be extended to contain a set of texture channels. With multilayer OpenEXR files this comes naturally, all texture channels are saved in a single file similar to render passes. For other file formats there is a different filename for each texture channel, or multiple texture channels are packed together into RGBA channels of fewer files, as is common for games.
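As a rough illustration of packing multiple texture channels into RGBA, here is a sketch that packs ambient occlusion, roughness and metallic into the R, G and B channels (the “ARM” layout common in games). It works on flat lists of floats rather than real image buffers, and the function names and the choice of a constant alpha are assumptions, not part of the proposal.

```python
def pack_channels(ao, roughness, metallic, alpha=1.0):
    """Pack three grayscale channels into a list of RGBA pixel tuples."""
    if not (len(ao) == len(roughness) == len(metallic)):
        raise ValueError("all channels must have the same number of pixels")
    return [(a, r, m, alpha) for a, r, m in zip(ao, roughness, metallic)]


def unpack_channel(pixels, index):
    """Extract one channel (0=R, 1=G, 2=B, 3=A) from packed RGBA pixels."""
    return [pixel[index] for pixel in pixels]
```

Unpacking is the mirror operation that would be needed when wiring such a packed file back into a shader.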

And also there’s the use case baking multiple materials into a single image, which is why baking is proposed to be per Image rather than per Material.

Baking multiple meshes or materials into a single image becomes straightforward. Link the same Image datablock to the Texture Channels node in all materials, and Blender automatically bakes everything together, assuming the UV maps are non-overlapping.
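The grouping described here can be sketched in plain Python: collect the materials that link to the same image, then check that their UV regions do not overlap. The data layout (dictionaries of names) and the use of axis-aligned UV bounding boxes are assumptions for illustration; a bounding box test is conservative and may report overlaps for islands that do not actually intersect.

```python
from collections import defaultdict
from itertools import combinations


def group_materials_by_image(material_images):
    """Map each image name to the list of materials linked to it."""
    groups = defaultdict(list)
    for material, image in material_images.items():
        groups[image].append(material)
    return dict(groups)


def boxes_overlap(a, b):
    """Test two UV boxes given as (min_u, min_v, max_u, max_v)."""
    return a[0] < b[2] and b[0] < a[2] and a[1] < b[3] and b[1] < a[3]


def overlapping_pairs(uv_bounds):
    """Return the pairs of materials whose UV bounding boxes overlap."""
    return [
        (m1, m2)
        for (m1, b1), (m2, b2) in combinations(uv_bounds.items(), 2)
        if boxes_overlap(b1, b2)
    ]
```

Boxes that only touch along an edge are not counted as overlapping in this sketch.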

Output textures to bring into another render application

In most cases, the texture(s) baked from the layer stack will be what is primarily used in any render engine. The bake image output could possibly be defined by settings in the layer stack node, similar to the output node in the compositor.

An exception, where an image texture other than the layer stack output is needed, would be for example a procedural material in a game engine that uses a baked ambient occlusion image to blend dirt into the material based on the “state” of the object in game.

As you wrote, using an Image datablock as the target for baking does make a lot of sense and is way better for baking multiple materials to one image.

I like your suggested approach way better than my own suggestion with baking per material.

How does the user initiate baking?

Here are some thoughts regarding initiating baking of texture nodes.

The image below shows a mockup where baking selected nodes is initiated via the right click menu. Only the texture nodes that are marked “Baked” will be baked.

Unselected texture nodes and texture nodes marked “procedural” are not baked.

In the image below, the layer stack node is active. By running “Bake active node and inputs”, the input nodes marked “Baked” will be baked first, and finally the layer stack node will be baked. Compared to “Bake selected nodes”, the user saves some time by not having to select each node that needs to be baked.
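One way to read “Bake active node and inputs” is as a depth-first walk over the node graph that bakes upstream nodes before the nodes that depend on them. The sketch below assumes the graph is given as a dictionary from node name to its input node names and that the “Baked” marks are a set of names; both are hypothetical stand-ins for whatever the real node tree would expose.

```python
def bake_order(active, inputs, baked):
    """Return the nodes to bake, inputs first, ending with the active node.

    inputs: dict mapping a node name to the list of its input node names.
    baked:  set of node names marked "Baked".
    """
    order = []
    seen = set()

    def visit(node):
        if node in seen:
            return
        seen.add(node)
        # Visit upstream nodes first so they are baked before this node.
        for upstream in inputs.get(node, []):
            visit(upstream)
        # Nodes marked procedural are traversed but not baked.
        if node in baked or node == active:
            order.append(node)

    visit(active)
    return order
```

For example, with a layer stack fed by a baked scratches node and a procedural noise node, only the scratches node and then the layer stack would be baked.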

Batch baking texture nodes

The image below shows texture nodes baking options in the render settings.

The idea in the proposal is to bake all texture channels at once, at the material level. There is no baking of individual texture nodes. Which use cases do you have in mind that would require more manual baking at the level of individual texture nodes?

I can see the use case where you want to bake texture nodes and turn them into an image texture or mesh attribute for painting. But that would be a one time operation that replaces nodes, rather than some state on nodes.

Yeah, having to bake objects individually is a scary idea and a poor workflow. Having only a global option in the render settings is insufficient, because you cannot choose what should be baked and what should not.

What should happen is that the ON/OFF toggle for the configured bake targets is set per material/node/object, while the baking operation itself is global.

In other words, there should be a “Bake all enabled bake targets” kind of button. Not “bake all materials’ texture nodes” or “bake all materials’ layer stacks”.
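A minimal sketch of that split between a per-target toggle and a single global operation could look like this. The `BakeTarget` class and the `bake` callback are hypothetical; they only illustrate the control flow, not any existing Blender API.

```python
from dataclasses import dataclass


@dataclass
class BakeTarget:
    """A hypothetical bake target with its own ON/OFF toggle."""
    name: str
    enabled: bool = True


def bake_all_enabled(targets, bake):
    """Run `bake` on every enabled target and return the baked names."""
    done = []
    for target in targets:
        if target.enabled:
            bake(target)
            done.append(target.name)
    return done
```

The toggle lives with the data while the operator stays global, so disabling one target never requires changing the selection or the render settings.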

Since the spreadsheet was introduced, I have imagined using it to control this kind of thing. I think it’s way more versatile than render settings or per-object settings, since you could select all objects and display a bake Boolean for all of the selected objects, collections, etc.