Why not bring the best of both worlds, Procedural/Node Tree and Layer/Stack, into the Shader Editor?

A Node Tree is reusable and modular, but it can become hard to decipher when it grows too complex. A Layer Stack, on the other hand, is more straightforward and easy to organize, but in some cases it is difficult to build a procedural texture with it.

I propose using the Krita image format as a new node in the Shader Editor. Why Krita? For several reasons:

1) It’s open source, obviously.

2) We can open and edit images while preserving their non-destructive layers.

3) It becomes possible to work back and forth between Krita and Blender seamlessly.

4) Krita has more mature image authoring tools.

5) We can also export to .psd via Krita if a production demands it.

6) Most importantly, it will encourage more people to use open source applications.

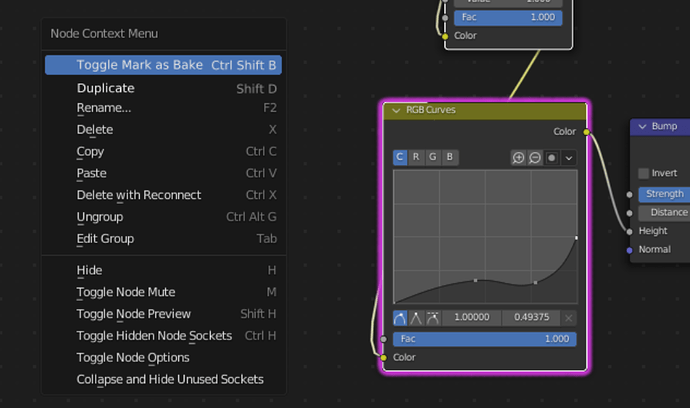

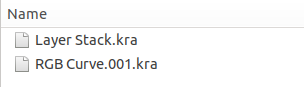

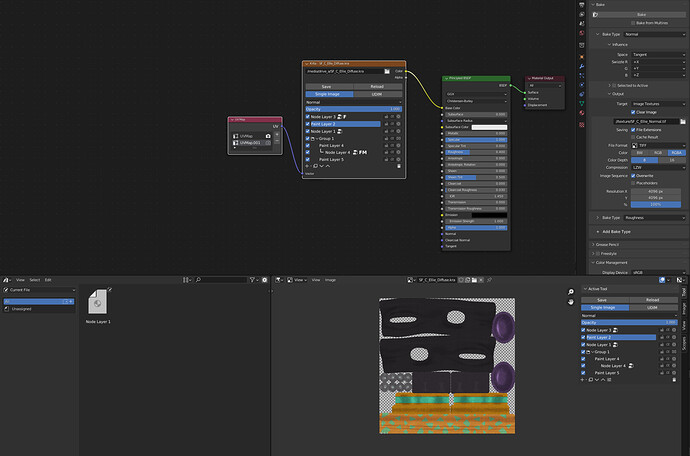

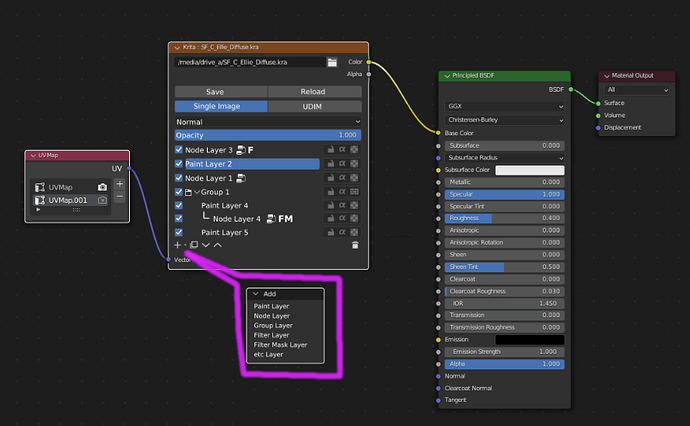

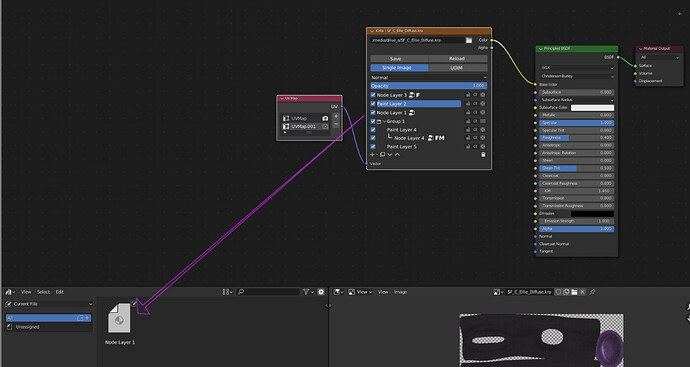

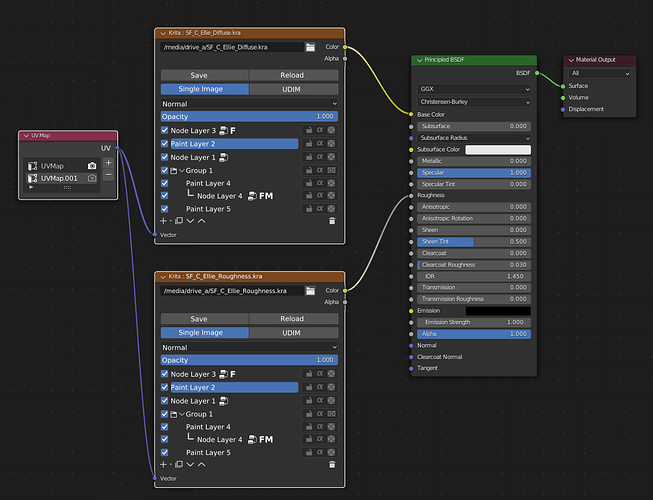

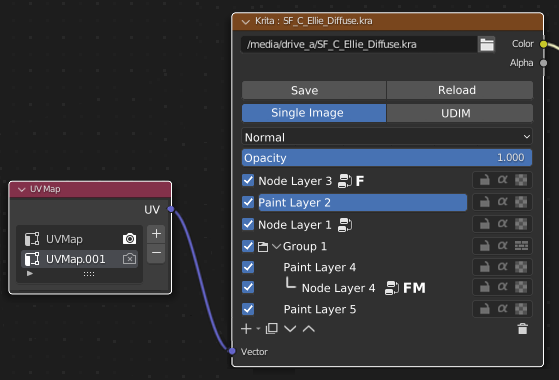

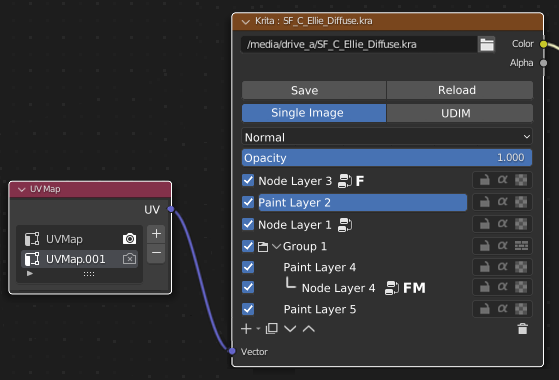

I can’t think of a better name than Krita Node, since it opens a .kra file specifically. What we see below is basically a carbon copy of Krita’s Layer window with some adjustments. You can add various kinds of layers, but the one notable difference is the addition of the Node Layer.

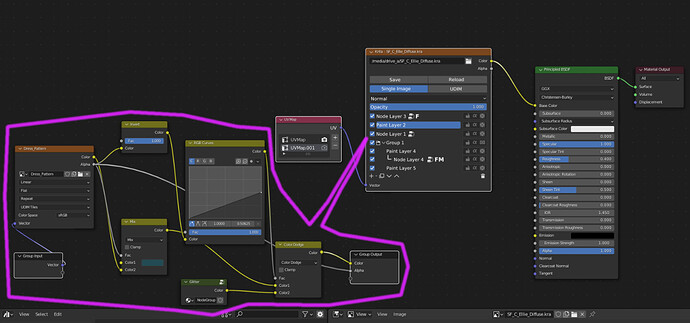

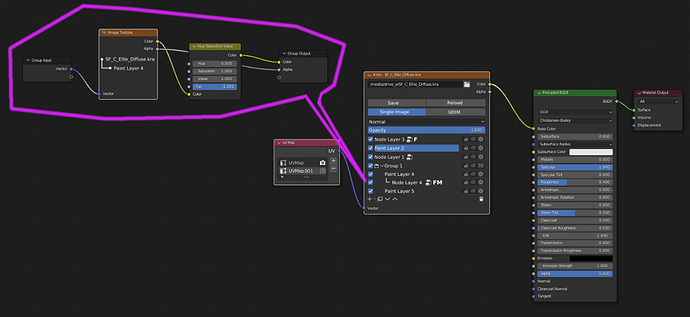

Here we see two kinds of layers that store images. The Paint Layer is where we can draw directly, or drag and drop an image into it from the file explorer. The Node Layer (marked with the node icon beside it) can be opened by double-clicking it (or pressing Tab), à la Group Node, to reveal its node network. Just like a Group Node, it reduces complexity and keeps things organized. The Node Layer could also show its Group Input and Output parameters, so users can tweak them without opening the node. I’m not sure where to put these parameters, though; perhaps the layer could be collapsible?

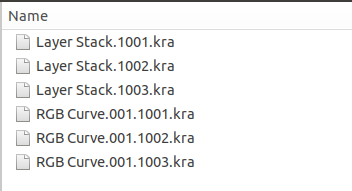
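To make the Paint Layer / Node Layer distinction concrete, here is a minimal Python sketch of how such a stack might be flattened. Everything in it is hypothetical (`PaintLayer`, `NodeLayer` and `composite` are illustrative names, not Blender or Krita API): pixels are single grayscale floats, only Normal blending with opacity is modeled, and a Python callable stands in for the node network, with its exposed Group Input values stored on the layer so they can be tweaked without opening it.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Union

# Hypothetical model: one grayscale float per pixel, Normal blending only.


@dataclass
class PaintLayer:
    """Holds painted or imported pixel values directly."""
    name: str
    pixels: List[float]
    opacity: float = 1.0


@dataclass
class NodeLayer:
    """Wraps a node network like a Group Node.

    `network` stands in for the node tree; `inputs` are the exposed
    Group Input values that can be tweaked without opening the layer.
    """
    name: str
    network: Callable[[int, Dict[str, float]], float]
    inputs: Dict[str, float] = field(default_factory=dict)
    opacity: float = 1.0

    def evaluate(self, size: int) -> List[float]:
        return [self.network(i, self.inputs) for i in range(size)]


def composite(stack: List[Union[PaintLayer, NodeLayer]], size: int) -> List[float]:
    """Flatten the stack bottom to top (stack[0] is the bottom layer)."""
    result = [0.0] * size
    for layer in stack:
        src = layer.pixels if isinstance(layer, PaintLayer) else layer.evaluate(size)
        # Normal blend: interpolate from what is below toward this layer by its opacity.
        result = [below * (1.0 - layer.opacity) + s * layer.opacity
                  for below, s in zip(result, src)]
    return result


# A procedural ramp with an exposed "contrast" input, under a half-opaque paint layer.
ramp = NodeLayer("Node Layer", lambda i, p: min(1.0, i / 3 * p["contrast"]), {"contrast": 1.0})
paint = PaintLayer("Paint Layer", [1.0, 0.0, 1.0, 0.0], opacity=0.5)
flat = composite([ramp, paint], 4)
```

Changing `ramp.inputs["contrast"]` and compositing again updates the result without touching the network itself, which is the point of exposing Group Inputs on the layer.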

If a Node Layer is too complex for Krita to read, perhaps it could be muted or temporarily baked in the .kra file while still keeping the node information. This treatment would apply to every Node Layer except those whose status is set to Filter Layer.
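One way to picture the bake-or-mute fallback is a stored record that keeps the serialized node data untouched and attaches an optional baked result. This is a minimal sketch under my own assumptions: `StoredNodeLayer`, `prepare_for_krita` and `pixels_seen_by_krita` are hypothetical names, the node data is just a JSON string, and a lambda stands in for Blender evaluating the network.

```python
import json
from dataclasses import dataclass, replace
from typing import Callable, List, Optional


@dataclass(frozen=True)
class StoredNodeLayer:
    """Hypothetical record for a Node Layer saved inside a .kra file."""
    name: str
    node_data: str                  # serialized node network, kept verbatim
    is_filter: bool = False         # Filter Layer status
    baked: Optional[tuple] = None   # temporary bake for readers that can't evaluate nodes


def prepare_for_krita(layer: StoredNodeLayer,
                      evaluate: Callable[[str], List[float]]) -> StoredNodeLayer:
    """Attach a bake, unless the layer has Filter Layer status (no bake for those)."""
    if layer.is_filter:
        return layer
    return replace(layer, baked=tuple(evaluate(layer.node_data)))


def pixels_seen_by_krita(layer: StoredNodeLayer) -> Optional[tuple]:
    """A reader that can't evaluate nodes shows the bake; None means the layer is muted."""
    return layer.baked


nodes = json.dumps({"node": "Noise Texture", "Scale": 5.0})  # illustrative payload
stored = prepare_for_krita(StoredNodeLayer("Node Layer", nodes), lambda data: [0.25, 0.75])
```

The node information round-trips unchanged, so Blender can keep editing the network while Krita only ever sees pixels.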

A Node Layer can also change its status to Filter Layer. Some of the layers carry an F (Filter) or FM (Filter Mask) mark, mirroring how Filter Layers work in Krita. The reason is that Blender has its own special nodes that serve exactly this purpose (I imagine a Filter Layer would be limited to a single special/effect node). This means Krita would be able to convert such a Blender node into its own Filter Layer, and vice versa.
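The conversion could be as simple as a two-way lookup table plus the one-node restriction. The sketch below is purely illustrative: the pairings and the Krita-side identifiers are placeholders I chose, not a confirmed correspondence between Blender node types and Krita filter IDs, and parameter conversion is left out entirely.

```python
from typing import Dict, List, Optional

# Placeholder pairings only; real node/filter identifiers would need checking.
BLENDER_TO_KRITA: Dict[str, str] = {
    "Invert Color": "invert",
    "Hue/Saturation/Value": "hsvadjustment",
}
KRITA_TO_BLENDER: Dict[str, str] = {v: k for k, v in BLENDER_TO_KRITA.items()}


def filter_for_network(node_types: List[str]) -> Optional[str]:
    """Krita filter for a Node Layer, or None if it can't become a Filter Layer.

    Follows the proposed restriction: a Filter Layer wraps exactly one effect node.
    """
    if len(node_types) != 1:
        return None
    return BLENDER_TO_KRITA.get(node_types[0])


def network_for_filter(filter_id: str) -> Optional[List[str]]:
    """Reverse direction: turn a Krita Filter Layer back into a one-node network."""
    node = KRITA_TO_BLENDER.get(filter_id)
    return [node] if node is not None else None
```

Keeping the restriction to a single node is what makes the mapping reversible; a multi-node network has no single Krita filter to land on.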

Blending Modes should also be seamless and identical between Blender and Krita. I have tried several times to arrange images manually, replicating a Blender node flow with Krita layers to make a .psd file, but the Blending Modes gave me different results and distorted colors. Even the parameters are quite different at the moment.
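One plausible contributor to the color mismatch (an assumption on my part, not verified for every mode) is the color space in which the blend happens: Blender’s shader nodes work in scene-linear values, while a Krita document blends in its own color space, which for a typical 8-bit sRGB document means gamma-encoded values. This small comparison of a 50% Normal blend of black and white, using the standard sRGB transfer functions, shows how large that difference can be:

```python
def srgb_to_linear(c: float) -> float:
    """Standard sRGB decoding of a [0, 1] value."""
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def linear_to_srgb(c: float) -> float:
    """Standard sRGB encoding of a linear [0, 1] value."""
    return 12.92 * c if c <= 0.0031308 else 1.055 * c ** (1 / 2.4) - 0.055


def mix(a: float, b: float, fac: float) -> float:
    """Normal blend at partial opacity, i.e. a linear interpolation."""
    return a * (1.0 - fac) + b * fac


black, white = 0.0, 1.0  # stored (sRGB-encoded) pixel values

# Blend linear values, then encode for display (scene-linear style).
blended_linear = linear_to_srgb(mix(srgb_to_linear(black), srgb_to_linear(white), 0.5))
# Blend the encoded values directly (e.g. an 8-bit sRGB document).
blended_encoded = mix(black, white, 0.5)
```

The linear blend comes out at about 0.735 while the encoded blend stays at 0.5, so matching Blending Modes by name alone would not be enough; the blending color space would have to match as well.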

In the Image Editor, the same Layering Format would appear in the Properties tab when a Krita image file is opened.

Since Node Layers are reusable, we could also drag and drop a specific Node Layer into the Asset Browser, or right-click it and choose Mark as Asset.

The same workflow also applies to the other channels. Here the Roughness.kra file, with its own layers, is used for the Roughness channel. I imagine it would be even better if an individual Paint Layer could also become a node that can be reused (cloned or instanced) by dragging and dropping it into another Krita Node. For example, when we select Paint Layer 2 in Diffuse.kra, its clone in Roughness.kra would be selected automatically. This is useful for a PBR texturing workflow.

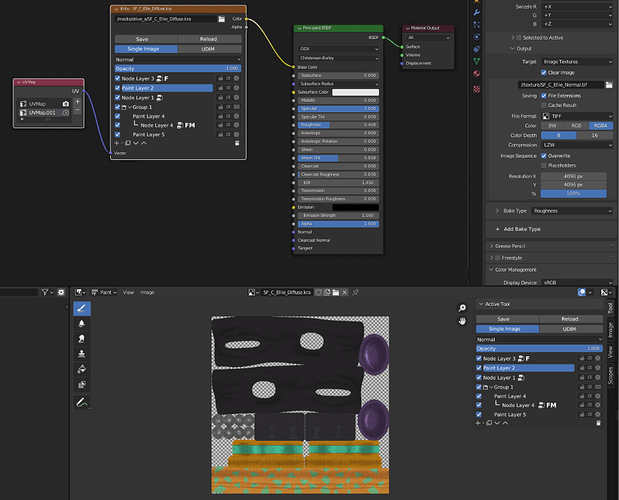
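The linked-selection idea boils down to grouping clones under a shared ID. Here is a minimal sketch, assuming a hypothetical `CloneRegistry` that is told about each clone when it is dragged into another Krita Node; `LayerRef` and every method name are illustrative, not existing API.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Set


@dataclass(frozen=True)
class LayerRef:
    """Identifies a Paint Layer inside a specific Krita Node's .kra file."""
    file: str
    layer: str


class CloneRegistry:
    """Hypothetical registry that links cloned/instanced Paint Layers across files."""

    def __init__(self) -> None:
        self._group_of: Dict[LayerRef, int] = {}
        self._members: Dict[int, Set[LayerRef]] = defaultdict(set)
        self._next_id = 0

    def link(self, source: LayerRef, clone: LayerRef) -> None:
        """Record `clone` as an instance of `source`, e.g. after a drag and drop."""
        gid = self._group_of.get(source)
        if gid is None:
            gid = self._next_id
            self._next_id += 1
            self._group_of[source] = gid
            self._members[gid].add(source)
        old = self._group_of.get(clone)
        if old is not None and old != gid:
            self._members[old].discard(clone)  # a layer belongs to one clone group
        self._group_of[clone] = gid
        self._members[gid].add(clone)

    def select(self, ref: LayerRef) -> Set[LayerRef]:
        """Selecting one layer selects all of its linked clones as well."""
        gid = self._group_of.get(ref)
        return set(self._members[gid]) if gid is not None else {ref}


registry = CloneRegistry()
diffuse_layer = LayerRef("Diffuse.kra", "Paint Layer 2")
roughness_layer = LayerRef("Roughness.kra", "Paint Layer 2")
registry.link(diffuse_layer, roughness_layer)
selected = registry.select(diffuse_layer)
```

Selecting Paint Layer 2 in Diffuse.kra returns both layers, so the UI could highlight the Roughness.kra clone at the same time.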

Some might ask: what if the UVs change and we need to adjust the texture to the new UV layout? That is a pretty common scenario in a texturing department. That’s where the new UV Map Node comes in handy: with a click of a button, we could re-bake all the Paint Layer images into the new UV layout.
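Under the hood, such a re-bake is a texture transfer: for each texel of the new layout, find the face it falls in, compute its barycentric coordinates in the new UVs, and apply them to that face’s old UVs to know where to sample the old image. The sketch below is my own simplification (nearest-neighbour sampling, rows indexed directly by v with no vertical flip, no padding or seam bleeding), and `rebake` is a hypothetical name:

```python
from typing import List, Optional, Tuple

UV = Tuple[float, float]
Tri = Tuple[UV, UV, UV]


def barycentric(p: UV, tri: Tri) -> Optional[Tuple[float, float, float]]:
    """Barycentric weights of p in tri, or None if p lies outside it."""
    (ax, ay), (bx, by), (cx, cy) = tri
    det = (by - cy) * (ax - cx) + (cx - bx) * (ay - cy)
    if det == 0:
        return None  # degenerate triangle
    w0 = ((by - cy) * (p[0] - cx) + (cx - bx) * (p[1] - cy)) / det
    w1 = ((cy - ay) * (p[0] - cx) + (ax - cx) * (p[1] - cy)) / det
    w2 = 1.0 - w0 - w1
    if min(w0, w1, w2) < -1e-9:  # small tolerance for texels on shared edges
        return None
    return w0, w1, w2


def rebake(old_tex: List[List[float]], faces: List[Tuple[Tri, Tri]], size: int) -> List[List[float]]:
    """Resample old_tex into a size x size texture for the new UV layout.

    `faces` pairs each triangle's old UVs with its new UVs (same mesh face).
    """
    old_h, old_w = len(old_tex), len(old_tex[0])
    new_tex = [[0.0] * size for _ in range(size)]
    for y in range(size):
        for x in range(size):
            p = ((x + 0.5) / size, (y + 0.5) / size)  # texel centre in new UV space
            for old_tri, new_tri in faces:
                w = barycentric(p, new_tri)
                if w is None:
                    continue
                # Same weights, old UVs: where this surface point lived before.
                u = sum(wi * uv[0] for wi, uv in zip(w, old_tri))
                v = sum(wi * uv[1] for wi, uv in zip(w, old_tri))
                ox = min(old_w - 1, int(u * old_w))
                oy = min(old_h - 1, int(v * old_h))
                new_tex[y][x] = old_tex[oy][ox]
                break
    return new_tex


# A quad (two triangles) whose new UV layout is the old one mirrored in u.
old_faces = [((0, 0), (1, 0), (1, 1)), ((0, 0), (1, 1), (0, 1))]
new_faces = [tuple((1 - u, v) for u, v in tri) for tri in old_faces]
result = rebake([[0.0, 1.0], [2.0, 3.0]], list(zip(old_faces, new_faces)), 2)
```

Mirroring the layout mirrors the baked image, which is an easy sanity check; a production version would use filtered sampling and edge padding.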

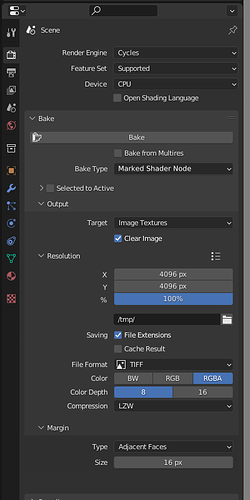

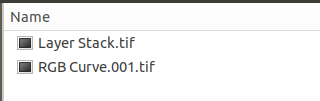

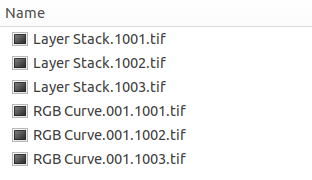

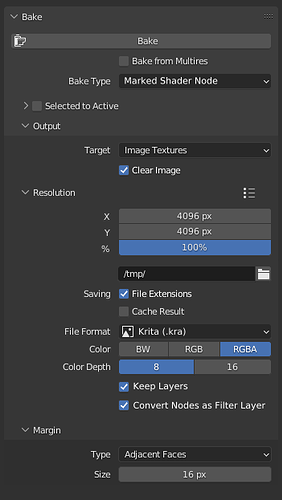

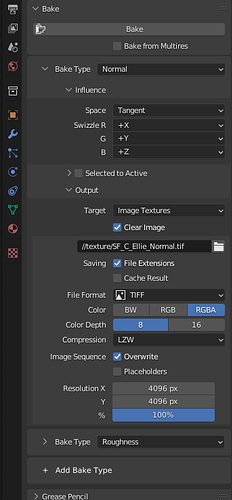

For the Bake workflow, I propose using a Collection of Render Types, each with its own render parameters. The reason is that in some productions the requirements for texture maps are not uniform; for example, the diffuse map might be a 4K, 16-bit TIFF file, while the specular map might be a 2K, 8-bit TIFF file.

At the very bottom of the Bake tab, users can add as many Bake Types as they want. It would also be nice if users could create their own Bake templates.
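A Bake template could simply be a saved list of per-map settings. This is a minimal sketch using the diffuse/specular example above; `BakeType`, its fields and the JSON format are hypothetical choices for illustration, not existing Blender bake settings.

```python
import json
from dataclasses import asdict, dataclass
from typing import List


@dataclass(frozen=True)
class BakeType:
    """Hypothetical per-map bake settings; field names are illustrative."""
    name: str
    resolution: int           # square size in pixels, e.g. 4096 for "4K"
    bit_depth: int            # bits per channel, e.g. 8 or 16
    file_format: str = "TIFF"


def save_template(bake_types: List[BakeType]) -> str:
    """Serialize a list of Bake Types so it can be reused as a template."""
    return json.dumps([asdict(b) for b in bake_types], indent=2)


def load_template(text: str) -> List[BakeType]:
    """Rebuild the Bake Types from a saved template."""
    return [BakeType(**entry) for entry in json.loads(text)]


# The example from the text: 4K 16-bit diffuse, 2K 8-bit specular.
production = [
    BakeType("Diffuse", 4096, 16),
    BakeType("Specular", 2048, 8),
]
restored = load_template(save_template(production))
```

Because each entry carries its own resolution, bit depth and format, a studio could keep one template per production and load it into the Bake tab instead of re-entering the settings.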

Overall, if we do this, I think it will be the greatest Open Source Universe merger of all time.