Greetings!

I am working with a big object, 1000 m in size.

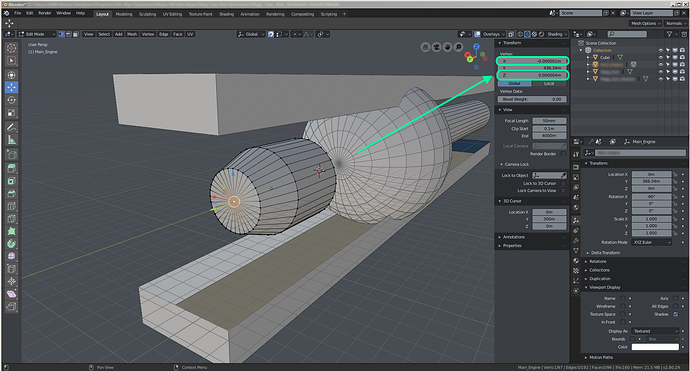

When I edit its components, inaccuracies appear.

Every time I fix an inaccuracy, it comes back.

Your object is a kilometer long and you are worried about being off by a few micrometers?

This sounds like a job for a CAD program. And maybe a better understanding of floating point math.

I am confused by this inaccuracy.

In v2.79 this problem did not exist.

this sounds very funny

Just a guess, but the difference might be in the way floating point numbers are rounded off for display in 2.80. I seem to recall some complaints about 2.79 not showing all the significant digits for floats.

Floating point values used by Blender and most other 3D software only have about 6-7 significant decimal digits, so this kind of inaccuracy is expected. There were changes to the unit system in 2.8 though; by default it might be displaying more digits than before, which reveals the inaccuracy.
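To put numbers on the "6-7 digits" point, here is a minimal Python sketch. It is an illustration only, not Blender code, and it assumes coordinates are stored as IEEE 754 single precision values. The helper names `to_float32` and `float32_spacing` are made up for this example. It prints the gap between neighbouring representable values at a few coordinate magnitudes.

```python
import struct

def to_float32(x: float) -> float:
    """Round a Python float (64-bit) to the nearest 32-bit float."""
    return struct.unpack("<f", struct.pack("<f", x))[0]

def float32_spacing(x: float) -> float:
    """Gap between float32(x) and the next larger float32 (x positive, finite)."""
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    # For positive floats, adding 1 to the bit pattern gives the next value up.
    next_up = struct.unpack("<f", struct.pack("<I", bits + 1))[0]
    return next_up - to_float32(x)

for coord in (1.0, 50.0, 500.0, 1000.0):
    gap_um = float32_spacing(coord) * 1e6
    print(f"{coord:>7} m -> spacing {gap_um:.2f} micrometers")
```

Under that assumption the spacing is roughly 3.8 micrometers at 50 m and roughly 61 micrometers at 1000 m, so errors of a few micrometers on an object this size are simply the grid the numbers live on.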

Can this be fixed somehow?

You can set the Length units to Adaptive, to get the unit system display behavior from 2.79. The inaccuracy itself is unlikely to be fixed, as it would significantly increase memory usage and decrease performance.

Thank you for the explanation, so there is no future plan to move to double precision?

During my daily job I do occasionally run into precision problems when using huge coordinates that represent very large land areas (in meters or Blender units, the number of digits grows very quickly).

There is no current plan to change this, maybe at some point in the future.

it may be unpleasant for those with OCD and those of us with minor cases of OCD who have never taken a machining class to learn that a perfect 0.0 dead on accurate measurement doesn’t exist in the real world.

for all intents and purposes that is an incredibly accurate placement. you’re just too focused on it not being perfect.

those tiny numbers are entirely likely to be within real world machining tolerances even if you’re modeling to scale.

take the largest inaccuracy in your pic for example 0.000004m

that is only 0.004 mm.

that’s 4 microns

even the finest grain of sand is much larger than that (0.0625mm / 62.5 microns) and that is the smallest grain of sand.

a single red blood cell is 8 microns.

so you have an inaccuracy of half a single red blood cell on the z axis there.

average bacteria size is 2 microns.

so you have an inaccuracy of half an average sized bacteria on the x axis there.

get out your blender microscope. you’re going to need it.

if this were a real world object you’d need highly calibrated equipment to measure that accurately.

you sure couldn’t measure that by hand. you’d have to accept it as a good measurement, because the margin of error from human movement makes that too small a difference to matter.

For sure we can agree most use cases don’t desire this precision.

But analyzing this problem further, and engineering an interface to give the user options for desired precision, can definitely provide significant performance benefits, in addition to empowering hardcore physics/CAD users who need greater precision.

Let’s discuss first encoding of variables.

Recently Google released an open source encoding and decoding library called Draco that accepts .OBJ files and outputs encodings whose vectors use a chosen number of bits (n-bit), see for example: https://codelabs.developers.google.com/codelabs/draco-3d/index.html#6

So on the side of reduced-memory encoding, it’s not crazy to imagine that many mainstream users would love to see sudden 2x, 3x reductions in encoded size via an approach like Draco.
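To make the n-bit idea concrete, here is a minimal sketch of plain uniform quantization in Python. This is not Draco's API and not necessarily its actual algorithm. It is a generic illustration of trading bits for precision, and the function names `quantize` and `dequantize` and the sample coordinates are hypothetical.

```python
def quantize(values, bits):
    """Map floats to integer codes in [0, 2**bits - 1] over their own range."""
    lo, hi = min(values), max(values)
    levels = (1 << bits) - 1
    # Step size between adjacent codes; guard against a zero-width range.
    scale = (hi - lo) / levels if hi > lo else 1.0
    codes = [round((v - lo) / scale) for v in values]
    return codes, lo, scale

def dequantize(codes, lo, scale):
    """Reconstruct approximate floats from integer codes."""
    return [lo + c * scale for c in codes]

# Hypothetical coordinates along one axis of a 1000 m object.
xs = [0.0, 123.456, 500.0, 999.999, 1000.0]
for bits in (11, 14, 16):
    codes, lo, scale = quantize(xs, bits)
    restored = dequantize(codes, lo, scale)
    worst = max(abs(a - b) for a, b in zip(xs, restored))
    print(f"{bits} bits: step {scale:.4f} m, worst error {worst:.4f} m")
```

The worst-case error is about half a step, so fewer bits save memory at a cost that depends directly on the size of the object being encoded.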

But I’m curious about the other end as well, specifically with respect to micron-level simulations.

This level of precision turns out to be incredibly interesting in photogrammetry, because the size of a single sensor pixel in something like an APS-C camera (a common DSLR format) is, coincidentally, approximately 4 microns.

Since Blender is all about modeling light and the way it interacts with everything, I figure it’s worthwhile to remind ourselves that the wavelength of visible light lies between approximately 400 nm and 750 nm, i.e. 0.4 microns to 0.75 microns.

So, it strikes me as really interesting and valuable for science and engineering researchers to be able to model properties of light with at least 100 nanometer / 0.1 micron precision. According to this interesting perspective on float vs. double precision, double precision variables do indeed reach “radius of a proton” resolution at “size of a room” scale: http://www.ilikebigbits.com/2017_06_01_float_or_double.html
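As a rough check of that claim, here is a small Python sketch that estimates the gap between adjacent representable values from the binary exponent. The `spacing` helper and the 10 m "room" are my own assumptions for illustration, and the proton radius is only an approximate figure for comparison.

```python
import math

FLOAT32_BITS = 24  # significand bits of IEEE 754 single precision (incl. implicit bit)
FLOAT64_BITS = 53  # significand bits of IEEE 754 double precision

def spacing(x: float, precision_bits: int) -> float:
    """Gap between adjacent representable values near nonzero x (normal range)."""
    _, exponent = math.frexp(abs(x))  # abs(x) = m * 2**exponent, 0.5 <= m < 1
    return math.ldexp(1.0, exponent - precision_bits)

ROOM_M = 10.0                 # assumed "size of a room" coordinate, in meters
PROTON_RADIUS_M = 0.84e-15    # approximate, for comparison only
VISIBLE_LIGHT_M = (0.4e-6, 0.75e-6)

print(f"float32 spacing at {ROOM_M} m: {spacing(ROOM_M, FLOAT32_BITS):.3e} m")
print(f"float64 spacing at {ROOM_M} m: {spacing(ROOM_M, FLOAT64_BITS):.3e} m")
print(f"proton radius (approx.):      {PROTON_RADIUS_M:.3e} m")
print(f"visible light wavelengths:    {VISIBLE_LIGHT_M[0]:.1e} to {VISIBLE_LIGHT_M[1]:.1e} m")
```

At 10 m, single precision resolves only about 0.95 microns, which is coarser than the wavelength of visible light, while double precision resolves about 1.8e-15 m, the same order of magnitude as a proton radius.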

Following up on the higher precision use case: suppose we would like to implement a switch that users can flip to enable double precision variables for position encodings. Does anyone have experience with or insight into the codebase to suggest where that project could start and what knock-on changes it might entail?

Ah, I miss mega-meters support, you miss nano-meters support

*Sorry to cite myself… https://www.youtube.com/watch?v=CL-Gj5XUpy4