Hi guys,

I have an idea for getting Cycles noise-free without a denoiser at very low sample counts (64/128?).

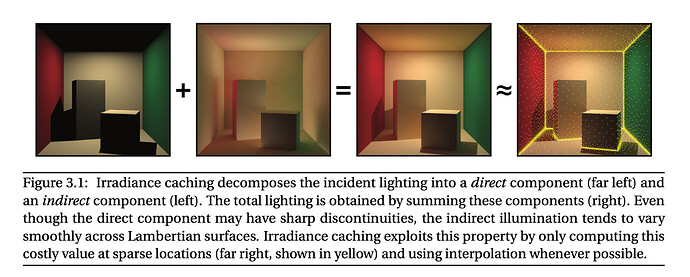

OK, so we render the scene; it doesn't matter whether tiled or progressive. We get to the end of it at, let's say, 64 samples. At that point the dark areas will be noisy. Now, while the scene is still in memory, we take only the Direct Light Diffuse pass and use it as additional emission for a second, additive indirect pass whose result is added only to the Indirect Diffuse AOV, nothing else. Let's say another 64 samples.

EDIT: Obviously this is two-pass rendering: the second 64 samples treat the diffuse direct light (together with the texture albedo, of course) as emission from that surface.

Because direct light always gets super clean super fast, the result should be very clean, at least for the diffuse pass. That applies to the second bounce especially, because the surface is now illuminated by a large area of the first-hit surface. This could be extended to the specular or refraction AOVs too, but diffuse GI would cover most problems.
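To make the two-pass idea a bit more concrete, here is a minimal toy sketch in Python. It is not Cycles code and uses no Cycles API: the scene is reduced to a handful of diffuse patches, `form_factors[i][j]` is an assumed fraction of light leaving patch `j` that reaches patch `i`, and the function names are made up for illustration. The second pass treats `albedo * direct_diffuse` as emission and gathers it with a few random samples per patch; it covers only the first indirect bounce.

```python
import random


def exact_one_bounce(direct_diffuse, albedo, form_factors):
    """Reference value: indirect light reaching each patch after one bounce."""
    n = len(direct_diffuse)
    return [
        sum(form_factors[i][j] * albedo[j] * direct_diffuse[j] for j in range(n))
        for i in range(n)
    ]


def second_pass_indirect(direct_diffuse, albedo, form_factors, samples=64, seed=0):
    """Monte Carlo second pass (hypothetical toy model, not a Cycles feature).

    The cached direct diffuse light, multiplied by the albedo, acts as
    emission. Each patch samples other patches in proportion to its
    form factors and averages the emission it finds there.
    """
    rng = random.Random(seed)
    n = len(direct_diffuse)
    # "Emission" for the second pass: clean direct light times surface albedo.
    emission = [albedo[j] * direct_diffuse[j] for j in range(n)]

    indirect = []
    for i in range(n):
        weights = form_factors[i]
        total = sum(weights)
        if total == 0.0:
            # This patch sees nothing, so it receives no indirect light.
            indirect.append(0.0)
            continue
        # Pick which patch each sample ray hits, proportional to form factor.
        hits = rng.choices(range(n), weights=weights, k=samples)
        indirect.append(total * sum(emission[j] for j in hits) / samples)
    return indirect


# Three patches; only patch 0 is lit directly.
direct = [1.0, 0.0, 0.0]
albedo = [0.8, 0.5, 0.5]
F = [
    [0.0, 0.5, 0.5],
    [0.5, 0.0, 0.5],
    [0.5, 0.5, 0.0],
]

reference = exact_one_bounce(direct, albedo, F)
estimate = second_pass_indirect(direct, albedo, F, samples=64)
```

In this toy setup the estimate would be added to the indirect diffuse result only, leaving every other pass untouched. How well this carries over to a real path tracer is exactly the open question of the post.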

Interior scenes would render extremely fast and noise-free with this, because in the second pass the walls are emissive by the amount of energy they bounce.

There should also be no artifacts, because the rendering is per pixel.


Hmm… I don't know, but what if there is another way… just putting ideas out.

What if the renderers of the year 2040 are not path tracers at all? Hm… just trying to put some ideas forth; maybe even a blind chicken sometimes finds a grain of corn.