Synopsis:

Blender comes with a built-in video sequence editor (VSE) that allows users to do basic to intermediate video editing tasks. While the editor supports retiming video and audio through retiming keys, one feature that is missing for audio clips is the ability to preserve the original pitch of the audio when it is sped up or slowed down.

Thus, this project will focus on adding a toggle option to preserve pitch in the intervals between the retiming keys.

Benefits:

Pitch correction is important in video editing software because it lets users manipulate the timing and duration of audio clips while retaining the natural quality of voices, music, and other sound effects. Integrating pitch correction into Blender’s VSE would make Blender a stronger open-source alternative to paid video editing software. It would also fit the workflow of users who already rely on Blender’s VSE, since it eliminates the need to adjust audio pitch in an external program and lets them stay within Blender’s packaged 3D modeling and video editing suite.

Deliverables:

- Investigation document - explore research papers (look at pitch algorithms for voice and music, their benefits, and their trade-offs to determine the best implementation for Blender)

- Isolated implementation of pitch-preserving algorithm outside of Blender

- Integration of pitch-preserving functionality in Blender

- End-user documentation

- Stretch Goals - Start a framework for a pitch shifter, which will allow users to shift the audio up or down by a specified number of semitones
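For the pitch shifter stretch goal, a shift given in semitones has to be converted into a frequency ratio. The sketch below assumes 12-tone equal temperament, where each semitone multiplies the frequency by the twelfth root of two. The function names are hypothetical and are not part of Blender or Audaspace.

```python
def semitones_to_ratio(semitones: float) -> float:
    """Frequency ratio for a shift of `semitones` (12-tone equal temperament)."""
    return 2.0 ** (semitones / 12.0)


def shifted_frequency(frequency_hz: float, semitones: float) -> float:
    """Frequency that results from shifting `frequency_hz` by `semitones`."""
    return frequency_hz * semitones_to_ratio(semitones)


# Shifting A4 (440 Hz) up by 12 semitones gives one octave up.
print(shifted_frequency(440.0, 12))
# Shifting down by 12 semitones halves the frequency.
print(shifted_frequency(440.0, -12))
```

A shift of +12 semitones doubles the frequency and -12 halves it; fractional values can be passed for finer adjustments.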

Project Details:

Pitch correction is a difficult problem because it involves two non-trivial operations: pitch detection and pitch shifting. Pitch correction systems typically combine a pitch detector (such as autocorrelation or zero-crossing analysis), a pitch-choosing algorithm, and a pitch-scaling algorithm (such as simple overlap-add, which operates in the time domain, or a phase vocoder, which operates in the frequency domain using the Short-Time Fourier Transform). For this project, I will explore audio papers and third-party libraries that cover pitch shifting in depth. So far I have compiled the following papers and libraries, which I will begin reviewing in the coming months.

Papers:

- Pitch Shifting of Audio Signals Using the Constant-Q Transform

- Improving Time-Scale Modification of Music Signals Using Harmonic-Percussive Separation

Libraries:

Rubber Band Library - An open-source audio time-stretching and pitch-shifting library. Note that the license is GNU GPL v2, which is compatible with Blender. Considerations such as binary size and build complexity will need to be evaluated, which I will look at in the coming weeks.

Relevant Blender Forum Threads:

Discussion of the difficulty of animating pitch, which is relevant to this project:

Better Audio Integration - @neXyon

After exploring the research papers, third-party libraries, and how others have approached this problem, I will compile my findings into a document in which, where possible, I will compare the benefits and trade-offs of the different approaches and choose the one that best fits the needs of Blender. If we decide to implement the correction algorithms manually in C++, then time will be put towards developing the algorithm outside of the Blender codebase. After receiving approval from my mentor, other developers, and users, the algorithm will be integrated into Blender’s audio library, Audaspace, and then into Blender’s main codebase.
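To give a feel for the pitch-detection stage mentioned above, here is a minimal sketch of an autocorrelation-based pitch estimator in pure Python. It is only an illustration under simplifying assumptions (a clean, monophonic, stationary tone); the function names and the `fmin`/`fmax` search bounds are hypothetical and do not come from Blender, Audaspace, or any of the listed papers.

```python
import math


def make_sine(frequency_hz, sample_rate, num_samples):
    """Generate a mono sine tone as a list of floats."""
    step = 2.0 * math.pi * frequency_hz / sample_rate
    return [math.sin(step * i) for i in range(num_samples)]


def estimate_pitch_autocorr(samples, sample_rate, fmin=50.0, fmax=1000.0):
    """Estimate the fundamental frequency from the autocorrelation peak.

    The search is limited to lags that correspond to [fmin, fmax].
    """
    n = len(samples)
    min_lag = max(1, int(sample_rate / fmax))
    max_lag = min(n - 1, int(sample_rate / fmin))
    best_lag, best_score = min_lag, float("-inf")
    for lag in range(min_lag, max_lag + 1):
        # Unnormalized autocorrelation: similarity between the signal and a
        # copy of itself delayed by `lag` samples. Longer lags sum fewer
        # terms, which favors the shortest matching period.
        score = sum(samples[i] * samples[i + lag] for i in range(n - lag))
        if score > best_score:
            best_lag, best_score = lag, score
    return sample_rate / best_lag


tone = make_sine(200.0, 8000, 800)  # 0.1 s of a 200 Hz tone at 8 kHz
print(estimate_pitch_autocorr(tone, 8000))  # should be close to 200 Hz
```

Real voice and music signals are noisy and polyphonic, so a production detector would need windowing, normalization, and some strategy for octave errors, which is part of what the investigation document is meant to evaluate.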

Blender Integration:

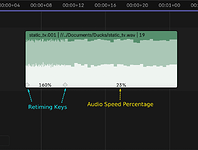

In Blender, retiming keys can be added through the shortcut I → Add Retiming Key, which can be used to adjust the speed of strips. These retiming keys can be repositioned to achieve the effect of speeding up or slowing down the audio as indicated by the audio speed percentage. However, the change in the audio playback speed has the effect of distorting the natural tonal quality of the audio.
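The pitch change can be reproduced outside of Blender. The sketch below assumes that retiming is done by naive resampling (reading the source at a different rate with linear interpolation), which is a simplification and not necessarily how Audaspace implements it. All function names are hypothetical.

```python
import math


def make_sine(frequency_hz, sample_rate, num_samples):
    """Generate a mono sine tone as a list of floats."""
    step = 2.0 * math.pi * frequency_hz / sample_rate
    return [math.sin(step * i) for i in range(num_samples)]


def naive_retime(samples, speed):
    """Change playback speed by resampling with linear interpolation.

    speed > 1 shortens the clip, speed < 1 lengthens it. Pitch is NOT
    preserved: every frequency is scaled by `speed`.
    """
    out = []
    j = 0
    while j * speed < len(samples) - 1:
        pos = j * speed
        i = int(pos)
        frac = pos - i
        out.append(samples[i] * (1.0 - frac) + samples[i + 1] * frac)
        j += 1
    return out


def zero_crossing_frequency(samples, sample_rate):
    """Rough frequency estimate: rising zero crossings per second."""
    crossings = sum(1 for a, b in zip(samples, samples[1:]) if a < 0 <= b)
    return crossings * sample_rate / len(samples)


sample_rate = 8000
original = make_sine(200.0, sample_rate, sample_rate)  # 1 s at 200 Hz
fast = naive_retime(original, 1.5)                     # 150% speed

print(zero_crossing_frequency(original, sample_rate))  # roughly 200 Hz
print(zero_crossing_frequency(fast, sample_rate))      # roughly 300 Hz
```

At 150% speed the clip is about two thirds as long and the tone is about 1.5 times higher, which is the effect a Preserve Pitch option would need to cancel out.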

I propose adding a Preserve Pitch toggle option under the Sound tab for each audio strip instance (including the ones that are created by the “Split Strip” operation) as demonstrated below, which will set the pitch-correction flag for that audio strip.

The pitch correction algorithm itself will be implemented in Blender’s high-level external audio library, Audaspace, which supports animating sound properties such as pitch and volume. Blender will then utilize Audaspace’s C/C++ API defined in extern/audaspace/bindings/C. For the pitch-correction functionality, the API will have to be adjusted in such a way that other projects outside of Blender remain compatible (or, if breaking compatibility might be acceptable, this will need further discussion with the maintainer of Audaspace and other developers).

Several ideas have been floated for toggling between pitch-corrected and uncorrected behavior, but more information is needed on exactly how this should be done while the Audaspace compatibility discussions continue. The ideal scenario (credits to @iss) is that frame mapping is used, so that we can avoid the large float buffers currently used for animating pitch in Audaspace and do the resampling/pitching efficiently while the audio is played. This will need to take into account the pitch-correction flag and the percentage by which each section of audio is compressed or stretched.
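One way to picture the frame-mapping idea is as a piecewise-linear lookup from timeline position to source position, evaluated on demand instead of being baked into a per-frame buffer. The sketch below is purely illustrative: the `(timeline_frame, source_frame)` key representation and the `map_frame` function are assumptions made for this example and do not reflect Blender's or Audaspace's actual data structures.

```python
from bisect import bisect_right


def map_frame(keys, timeline_frame):
    """Map a timeline frame to (source_frame, speed).

    `keys` is a list of (timeline_frame, source_frame) pairs sorted by
    timeline frame, one pair per hypothetical retiming key. Between two
    keys the mapping is linear, so the speed of that interval is the
    slope of the segment.
    """
    timeline_positions = [key[0] for key in keys]
    # Index of the segment whose start is at or before timeline_frame,
    # clamped so that positions outside the keys use the nearest segment.
    index = bisect_right(timeline_positions, timeline_frame) - 1
    index = max(0, min(index, len(keys) - 2))
    (t0, s0), (t1, s1) = keys[index], keys[index + 1]
    speed = (s1 - s0) / (t1 - t0)
    return s0 + (timeline_frame - t0) * speed, speed


# Three keys: the first interval plays at 200%, the second at 50%.
keys = [(0, 0), (50, 100), (150, 150)]
print(map_frame(keys, 25))   # inside the 200% interval
print(map_frame(keys, 100))  # inside the 50% interval
```

With a lookup like this, the playback code could query the source position and the local speed for any output position, and apply pitch correction only when the strip's flag is set and the speed differs from 1.0.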

Finally, as for the UI, this will require defining an RNA property in the function rna_def_sound() in rna_sequencer.cc, which will correspond to a newly defined DNA flag in DNA_sequence_types.h. Additionally, the UI for the Preserve Pitch toggle will need to be added in the draw() method of class SEQUENCER_PT_adjust_sound in space_sequencer.py. By default, the Preserve Pitch option will be turned on.

Project Schedule:

This is a large-sized project (350 hours) with a predicted completion time frame of 17-18 weeks. I will likely be working part-time over the summer and expect to commit at least 20 hours per week to this project after my semester ends on May 17th. I will probably be on vacation during one of the weeks over the summer. Regardless of whether the project is accepted to GSoC, I still intend to further explore different approaches for pitch correction over the summer and make some progress on this project. Additionally, in the coming weeks (if I have time), I will refresh my digital signal processing knowledge by reviewing the following digital book and implementing a naive phase vocoder in C++ in preparation for this project.

Week 1-3

- Read and explore different approaches; look at research papers, 3rd party libraries, or what others have implemented

- Create an investigation document listing benefits and tradeoffs for each approach (Deliverable #1)

- Research Blender’s codebase further and really solidify details with mentor and other developers

Week 4-6

- Continue experimenting with implementation of the pitch correction algorithm

- Finalize an isolated implementation of pitch correction in either Python or C++, focusing more on a naive implementation in C++ (Deliverable #2)

- If we’re using a 3rd party library, these weeks can become additional buffer weeks or time spent integrating the library into Blender

Week 7-9

- Begin implementing pitch correction algorithm into Audaspace

- Integrate into Blender using Audaspace’s C++ API

- Ask for feedback and fix any issues from the community

Week 10-13

- Implement UI changes for pitch correction toggle

- Finish integrating pitch correction toggle functionality (Deliverable #3)

- Continue to ask for feedback and fix any issues from the community relating to pitch correction functionality

Week 14-16

- Prepare for final submission

- Make sure the code and functionality are well-optimized and memory-efficient (i.e., no major bottlenecks or delays while audio is being previewed with pitch preservation enabled)

- Clean up code and add test cases (if needed)

- Finalize user and developer documentation (Deliverable #4)

Week 17-18

- Buffer weeks: think about the pitch shifter stretch goal if enough time remains, or fix any other bugs with the VSE