Hi everyone! I’m currently writing a proposal for adding HDR video support to the VSE, and I would like to ask whether my current project schedule looks achievable and whether I have missed any aspect of adding HDR support.

It looks like HDR display in the viewport is currently only supported on macOS. While I’m happy to rent a MacBook for the duration of the project, I wanted to ask whether there might be a spare one available to borrow at Blender HQ in Amsterdam (I live in Utrecht). Also, is it safe to assume that adding HDR display support on Windows as a later personal project would be quite non-trivial?

Cheers!

[This is a copy of the draft and might be out of date, refer to the link above for the most up-to-date version!]

Project Name

Adding High Dynamic Range (HDR) support for video in Blender.

Name

Xiao yi Hu

Contact

LinkedIn: www.linkedin.com/in/xiao-yi-hu-52b420196

Synopsis

HDR video allows for more immersive visuals and expanded artistic expression through a wider brightness range and color gamut compared to traditional SDR video. This project aims to add functionality that allows users to view, render and export their videos in HDR formats such as HLG and HDR10 within the Blender video sequence editor (VSE).

Benefits

Currently, Blender users have no direct way to view and export their videos in HDR within Blender. With this functionality, artists using Blender will be able to create their video projects in higher fidelity and with a wider range of visual expression.

Most well-known video editing packages, such as DaVinci Resolve and Adobe Premiere, require paid licences to edit videos in these formats. Adding HDR support to Blender gives those unable to afford or justify these licence costs a way to produce HDR content free of charge, and gives Blender a key differentiating feature compared to other free video editing software.

Deliverables

- Add importing support for HDR video.

  - Reading and understanding HDR metadata.

  - Decoding PQ and HLG video data into Blender’s internal linear working and sequencer color spaces.

- Add exporting support for HDR video.

  - Exporting HDR metadata.

  - Encoding from Blender’s working and sequencer color spaces into PQ and HLG video data.

- Expose the new encode and decode functionality to users via the UI.

- Enable the video sequencer to display HDR content on supported displays.

- Documentation of the implemented deliverables.

Project Details

Deliverables and priorities

The main priority of this project is to allow users to decode and encode HDR video in Blender and to implement the required UI. Users first need HDR data to work with. Once decode and encode work, simple video editing workflows are possible even without HDR display in the editor, although suboptimal: the HDR grading can be previewed by rendering short video files instead.

Displaying HDR in the VSE window is the second priority and will be worked on after video decode and encode have been implemented.

For a complete overview of the subtasks and their timelines, refer to the project schedule section.

HDR and Color Spaces

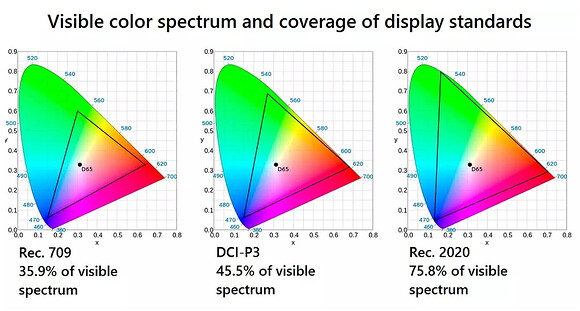

To understand the core goals of this project, let us briefly define what a color space is before looking at the tasks involved. A color space is defined by a color gamut and a transfer function: the gamut determines which colors can be represented, and the transfer function determines the dynamic range of brightness. Beyond the color space itself, HDR standards also specify a bit depth and metadata formats, which displays use to adapt (tone map) the content when they are unable to display the full HDR range.
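As an example of such metadata, HDR10 carries static content light level values, MaxCLL and MaxFALL (defined in CTA-861.3). The minimal sketch below computes them, assuming frames are already available as interleaved linear RGB values in nits; `ContentLightLevel` and `cll_add_frame` are hypothetical names, not Blender or ffmpeg API.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical accumulator for HDR10 static content light level metadata.
 * All values are in nits (cd/m^2). */
typedef struct {
    double max_cll;  /* brightest single pixel over the whole video */
    double max_fall; /* brightest frame-average light level over the video */
} ContentLightLevel;

static double max3(double a, double b, double c)
{
    double m = a > b ? a : b;
    return m > c ? m : c;
}

/* Update the metadata with one frame of interleaved linear RGB pixels (in nits).
 * The light level of a pixel is the maximum of its R, G and B components. */
static void cll_add_frame(ContentLightLevel *cll, const double *rgb, size_t num_pixels)
{
    assert(cll != NULL && rgb != NULL && num_pixels > 0);
    double sum = 0.0;
    for (size_t i = 0; i < num_pixels; i++) {
        double level = max3(rgb[3 * i], rgb[3 * i + 1], rgb[3 * i + 2]);
        if (level > cll->max_cll) {
            cll->max_cll = level;
        }
        sum += level;
    }
    double frame_average = sum / (double)num_pixels;
    if (frame_average > cll->max_fall) {
        cll->max_fall = frame_average;
    }
}
```

ffmpeg represents these two values as `AVContentLightMetadata` (fields `MaxCLL` and `MaxFALL`, stored as integers), alongside separate mastering display metadata describing the grading monitor.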

Color Gamut

All current HDR video standards use the same Rec.2020/BT.2020 color gamut, which defines the colors that can be shown by a video. Blender already supports this wider color gamut and can both read and export data within it, so no work needs to be done here.
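To illustrate what a gamut change means numerically, the sketch below converts linear Rec.709 RGB to linear Rec.2020 RGB using the matrix published in ITU-R BT.2087 (rounded to four decimals). Blender’s OpenColorIO configuration already performs such conversions, so this is purely illustrative; `rec709_to_rec2020` is a hypothetical name.

```c
#include <assert.h>

/* Linear Rec.709 RGB -> linear Rec.2020 RGB (both D65 white point),
 * coefficients from ITU-R BT.2087, rounded to four decimals. */
static const double REC709_TO_REC2020[3][3] = {
    {0.6274, 0.3293, 0.0433},
    {0.0691, 0.9195, 0.0114},
    {0.0164, 0.0880, 0.8956},
};

static void rec709_to_rec2020(const double in[3], double out[3])
{
    assert(in != out); /* the input must not be overwritten while it is read */
    for (int row = 0; row < 3; row++) {
        out[row] = REC709_TO_REC2020[row][0] * in[0] +
                   REC709_TO_REC2020[row][1] * in[1] +
                   REC709_TO_REC2020[row][2] * in[2];
    }
}
```

Each row sums to 1, so white stays white. A pure Rec.709 red becomes (0.6274, 0.0691, 0.0164) in Rec.2020: it is representable, but it is no longer the most saturated red available.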

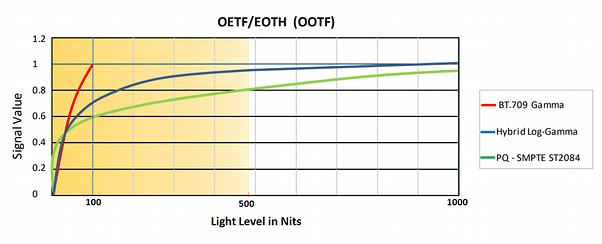

Transfer function

The transfer function defines brightness as a function of signal intensity. HDR standards use two different transfer functions. The first is the Perceptual Quantizer (PQ), standardized as SMPTE ST 2084, which maps the signal to absolute brightness up to a maximum of 10,000 nits. Most HDR content, however, is mastered to only 1,000 nits. PQ is not compatible with SDR displays.

The other is Hybrid Log-Gamma (HLG), a hybrid transfer function: at lower signal intensities it follows a curve close to the SDR gamma curve, while the upper part of the signal range uses a logarithmic curve that holds more information for highlights. Because of this, HLG is compatible with SDR displays, which map its brightness onto their usual SDR gamma curve.

These two transfer functions are the missing key components in Blender for HDR video support. Right now it is not possible to properly import and export video data using these transfer functions.

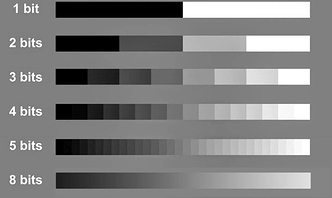

Bit Depth

Next is bit depth, which is the number of bits used to encode the signal intensity of each color component. 8-bit color depth gives 256 levels per component, and each added bit doubles the number of levels. HDR video must be exported with at least 10-bit color depth (1024 levels), though standards such as Dolby Vision define bit depths of up to 16 bits.

The higher the peak brightness the video is mastered to, the higher the bit depth must be to prevent color banding from showing up in the video. PQ requires 15-bit color depth to have no perceptible banding at a peak brightness of 10,000 nits.

Blender already supports 10- and 12-bit video encoding, which is enough for 1,000-nit HDR content.

Tasks

Encoding and decoding HDR

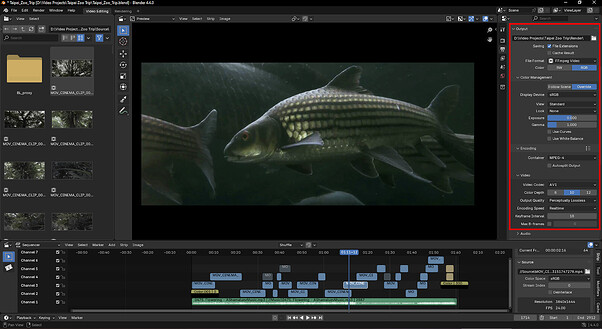

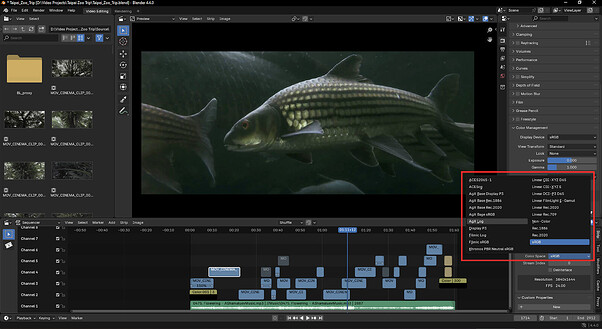

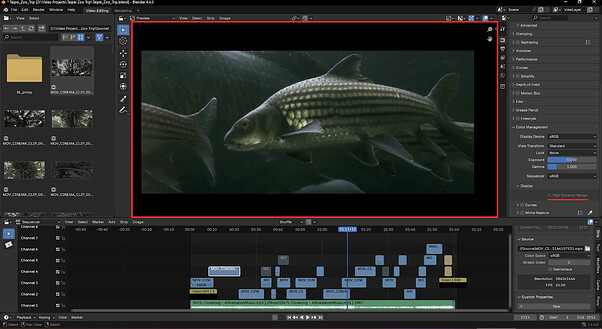

Right now, Blender does not support the PQ and HLG transfer functions when decoding video into, or encoding it from, Blender’s internal working color space. Supporting them requires modifying how Blender calls ffmpeg’s C API and adding metadata reading and writing. New UI is also needed so users can select the HLG and HDR10 formats when encoding. These options should be added within the encoding section highlighted by the red box.
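On the decode side, ffmpeg reports the signalled color information of a stream in `AVCodecParameters::color_primaries` and `color_trc`, whose enum values follow the ITU-T H.273 code points (for instance `AVCOL_PRI_BT2020` is 9, `AVCOL_TRC_SMPTE2084` is 16 and `AVCOL_TRC_ARIB_STD_B67` is 18). A minimal sketch of how those values could be mapped to an HDR format, written without ffmpeg headers so it stands alone; the enum and function names are hypothetical.

```c
#include <assert.h>

/* ITU-T H.273 code points (mirrored by ffmpeg's AVCOL_PRI_* / AVCOL_TRC_* enums). */
enum {
    CICP_PRIMARIES_BT709 = 1,
    CICP_PRIMARIES_BT2020 = 9,
};
enum {
    CICP_TRC_BT709 = 1,
    CICP_TRC_SMPTE2084 = 16,    /* PQ */
    CICP_TRC_ARIB_STD_B67 = 18, /* HLG */
};

typedef enum {
    VIDEO_SDR,
    VIDEO_HDR_PQ,
    VIDEO_HDR_HLG,
    VIDEO_UNSUPPORTED,
} VideoDynamicRange;

/* Hypothetical helper: classify a stream from its signalled primaries and
 * transfer characteristics. Anything that is not PQ or HLG is treated as SDR. */
static VideoDynamicRange classify_stream(int primaries, int transfer)
{
    assert(primaries >= 0 && primaries <= 255);
    assert(transfer >= 0 && transfer <= 255);
    if (transfer == CICP_TRC_SMPTE2084) {
        return primaries == CICP_PRIMARIES_BT2020 ? VIDEO_HDR_PQ : VIDEO_UNSUPPORTED;
    }
    if (transfer == CICP_TRC_ARIB_STD_B67) {
        return primaries == CICP_PRIMARIES_BT2020 ? VIDEO_HDR_HLG : VIDEO_UNSUPPORTED;
    }
    return VIDEO_SDR;
}
```

Streams that signal PQ or HLG with primaries other than BT.2020 are flagged as unsupported here only to keep the sketch small.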

Currently, the display color space and bit depth settings are located in separate areas, which makes it harder to decide where the transfer function/HDR format option should be added in the UI. It is important to start this conversation early on. Personally, I lean towards adding a transfer function drop-down menu to the color management submenu, with 8-bit color depth disabled when the HLG or PQ transfer function is selected.
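The proposed UI rule can be expressed as a small piece of logic. A minimal sketch, assuming a hypothetical `OutputTransfer` setting and 8/10/12-bit options; in Blender this would presumably be handled by RNA/UI callbacks, which are not modelled here.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical output transfer function setting. */
typedef enum { TRANSFER_SDR_GAMMA, TRANSFER_PQ, TRANSFER_HLG } OutputTransfer;

/* 8-bit output is disabled once an HDR transfer function is selected. */
static bool bit_depth_allowed(OutputTransfer transfer, int bits)
{
    assert(bits == 8 || bits == 10 || bits == 12);
    if (transfer == TRANSFER_PQ || transfer == TRANSFER_HLG) {
        return bits >= 10;
    }
    return true;
}

/* When the transfer function changes, replace an invalid bit depth with 10 bits. */
static int sanitize_bit_depth(OutputTransfer transfer, int bits)
{
    return bit_depth_allowed(transfer, bits) ? bits : 10;
}
```

Bumping an invalid 8-bit setting to 10 bits, rather than keeping it, means switching to PQ or HLG never leaves the output in a state that cannot hold HDR data.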

The main scene color management UI needs to be adjusted too, since the output color management settings follow the scene’s color management settings by default. This area is the top red box in the second image below.

Adding HDR Color spaces for video strips

Users must also be able to specify whether a video strip’s source uses a specific HDR color space.

In the images below, you can see the areas where the UI must be modified so users can select HDR color spaces for imported video strips (see the bottom red box in the image).

The most straightforward solution would be to add Hybrid Log-Gamma Rec.2020 (HLG) and PQ/SMPTE ST 2084 Rec.2020 (HDR10) to the list of selectable color spaces in the drop-down menus in these areas.

Displaying HDR and OpenColorIO Configuration

Once HDR decode and encode are implemented, and if time permits, HDR display will be the next major task. Blender currently draws the VSE by writing colors to the overlay texture of the GPU viewport, which is restricted to display-encoded (sRGB) values.

To display HDR when the High Dynamic Range display setting is enabled, the HDR content areas of the VSE window need to be drawn to the color texture, and the corresponding pixels in the overlay texture need to be marked as holes, so that the gpu_shader_display_transform.glsl shader merges the two textures in a way that preserves HDR values. Code from the 3D viewport and the Image editor will be used as a reference, since they can already display HDR content. See below for a visualisation of the relevant HDR content area.
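Why a hole preserves HDR values can be illustrated with premultiplied-alpha "over" compositing. This is a hypothetical CPU sketch, not the actual code of gpu_shader_display_transform.glsl; it assumes the overlay is premultiplied and that a hole is a fully transparent pixel.

```c
#include <assert.h>

typedef struct {
    float r, g, b, a;
} RGBA;

/* Hypothetical merge of an overlay pixel over a color texture pixel using
 * premultiplied-alpha "over": out = overlay + color * (1 - overlay.a).
 * A hole (rgb = 0, a = 0) lets the color value through unchanged, including
 * HDR values above 1.0; an opaque overlay pixel replaces it entirely. */
static RGBA merge_overlay(RGBA color, RGBA overlay)
{
    assert(overlay.a >= 0.0f && overlay.a <= 1.0f);
    float k = 1.0f - overlay.a;
    RGBA out = {
        overlay.r + color.r * k,
        overlay.g + color.g * k,
        overlay.b + color.b * k,
        overlay.a + color.a * k,
    };
    return out;
}
```

Because nothing in this merge clamps to 1.0, the only way HDR values can be lost is if UI elements are drawn on top of them, which is exactly what the hole prevents.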

Currently, Blender’s default OpenColorIO configuration only supports the BT.2020 gamut with an SDR gamma 2.4 curve as its transfer function. BT.2020 color spaces with HLG and PQ transfer functions need to be added.

Project Schedule

June - Preparation

- Start familiarizing myself with ffmpeg’s C API and Blender’s codebase while finishing the university semester.

- Learn Blender code standards.

- Set up a build environment.

Week 1 - Getting Started

- Meet with mentor(s) to discuss deliverables and implementation approaches.

- Start a discussion on how the new functionality could be exposed in the UI, in case there are differing opinions within the Blender development team, and keep the discussion going throughout the project.

- Start work on HDR decode.

Week 2-4 - HDR decode

- Add metadata reading support.

- Add decoding of HLG and PQ video data into Blender’s internal working color space.

Week 4-7 - HDR encode

- Add metadata writing support.

- Add support for encoding HLG and PQ video.

Week 8 - UI and documentation

- Add UI for selecting HDR formats in the color management and output encoding settings.

- Add UI for selecting HDR color spaces for video strips.

- Documentation for decode & encode.

Week 9-10 - HDR Display

- Write HDR content to the GPU viewport color texture.

- Stamp holes in the GPU viewport overlay texture.

- Add HLG and HDR10 specific color spaces to the default OpenColorIO configuration.

Week 10-12 (First 2 weeks of new semester) - Wrapping up

- Kept open for schedule overflow from previous weeks.

- Cleaning up the code and documentation.

Bio

Hi, I’m Xiao yi, a first-year MSc student in Game and Media Technology (Computer Science) at Utrecht University with a keen interest in computer graphics. I first used Blender when I was 12 and started using it seriously at 17, when it made me fall in love with computer graphics. Most of my experience with Blender is in 3D modelling and rendering, which I did for personal projects, clients and an indie game studio.

Since starting my computer science studies, I have been using Blender for personal projects, including video editing. Since the tail end of 2023, I have been getting into HDR content and wanted to edit my own HDR recordings, but found, to my frustration, that most popular editing software required expensive licenses to do so. So when I saw HDR video editing in the list of GSoC project ideas, I immediately had to apply!

In terms of relevant experience, I have used C++ for over 4 years, both in embedded software and in personal 3D renderer, game engine and physics solver projects. I have used ffmpeg via the CLI for basic projects, and I am familiar with color spaces through image processing and computer vision courses as well as personal video editing projects.

In preparation for GSoC, I have started researching the technical specifications of the color spaces used by different HDR standards, Blender’s internal color management, and artist workflows. For ffmpeg, I have found a tutorial series by Stephen Dranger on using ffmpeg’s C API and will use it to prepare for the project.

As a long time Blender user and fan, it is incredibly exciting to have the opportunity to contribute toward its development and help enable the production of more glorious HDR content!