Possibly. I did not work hard to optimize the code. The advantages of ADMM-PD cloth are that it’s implicit and has hard strain limiting. On a few simple high-resolution pinned meshes it performed a bit better (with default settings), but that’s the extent of my tests. It certainly could be used for the cloth brush.

For performance, yeah, it would be really interesting to see what can be done there. It seems to run slower or faster than legacy depending on what I’m doing. But interestingly, it appears to run really well with live scene alteration. With some TLC on performance, I think we might almost be able to use this comfortably in real time!

I’m running an R7 2700X, a 1080 Ti, and 48 GB of DDR4.

Nothing in Blender wants to put my GPU to use (besides renders), and when it’s using my CPU, it barely uses half the power of each thread. On that note, are there any GPU options down the road? I’m fond of playing with video game sims that use my GPU, and they blow Blender out of the water in terms of speed. I’m very hopeful to see Blender follow that in the future. But I’ve gotta know, why haven’t I seen anything simulation-related use the GPU in Blender?

Here are a few oddities from David Li

Fluid sim running on GPU in browser: http://david.li/fluid/

Soft body sim (dunno what it’s running on) in browser: https://www.adultswim.com/etcetera/elastic-man/

They seem so powerful that I’m distraught to find we aren’t able to do this with our actual tools. And I’ve never known why that’s the case.

A total understatement, hah. There’s a lot going on in the background to precompute reusable data (SDFs and factorizations), and certain behavior (e.g. moving around the collision obstacle) will require recomputation. It doesn’t have to be that way, but it’s how I’ve implemented it for now. You can expect me to elaborate more on this in the manual.

Absolutely. One of the most expensive components of the solve (the linear system) is trivially parallelizable. However, there is only benefit from GPU parallelization if the resolution is high enough to offset the cost of parallelization. That’s worth doing in the future.

Are you talking about GPUs? In which case yes. I used to do a lot of that back when I was working on microclimate sims. But certain things are a headache to implement on the GPU, not to mention support long term when APIs inevitably change. That’s what holds me back most of the time.

It’s also likely the sims used in video games are simplified models that are tailored to a specific task. They also have a bigger budget.

I wonder if two colliders intersecting might have something to do with the case there, at least initially:

This has already been fixed. I wasn’t properly indexing multiple obstacles from the Blender structs.

@mattoverby Will we have control over collision friction? I’m wondering if the collision settings will be taken into account before the end of the GSOC… I have more free time now to test but I miss friction a lot… Also, there’s some strange behavior when using self-collision and exaggerated deformations like falling from a very high place.

There is an approximate friction I can include. It’s not very accurate but better than nothing I suppose. I am knee deep in usability and bug fixes, so I don’t know how much time I will have to introduce new features.

You’ll have to show me a gif of what you mean. But it wouldn’t surprise me if it’s the result of tunneling (a vertex passing through an obstacle and being projected to a bad location). This artifact can be alleviated by increasing the number of substeps. The way collisions are detected, it’s not really set up for super exaggerated deformation. The elastics can handle extreme deformation, e.g. you can stretch a neo-Hookean solid to infinity in ADMM-PD whereas other solvers will crash. But the trade-off is less accurate deformation sometimes.
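To make the tunneling point concrete, here is a minimal sketch of discrete collision detection on a single point moving toward a thin wall. It is not the solver's code: the function `simulate` and all of its parameters are made up for illustration. The only thing it shows is that a position tested once per (sub)step can jump clean over a thin obstacle, and that more substeps shrink the jump.

```python
def simulate(velocity, dt, substeps, wall_x=1.0, wall_thickness=0.05, steps=10):
    """Advance a point along x and test for contact at discrete times only.

    Hypothetical toy model: the wall occupies [wall_x, wall_x + wall_thickness].
    Returns a (status, position) pair.
    """
    x = 0.0
    h = dt / substeps  # smaller substep -> shorter jump per collision test
    for _ in range(steps):
        for _ in range(substeps):
            x += velocity * h
            # Discrete detection: only the end-of-substep position is checked,
            # never the path travelled in between.
            if wall_x <= x <= wall_x + wall_thickness:
                return "hit", wall_x  # project back onto the wall surface
    if x > wall_x + wall_thickness:
        return "tunneled", x  # ended up behind the wall without any contact
    return "free", x


if __name__ == "__main__":
    # One test per frame: the point jumps from about 0.9 to about 1.2 and misses the wall.
    print(simulate(velocity=3.0, dt=0.1, substeps=1)[0])
    # Ten substeps per frame: the point moves 0.03 per test and lands inside the wall.
    print(simulate(velocity=3.0, dt=0.1, substeps=10)[0])
```

In this toy setup the single-substep run misses the wall while the ten-substep run catches it, which is the same reason faster motion or thinner geometry needs more substeps.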

Conjugate Gradient VS LDL^T self collision.

I built the branch just now, so this test is with the latest build.

EDIT: After a test with 2min sub steps, both became better, but the ears still have the same problem.

Ah. This is not what I expected, but I have an idea of what could be happening. Have you seen this problem with other meshes?

Funny thing about the monkey mesh. It’s not closed or manifold, I think. I know that it is at least not closed. As a result, the self detection algo is not guaranteed to work (but it usually does anyway, so I allow it to try). I think we’re seeing an artifact of that, but I could be wrong. I’ll see if I can reproduce the error on my side and test other meshes as well.
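For readers wondering what "closed" means in practice, a common test is to count how many faces share each edge: in a closed, edge-manifold surface every edge borders exactly two faces. The sketch below is only an illustration of that idea on plain index lists; the function names are hypothetical, it is not how the branch does its check, and it does not test for non-manifold vertices.

```python
from collections import Counter


def edge_face_counts(faces):
    """Count how many faces use each undirected edge.

    `faces` is a list of vertex-index tuples (triangles, quads, or n-gons).
    """
    counts = Counter()
    for face in faces:
        n = len(face)
        for i in range(n):
            a, b = face[i], face[(i + 1) % n]
            counts[(min(a, b), max(a, b))] += 1  # ignore edge direction
    return counts


def is_closed(faces):
    """True when every edge is shared by exactly two faces.

    A count of 1 is a boundary edge (a hole); 3 or more is a non-manifold edge.
    """
    return all(c == 2 for c in edge_face_counts(faces).values())


if __name__ == "__main__":
    tetrahedron = [(0, 1, 2), (0, 3, 1), (1, 3, 2), (0, 2, 3)]
    print(is_closed(tetrahedron))      # closed surface
    print(is_closed(tetrahedron[:3]))  # one face removed leaves boundary edges
```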

If that does turn out to be the problem I’ll probably just disable self collisions for such meshes, like I do with obstacle collisions.

Hello and congratulations! It’s a really impressive project!

Just a quick question, can we have vertex groups for self collisions instead of disabling it completely? This would give some freedom and more control for fine-tuning results.

Best Regards!

Albert

Yes, there is already a vertex group for self collisions. I added that sometime last week.

Thank you for your great contribution!

I did a test with manifold meshes. The manifold monkey is much better, but in the ears, the thinner part, there is still a battle between the vertices. Manifold meshes are better.

The manifold teapot is subdivided with 1 level, causing issues… What I think, as an end user, is that an option to set the self collision distance might help, because the issue seems to be in the thinner parts of the objects.

Hmm I appreciate you trying that out. I did just commit a change to disable self collisions if the mesh is not closed. But you’re right there are still some artifacts that are occurring at thin regions.

A slight issue with this. There is no self collision distance.

However, I can make the self collision detection more robust at the cost of run time (well, a bit of a slowdown for high resolution surface meshes, should be fine for low res).

Could you send me those teapots and monkey so I can test this more quickly on my end? Maybe just the blend file, if you don’t mind.

This seems to be a good decision. I already tested and got this warning.

This is not only a good plan but also a “must have” feature, self collision is important.

Already sent. Note that changing the linear solver from CG to LDL^T solves the teapot issues but not those of the manifold monkey…

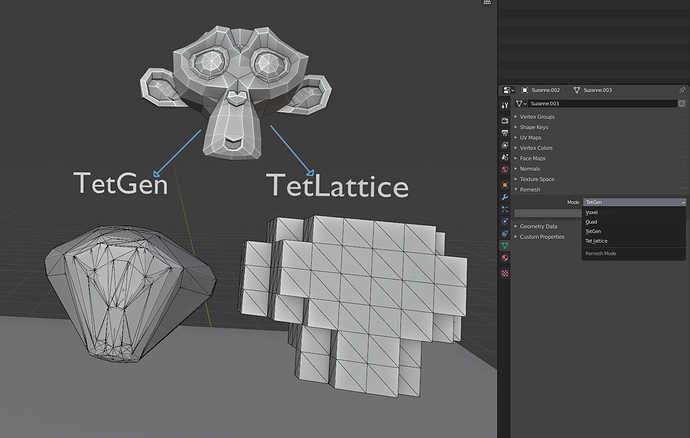

I didn’t notice these nice new options in the remesh menu! Awesome to have them, especially for use with the old legacy softbody method. @mattoverby is it possible to have an option to delete interior faces when remeshing with these new options? Somehow get a result with only an outer surface, but with the inner connections kept as edges only?

And thanks for this! For me, these new options alone are a huge contribution!

I did notice that, which was interesting. That is actually the reason why I included the LDL^T linear solver as a baseline. If the LDL^T solver is having issues, then there is a problem with the constraints and it’s not just non-convergence in the global step.
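As a rough illustration of why a direct solver makes a useful baseline, the sketch below solves the same small symmetric positive definite system with an LDL^T factorization and with conjugate gradient. It is a dense, pure-Python toy with hypothetical function names, not the solver's implementation. CG stopped early returns only an approximation, while LDL^T gives the solution up to round-off, so if an artifact survives the switch to LDL^T it cannot be blamed on an unconverged linear solve.

```python
def ldlt_solve(A, b):
    """Solve A x = b for symmetric positive definite A via A = L D L^T."""
    n = len(A)
    L = [[0.0] * n for _ in range(n)]
    D = [0.0] * n
    for j in range(n):
        D[j] = A[j][j] - sum(L[j][k] ** 2 * D[k] for k in range(j))
        L[j][j] = 1.0
        for i in range(j + 1, n):
            s = sum(L[i][k] * L[j][k] * D[k] for k in range(j))
            L[i][j] = (A[i][j] - s) / D[j]
    y = [0.0] * n
    for i in range(n):  # forward substitution: L y = b
        y[i] = b[i] - sum(L[i][k] * y[k] for k in range(i))
    z = [y[i] / D[i] for i in range(n)]  # diagonal solve: D z = y
    x = [0.0] * n
    for i in reversed(range(n)):  # back substitution: L^T x = z
        x[i] = z[i] - sum(L[k][i] * x[k] for k in range(i + 1, n))
    return x


def cg_solve(A, b, max_iters, tol=1e-10):
    """Unpreconditioned conjugate gradient, stopped after max_iters steps."""
    dot = lambda u, v: sum(ui * vi for ui, vi in zip(u, v))
    x = [0.0] * len(b)
    r = list(b)  # residual b - A x for the zero initial guess
    p = list(r)
    rs = dot(r, r)
    for _ in range(max_iters):
        if rs ** 0.5 < tol:
            break
        Ap = [dot(row, p) for row in A]
        alpha = rs / dot(p, Ap)
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * api for ri, api in zip(r, Ap)]
        rs_new = dot(r, r)
        p = [ri + (rs_new / rs) * pi for ri, pi in zip(r, p)]
        rs = rs_new
    return x


def laplacian_1d(n):
    """Small SPD test matrix: 2 on the diagonal, -1 on the off-diagonals."""
    return [[2.0 if i == j else -1.0 if abs(i - j) == 1 else 0.0
             for j in range(n)] for i in range(n)]


if __name__ == "__main__":
    A, b = laplacian_1d(8), [1.0] * 8
    exact = ldlt_solve(A, b)
    for iters in (1, 50):
        approx = cg_solve(A, b, max_iters=iters)
        err = max(abs(a - e) for a, e in zip(approx, exact))
        print(iters, "CG iterations, max difference from LDL^T:", err)
```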

It is possible, but I am trying to budget my time to fix the critical bugs within Blender and solver issues on certain meshes. I actually do want the interior faces, that way we can “cut” the mesh in half and inspect the interior (which is especially helpful for TetGen). The remesher operation is just for a quick visual inspection. There are a number of other things I wanted to add to the remesher, like changing input parameters, e.g. lattice subdivision, TetGen options.

I will have a section on this in my final report. I’ve spent a large amount of time this summer trying to robustly go from some input surface mesh -> something that can be deformed, self collided with, etc… And far less time than I hoped optimizing the speed and utility of the solver. It would have made my life so much easier if there was a Blender tet mesh object type! Then all of the meshing/remeshing operations could be done outside of the solver code, and the solver’s only job is to simulate it. That would be a necessary change if we want better soft body sims in the future.

As an end user, I would much prefer a slow but accurate (0 artifact) result than a super fast but buggy one. I say don’t be afraid of adding accuracy-related code even if it slows down the solver, and then give the end user the choice to pick which one to use.

(Apology in advance, if this is already the case, I mainly based my comment on the examples given in the 2 soft body related threads).

Keep up the good work. I am a huge fan of simulations in general, and this new solver is a great addition.

Unfortunately there is no choice of collision response that will be artifact free. The fact that we are approximating the deformation through a low-resolution lattice alone introduces artifacts, regardless of the method used for collision detection.

The culprit is that we are doing online discrete collision detection. This has some benefits: it is fast, we can do large time steps or even quasistatic sims, and we can begin the time step in a tangled or otherwise intersecting state. But this comes with downsides: we can get tunneling if things are moving too fast, and sometimes flat and thin regions of embedded meshes aren’t going to be resolved adequately.

Let me rephrase it then:

As an end user, I would much prefer a slow but accurate (as few artifacts as possible) result.