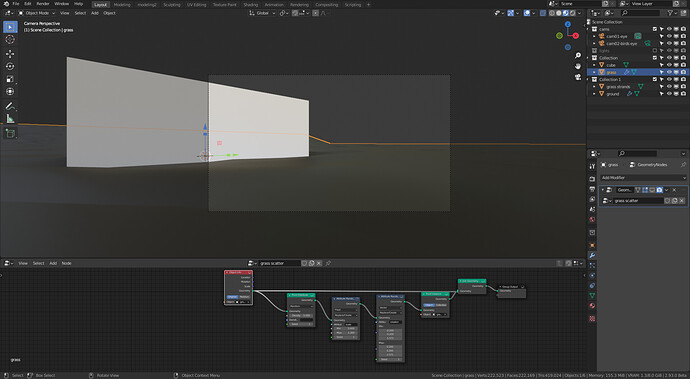

Thanks for the updated example. I tried a new scene with default settings, scattering a generic cube on a subdivided, displaced plane, and it seems to work, so something must be wrong in that file.

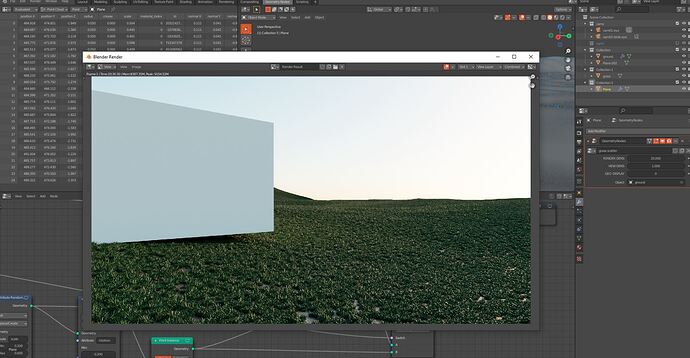

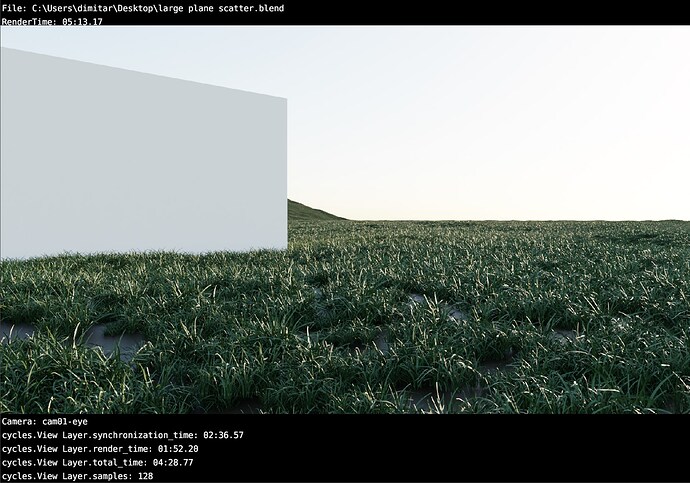

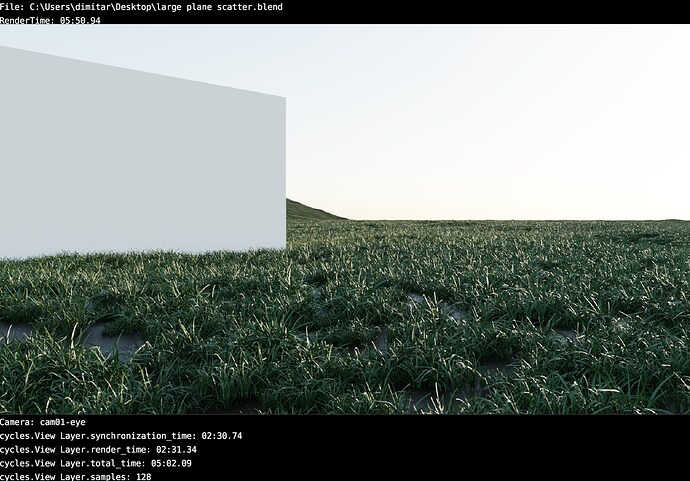

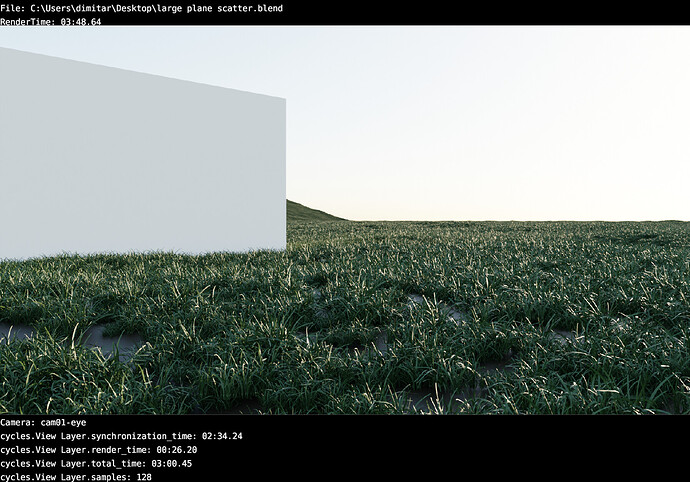

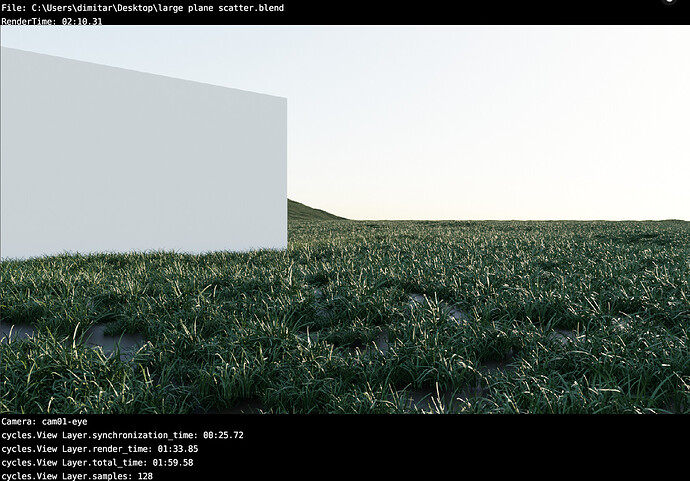

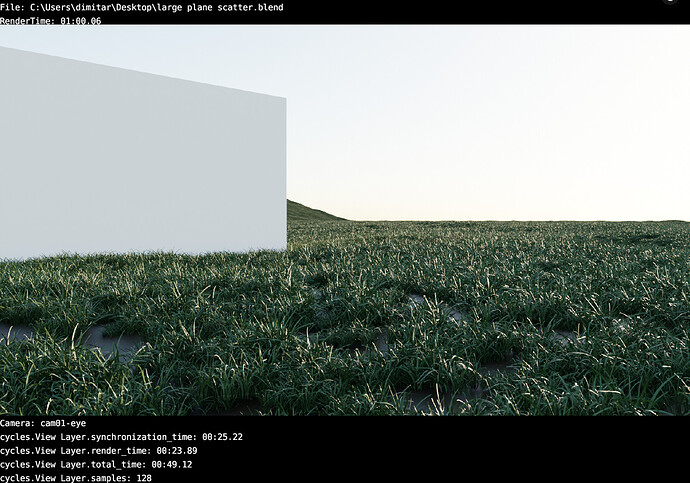

It also works with your scene if the geometry nodes are on a different object than the displaced one. But this image shows the main problem with rendering instances from geometry nodes, and that’s the translation time. It has four times the density of your scene, around 32 million instances. The translation time is 25 minutes and the render time is around 8 minutes. That’s not very practical for heavy production scenes.

Hello, I meant that I’ve enabled the modifiers for rendering only, not in the viewport, and that the rendering process with the modifiers on crashed Blender.

I tried to render your scene on CPU and precomputation took “only” 2 minutes on a Ryzen 5 2600. Maybe there is some issue with rendering too many instances on GPU right now. Can you do a test on CPU with your machine?

Also, I tried recreating this scene in another program and the parsing time was surprisingly similar at 1m50s. Maybe Embree is the limiting factor here.

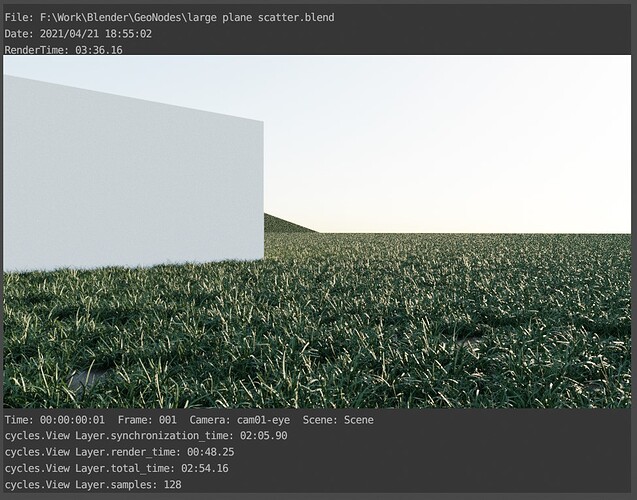

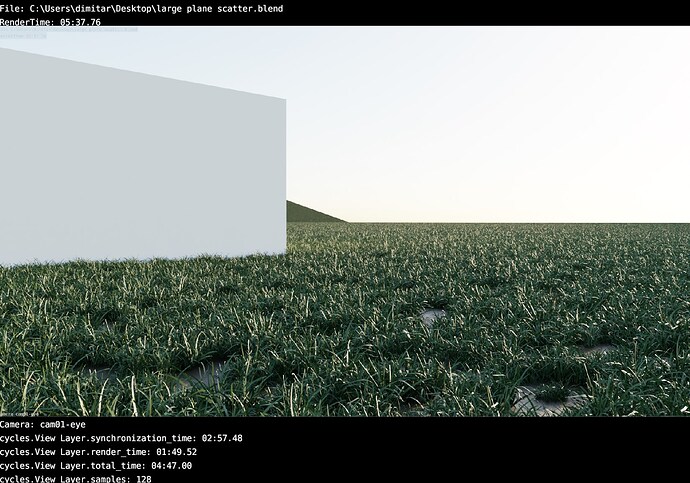

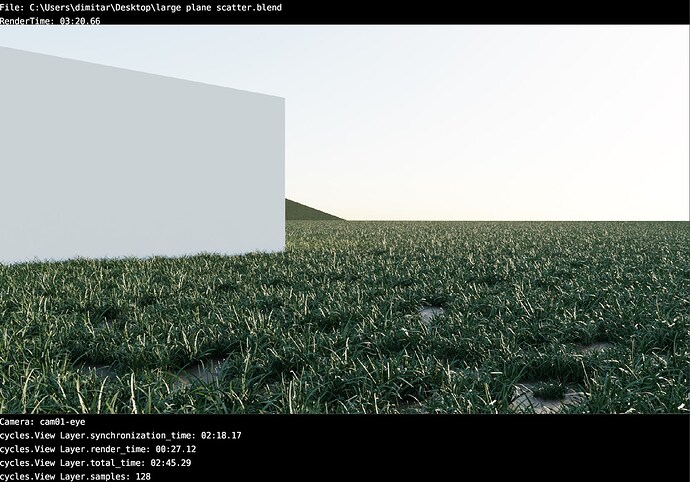

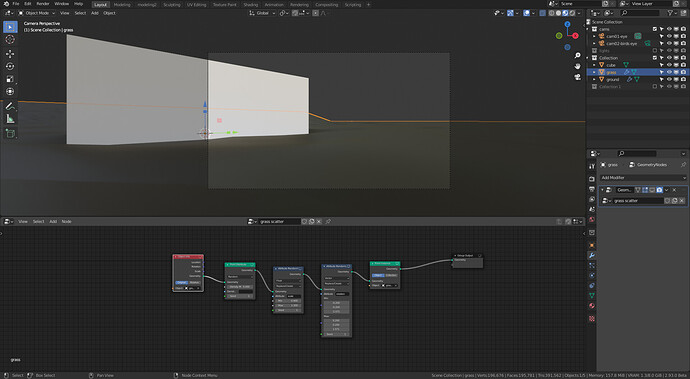

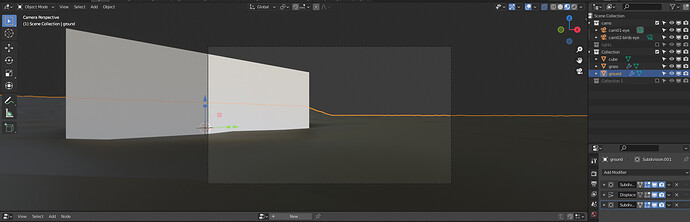

Stress Test comparisons

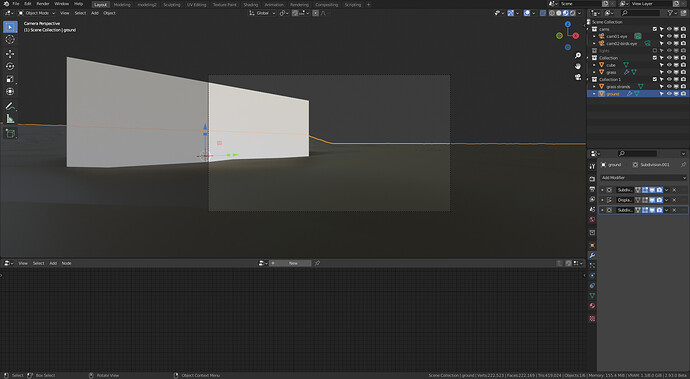

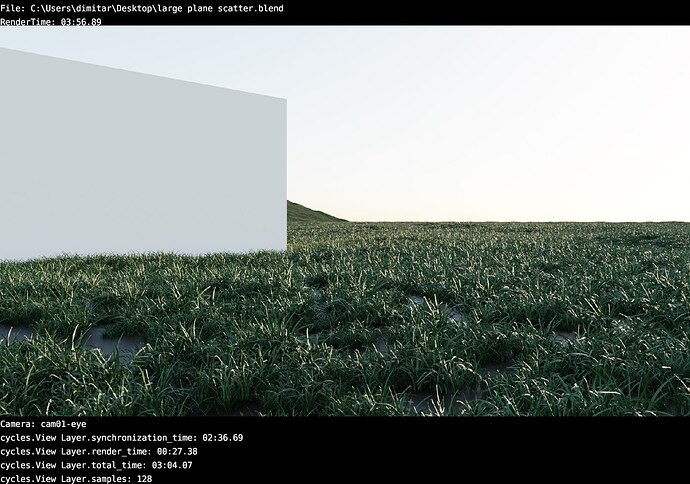

Here are some tests based on the feedback. Displace and geonodes on the same object crashes, yet I’ve tested displace with geonodes in simpler examples and it works. The suggestion to use a separate ground object seems to work well. Dynamic Paint-based camera culling really improves rendering times. I would have thought Cycles takes better care of what’s actually in the render view, but it seems it doesn’t at the moment.

Configurations tested, each on CPU and GPU:

- No subdiv, no displace (simple plane)
- Separate ground object w/ subdiv & displace combined
- Separate ground w/ subdiv & displace not combined
- Camera cull (dyn paint) with separate ground w/ subdiv & displace not combined

So, dynamic paint it is, although I find it quite counterintuitive to have to move a few frames forward in order to sync the viewport display with what will be rendered. This seems more like a dynamic paint issue than a geonodes issue.

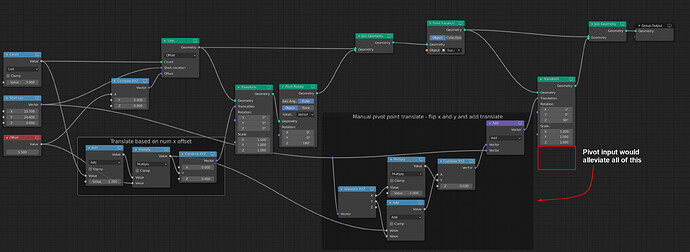

Pivot input in transform node

On a completely separate note, we really need a pivot point vector input in the transform node.

This example took much longer than I thought it would because I had to flip x and y in my vector transform when rotating, as the translate seems to occur after the rotate in the internal node operations. That’s the only way I can explain to myself the vector I need to input for this to work properly.
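To make the workaround concrete, here is a minimal sketch in plain Python (not Blender's API; all function names are hypothetical). It assumes, as described above, that the node rotates about the origin first and translates afterwards. Under that assumption, rotating a point `v` about a pivot `p` equals `R(v) + (p - R(p))`, so the translation to feed in is `p - R(p)`. Only rotation about Z is shown to keep it short.

```python
import math

def rotate_z(v, angle):
    """Rotate a 3D vector about the Z axis through the origin (angle in radians)."""
    x, y, z = v
    c, s = math.cos(angle), math.sin(angle)
    return (c * x - s * y, s * x + c * y, z)

def pivot_translation(pivot, angle):
    """Translation that turns 'rotate about origin, then translate'
    into a rotation about `pivot` (assumed order of operations)."""
    rotated_pivot = rotate_z(pivot, angle)
    return tuple(p - r for p, r in zip(pivot, rotated_pivot))

def rotate_about_pivot(v, pivot, angle):
    """Apply the rotation first, then the compensating translation."""
    rotated = rotate_z(v, angle)
    offset = pivot_translation(pivot, angle)
    return tuple(a + b for a, b in zip(rotated, offset))
```

For a 90° turn about Z, the offset for a pivot `(px, py, pz)` works out to `(px + py, py - px, 0)`, which mixes the x and y components. That may be why the required input vector looks flipped.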

It’s cool though. At the moment, it’s like driving a 1985 Fiat Panda on off-road mountain tracks: anyone who can manage that will only manage better with a 2020 Land Rover Defender.

The “Attribute Proximity” node with faces gives an absolute distance, so it is not possible to work on only one side at a time.

It would be pretty handy to be able to use the raw signed distance from the face, with positive and negative values.
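To illustrate the difference, here is a minimal sketch in plain Python (not Blender's API; the function name is hypothetical) of a signed distance from a point to the plane of a face. It measures against the infinite plane through the face, not the bounded face itself, which is a simplification. The sign tells you which side of the face the point is on; taking `abs()` of the result gives the unsigned value.

```python
import math

def signed_distance_to_plane(point, face_point, face_normal):
    """Signed distance from `point` to the plane through `face_point`
    with normal `face_normal`. Positive on the side the normal points to."""
    length = math.sqrt(sum(n * n for n in face_normal))
    if length == 0.0:
        raise ValueError("face_normal must be non-zero")
    offset = [p - q for p, q in zip(point, face_point)]
    # Project the offset onto the unit normal.
    return sum(o * n for o, n in zip(offset, face_normal)) / length
```

With the sign available, a selection such as "only points in front of the face" becomes a simple `distance > 0` test.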

Cycles rendering is actually quite well optimized. If you look at your examples with and without camera culling, the render times are really close. Cycles cannot automatically cull off-camera geometry, because it might still appear in reflections, cast shadows, etc. Also, it seems that scene preparation is entirely single-threaded right now, so any parallelization there would make a huge difference on modern processors.

Cycles-x! Cycles-x! Cycles-x!

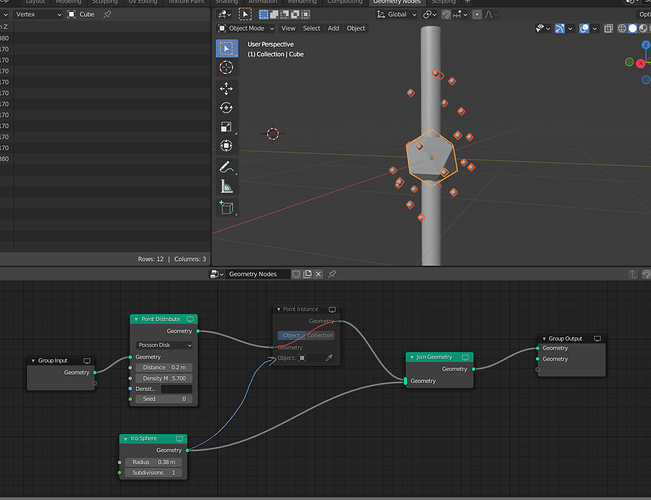

Is there a way to use the geometry from the primitive nodes to be cloned by the point instance node or similar?

With the addition of the Switch node, I am once again confused why pretty much all the nodes have a simple selection between data types, but specifically for the Math node we need separate Attribute Math and Attribute Vector Math nodes. Can someone explain this? Why is there this weird exception when literally all the nodes other than Attribute Math show that it’s not necessary to have a vector-specific version, since there’s a data type dropdown menu anyway…?

Unfortunately, this is a current limitation.

Do you mean combining math and vector math with a dropdown menu selection? This is interesting, I never thought about it that way.

I asked the same question not long ago, and someone quoted this to me

“Eventually” sounds very vague, but I guess we can only wait.

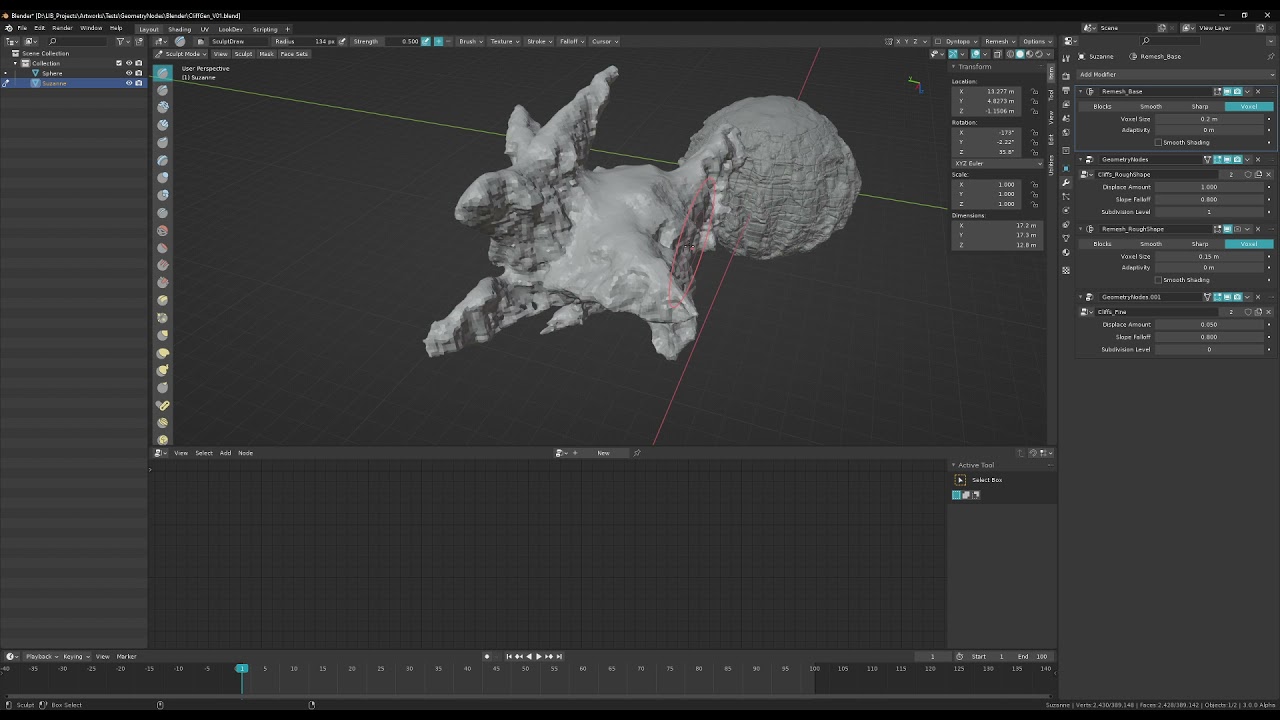

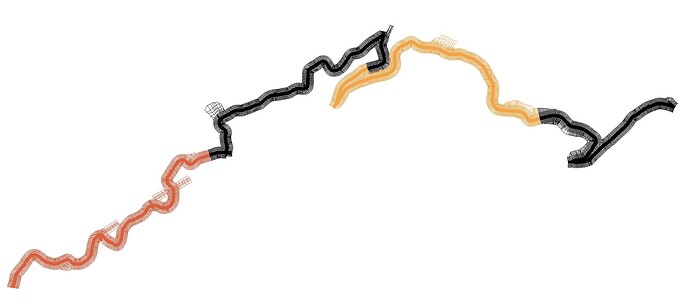

I tried to build a procedural cliff generator using the geo nodes, and while it is possible, the amount of mental overhead needed to deal with the nodes in their current state is just too much. Some people often argue against node-based programming, claiming that it’s inferior to text-based programming, and the limitations of the current design give them a lot of ammunition for their arguments. It combines the worst of both worlds: having to constantly type words into attribute fields, and having to interact with a clunky, too high-level UI on the nodes to do even the most essential operations.

I really hope this will be remedied by actually having something to plug into those bright blue node slots, so I won’t have to build giant, long snakes of Attribute Vector Math nodes and will instead be able to use them the same way I can in the Shader Editor.

Here’s a thingy that more or less allows you to sketch cliffs. But the performance isn’t great at the moment unfortunately, and remeshing also needs to happen in modifiers outside of the geo nodes:

I would also attach the .blend file, but this forum doesn’t allow it (nor zip files).

For blender file attaching, you can use Pasteall I think.

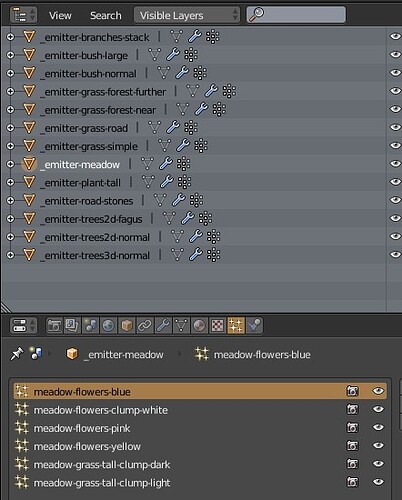

What feedback is your performance test based on? Nobody who complained about the performance is going to use GNodes for projects consisting of a single plane with a single, evenly distributed grass asset. Try to create some larger, natural-looking scenery using Blender, and then let us know how “it works” for you.

The fact is Blender currently doesn’t offer any smooth workflow for such tasks. Also, considering that Blender 3.0 is even slower than 2.79 in the basic features which GNodes relies on (like vertex / weight paint), I am not sure we can expect this to change any time soon (I would love to be wrong here!).

My testing of GNodes looks like this - I download a nightly build every week, create a 1M mesh and try to vertex / weight paint it. If it is laggy like in the current version, I know I can stop testing because GNodes won’t work for me.

Does anybody know whether there are plans for fixing / improving this basic stuff which GNodes relies on? Shouldn’t this have the highest priority?

Geometry Nodes has always been presented as a tool for scattering, and scattering means distributing assets over a large area (otherwise you can place them manually), and the distribution needs to be controlled precisely and smoothly (using weight / vertex paint). This very basic task is currently almost impossible to manage.

But don’t get me wrong, I certainly appreciate all the development; it definitely has great potential.

I am not sure what you are getting at here. I mentioned this is a stress test with about the right number of particles. It’s not meant to be realistic looking, which I thought the white box would help convey. These tests are meant to see how many instances are too much and the impact on render times, really.


With the new Switch node you can visualise fewer instances so your viewport stays more performant, and then use the Is Viewport node to have a different density when rendering.
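The logic of that setup can be sketched in plain Python as follows. This only mimics feeding an Is Viewport boolean into a Switch node; it is not Blender's API, and the function name and default densities are made-up values for illustration.

```python
def instance_count(area, is_viewport, viewport_density=1.0, render_density=50.0):
    """Pick a scatter density depending on whether we are in the viewport
    or rendering, then estimate the resulting number of instances.
    Densities are hypothetical, in instances per unit area."""
    density = viewport_density if is_viewport else render_density
    return int(area * density)

# Same ground area, very different instance counts.
viewport_instances = instance_count(100.0, is_viewport=True)
render_instances = instance_count(100.0, is_viewport=False)
```

The trade-off is that the viewport no longer shows exactly what will be rendered.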

I imagine a lot of the performance bottlenecks are general Blender bottlenecks in dealing with many objects rather than GN-specific bottlenecks?


The viewport performance even with a high number of particles is not bad (in Object Mode) and it can be improved easily by reducing the view distance - this is an acceptable solution to me because it doesn’t change the look of the scene. As our work is all about precision (we create reality-based “game sceneries” - rally stages), I need the preview to be as close to the in-game result as possible (so proxies or reduced density wouldn’t work for me). Maybe LODs would also do the job (hopefully Blender will get the feature in the future, I don’t want to base our work on 3rd party addons).

So in our case the particle preview is not a problem - could be better, but OK.

The problem is with editing things - the distribution of particles (Weight Paint), adjusting particle properties, editing emitter geometry / UV mapping (especially if you have some modifiers assigned - like ShrinkWrap). Then everything is really, really slow.

Currently we have to split the projects into small sections to make sure the ground is ~100k max.

Then we need to move the particle systems to separate emitters (editing a larger emitter with 20 particle systems is impossible), while 1 emitter can have just a few particle systems assigned (so we end up with something like 15 duplicated grounds) … then I am able to achieve ~5fps when Weight Painting.

And of course if I change anything in the ground, I have to update all the emitters to match the ground again.

OK, maybe our work is a little specific, but I think the question should be whether it is extreme enough to justify such workarounds. I believe that in 2021 we should be able to at least edit the weights of a 1M mesh smoothly.

Or is there any other (faster) method planned for controlling the distribution with GNodes?

Is there anybody in the know about the design decisions pertaining to instanced objects and their materials?

As it stands now, an instanced object loses its material as soon as it gets ‘realized’ instead of linked, though it’s not really obvious to the user which actions cause that to happen.

For example, if you instance an object (with Point Instance) and you add a Point Rotate after the Point Instance, you lose the material (see for example my bug report). Now this is of course not very useful to do, but I can’t tell whether there are other situations which would cause your geometry to become real and thus lose its material.

Also, if you apply the GN modifier, you lose all material info for all instanced objects. Which also seems undesirable to me?

The boolean modifier does the same, but there the materials are never used, not even when the modifier is not yet applied (which IMHO is a shame in the boolean modifier as well, as I’d like the option to combine the materials there too), so it’s less surprising.

I’m sorry if this has been discussed before. I tried searching the topic, but either it hasn’t really been discussed (unlikely) or I used the wrong search terms (probably).

edit: I found another situation where this behaves very confusingly. If you add a curve modifier (or maybe any modifier?) after the GN modifier, the instanced objects get realized and lose their material. I’d consider this a bug. (I’ll update my bug report.)

Any news on point instancing editable geometry?