That would be essential!

The problem is that such a method needs vertices.

I’m almost sure it’s possible to do this within the nodes.

How can we make sure there’s no particle on the same spot?

When we create custom positions for our particles, it’s quite common for some of them to end up at the same position.
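For reference, here is a minimal sketch of what a merge-by-distance step over a point cloud could look like, written in plain Python rather than Blender’s API. It assumes points are `(x, y, z)` tuples and hashes them into a uniform grid whose cell size equals the threshold, so only the 27 neighbouring cells need checking. The function name and the “keep the first point of each cluster” policy are illustrative assumptions, not how any existing node behaves.

```python
import math
from itertools import product

Point = tuple[float, float, float]


def merge_by_distance(points: list[Point], threshold: float) -> tuple[list[Point], list[int]]:
    """Keep the first point of every group closer than `threshold`.

    Returns the kept points and, for each input point, the index of the
    kept point it was merged into.
    """
    if threshold <= 0:
        raise ValueError("threshold must be positive")
    grid: dict[tuple[int, ...], list[int]] = {}
    kept: list[Point] = []
    remap: list[int] = []
    for p in points:
        cell = tuple(math.floor(c / threshold) for c in p)
        match = None
        # A point within `threshold` can only sit in this cell or a neighbour.
        for offset in product((-1, 0, 1), repeat=3):
            neighbour = tuple(c + o for c, o in zip(cell, offset))
            for idx in grid.get(neighbour, ()):
                if math.dist(p, kept[idx]) <= threshold:
                    match = idx
                    break
            if match is not None:
                break
        if match is None:
            # No nearby kept point: this one starts a new group.
            match = len(kept)
            kept.append(p)
            grid.setdefault(cell, []).append(match)
        remap.append(match)
    return kept, remap
```

The `remap` list is what would let other per-point attributes follow the merge (for example by averaging all values that map to the same kept index).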

Is there any task already created for a point merge node?

Hi, can you elaborate a bit further on that? What do you need to “pull out”? What for?

Hi, is there a way to manipulate shape keys in GNodes?

That would be awesome.

Is it feasible to make shape keys work like vertex group attributes? It would be awesome to have a shape key attribute, or some way of controlling shape keys with Geometry Nodes.

Question for the developers: would it be possible to make the Is Viewport node treat Cycles interactive shading mode as ‘render’ rather than ‘viewport’? I understand why the current behaviour is desirable for Eevee, but Cycles interactive mode can handle the same level of complexity as the final render. Seeing the full representation of what the GN modifier generates would be very helpful during scene assembly, because most of the rendering is done in interactive mode. Or are you planning a separate Is Cycles node?

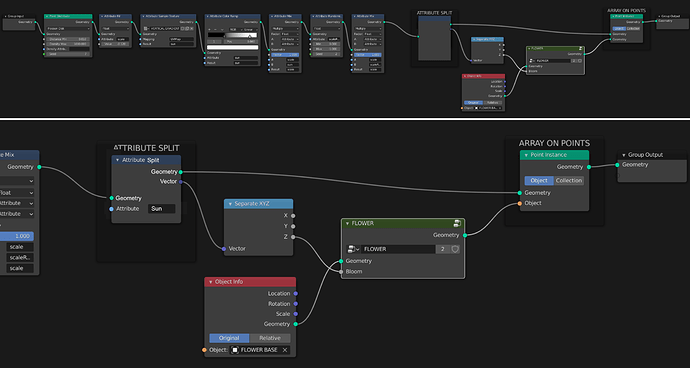

I’m not sure how well this fits into the existing project, but for my workflow this is what I expected to be able to do, and then found I couldn’t. It’s from the “flowers blooming in the sun” test I posted further up the thread.

What I wanted, and often want to do with procedural tools, is to scatter generated models across generated points, where the values at each point (or a list of values, e.g. from a Number Range node) control certain attributes of the generated geometry.

Excuse the rough mockup, but this is what I wanted the process to look like:

Essentially, I wanted to take the Sun attribute, which is defined earlier by the Texture Sample node (an image sequence of baked direct lighting maps). I’m not sure how attributes are stored, whether they’re all vectors or whether the type varies, but if they’re a mix of scalar and vector, perhaps there could be a versatile socket that switches between scalar / XYZ / RGBA depending on the selected attribute. Anyway, I wanted the 0-1 value from the Sun attribute, as a scalar, to go through the maths I’d set up for moving the flower petals, and then have the result arrayed over the points.

I’m not 100% sure that’s the right order. Perhaps it should array across the points first and then use the sun values afterwards to control how open each flower is, etc. That’s just the workflow I was looking for in that project, anyway.

I think this might come down to a misunderstanding about the way geometry nodes are evaluated. In shader nodes, the entire node tree is evaluated potentially millions of times, for example once whenever a light ray hits a surface. The node tree is compiled and then used later by Cycles or EEVEE. In geometry nodes it’s quite different: the entire node tree is calculated only once, and each node operates on every single element of the geometry at its point in the tree. This is sometimes called “data flow”.
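A toy model of the difference, in plain Python (the node functions are hypothetical and this is not how Blender is implemented): the shader-style tree is a single function called once per sample, while the geometry-style tree runs once and each node processes the whole list of values.

```python
def shader_tree(u: float) -> float:
    # Shader style: the whole tree is one function, called once per sample.
    return min(1.0, u * 2.0)


def node_multiply(values: list[float], factor: float) -> list[float]:
    # Geometry style: each node is called once and handles every element.
    return [v * factor for v in values]


def node_clamp(values: list[float], upper: float) -> list[float]:
    return [min(upper, v) for v in values]


def geometry_tree(attribute: list[float]) -> list[float]:
    # The tree runs once; whole lists flow from node to node.
    scaled = node_multiply(attribute, 2.0)
    return node_clamp(scaled, 1.0)


weights = [0.1, 0.3, 0.7]
per_sample = [shader_tree(u) for u in weights]  # tree evaluated three times
data_flow = geometry_tree(weights)              # tree evaluated once
```

Both produce the same numbers here; the difference is where the loop over elements lives, which is why a node in the data-flow model always sees a whole list rather than one value.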

An attribute is basically a list. The length of the list might be the number of vertices, the number of face corners, faces, edges, etc. In other words, every vertex has a “weight” value, etc. The attributes are tied to the geometry because of the strong connection between the mesh topology and the attribute list. So splitting a single value from an attribute and passing it around doesn’t really make sense in the context of the evaluation system.
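To make “an attribute is a list tied to the geometry” concrete, here is a minimal toy model (the `Geometry` class and its methods are hypothetical, not Blender’s data structures): every attribute lives on a domain, and its length must match that domain’s element count.

```python
from dataclasses import dataclass, field


@dataclass
class Geometry:
    # Element count per domain, e.g. {"point": 8, "face": 6}.
    domain_sizes: dict[str, int]
    # name -> (domain, one value per element of that domain)
    attributes: dict[str, tuple[str, list]] = field(default_factory=dict)

    def add_attribute(self, name: str, domain: str, values: list) -> None:
        expected = self.domain_sizes[domain]
        if len(values) != expected:
            raise ValueError(
                f"{name!r} needs {expected} values on {domain!r}, got {len(values)}"
            )
        self.attributes[name] = (domain, list(values))

    def values(self, name: str) -> list:
        return self.attributes[name][1]


quad = Geometry({"point": 4, "face": 1})
quad.add_attribute("weight", "point", [0.0, 0.5, 1.0, 0.25])
```

`quad.values("weight")[2]` only means something as “the weight of vertex 2”; once the bare number `1.0` is pulled out on its own, nothing ties it back to an element.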

That said, there will be ways to transfer attributes from one geometry to another, but that will probably happen through a dedicated node.
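One common way such a transfer could work is a nearest-point lookup: each target element takes the value of the closest source element. The sketch below is a brute-force version under that assumption, with a hypothetical function name; it is not a description of the planned node.

```python
import math

Vec3 = tuple[float, float, float]


def transfer_nearest(src_positions: list[Vec3], src_values: list,
                     dst_positions: list[Vec3]) -> list:
    """Give each destination point the value of its nearest source point."""
    if len(src_positions) != len(src_values):
        raise ValueError("one source value per source position is required")
    if not src_positions:
        raise ValueError("source geometry is empty")
    result = []
    for p in dst_positions:
        # Brute force, O(len(src) * len(dst)); a real tool would use a KD-tree.
        nearest = min(range(len(src_positions)),
                      key=lambda i: math.dist(p, src_positions[i]))
        result.append(src_values[nearest])
    return result
```

The values never leave the geometry as loose numbers: they go from one attribute list straight into another, indexed by the target’s elements.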

Hopefully that makes sense and I understood your idea properly.

Yes, it just has to be implemented like the other attribute types.

The viewport value is tied to the modifier system rather than the render engine, so it really comes down to bigger questions like per-viewport dependency graphs, etc. (one viewport can be in Workbench mode while another shows a Cycles preview).

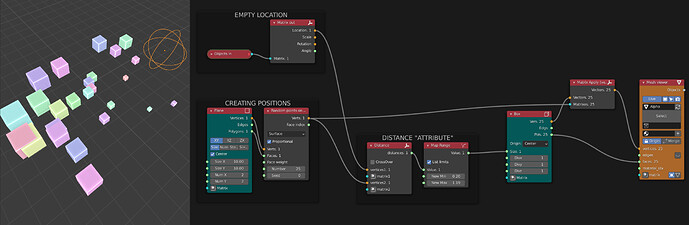

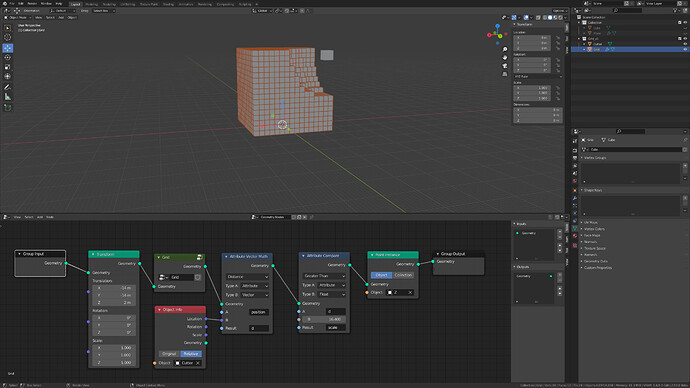

This example is simplified, but it shows the data flow I was expecting, as in Sverchok:

The distance list is a list of scalar values whose length equals the number of positions. It goes into the cube’s size input, which generates X cubes at a single position. Then, when these are arrayed across the positions, each cube’s size is paired with its position by list index, which keeps everything consistent.
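The index-matching described above can be sketched in plain Python (the function names are illustrative, not Sverchok’s API): a list of sizes and a list of positions of equal length are zipped, so item `i` of one always goes with item `i` of the other.

```python
from itertools import product

Vec3 = tuple[float, float, float]


def cube_vertices(center: Vec3, size: float) -> list[Vec3]:
    # The 8 corners of an axis-aligned cube with edge length `size`.
    half = size / 2.0
    return [tuple(c + s * half for c, s in zip(center, signs))
            for signs in product((-1.0, 1.0), repeat=3)]


def array_cubes(positions: list[Vec3], sizes: list[float]) -> list[list[Vec3]]:
    if len(positions) != len(sizes):
        raise ValueError("sizes must have one entry per position")
    # Index i of `sizes` is paired with index i of `positions`.
    return [cube_vertices(p, s) for p, s in zip(positions, sizes)]


cubes = array_cubes([(0.0, 0.0, 0.0), (10.0, 0.0, 0.0)], [2.0, 4.0])
```

This sketch simply requires equal lengths; keeping the two lists in step is exactly the bookkeeping that is discussed as a drawback further down the thread.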

I think maybe my understanding of the intended scope of Geometry Nodes is not right. What role do the developers see GN taking within professional workflows? Are there specific use cases beyond randomly scattering objects, which is already in a good place?

I think you have to use the Poisson Disk mode of the Point Distribute node to ensure there are no overlapping points.
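The property Poisson disk sampling guarantees is a minimum distance between accepted points. A minimal sketch of the simplest way to get it, naive dart throwing in a unit square, is below; it is a swapped-in illustration, not the algorithm Blender uses, and the function name and parameters are assumptions.

```python
import math
import random


def poisson_disk_dart_throwing(min_dist: float, max_points: int,
                               attempts: int = 10_000,
                               seed: int = 0) -> list[tuple[float, float]]:
    """Randomly place points in [0, 1)^2, rejecting any closer than min_dist."""
    rng = random.Random(seed)
    points: list[tuple[float, float]] = []
    for _ in range(attempts):
        if len(points) >= max_points:
            break
        candidate = (rng.random(), rng.random())
        # Accept only if it keeps the minimum spacing to every accepted point.
        if all(math.dist(candidate, p) >= min_dist for p in points):
            points.append(candidate)
    return points
```

Note that the spacing holds only at distribution time; if the points are moved afterwards, they can end up close together again.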

You are saying this as if it were impossible, which I can’t agree with. Programs like Sverchok treat every aspect of a mesh separately; even edges and faces are just arrays of numbers. And yet the program is capable of doing a lot.

The real question is whether it is appropriate to extract such data from the geometry, because then it would definitely become just an array of values. From my point of view, this has certain disadvantages. First, the tree would consist of nodes at different levels of abstraction, and I expect it would be difficult to switch from reading nodes that deal with meshes to nodes that deal with arrays. Second, the user has to keep in mind how each separated array maps to the geometry elements. I believe the latter is a real problem in Sverchok.

Hey, that’s assuming we won’t tinker with the particles’ positions, and we will! See the example just below.

And this is just one example!

Assuming users won’t need to merge nearby points just because there’s a distribution node that avoids placing points close together in the first place is wrong.

BTW, are you Sergey, the developer working for the Foundation?

If I understand correctly you want to have different flower geometries for different values of sun, and use that geometry on each point based on the sun attribute.

I can think of at least one workaround for this within geometry nodes. Assuming 10 hard-coded sun values, you separate the points and instance pre-made assets based on them.
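The workaround can be sketched in plain Python: quantize the 0-1 sun value into 10 buckets, separate the points by bucket, and pick one pre-made asset per bucket. The asset names (`flower_00` … `flower_09`) and function names are hypothetical placeholders.

```python
def sun_bucket(sun: float, buckets: int = 10) -> int:
    # Clamp to [0, 1], then map to 0..buckets-1 (a value of 1.0 lands in the last bucket).
    clamped = min(max(sun, 0.0), 1.0)
    return min(int(clamped * buckets), buckets - 1)


def separate_by_bucket(sun_values: list[float], buckets: int = 10) -> dict[int, list[int]]:
    # Point indices grouped by bucket, like separating points by attribute.
    groups: dict[int, list[int]] = {}
    for i, s in enumerate(sun_values):
        groups.setdefault(sun_bucket(s, buckets), []).append(i)
    return groups


def assign_instances(sun_values: list[float], assets: list[str]) -> list[str]:
    # One pre-made asset per bucket; every point in a bucket shares it.
    return [assets[sun_bucket(s, len(assets))] for s in sun_values]


assets = [f"flower_{i:02d}" for i in range(10)]
```

Because every point in a bucket uses the same asset, the instances can still share geometry; the price is that the “openness” only has 10 steps.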

For full control over the deformation of each “instance”, you would need to first instance the flowers and then deform the individual flowers. One of the ideas (not implemented yet) is to have the instances “inherit” the non-builtin attributes from the points they were created from. This way you could:

- Copy the original point position to “original_position” attribute.

- Deform the instanced geometries based on the “sun” attribute + where the original point was (assuming you even need that).
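The two steps above can be modelled in plain Python to show what “inheriting” attributes would mean. Everything here is hypothetical (the feature is described as not implemented yet): the `Instance` class, the assumption that only `position` counts as built-in, and the simple “scale around the original point by sun” deformation.

```python
from dataclasses import dataclass

Vec3 = tuple[float, float, float]
BUILTIN = {"position"}  # assumed: built-in attributes are not inherited


@dataclass
class Instance:
    vertices: list[Vec3]
    attributes: dict[str, object]


def copy_position(points: dict[str, list]) -> None:
    # Step 1: keep the original point position in a custom attribute.
    points["original_position"] = list(points["position"])


def instance_on_points(points: dict[str, list], asset: list[Vec3]) -> list[Instance]:
    instances = []
    for i, pos in enumerate(points["position"]):
        verts = [tuple(p + v for p, v in zip(pos, vert)) for vert in asset]
        # Each instance "inherits" the point's non-built-in attributes.
        inherited = {k: vals[i] for k, vals in points.items() if k not in BUILTIN}
        instances.append(Instance(verts, inherited))
    return instances


def open_flower(inst: Instance) -> Instance:
    # Step 2: deform one instance using its own "sun" value, scaling X/Y
    # around the original point (more sun means a wider flower).
    ox, oy, _ = inst.attributes["original_position"]
    k = 0.5 + inst.attributes["sun"]
    verts = [(ox + (x - ox) * k, oy + (y - oy) * k, z) for x, y, z in inst.vertices]
    return Instance(verts, inst.attributes)
```

Each instance ends up with its own vertex list, which is exactly the performance cost mentioned next.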

The downside is that performance will take a hit, since you will no longer be leveraging the benefit of all the flowers looking the same.

All that said and done, this seems a better fit for a “collection modifier” (working name). This would allow object-level operations such as blending actions and moving bones. It is a bit early to say, though, since we only started contemplating it this week. The pseudo-workflow would be:

- The flower asset is created with an animation where a bone controls how open the flower is.

- Geometry nodes is used to generate the points with the right attributes based on the sun influence.

- Collection modifier uses the points object to instance the flower objects, each one with a different “action value”.

Actually, I don’t see why one would use the Point Distribute node to create grids. I think there will be a separate, dedicated node for this in the future.

Yes, I’m Sergey indeed, but I can’t call myself anything more than a Sverchok developer.

That’s just one example; merging nearby points is useful in a lot of scenarios once we start playing with the points’ positions.

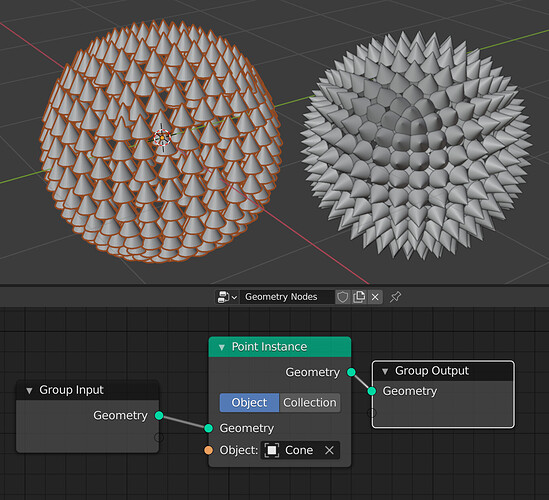

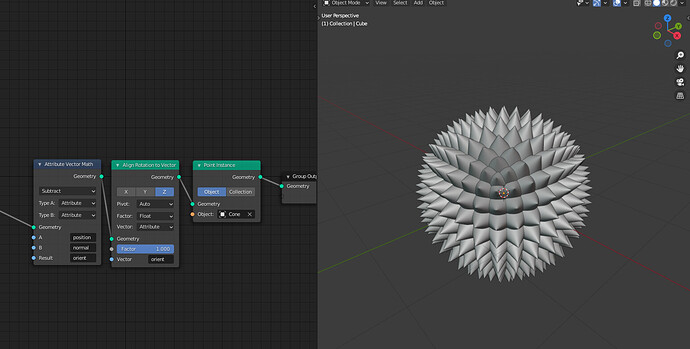

Is there any way to orient objects instanced with Point Instance to the surface normal? I don’t see how.

With the old method of instancing from objects, this was done by default, and it was also possible to instance from faces.
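For what it’s worth, the math behind “align to normal” is small: build a rotation that maps the instance’s local Z axis onto the surface normal. Below is a sketch in plain Python using Rodrigues’ rotation formula, assuming normals arrive as `(x, y, z)` tuples. The function names are illustrative, and how to feed the resulting rotation into Point Instance is not covered here.

```python
import math

Vec3 = tuple[float, float, float]
Mat3 = list[list[float]]


def normalize(v: Vec3) -> Vec3:
    length = math.sqrt(sum(c * c for c in v))
    if length == 0.0:
        raise ValueError("normal must be non-zero")
    return tuple(c / length for c in v)


def align_z_to_normal(normal: Vec3) -> Mat3:
    """Rotation matrix taking (0, 0, 1) onto the normalized `normal`."""
    bx, by, bz = normalize(normal)
    if bz <= -1.0 + 1e-9:
        # Normal points straight down: rotate 180 degrees about X.
        return [[1.0, 0.0, 0.0], [0.0, -1.0, 0.0], [0.0, 0.0, -1.0]]
    # Rodrigues: R = I + K + K^2 / (1 + cos), axis = Z x n = (-by, bx, 0).
    k = 1.0 / (1.0 + bz)
    return [
        [1.0 - bx * bx * k, -bx * by * k, bx],
        [-bx * by * k, 1.0 - by * by * k, by],
        [-bx, -by, 1.0 - (bx * bx + by * by) * k],
    ]


def mat_vec(m: Mat3, v: Vec3) -> Vec3:
    return tuple(sum(m[r][c] * v[c] for c in range(3)) for r in range(3))
```

The rotation around the normal itself is left arbitrary here; a full “align to face” would also need a tangent direction to fix that twist.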