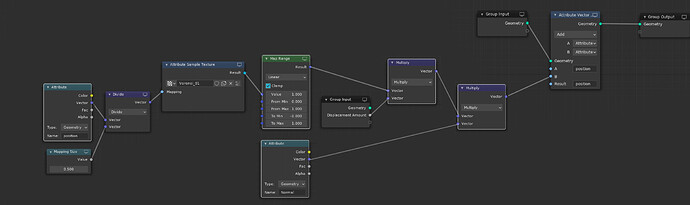

No, not at all, that’s something totally different. Also useful, but different. What I want is something like this. This is how simple it should be to displace a mesh along its normals using a texture with Geometry Nodes:

(Ignore the mistake where the two Group Input nodes have different sets of inputs. It’s a mockup composed of multiple screenshots.)

Do you see that giant snake I posted a few posts above, with all the confusing names for custom attributes I had to come up with just so that my intermediate computations don’t get mixed up? Well, this is what could replace it. Something this trivial. In fact, it’d actually look even simpler, as I just copy-pasted the shader editor’s Attribute node. The GN attribute node could be simpler and smarter, with just a text field for the attribute name and a single pale blue output slot.
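To put the idea in code terms, here is a rough plain-Python sketch of the data flow I mean. It is not Blender’s API: `get_attribute`, `sample_texture` and `displace` are names made up for illustration, and the "mesh" is just a dict of per-vertex lists. The point is that each step takes plain values and returns plain values, so no intermediate attribute names are needed.

```python
# Plain-Python sketch of the proposed data flow; none of this is Blender API.
# All names (get_attribute, sample_texture, displace) are hypothetical.

def get_attribute(mesh, name):
    """Like an Attribute get node: look up a per-vertex attribute by name."""
    return mesh[name]

def sample_texture(texture, uv):
    """Nearest-neighbour lookup: mapping coords in, a single value out."""
    height = len(texture)
    width = len(texture[0])
    x = min(int(uv[0] * width), width - 1)
    y = min(int(uv[1] * height), height - 1)
    return texture[y][x]

def displace(positions, normals, amounts, strength=1.0):
    """Offset each position along its normal by amount * strength."""
    return [
        tuple(p + n * a * strength for p, n in zip(pos, nrm))
        for pos, nrm, a in zip(positions, normals, amounts)
    ]

# A two-vertex "mesh" stored as named per-vertex attribute lists.
mesh = {
    "position": [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)],
    "normal": [(0.0, 0.0, 1.0), (0.0, 0.0, 1.0)],
    "uv": [(0.0, 0.0), (0.9, 0.9)],
}
# A 2x2 grayscale texture, rows indexed by y.
texture = [[0.0, 0.25], [0.5, 1.0]]

# Attribute -> texture sample -> displacement, with values flowing directly.
amounts = [sample_texture(texture, uv) for uv in get_attribute(mesh, "uv")]
displaced = displace(
    get_attribute(mesh, "position"),
    get_attribute(mesh, "normal"),
    amounts,
)
```

The only name that appears anywhere is the one attribute I actually care about (`"uv"`); everything in between is just a value passed along.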

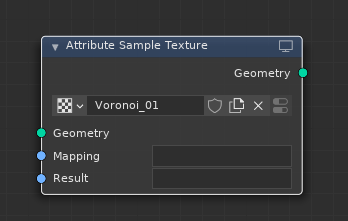

I mean, look at the Attribute Sample Texture node, for example:

It inputs geometry and it outputs geometry? Why? Just because of how limited the attribute workflow is. All you need to sample the texture are the mapping coordinates. Look at how simplified it is in my example. Rather than having even the most trivial nodes take a Geometry struct as input and output this cryptic “everything” geometry struct, why don’t they just take the mapping coords as a vector input and output the RGB data as a vector output?
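Roughly, the difference between the two styles looks like this in plain Python. Again, this is only an illustration under my own assumptions, not real Blender code: `checker` is a stand-in texture, and `sample_via_geometry` / `sample_direct` are hypothetical names for the current and the proposed behaviour.

```python
# Hypothetical contrast; neither function is a real Blender API.

def checker(u, v):
    """Tiny procedural stand-in for a texture: returns an RGB tuple."""
    on = (int(u * 2) + int(v * 2)) % 2 == 0
    return (1.0, 1.0, 1.0) if on else (0.0, 0.0, 0.0)

def sample_via_geometry(geometry, mapping_name, result_name):
    """Current style: geometry in, geometry out, data passed by attribute name."""
    out = dict(geometry)
    out[result_name] = [checker(u, v) for u, v in geometry[mapping_name]]
    return out

def sample_direct(coords):
    """Proposed style: mapping coordinates in, RGB out."""
    return checker(*coords)

geometry = {"uv": [(0.1, 0.1), (0.6, 0.1)]}

# Current style: I have to invent "tmp_color" just to carry the result along.
via_geometry = sample_via_geometry(geometry, "uv", "tmp_color")

# Proposed style: the result is simply the return value.
direct = [sample_direct(uv) for uv in geometry["uv"]]
```

Both produce the same colors, but the first one forces me to name an intermediate attribute and drag the whole geometry through a node that only ever needed two numbers.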

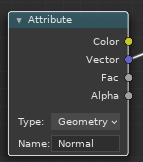

We could have just a simple Attribute get node, like the one we have in the shader editor:

It could then easily be used to feed any custom attribute in as the mapping for the Attribute Sample Texture node. The image sampler itself doesn’t need to take geometry as input just to access the attribute. This whole workflow is so overcomplicated and clunky.