Then it’s just not for you. That doesn’t mean it isn’t useful for everyone else.

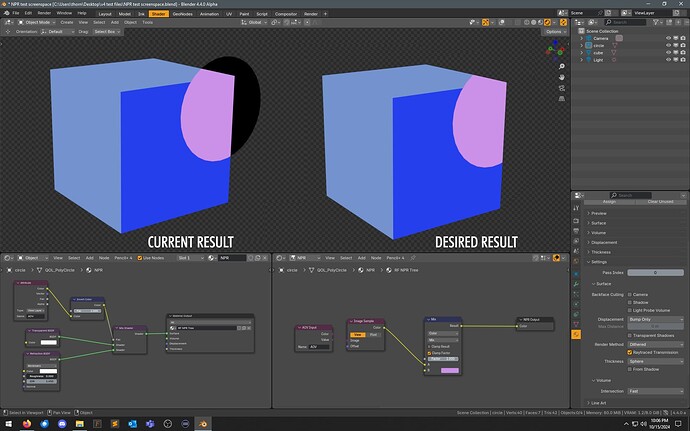

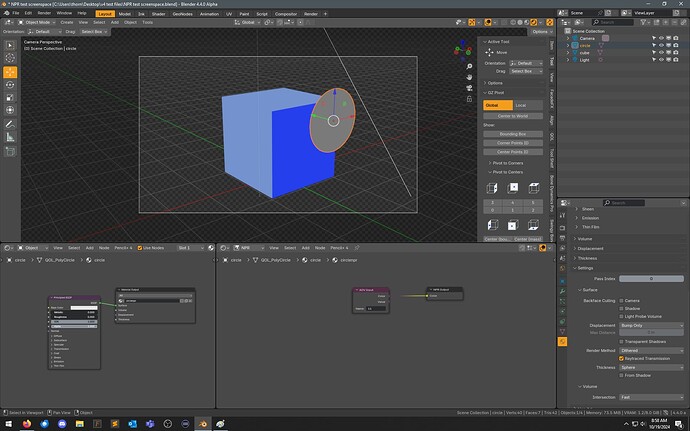

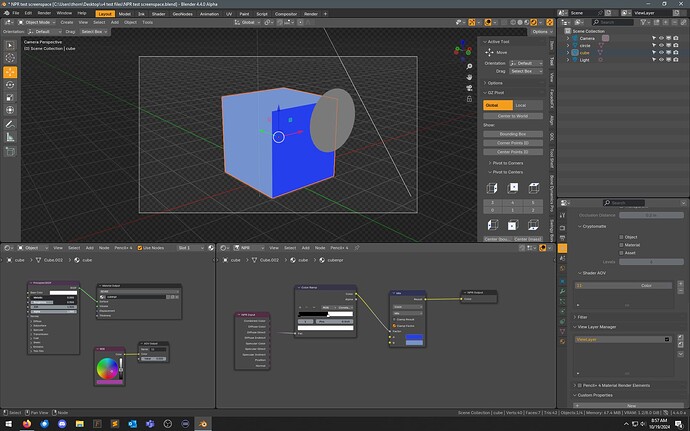

I’m not sure if this is possible, impossible, planned, not planned… but it would be quite cool if this could be done. I’ve tried a ton of variations on nodes, but couldn’t end up with the desired result.

(I.e., I want the area of the circle mesh that isn’t overlaying the cube to be transparent.)

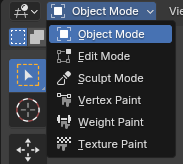

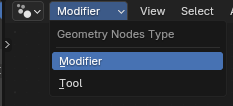

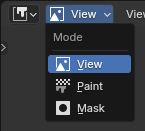

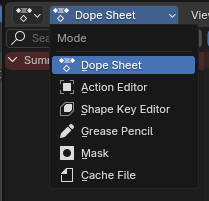

As I said in a reply on projects, “Modes” are seemingly a general UI concept in Blender, but the Tab shortcut is only used in the 3D Viewport, so I believe it would make sense for it to be global.

FWIW, I’d prefer it not be hardcoded to TAB. (Though, having an assignable hotkey would be nice.)

I will say though - so far, once I set the base shader up in Object and go into NPR, I’m not going back into Object that often. Nearly all my adjustments are being done in NPR mode.

This definitely feels like an oversight. Transparent should be Transparent. File a bug report?

I don’t know much about NPR, so excuse me for asking stupid questions. I probably misunderstand how things work, but since I see @modmoderVAAAA has the same trouble understanding, I think it’s useful to ask my questions anyway.

- Do I understand correctly that this new NPR tree is always appended after the (‘normal’/PBR) material output?

- Somewhere it says ‘all the NPR inputs are images’. And also, we can have only one NPR input and not feed back into a BSDF. Does this mean all images are in screen space?

- And if so, isn’t this conceptually more like a compositor step, even though it runs in the render engine and can use shader nodes instead of compositor nodes?

- And if it is more like a compositor step, wouldn’t it be more logical to implement these things in the compositor and be able to use the same NPR functionality in Cycles?

As said, I’m probably completely missing the point. But since I am missing the point I can imagine other people who are not really into NPR stuff might have the same problem…

I think the proposal page does a good job at explaining why NPR should happen at this stage:

NPR Challenges

- NPR requires way more customization support than PBR, and requires exposing parts of the rendering process that are typically kept behind the render engine “black box”. At the same time, we need to keep the process intuitive, artist-friendly, and fail-safe.

- Some common techniques require operating on converged, noiseless data (eg. applying thresholds or ramps to AO and soft shadows), but renderers typically rely on stochastic techniques that converge over multiple samples.

- Filter-based effects that require sampling contiguous surfaces are extremely common. However, filter-based effects are prone to temporal instability (perspective aliasing and disocclusion artifacts), and not all render techniques can support them (blended/hashed transparency, raytracing).
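To make the “converged, noiseless data” point concrete, here is a minimal sketch in plain Python (not Blender or EEVEE code). It assumes a hypothetical stochastic shadow test that returns lit or unlit per sample, and compares thresholding the converged average against thresholding each noisy sample before averaging. All names and numbers are made up for illustration.

```python
import random


def threshold(value, cutoff=0.5):
    """Hard toon-style step: 1.0 at or above the cutoff, else 0.0."""
    return 1.0 if value >= cutoff else 0.0


def shade_pixel(true_visibility, samples, rng):
    """Return (threshold applied after converging, threshold applied per sample).

    Each sample is a hypothetical binary shadow test that reports 1.0 (lit)
    with probability `true_visibility`, otherwise 0.0.
    """
    hits = [1.0 if rng.random() < true_visibility else 0.0 for _ in range(samples)]
    converged = sum(hits) / samples
    threshold_after = threshold(converged)
    # Thresholding binary samples changes nothing, so the average stays soft.
    threshold_before = sum(threshold(h) for h in hits) / samples
    return threshold_after, threshold_before


rng = random.Random(0)
after, before = shade_pixel(true_visibility=0.7, samples=1024, rng=rng)
print(after, before)
```

Thresholding the converged value gives a hard lit/unlit answer, while thresholding each sample first leaves a soft value close to the underlying visibility, which is why these techniques want converged inputs.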

For these reasons and because of the usual lack of built-in support, NPR is often done as a post-render compositing process, but this has its own issues:

- Material/object-specific stylization requires a lot of extra setup and render targets.

- It only really works for primary rays on opaque surfaces. Applying stylization to transparent, reflected, or refracted surfaces is practically impossible.

- The image is already converged, so operating on it without introducing aliasing issues can be challenging.

- A lot of useful data is already lost (e.g. lights and shadows are already applied/merged).
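A small sketch of the “data is already lost” point, again in plain Python with made-up values rather than anything from the actual render engine: once per-light contributions are summed into one value, a ramp applied in compositing cannot reproduce a ramp applied to each light separately.

```python
def ramp(value, cutoff=0.5):
    """Hard two-tone ramp: 1.0 at or above the cutoff, else 0.0."""
    return 1.0 if value >= cutoff else 0.0


def stylize_merged(per_light):
    """Post-render style: only the merged sum of all lights is available."""
    return ramp(sum(per_light))


def stylize_per_light(per_light):
    """In-render style: ramp each light on its own, then combine and clamp."""
    return min(1.0, sum(ramp(v) for v in per_light))


# Hypothetical diffuse contributions of two dim lights at one surface point.
contributions = [0.3, 0.3]
merged_result = stylize_merged(contributions)
per_light_result = stylize_per_light(contributions)
print(merged_result, per_light_result)
```

Here the merged value crosses the cutoff even though neither light does on its own, and from the merged value alone there is no way to tell those two cases apart.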

Yes, the ShaderToRGB is not supposed to go anywhere anytime soon.

However, as EEVEE keeps evolving it’s going to be quite tricky (if not impossible) to keep properly supporting it.

One of the goals of this design is to try to come up with something that is more “future proof”.

Yes, not the first feedback I get about this.

I’m not sure if we can (or should) solve it automatically.

But at worst we could add an explicit Alpha socket to the NPR output, so that you can manually do something like the Holdout node.

The way alpha is handled in nodes is kind of weird, tbh.

About the “isn’t this just compositing?”

The design proposal goes into more detail, but there are 2 key differences:

- Compositing is applied after the render has finished. Here the goal is to have the filtering integrated inside the render pipeline, so it also works with transparency and indirect lighting. Basically, have the same level of consistency you would expect for PBR.

- NPR Trees will also support custom shading.

So yeah, in a sense this overlaps a lot with compositing.

Ideally, I’d love to end up with a system where both compositing and shader nodes are available inside the same tree.

But for now it’s much simpler to add some basic filtering support to shader nodes.

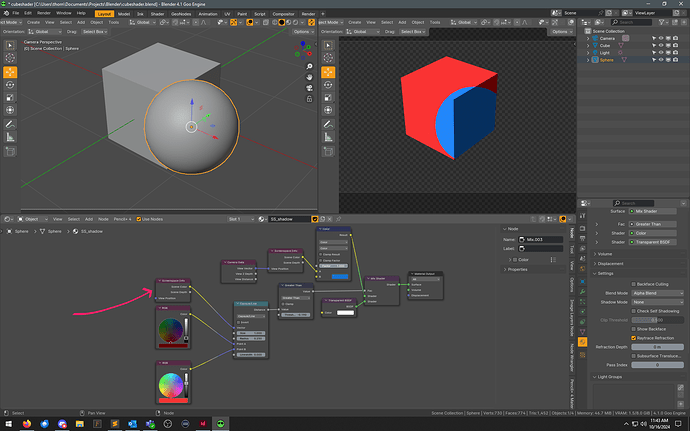

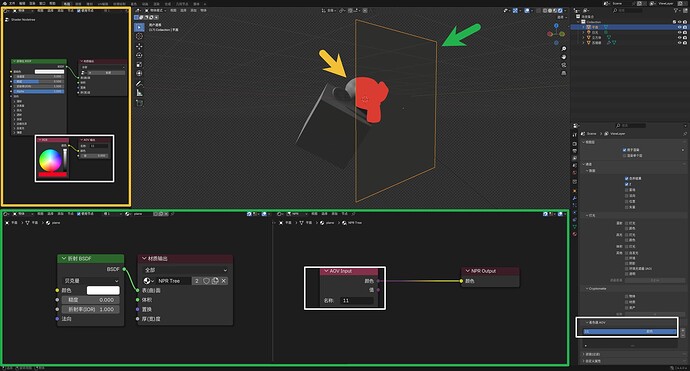

You’re probably already familiar with this, but just in case - here’s a similar (kind of) setup in goo. Here, alpha is actually pulled from the scene alpha itself (I believe that’s the math), not the cube specifically. Works great.

So, it’s almost like I’m looking for an output connection on the NPR input for scene alpha, and/or an alpha factor/connection on the NPR output.

(FWIW, Holdout was one of the nodes I played with to see if it would “take”, so - funny that you had a similar thought.)
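For anyone following along, the arithmetic being asked for is roughly the following. This is only a per-pixel sketch in plain Python, assuming straight (non-premultiplied) alpha. It is not how Blender or goo implements it, and `mask_overlay` and `over` are hypothetical helper names.

```python
def mask_overlay(overlay_rgba, scene_alpha):
    """Scale the overlay pixel's alpha by the alpha of the scene beneath it."""
    r, g, b, a = overlay_rgba
    return (r, g, b, a * scene_alpha)


def over(top_rgba, bottom_rgba):
    """Straight-alpha 'over' operator for a single pixel."""
    tr, tg, tb, ta = top_rgba
    br, bg, bb, ba = bottom_rgba
    out_a = ta + ba * (1.0 - ta)
    if out_a == 0.0:
        return (0.0, 0.0, 0.0, 0.0)

    def blend(t, b):
        return (t * ta + b * ba * (1.0 - ta)) / out_a

    return (blend(tr, br), blend(tg, bg), blend(tb, bb), out_a)


circle = (1.0, 0.0, 0.0, 1.0)  # opaque red overlay mesh
cube = (0.0, 0.0, 1.0, 1.0)    # opaque blue surface underneath
empty = (0.0, 0.0, 0.0, 0.0)   # nothing rendered behind the overlay

on_cube = over(mask_overlay(circle, cube[3]), cube)
off_cube = over(mask_overlay(circle, empty[3]), empty)
print(on_cube, off_cube)
```

Where the circle sits over the cube it stays fully visible, and where there is nothing behind it the pixel ends up fully transparent, which is the result described above.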

And this was my point:

I mean, I don’t see the appeal of the “let’s just insert function a() into function b() since it’s simpler” approach when it’s possible to compose them all at the same level by just exposing more internal info. Something like 10-100 z-ordered layers with transparency still seems better and more scalable than a 10-minute compilation of a mega shader from 4 node trees with 8 different node systems and 100 levels of abstraction.

If you read my design proposal, that’s basically what it says.

Compositing passes done per sample and per ray-depth level.

Add some extra passes (like per-light shadow masks), and a buffer with per-light data of the scene, so custom shading is still doable in an engine-agnostic way.

And allow setting a different compositing shader for each object, for convenience.

So I don’t disagree with you, really.

If you can convince the other Blender devs that that approach is the better way to go, you’re super welcome.

That said, I don’t think making up things like this is going to help you to convince anyone.

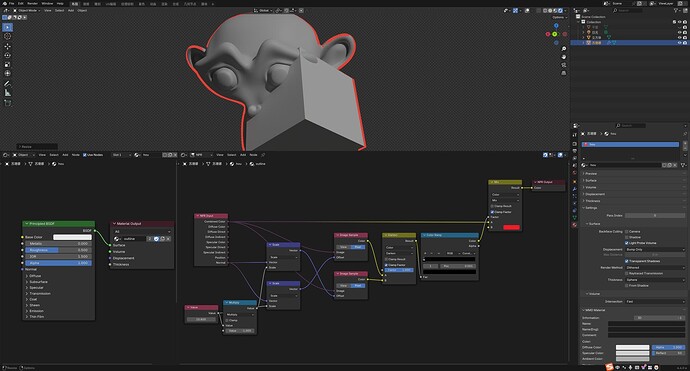

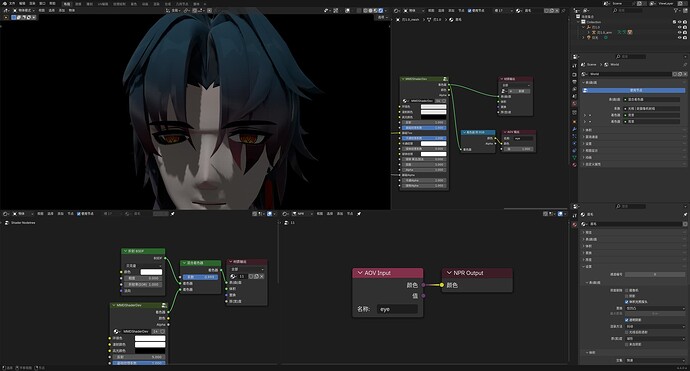

This facial detail is a mesh, “casting” a shader onto another object via scene transparency and target base color.

The neck “shadow”, the dark lines above/below the eye, and the eyebrows - same setup.

This isn’t something quickly accomplished in compositing with a few Z layers and a cryptomatte. Not to mention the overhead of all the z layers that I don’t want, and/or edge fringes from view layer mattes.

And the shader compiles quicker than the average Eevee Next shader.

Dude, if you don’t have anything to contribute other than conjecture, and you don’t use this workflow, don’t contribute.

I have no experience in that area, so I had to make up some artificial case as an example to describe this at least somehow.

If you read my design proposal, that’s basically what it says.

I think there was some threshold for understanding this that I did not get past, or it was just my own blindness.

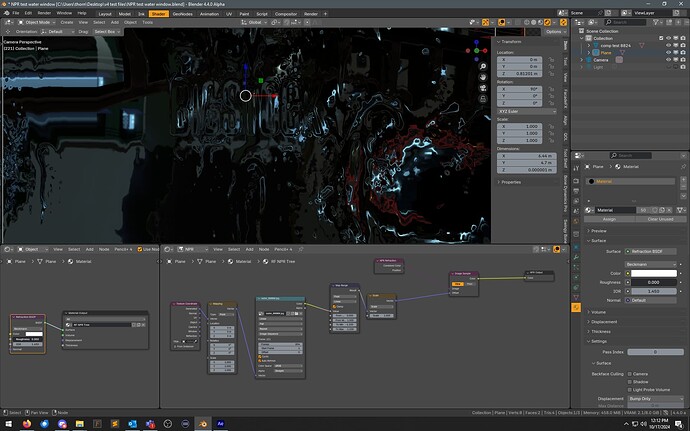

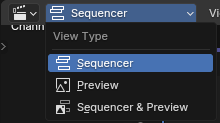

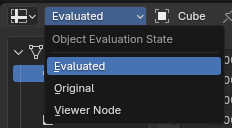

Looks like the Image Sequence node (using an image, or movie) doesn’t work at present; perhaps not implemented yet?

Have also noticed that if this material (in screenshot) is present in the scene, the image/movie will not play in the scene even if assigned as a non-NPR material to another object.