Update:

Hello everyone!

As you might know, we have an ongoing project in collaboration with Goo Studios to improve NPR support in Blender.

We held a workshop in July and decided to start with a prototype to gather feedback before committing to a final design.

The prototype’s goal is to provide something as powerful and flexible as we can, see what the user community can achieve with it, and from there evaluate what features should be implemented in the main branch.

(So don’t expect backward compatibility)

The main things we want you to share with us are:

- What results you can achieve with these features that were not possible before.

- What results you still can’t achieve.

Note that we have limited resources, so expect some lack of polish (again, keep in mind this is just a prototype).

Overall NPR Targets

- Light & Shadow linking

  This one has already been merged into 4.3!

- Custom Shading

  Provide direct access to light and shading data, and allow users to process and combine them in arbitrary ways.

- Filters and mesh-based compositing

  Provide a per-mesh/material post-processing workflow that interacts automatically with other render features like reflections and refractions.

Implemented Features

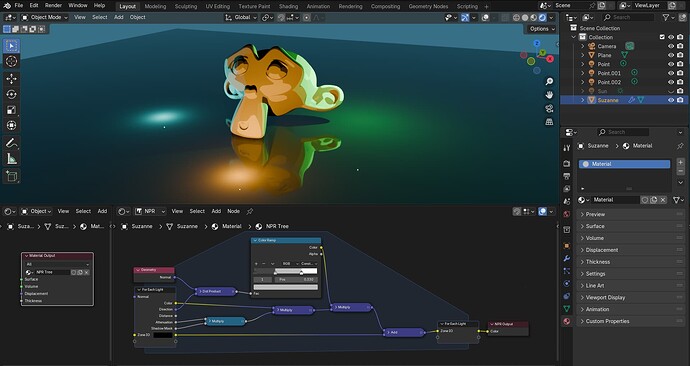

NPR Tree

For the prototype, we are using a separate NPR node tree where these features are being added.

NPR trees use the same nodes as Materials, except:

- New NPR-only nodes are available.

- BSDF nodes can’t be used here.

The NPR tree is selected in the Material Output node.

You can swap between the Material and the NPR node tree with Ctrl+Tab.

NPR Input

You can think of this as an extension of the Shader to RGB workflow.

NPR Refraction

The NPR Refraction is similar to the NPR Input, but it reads the layer behind a refraction material.

It needs the Raytraced Transmission option enabled in the material.

Image Sample

These nodes output image sockets, so you can sample them with an offset and make filters.
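To make the "sample with an offset" idea concrete, here is a minimal plain-Python sketch of how offset sampling turns into a filter. It is not the prototype's node API: the `sample`, `box_blur` and `edge_strength` helpers and the list-of-lists image are assumptions made purely for illustration.

```python
# Conceptual sketch only: a grayscale image is modelled as a list of rows.
# None of these names come from Blender or the NPR prototype.

def sample(image, x, y, dx=0, dy=0):
    """Read the pixel at (x + dx, y + dy), clamping to the image border."""
    height = len(image)
    width = len(image[0])
    sx = min(max(x + dx, 0), width - 1)
    sy = min(max(y + dy, 0), height - 1)
    return image[sy][sx]


def box_blur(image):
    """Average each pixel with its 8 neighbours by sampling at offsets."""
    offsets = [(dx, dy) for dy in (-1, 0, 1) for dx in (-1, 0, 1)]
    return [
        [
            sum(sample(image, x, y, dx, dy) for dx, dy in offsets) / len(offsets)
            for x in range(len(image[0]))
        ]
        for y in range(len(image))
    ]


def edge_strength(image, x, y):
    """Outline-style filter: compare samples on opposite sides of the pixel."""
    gx = sample(image, x, y, 1, 0) - sample(image, x, y, -1, 0)
    gy = sample(image, x, y, 0, 1) - sample(image, x, y, 0, -1)
    return abs(gx) + abs(gy)
```

In a node tree, the equivalent would presumably be several Image Sample nodes reading the same image socket at different offsets, with their outputs combined by math nodes.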

AOV Input

Similar to the NPR Input and the NPR Refraction, the AOV Input lets you sample AOV images.

Here we can read the AOV from the layer behind because the Refraction Material doesn’t write to the AOV (otherwise it would overwrite the Suzanne AOV).

Multi-layer Refraction

Refraction layers allow chaining multiple refractions for a “mesh-based” compositing workflow.

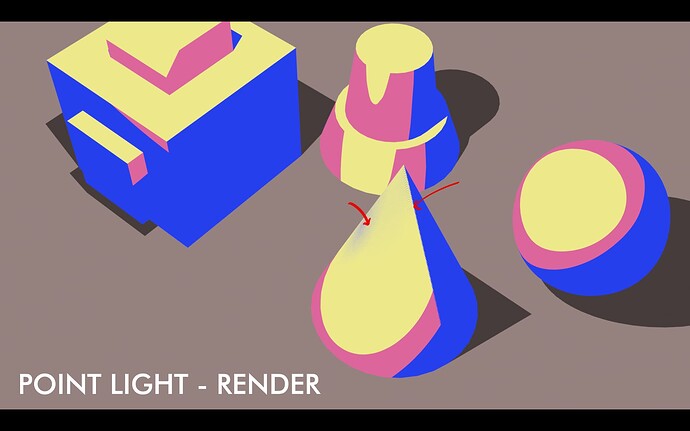

For Each Light

For Each Light zones allow creating custom shading that works consistently with arbitrary light types and colors.
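As a rough mental model, a For Each Light zone behaves like a loop that runs the same shading logic once per light and accumulates the result. The plain-Python sketch below shows that idea with a hard-edged toon band. The `Light` class, `toon_shade` function and `threshold` parameter are hypothetical and only illustrate the concept; the actual zone inputs and outputs in the prototype may differ.

```python
from dataclasses import dataclass


@dataclass
class Light:
    # Hypothetical per-light data, not the prototype's actual sockets.
    direction: tuple  # unit vector from the surface toward the light
    color: tuple      # RGB color, already scaled by intensity


def dot(a, b):
    """Dot product of two 3D vectors."""
    return sum(ai * bi for ai, bi in zip(a, b))


def toon_shade(normal, lights, threshold=0.5):
    """Accumulate a two-tone band per light, tinted by that light's color."""
    result = [0.0, 0.0, 0.0]
    for light in lights:  # plays the role of the For Each Light zone
        n_dot_l = max(dot(normal, light.direction), 0.0)
        band = 1.0 if n_dot_l > threshold else 0.0  # hard step instead of a smooth falloff
        for channel in range(3):
            result[channel] += band * light.color[channel]
    return tuple(result)
```

Because the band is computed per light and then tinted by that light's color, the same logic keeps working when lights are added, removed or recolored.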

To Dos

Main Features

Known Issues

- While you can read and write AOVs from NPR nodes, you shouldn’t read and write to the same AOV (even from different NPR materials) since the results may vary randomly.

Download

Bug Reports

The NPR-Prototype branch has its own separate bug tracker:

To report a bug:

- Verify that the bug is not already reported.

- Verify that the bug can’t be reproduced in the main branch daily builds.

- Verify that you’re using the latest NPR-Prototype build.

- Open the NPR-Prototype build, click Help > Report a Bug, and fill in the required info.