The material editing workflow is meant to stay as close as possible to the current one.

So no, there’s no current plan for this.

You shouldn’t rely on the order in which the light loop is executed; it can change depending on internal factors.

If you have an example use case for what you’re trying to achieve we can figure out something.

Keep in mind we plan to allow having For Each Light zones for specific light linking groups, and adding Light Nodes support.

We need to figure out better units/workflow for the Image Sample node. Pixel offsets by themselves are not too useful, especially without access to the render resolution.

Still, making screen space curvature work consistently across resolutions and render distance is tricky.
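As a rough illustration of the units problem (not a planned API), here is a small Python sketch. It assumes a hypothetical convention where offsets are expressed as a fraction of the render height; the function names are made up for the example.

```python
def offset_to_pixels(offset_fraction, resolution):
    """Convert an offset given as a fraction of the image height into pixels.

    Assumption: both axes are scaled by the height so the offset stays
    isotropic regardless of aspect ratio.
    """
    _width, height = resolution
    fx, fy = offset_fraction
    return (fx * height, fy * height)


def pixels_to_fraction(offset_px, resolution):
    """Inverse of offset_to_pixels: how much of the frame a pixel offset covers."""
    _width, height = resolution
    px, py = offset_px
    return (px / height, py / height)


# The same 2-pixel offset covers half as much of the frame at 4K as at 1080p,
# so a fixed pixel width gives a visually thinner line at higher resolutions.
print(pixels_to_fraction((2, 0), (1920, 1080)))
print(pixels_to_fraction((2, 0), (3840, 2160)))
```

A unit like this would keep line widths stable across resolutions, but it does nothing for render distance, which is the harder part.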

I haven’t thought about this, to be honest. As a workaround, you should be able to post-process the background from a regular mesh using a refraction material.

A big inverted cube with backface culling and shadow visibility disabled should do the trick.

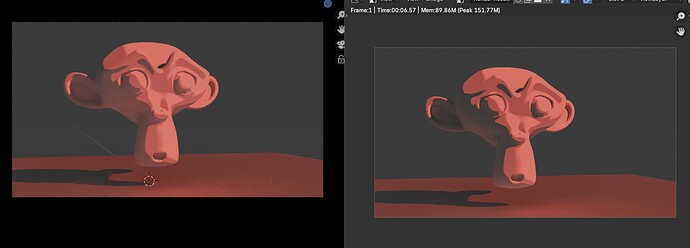

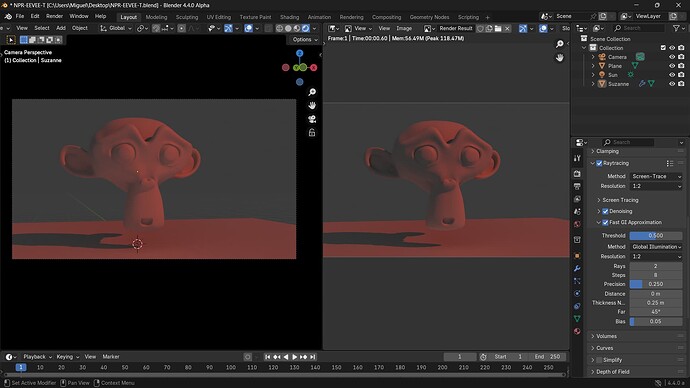

I can repro this on the main branch too:

Can you open a report on the main tracker?

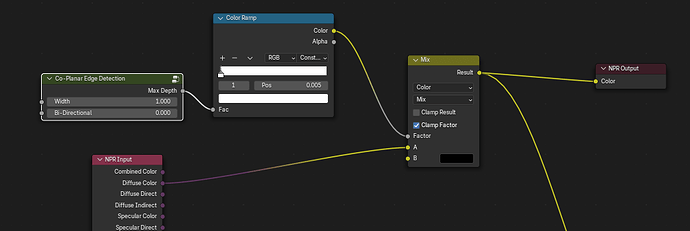

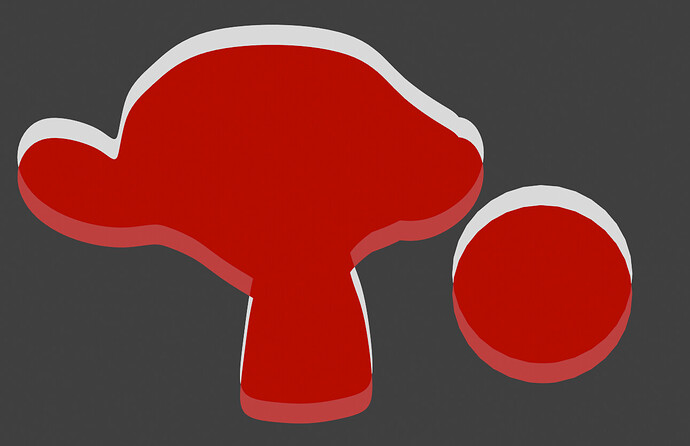

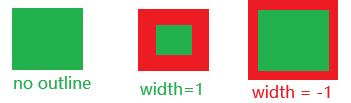

I am planning to switch from Malt to this build. There is a node asset called “co-planar edge detection”. I don’t know what it was originally designed for, but it is very close to the Malt outline node. I can get a pretty good outline with this node setup.

The only problem is that if I set the width to -1, the outline is supposed to be drawn outside the model, because the offset is negated. But even at -1 it still draws the line inside the model, which is strange.

In case I didn’t explain it well, here is an illustration of width = -1:

Total noob here with NPR and this workflow/branch, but so far it’s super cool and I’m having fun!

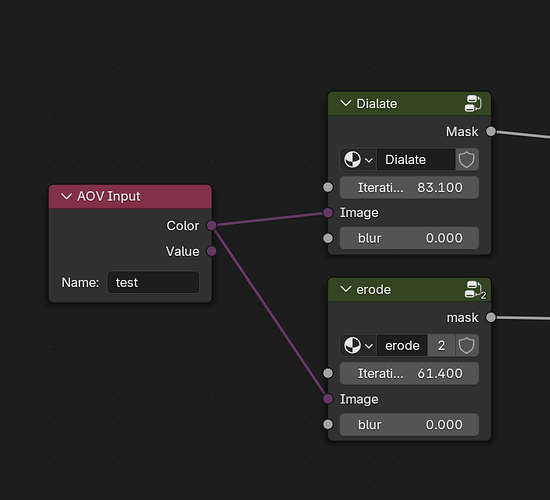

Notes so far: I would really like a dilate and erode node, or an easy way to achieve this with other nodes. I was able to recreate the effect with a really hacky node group lol. If you guys are interested I can share nodes!

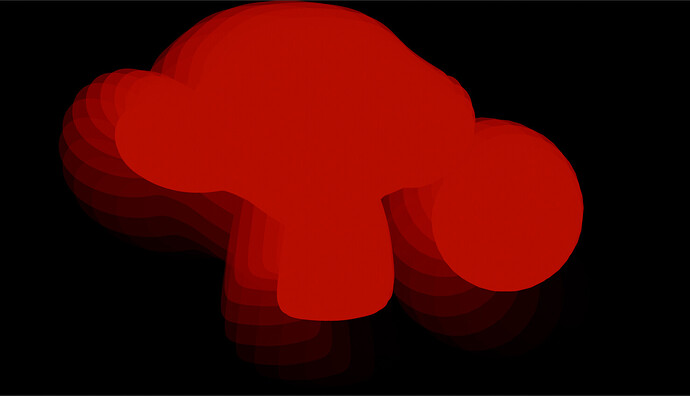

I was able to create an effect where I could displace an object beyond the edges of an AOV using the dilate nodegroup. It’s also really easy to create an outline using this nodegroup.

Let me know what you think! I can’t upload videos yet, so I uploaded to YouTube.

Wow, how did you achieve this? I am trying to render an outline outside the object but failed. Can I take a look at your dilate node?

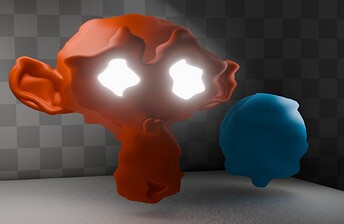

Quite cool, very nice.

Not specifically related to the NPR build itself, but for any Goo Engine users who are missing the SDF shader nodes: the addon below covers most of those bases, and it does work in the 4.4 NPR build.

(Obviously the values of a goo vs b3d SDF node may need tweaking, but so will many other things if one decides to go all-in on the NPR renderer.)

Hat tip to Late, who alerted me to its existence.

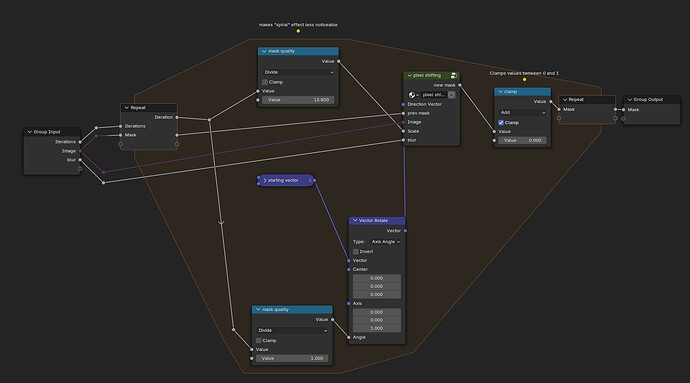

Sure! I’ll attach a link to the blend file in a sec, but I’ll explain how it works first.

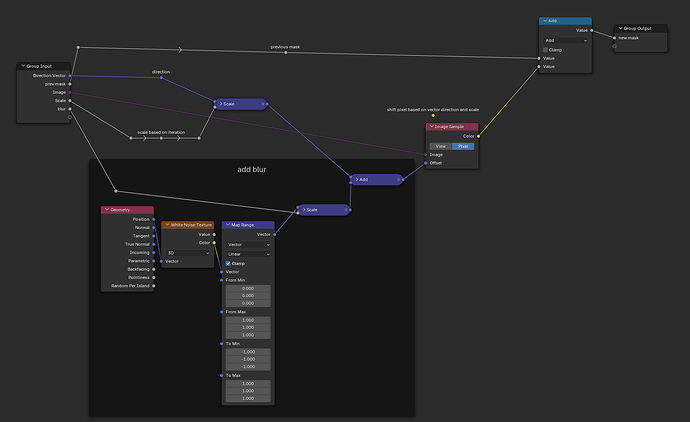

Using the Image Sample node we can shift a mask along a vector. If, say, we choose the vector [0,1,0], we shift the image down by one pixel.

If we use a repeat zone and continually scale the vector while adding previous masks to the new mask we effectively get a motion blur effect without the gradient.

(gradient added for the sake of visualization)
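For anyone who reads code more easily than nodes, the shift-and-accumulate idea can be sketched in plain Python roughly like this. It is only an approximation of the node setup: the mask is a small 2D list of 0/1 values, `sample_offset` stands in for the Image Sample node, and the `for` loop stands in for the repeat zone. Both function names are made up for the example.

```python
def sample_offset(mask, dx, dy):
    """Read the mask at (x + dx, y + dy) for every pixel; outside the image is 0."""
    h, w = len(mask), len(mask[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            sx, sy = x + dx, y + dy
            if 0 <= sx < w and 0 <= sy < h:
                out[y][x] = mask[sy][sx]
    return out


def smear(mask, direction, iterations):
    """Accumulate copies of the mask sampled at a growing offset along one direction."""
    dx, dy = direction
    result = [row[:] for row in mask]
    for i in range(1, iterations + 1):
        # Scale the offset vector by the iteration index, like the repeat zone does
        shifted = sample_offset(mask, dx * i, dy * i)
        # "Adding" masks here is a per-pixel max, so the result stays 0/1
        result = [[max(a, b) for a, b in zip(ra, rb)]
                  for ra, rb in zip(result, shifted)]
    return result


print(smear([[0, 0, 0, 1, 0]], (1, 0), 2))
```

Note that sampling at `x + dx` moves the content in the opposite direction, which matches the "offset up, image moves down" behaviour described above.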

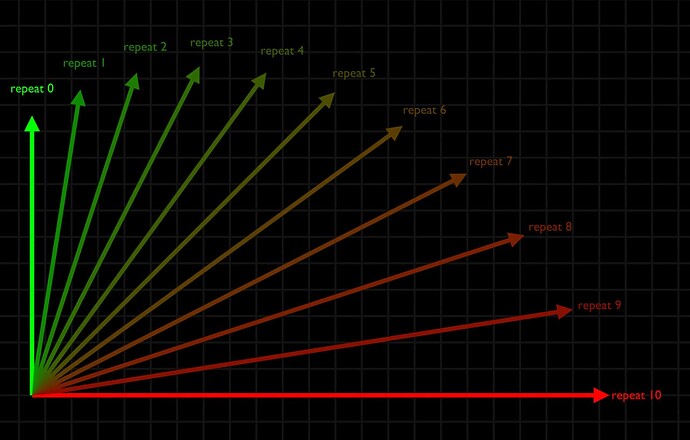

If, however, we use a Vector Rotate node to rotate our vector based on the repeat zone’s iteration, we end up scaling the vector alongside rotating it, which creates a sort of spiral mask.

Here’s a visual representation of how the Image Sample node’s offset changes based on the repeat zone’s iteration.

If we make the scale and rotation of the vector small enough, we are able to approximate (very poorly, I’m guessing) image dilation and erosion!
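Here is a rough Python sketch of that spiral sampling, assuming a binary mask stored as a 2D list and offsets rounded to whole pixels. The names `spiral_offsets` and `dilate` are made up for the example, and this is not a literal translation of the node group.

```python
import math


def spiral_offsets(iterations, step_scale, step_angle):
    """Pixel offsets for a vector that grows and rotates a bit on every iteration."""
    offsets = []
    for i in range(1, iterations + 1):
        radius = step_scale * i   # vector length grows with the iteration
        angle = step_angle * i    # and so does its rotation (radians)
        offsets.append((round(radius * math.cos(angle)),
                        round(radius * math.sin(angle))))
    return offsets


def dilate(mask, offsets):
    """Union of the mask with copies of itself sampled at each offset."""
    h, w = len(mask), len(mask[0])
    out = [row[:] for row in mask]
    for dx, dy in offsets:
        for y in range(h):
            for x in range(w):
                sx, sy = x + dx, y + dy
                if 0 <= sx < w and 0 <= sy < h and mask[sy][sx]:
                    out[y][x] = 1
    return out


print(spiral_offsets(4, 1.0, math.pi / 2))
```

With small scale and angle steps the spiral passes close to most pixels inside the radius, which is why the union looks like a dilation. Erosion would be the same loop with the union swapped for an intersection (a pixel stays set only if every sample is set).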

I’m sure there’s lots of cool ways to use dilate and erode for NPR rendering but you can get some pretty neat effects pretty quick.

EDIT!! See below for a far easier (but less optimized?) solution!!

And here’s the link to the blend file. I tried to clean it up as best I could lol.

(using 4.4 alpha build 9051c11b72aa)

For anyone not wanting to download the blend, I’ll supply the nodes.

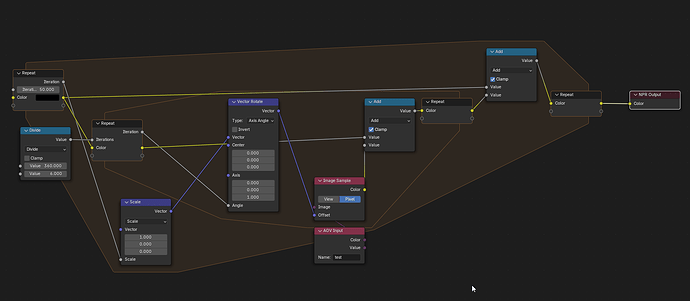

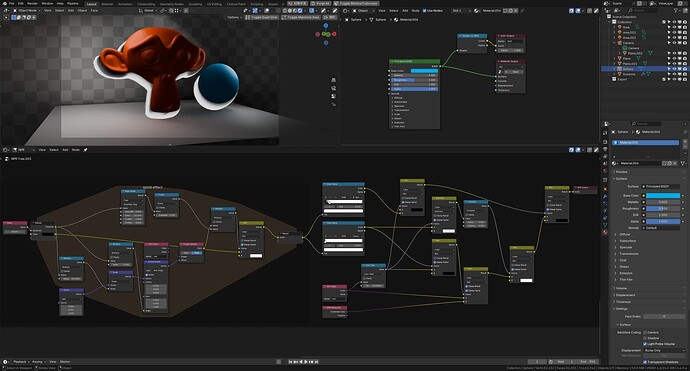

SETUP: I parented a plane to a camera and set up all the NPR stuff there. There’s an AOV on Suzanne and the sphere called “test”, which I am using as the starting mask.

Dilate Nodegroup

Pixel Shifting Nodegroup

If anyone uses this technique I’d love to see what you make with it!!

Happy Blending!

Since I’m having fun, here’s one last video showcasing how the mask is built up over time, for the fellow visual learners out there!!


EDIT

Just realized you could probably get a far cleaner result by using multiple repeat zones: one to scale the vector, and one nested inside it that rotates the vector 360 degrees over multiple increments. That way the quality slider would just be the number of divisions of 360 you use. Oh well!! It was fun to mess with.
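A rough sketch of that nested-loop idea in Python, assuming whole-pixel offsets; `ring_offsets` and its parameters are hypothetical names, with `divisions` playing the role of the quality slider.

```python
import math


def ring_offsets(max_radius, divisions):
    """Offsets on concentric rings: outer loop scales, inner loop rotates."""
    offsets = set()
    for radius in range(1, max_radius + 1):          # outer repeat zone: scale
        for k in range(divisions):                   # inner repeat zone: rotate
            angle = 2.0 * math.pi * k / divisions    # one full turn per ring
            offsets.add((round(radius * math.cos(angle)),
                         round(radius * math.sin(angle))))
    # A set drops duplicates that rounding produces on the small inner rings
    return sorted(offsets)


print(ring_offsets(1, 4))
print(len(ring_offsets(3, 8)))
```

The total sample count is at most `max_radius * divisions`, so cost grows linearly with the radius for a fixed quality setting.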

EDIT EDIT

my god… that’s so much simpler…

After further testing, it seems this method is weirdly less optimized for larger dilation values than the previous one… I don’t know much about image processing or how dilation works, so I’m going to leave it here haha. I recommend using the other method if you need extreme dilation values, and this one if you just need a quick outline lol.

Your explanation is a perfect medium of math vs process. Thanks for taking the time to document, as it will definitely spark experiments.

Thank you! It was a fun experiment, I hope to do more in the future

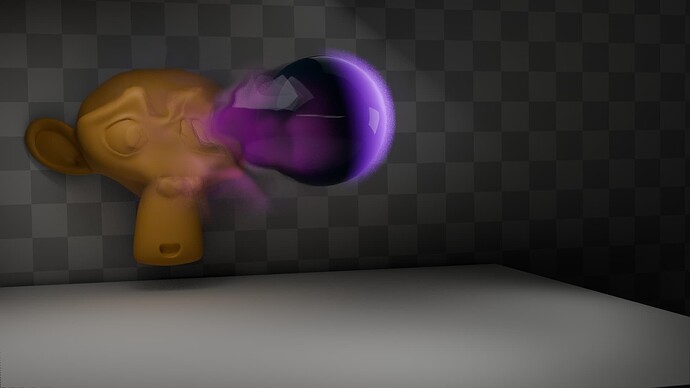

Tonight’s experiments:

Subtracting an object’s motion so it appears almost static. I sorta just eyeballed the values, so it’s not perfect. It was done by storing the object’s position in geo nodes, then transforming that vector to screen space (geo nodes attribute > shader to AOV > NPR).

stylized fire effect, affected by the object’s velocity (calculated in geo nodes then stored in an AOV)

I tried a version with a smoke trail; it’s insanely hacky and I can’t recommend it for any project yet.

I made the smoke trail by first starting with a simple trail effect. I stored an object’s position per frame and then offset based on that position over multiple frames. It’s extremely hacky and disgusting behind the scenes - but in theory it could work with some fine tuning, i’ll see if i can figure something out later.
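To make the idea a bit more concrete, here is a small Python sketch of the bookkeeping involved, assuming 2D screen-space positions and one sample per frame. `PositionHistory` is a made-up helper for illustration, not something that exists in Blender, and it is not the actual node setup.

```python
from collections import deque


class PositionHistory:
    """Keeps the last `length` per-frame positions of an object."""

    def __init__(self, length):
        # Old entries fall off automatically once the deque is full
        self.positions = deque(maxlen=length)

    def push(self, position):
        """Record the object's position for the current frame."""
        self.positions.append(tuple(position))

    def velocity(self):
        """Per-frame velocity: difference between the two most recent positions."""
        if len(self.positions) < 2:
            return (0, 0)
        (x0, y0), (x1, y1) = self.positions[-2], self.positions[-1]
        return (x1 - x0, y1 - y0)

    def trail_offsets(self):
        """Offsets from the current position back to each older stored position."""
        if not self.positions:
            return []
        cx, cy = self.positions[-1]
        return [(x - cx, y - cy) for x, y in list(self.positions)[:-1]]


history = PositionHistory(length=3)
for pos in [(0, 0), (1, 0), (3, 0)]:
    history.push(pos)
print(history.velocity(), history.trail_offsets())
```

Sampling the object’s mask at each trail offset and combining the results gives the trail; the hard part in practice is having somewhere to keep the history between frames.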

My notes for tonight: it would be really helpful for the devs to implement a way to store data between frames somehow, maybe by sourcing data from another part of Blender like geometry nodes. I also think AOVs are a great way of transferring data between other parts of Blender like shaders/geometry nodes, but they can feel clunky in some use cases; it would be really cool to have an easier way to access data (maybe something like the Object Info node that geo nodes has)…

That’s it for tonight!

Not sure where you get that idea, but it’s not supposed to work like that (Malt lines don’t work like that either).

An object can’t draw outside itself, if you want lines to go beyond the object shape you need to do it on a separate object with a refraction material.

@chasemussey Really cool experiments!

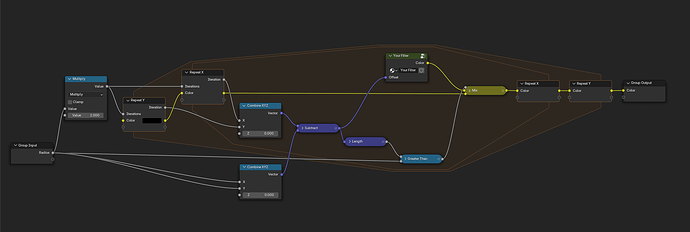

I would try swapping the order of your loops. So instead of Rotation > Scale you do Scale > Rotation.

Even then, you are oversampling the center and (for large radius) undersampling the outer area.

Something like this may work better:

I think it would be nice to have built-in Repeat Zones that set up common filtering kernels for the user, so you just set the radius and get the offset vector (for example).
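As a sketch of what "set the radius and get the offset vectors" could mean, here is one possible kernel in Python: a plain disc that visits every whole-pixel offset inside the radius exactly once, so the center is not oversampled and the outer area is not undersampled. This is just an illustration with a made-up function name, not the node setup from the post and not a planned feature.

```python
def disc_offsets(radius):
    """All integer pixel offsets (dx, dy) with dx^2 + dy^2 <= radius^2."""
    offsets = []
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            if dx * dx + dy * dy <= radius * radius:
                offsets.append((dx, dy))
    return offsets


# Sample count grows with the disc area (roughly pi * r^2)
for r in (1, 2, 4, 8):
    print(r, len(disc_offsets(r)))
```

The trade-off is cost: the number of samples grows with the square of the radius, so for large radii a sparser pattern (or a multi-pass approach) would likely be needed.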

I think this was already mentioned at some point in the thread, but yes, something like an AOV that is not cleared between frames shouldn’t be super hard to add.

The Attribute node should solve this. Although I think it’s broken atm, I have to check it.

BTW, I’ve updated the experimental build.

It doesn’t have any new features, but it’s now up to date with the main branch, so it should be more stable/less buggy in general.

Thanks for sharing the ideas and nodes. Based on the original file, I tried to simulate a flat paper-cutout effect: a slightly larger sheet of solid-color paper is added behind the object, with a shadow between the object and the paper.

During production I ran into problems stacking the different effect layers. If NPR had something like the compositor’s Alpha Over node, it might be possible to use this process as another compositor.

Thanks! It’s been fun messing with the new branch.

makes sense! I’m gonna try this node group out later, thank you

Yeah, this would be super cool! I messed around with a similar effect after seeing your tests, and I see the pain points without some way of layering effects. I would have liked the ball to have a paper outline in front of Suzie without having to do too much manual index assignment… maybe something to experiment with later.

A few bonus experiments from tonight:

I managed to store an object’s position and velocity over time in a much cleaner way this evening, and I could see this method finally being used in a project.

it’s always fun looking beyond the camera view to see the regular render view lol!

I would love for there to be a way to get the color of a pixel at a specific coordinate. This trail effect is really complicated and could be cut down quite a bit by the introduction of a node like this.

and lastly I messed around with a per object pixelized effect

The effect is once again pretty hacky. With my research into the trail effect from above, I think I could get a cleaner result.
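For reference, a common way to pixelize is to snap the sampling coordinate to the center of a grid cell. The Python sketch below shows that general technique, not necessarily how the effect above was built; the `origin` parameter is an assumption for anchoring the grid to an object’s screen-space position so the cells move with it.

```python
import math


def pixelate_coord(uv, cells, origin=(0.0, 0.0)):
    """Snap a 0..1 screen coordinate to the center of its grid cell.

    `cells` is the number of cells across one unit of screen space;
    `origin` shifts the grid (e.g. to follow an object).
    """
    u, v = uv
    ou, ov = origin
    su = (math.floor((u - ou) * cells) + 0.5) / cells + ou
    sv = (math.floor((v - ov) * cells) + 0.5) / cells + ov
    return (su, sv)


print(pixelate_coord((0.3, 0.8), 4))
```

Every pixel inside the same cell then reads the image at the same snapped coordinate, which produces the blocky look. Limiting it to one object would additionally need that object’s mask (for example from an AOV).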

That’s all for tonight folks!

Can you share more of your experiment files? Incredible work here