Hi,

I was wondering if there are any plans to decouple Eevee’s real-time ambient occlusion sampling, and possibly other effects (shadows, anti-aliasing?), from global sampling.

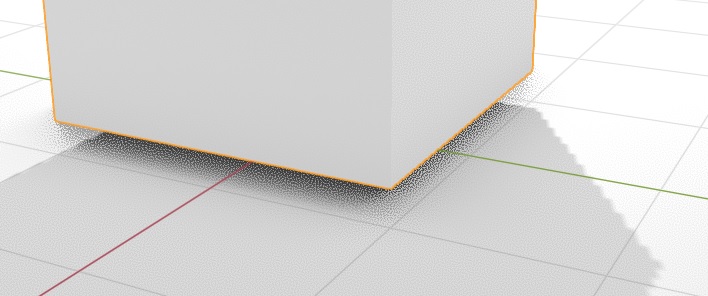

The main issue is that, when playing back animations, Eevee renders only one sample per frame, which leaves the ambient occlusion noisy:

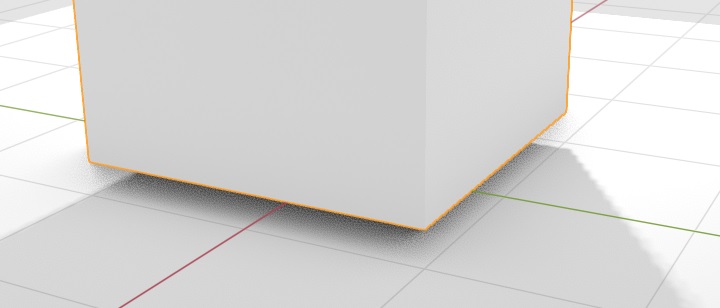

Sub-sampling at 4-6 samples per frame would almost entirely solve it.
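
To illustrate why a handful of sub-samples helps so much, here is a toy Python sketch. It is not Eevee's code: it assumes a single pixel whose ambient occlusion is estimated from random hit/miss tests, and the names `ao_estimate` and `frame_to_frame_noise` are hypothetical. It only measures how much the estimate jitters from frame to frame when each frame averages a given number of samples.

```python
import random
import statistics


def ao_estimate(true_visibility, samples, rng):
    """Average `samples` random hit/miss tests for one pixel (toy model)."""
    hits = sum(1 for _ in range(samples) if rng.random() < true_visibility)
    return hits / samples


def frame_to_frame_noise(true_visibility, samples, frames=2000, seed=0):
    """Standard deviation of the per-frame estimate over many frames."""
    rng = random.Random(seed)
    estimates = [ao_estimate(true_visibility, samples, rng) for _ in range(frames)]
    return statistics.pstdev(estimates)


if __name__ == "__main__":
    # Compare 1 sample per frame against the 4-6 range mentioned above.
    for n in (1, 4, 6):
        print(f"{n} sample(s) per frame: noise = {frame_to_frame_noise(0.7, n):.3f}")
```

In this simplified model the jitter shrinks roughly with the square root of the sample count, so 4 samples per frame should give about half the noise of 1 sample, without relying on data from previous frames.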

Eevee does of course have the temporal denoiser, but its drawback is ghosting.

I’ve dug into the code, but so far no luck… If a developer familiar with this area (@fclem ?) could point me in the right direction, that would be much appreciated. Is this something easily feasible, or does it require a lot of changes to Eevee’s code structure?