Yes, in general a 2990WX is about as fast as a 2080. The thing is that you have to use a small tile size to make sure the slower device does not hold up the whole render. With small tiles it should be faster, and if it’s not, something else may be going on. Even if each Threadripper core is slower, it has many cores and the GPU is just one device, so all the tiles on the Threadripper together should finish faster than a single GPU tile. Hybrid should therefore speed up the render, unless the CPU is super slow, like an old i5 or i3. With a Threadripper it should not be slower.
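To make the tile-size argument concrete, here is a rough toy model, not how Cycles actually schedules tiles. It assumes every free worker simply grabs the next tile, that all tiles cost the same, and that the GPU counts as one worker that is 32 times as fast as each of 32 CPU threads. The function name `render_time` and all the numbers are made up for illustration.

```python
import heapq


def render_time(total_work, n_tiles, worker_speeds):
    """Toy greedy scheduler: whichever worker is free first takes the next tile.

    total_work    -- arbitrary work units for the whole frame (assumption)
    n_tiles       -- number of equally sized tiles the frame is split into
    worker_speeds -- work units per second for each worker (assumption)
    Returns the time at which the last tile finishes.
    """
    tile_work = total_work / n_tiles
    # Heap of (time_when_free, worker_index); everyone is free at t = 0.
    free_at = [(0.0, i) for i in range(len(worker_speeds))]
    heapq.heapify(free_at)
    finish = 0.0
    for _ in range(n_tiles):
        t, i = heapq.heappop(free_at)
        done = t + tile_work / worker_speeds[i]
        finish = max(finish, done)
        heapq.heappush(free_at, (done, i))
    return finish


# Hypothetical speeds: one GPU worth 32 units/s, 32 CPU threads worth 1 unit/s each.
GPU_ONLY = [32.0]
HYBRID = [32.0] + [1.0] * 32
WORK = 1024.0

if __name__ == "__main__":
    print("GPU only,  4 big tiles     :", render_time(WORK, 4, GPU_ONLY))
    print("Hybrid,    4 big tiles     :", render_time(WORK, 4, HYBRID))
    print("Hybrid, 1024 small tiles   :", render_time(WORK, 1024, HYBRID))
```

In this model the GPU alone needs 32 s. Hybrid with 4 big tiles takes 256 s, because three slow CPU threads each get stuck with a quarter of the frame. Hybrid with 1024 small tiles finishes in 16 s. Real renders have uneven tile costs and per-tile overhead, so treat this only as an illustration of why tile size matters for CPU + GPU.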

Yes, on small tiles of course!

I’m not the only one who found that hybrid rendering is too slow. Multiple RTX users on the Blender Artists OptiX thread think so too.

Well, now that @brecht is going to focus on Cycles, I’m sure a lot of things will improve, hopefully on this front too.

I also heard that Mathieu, the creator of E-Cycles, might join the team and officially work on Blender Cycles. (???)

That would be great. He is such a nice and kind guy. Does anyone know if Cycles will get viewport denoising that also works with AMD cards?

I’d find that surprising, but would welcome it. I read a lot about the speed of E-Cycles, but I’ve never bought it, because I don’t want to be dependent on a paid third-party version of Cycles.

A 4x speed boost is quite a serious achievement.

I’d be really happy to hear of his arrival on the team. Unfortunately, I heard that his work was never accepted into the main build, for reasons I don’t understand yet. It’s quite sad; we could have a native engine as fast as Octane.

This is not the case.

This is also not true. Mathieu promised to contribute the E-Cycles improvements after a year, but that year has not passed yet. When that happens we will happily accept such speedups. In the past some contributions from him have been accepted and some rejected, but nothing related to speedups for NVIDIA cards.

Will it be possible to implement OptiX for those who have old GTX cards (GTX 9xx for example)?

And if this is possible, will we get a good render time improvement compared to current CUDA?

How would you be dependent? Unlike Adobe and co, even if you buy the monthly option, the program works forever. You can also buy the course to do the updates yourself, so you are 100% independent and can even put the speedups on top of the branch you like (sculpt, mantaflow, you name it).

I offered to work for the foundation 2 years ago, after I made the AO simplify patch, and this offer was refused back then. So at that time I could indeed have worked for the BF. Ton wanted Cycles development to be paid for by external studios and sent me to Insydium… They never paid me, so I had to live on social help.

I asked a BF developer if he knew someone who could be interested and could pay for the work that was done. At this point again, the BF dev could just have discussed it with me so that it went into main Blender, but I was pointed to third parties. I asked the companies I was pointed to and they all said no, because they were selling render time and cutting render time would mostly just mean losses for them.

So, seeing I was the only one believing in my project, I started E-Cycles. But of course, when selling a product as a still very unknown independent dev, it was hard to say “hey, please buy 1 year of support, but maybe in 2 weeks it will be free in Blender”. So I committed to only making the patches public a year after their respective release. Until now Brecht and the BF have respected that, which I find good.

I discussed this in private with Nathan, who is now in charge of coordination and onboarding. That’s all. I’ll try to make the best of whatever comes next, open or closed source. The only thing I can state is that I can’t live on social help and use that money to push Blender forever. So indeed, after the patches become public, if the income gets too low, I’ll have to go develop E-Cycles for other platforms or do something completely different.

Now that everyone has seen what you can do with Cycles, and money is finally flowing into the dev fund, it may change everything?

Keep up the good work, you have all our encouragement.

Many of us hope that Mathieu and his great work will be funded by the BF as has happened with Pablo Dobarro through the dev fund.

What I meant is being dependent on an external developer who can decide to or be forced to quit development. This is less of a problem with minor add-ons, but Cycles is an essential part of Blender, and until now I prefer to keep using the official version.

This is purely a personal consideration. It doesn’t mean I think E-Cycles is wrong or not useful, not at all. The speed gain seems impressive. It’s just that I’m careful in deciding which third-party add-ons I come to depend on.

I have to recommend the course from Mathieu.

@MetinSeven if you take the course and make your own build, you will end up with nearly the same thing as E-Cycles (and I can assure you that you will get awesome speed improvements), but it will depend on you. You will learn how to deal with patches and other things, even minor changes to the actual Blender code to fix a problem you may have, and this way you won’t depend on Mathieu but on yourself.

And files saved with E-Cycles can be opened in Blender and rendered with vanilla Cycles.

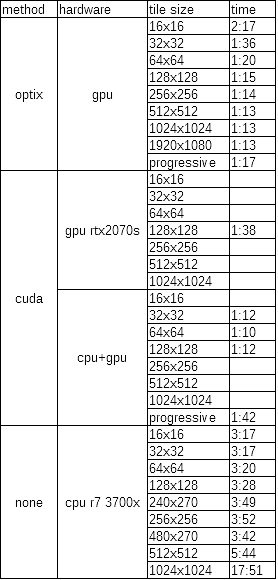

I tested OptiX vs CUDA vs CPU.

So far OptiX is a bit faster than CUDA; I guess that will improve over time.

This is not a 100% valid benchmark, as I used my own scene for the tests when I should have used the ones from the Blender site, but it gives you some idea.

These scores don’t look normal at all.

What do you mean, weird numbers? To me they are not that different from this article (CUDA vs OptiX ratio), and the 2070s is known for anomalous performance in Blender.

I’ve noticed that OptiX makes the GPU run out of VRAM relatively fast. I tried rendering a scene with some high-res PBR textures and micro-displacement, and got an out-of-memory error on my high-end NVIDIA GeForce.

A CPU + GPU option that divides the memory resources between CPU RAM and GPU RAM (Octane-style) would be very welcome.